In graph theory, the shortest path problem is the problem of finding a path between two vertices in a graph such that the sum of the weights of its constituent edges is minimized.

Round-robin (RR) is one of the algorithms employed by process and network schedulers in computing. As the term is generally used, time slices are assigned to each process in equal portions and in circular order, handling all processes without priority. Round-robin scheduling is simple, easy to implement, and starvation-free. Round-robin scheduling can be applied to other scheduling problems, such as data packet scheduling in computer networks. It is an operating system concept.

Network congestion in data networking and queueing theory is the reduced quality of service that occurs when a network node or link is carrying more data than it can handle. Typical effects include queueing delay, packet loss or the blocking of new connections. A consequence of congestion is that an incremental increase in offered load leads either only to a small increase or even a decrease in network throughput.

Weighted round robin (WRR) is a network scheduler for data flows, but also used to schedule processes.

Deficit Round Robin (DRR), also Deficit Weighted Round Robin (DWRR), is a scheduling algorithm for the network scheduler. DRR is, like weighted fair queuing (WFQ), a packet-based implementation of the ideal Generalized Processor Sharing (GPS) policy. It was proposed by M. Shreedhar and G. Varghese in 1995 as an efficient and fair algorithm.

FAST TCP is a TCP congestion avoidance algorithm especially targeted at long-distance, high latency links, developed at the Netlab, California Institute of Technology and now being commercialized by FastSoft. FastSoft was acquired by Akamai Technologies in 2012.

Random early detection (RED), also known as random early discard or random early drop, is a queuing discipline for a network scheduler suited for congestion avoidance.

Generalized processor sharing (GPS) is an ideal scheduling algorithm for process schedulers and network schedulers. It is related to the fair-queuing principle which groups packets into classes and shares the service capacity between them. GPS shares this capacity according to some fixed weights.

Proportional-fair scheduling is a compromise-based scheduling algorithm. It is based upon maintaining a balance between two competing interests: Trying to maximize the total throughput of the network while at the same time allowing all users at least a minimal level of service. This is done by assigning each data flow a data rate or a scheduling priority that is inversely proportional to its anticipated resource consumption.

Network calculus is "a set of mathematical results which give insights into man-made systems such as concurrent programs, digital circuits and communication networks." Network calculus gives a theoretical framework for analysing performance guarantees in computer networks. As traffic flows through a network it is subject to constraints imposed by the system components, for example:

Fair queuing is a family of scheduling algorithms used in some process and network schedulers. The algorithm is designed to achieve fairness when a limited resource is shared, for example to prevent flows with large packets or processes that generate small jobs from consuming more throughput or CPU time than other flows or processes.

Bandwidth management is the process of measuring and controlling the communications on a network link, to avoid filling the link to capacity or overfilling the link, which would result in network congestion and poor performance of the network. Bandwidth is described by bit rate and measured in units of bits per second (bit/s) or bytes per second (B/s).

In routers and switches, active queue management (AQM) is the policy of dropping packets inside a buffer associated with a network interface controller (NIC) before that buffer becomes full, often with the goal of reducing network congestion or improving end-to-end latency. This task is performed by the network scheduler, which for this purpose uses various algorithms such as random early detection (RED), Explicit Congestion Notification (ECN), or controlled delay (CoDel). RFC 7567 recommends active queue management as a best practice.

Virtual output queueing (VOQ) is a technique used in certain network switch architectures where, rather than keeping all traffic in a single queue, separate queues are maintained for each possible output location. It addresses a common problem known as head-of-line blocking.

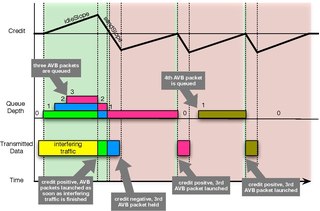

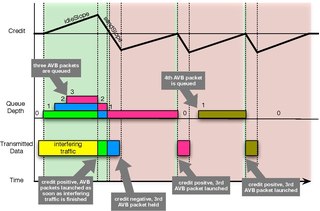

Credit-based fair queuing is a computationally efficient alternative to fair queueing. Credit is accumulated to queues as they wait for service. Credit is spent by queues while they are being serviced. Queues with positive credit are eligible for service. The rate of credit accumulation and release can be adjusted on a queue-by-queue basis to produce a weighted queuing behavior.

Bufferbloat is a cause of high latency and jitter in packet-switched networks caused by excess buffering of packets. Bufferbloat can also cause packet delay variation, as well as reduce the overall network throughput. When a router or switch is configured to use excessively large buffers, even very high-speed networks can become practically unusable for many interactive applications like voice over IP (VoIP), audio streaming, online gaming, and even ordinary web browsing.

In queueing theory, a discipline within the mathematical theory of probability, the backpressure routing algorithm is a method for directing traffic around a queueing network that achieves maximum network throughput, which is established using concepts of Lyapunov drift. Backpressure routing considers the situation where each job can visit multiple service nodes in the network. It is an extension of max-weight scheduling where each job visits only a single service node.

CoDel is an active queue management (AQM) algorithm in network routing, developed by Van Jacobson and Kathleen Nichols and published as RFC8289. It is designed to overcome bufferbloat in networking hardware, such as routers, by setting limits on the delay network packets experience as they pass through buffers in this equipment. CoDel aims to improve on the overall performance of the random early detection (RED) algorithm by addressing some of its fundamental misconceptions, as perceived by Jacobson, and by being easier to manage.

Processor sharing or egalitarian processor sharing is a service policy where the customers, clients or jobs are all served simultaneously, each receiving an equal fraction of the service capacity available. In such a system all jobs start service immediately.

Enhanced Transmission Selection (ETS) is a network scheduler scheduling algorithm that has been defined by the Data Center Bridging Task Group of the IEEE 802.1 Working Group. It is a hierarchical scheduler that combines static priority scheduling and a bandwidth sharing algorithms.