Video is an electronic medium for the recording, copying, playback, broadcasting, and display of moving visual media. Video was first developed for mechanical television systems, which were quickly replaced by cathode-ray tube (CRT) systems, which, in turn, were replaced by flat-panel displays of several types.

The JPEG File Interchange Format (JFIF) is an image file format standard published as ITU-T Recommendation T.871 and ISO/IEC 10918-5. It defines supplementary specifications for the container format that holds image data encoded with the JPEG algorithm. The base specifications for a JPEG container format are defined in Annex B of the JPEG standard, known as JPEG Interchange Format (JIF). JFIF builds on JIF to address some of JIF's limitations, including unnecessary complexity and the lack of definitions for component sample registration, resolution, aspect ratio, and color space. Because JFIF is not the original JPEG standard, one might expect it to have its own MIME type. However, it is still registered as "image/jpeg".
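As an illustration of the resolution and aspect-ratio information that JFIF adds, the following minimal sketch reads the JFIF APP0 header that follows the start-of-image marker. The function name `parse_jfif_app0` and the returned dictionary keys are hypothetical, chosen for this example; the byte layout (marker `FF E0`, the identifier `JFIF\0`, version, density units, and X/Y density) follows the JFIF header structure. The sample bytes are synthetic, not taken from a real file.

```python
import struct

def parse_jfif_app0(data: bytes) -> dict:
    """Parse the JFIF APP0 header at the start of a JPEG byte stream."""
    if len(data) < 20:
        raise ValueError("too short to contain a JFIF header")
    if data[:2] != b"\xff\xd8":
        raise ValueError("missing SOI marker")
    if data[2:4] != b"\xff\xe0":
        raise ValueError("first segment is not APP0")
    (length,) = struct.unpack(">H", data[4:6])  # segment length, big-endian
    if data[6:11] != b"JFIF\x00":
        raise ValueError("APP0 segment is not a JFIF header")
    major, minor, units, x_density, y_density, x_thumb, y_thumb = struct.unpack(
        ">BBBHHBB", data[11:20]
    )
    return {
        "segment_length": length,
        "version": (major, minor),
        # 0 = no units (densities give only the pixel aspect ratio),
        # 1 = dots per inch, 2 = dots per centimetre
        "units": units,
        "density": (x_density, y_density),
        "thumbnail_size": (x_thumb, y_thumb),
    }

# Synthetic header: JFIF 1.02, 72 x 72 dots per inch, no thumbnail.
sample = (
    b"\xff\xd8\xff\xe0"
    + struct.pack(">H", 16)
    + b"JFIF\x00"
    + struct.pack(">BBBHHBB", 1, 2, 1, 72, 72, 0, 0)
)
info = parse_jfif_app0(sample)
```

When `units` is 0, the two density values carry only the pixel aspect ratio, which is how a JFIF file can describe non-square pixels without stating a physical resolution.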

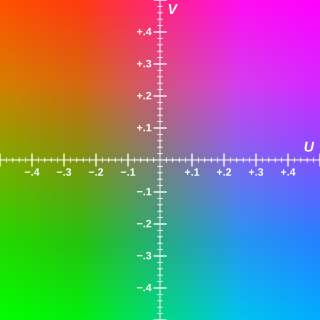

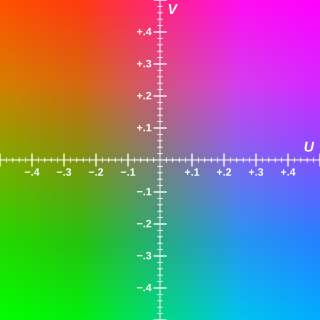

Y′UV, also written YUV, is the color model found in the PAL analogue color TV standard. A color is described as a Y′ component (luma) and two chroma components U and V. The prime symbol (′) denotes that the luma is calculated from gamma-corrected RGB input and that it is different from true luminance. Today, the term YUV is commonly used in the computer industry to describe color spaces that are encoded using YCbCr.

Chroma subsampling is the practice of encoding images with lower resolution for chroma information than for luma information, taking advantage of the human visual system's lower acuity for color differences than for luminance.
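A minimal sketch of the idea, assuming a chroma plane stored as a list of rows and a simple box filter (plain averaging). The helper name `subsample_chroma` and the sample values are hypothetical; real encoders use a variety of filters and chroma sample positions. A horizontal factor of 2 corresponds to the 4:2:2 pattern, and a factor of 2 in both directions to 4:2:0, while the luma plane is left at full resolution.

```python
def subsample_chroma(plane, fx=2, fy=2):
    """Average each fx-by-fy block of a chroma plane (list of equal-length rows)."""
    height, width = len(plane), len(plane[0])
    if height % fy or width % fx:
        raise ValueError("this sketch assumes dimensions divisible by the factors")
    out = []
    for y in range(0, height, fy):
        row = []
        for x in range(0, width, fx):
            block = [plane[y + dy][x + dx] for dy in range(fy) for dx in range(fx)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out

# A 4x4 chroma plane (for example Cb) with made-up sample values.
cb = [
    [100, 102, 50, 54],
    [98, 100, 52, 52],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]
cb_422 = subsample_chroma(cb, fx=2, fy=1)  # half horizontal resolution
cb_420 = subsample_chroma(cb, fx=2, fy=2)  # half resolution in both directions
```

Here `cb_420` holds 4 samples instead of 16, so the two chroma planes together cost half as much as the luma plane.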

ITU-R Recommendation BT.601, more commonly known by the abbreviations Rec. 601 or BT.601, is a standard originally issued in 1982 by the CCIR for encoding interlaced analog video signals in digital video form. It includes methods of encoding 525-line 60 Hz and 625-line 50 Hz signals, both with an active region covering 720 luminance samples and 360 chrominance samples per line. The color encoding system is known as YCbCr 4:2:2.

Serial digital interface (SDI) is a family of digital video interfaces first standardized by SMPTE in 1989. For example, ITU-R BT.656 and SMPTE 259M define digital video interfaces used for broadcast-grade video. A related standard, known as high-definition serial digital interface (HD-SDI), is standardized in SMPTE 292M; this provides a nominal data rate of 1.485 Gbit/s.
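The nominal 1.485 Gbit/s figure can be reproduced with a short calculation. This sketch assumes raster totals that are not stated above: 2200 total samples per line and 1125 total lines at 30 frames per second for a 1080-line HD raster, and the 625-line and 525-line totals commonly associated with BT.601 sampling. It also assumes 10-bit words and 4:2:2 sampling, so that each luma sample period carries one luma word and one chroma word. The function name `serial_bit_rate` is hypothetical.

```python
from fractions import Fraction

def serial_bit_rate(samples_per_line, lines_per_frame, frame_rate, bits_per_word=10):
    """Bit rate of a 4:2:2 serial stream, counting blanking as well as active video."""
    luma_rate = samples_per_line * lines_per_frame * Fraction(frame_rate)
    # 4:2:2: one luma word plus one alternating Cb/Cr word per luma sample period.
    words_per_second = luma_rate * 2
    return words_per_second * bits_per_word

hd_rate = serial_bit_rate(2200, 1125, 30)                       # HD raster
sd_625_rate = serial_bit_rate(864, 625, 25)                     # 625-line, 50 Hz
sd_525_rate = serial_bit_rate(858, 525, Fraction(30000, 1001))  # 525-line, 59.94 Hz
```

Under these assumptions `hd_rate` comes to 1,485,000,000 bit/s, and both standard-definition rasters come to 270,000,000 bit/s, the rate usually quoted for component SD-SDI.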

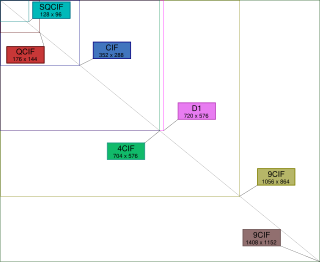

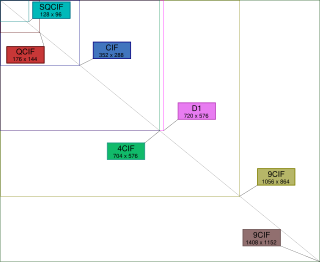

CIF (Common Intermediate Format), also known as FCIF (Full Common Intermediate Format), is a standardized format for the picture resolution, frame rate, color space, and color subsampling of digital video sequences used in video teleconferencing systems. It was first defined in the H.261 standard in 1988.

YCbCr, Y′CbCr, or Y Pb/Cb Pr/Cr, also written as YCBCR or Y′CBCR, is a family of color spaces used as a part of the color image pipeline in video and digital photography systems. Y′ is the luma component, and CB and CR are the blue-difference and red-difference chroma components. Y′ (with prime) is distinguished from Y, which is luminance; the prime indicates that light intensity is nonlinearly encoded based on gamma-corrected RGB primaries.
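The relationship between gamma-corrected R′G′B′, luma, and the two colour-difference components can be sketched as follows. This is a minimal example assuming the BT.601 luma coefficients (0.299 and 0.114 for red and blue) and the usual 8-bit limited-range quantization (luma 16 to 235, chroma centred on 128); other members of the family use different coefficients. The function names are hypothetical.

```python
def rgb_to_ypbpr(r, g, b, kr=0.299, kb=0.114):
    """Convert gamma-corrected R'G'B' in [0, 1] to Y' in [0, 1] and Pb, Pr in [-0.5, 0.5]."""
    kg = 1 - kr - kb
    y = kr * r + kg * g + kb * b      # luma: weighted sum of R'G'B'
    pb = 0.5 * (b - y) / (1 - kb)     # scaled blue-difference
    pr = 0.5 * (r - y) / (1 - kr)     # scaled red-difference
    return y, pb, pr

def quantize_8bit(y, pb, pr):
    """Map Y'PbPr to 8-bit limited-range Y'CbCr code values."""
    return round(16 + 219 * y), round(128 + 224 * pb), round(128 + 224 * pr)

white = quantize_8bit(*rgb_to_ypbpr(1.0, 1.0, 1.0))
black = quantize_8bit(*rgb_to_ypbpr(0.0, 0.0, 0.0))
red = quantize_8bit(*rgb_to_ypbpr(1.0, 0.0, 0.0))
blue = quantize_8bit(*rgb_to_ypbpr(0.0, 0.0, 1.0))
```

Passing `kr=0.2126, kb=0.0722` instead gives the BT.709 variant; the structure of the calculation is unchanged.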

In television technology, Wide Screen Signaling (WSS) is digital metadata embedded in an invisible part of the analog TV signal that describes qualities of the broadcast, in particular the intended aspect ratio of the image. This allows television broadcasters to enable both 4:3 and 16:9 television sets to optimally present pictures transmitted in either format, by displaying them in full screen, letterbox, widescreen, pillar-box, zoomed letterbox, etc.

YPbPr or Y′PbPr, also written as YPBPR, is a color space used in video electronics, in particular in reference to component video cables. Like YCbCr, it is based on gamma-corrected RGB primaries; the two are numerically equivalent, but YPbPr is designed for use in analog systems while YCbCr is intended for digital video. The EOTF may be different from the common sRGB EOTF and BT.1886 EOTF. Sync is carried on the Y channel as a bi-level sync signal; in HD formats, a tri-level sync is used and is typically carried on all channels.

480i is the video mode used for standard-definition digital video in the Caribbean, Japan, South Korea, Taiwan, Philippines, Myanmar, Western Sahara, and most of the Americas. The other common standard-definition digital video mode, used in the rest of the world, is 576i.

576i is a standard-definition digital video mode, originally used for digitizing analogue television in most countries of the world where the utility frequency for electric power distribution is 50 Hz. Because of its close association with the legacy colour encoding systems, it is often referred to as PAL, PAL/SECAM or SECAM when compared to its 60 Hz NTSC-colour-encoded counterpart, 480i.

576p is the shorthand name for a video display resolution. The p stands for progressive scan, i.e. non-interlaced, the 576 for a vertical resolution of 576 pixels. Usually it corresponds to a digital video mode with a 4:3 anamorphic resolution of 720x576 and a frame rate of 25 frames per second (576p25), and thus using the same bandwidth and carrying the same amount of pixel data as 576i, but other resolutions and frame rates are possible.
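The claim that 576p25 carries the same amount of pixel data as 576i can be checked with simple arithmetic. This sketch assumes that 576i delivers 50 fields per second of 288 lines each, which matches its 50 Hz origin; the function name is hypothetical.

```python
def pixels_per_second(width, lines_per_picture, pictures_per_second):
    """Raw pixel throughput of the active picture area."""
    return width * lines_per_picture * pictures_per_second

progressive_576p25 = pixels_per_second(720, 576, 25)  # 25 full frames per second
interlaced_576i = pixels_per_second(720, 288, 50)     # 50 half-height fields per second
```

Both expressions evaluate to 10,368,000 pixels per second, so the two modes differ in how the lines are distributed over time rather than in the amount of data.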

H.262 or MPEG-2 Part 2 is a video coding format standardised and jointly maintained by ITU-T Study Group 16 Video Coding Experts Group (VCEG) and ISO/IEC Moving Picture Experts Group (MPEG), and developed with the involvement of many companies. It is the second part of the ISO/IEC MPEG-2 standard. The ITU-T Recommendation H.262 and ISO/IEC 13818-2 documents are identical.

SMPTE 292 is a digital video transmission line standard published by the Society of Motion Picture and Television Engineers (SMPTE). This technical standard is usually referred to as HD-SDI; it is part of a family of standards that define a Serial Digital Interface based on a coaxial cable, intended to be used for transport of uncompressed digital video and audio in a television studio environment.

A pixel aspect ratio is a mathematical ratio that describes how the width of a pixel in a digital image compares to the height of that pixel.
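As a worked example, the pixel aspect ratio can be derived by dividing the intended display aspect ratio by the ratio of the stored pixel dimensions. The sketch below assumes that the full stored width maps onto the full display width; broadcast practice often uses slightly different values because not all stored samples belong to the active picture. The function name is hypothetical.

```python
from fractions import Fraction

def pixel_aspect_ratio(width, height, display_aspect):
    """Pixel aspect ratio = display aspect ratio / storage aspect ratio."""
    storage_aspect = Fraction(width, height)
    return Fraction(display_aspect) / storage_aspect

par_4_3 = pixel_aspect_ratio(720, 576, Fraction(4, 3))    # 720x576 shown at 4:3
par_16_9 = pixel_aspect_ratio(720, 576, Fraction(16, 9))  # 720x576 shown at 16:9
par_square = pixel_aspect_ratio(1920, 1080, Fraction(16, 9))
```

Under this simplification, a 720x576 image shown at 4:3 has pixels 16/15 as wide as they are tall, the same image shown at 16:9 has 64/45 pixels, and a 1920x1080 image at 16:9 has square pixels.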

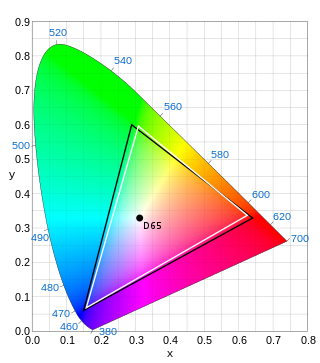

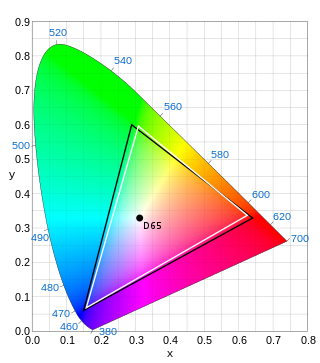

xvYCC or extended-gamut YCbCr is a color space that can be used in the video electronics of television sets to support a gamut 1.8 times as large as that of the sRGB color space. xvYCC was proposed by Sony, specified by the IEC in October 2005 and published in January 2006 as IEC 61966-2-4. xvYCC extends the ITU-R BT.709 tone curve by defining over-ranged values. xvYCC-encoded video retains the same color primaries and white point as BT.709, and uses either a BT.601 or BT.709 RGB-to-YCC conversion matrix and encoding. This allows it to travel through existing digital limited range YCC data paths, and any colors within the normal gamut will be compatible. It works by allowing negative RGB inputs and expanding the output chroma. These are used to encode more saturated colors by using a greater part of the RGB values that can be encoded in the YCbCr signal compared with those used in Broadcast Safe Level. The extra-gamut colors can then be displayed by a device whose underlying technology is not limited by the standard primaries.

Rec. 709, also known as Rec.709, BT.709, and ITU 709, is a standard developed by ITU-R for image encoding and signal characteristics of high-definition television.

ITU-R Recommendation BT.2020, more commonly known by the abbreviations Rec. 2020 or BT.2020, defines various aspects of ultra-high-definition television (UHDTV) with standard dynamic range (SDR) and wide color gamut (WCG), including picture resolutions, frame rates with progressive scan, bit depths, color primaries, RGB and luma-chroma color representations, chroma subsamplings, and an opto-electronic transfer function. The first version of Rec. 2020 was posted on the International Telecommunication Union (ITU) website on August 23, 2012, and two further editions have been published since then.

ITU-R Recommendation BT.2100, more commonly known by the abbreviations Rec. 2100 or BT.2100, introduced high-dynamic-range television (HDR-TV) by recommending the use of the perceptual quantizer or hybrid log–gamma (HLG) transfer functions instead of the traditional "gamma" previously used for SDR-TV.
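The two transfer functions named above can be sketched in a few lines. This is a minimal illustration, assuming the commonly published constants for the perceptual quantizer (PQ), which maps absolute luminance up to 10,000 cd/m² onto a 0 to 1 signal, and for the HLG OETF, which maps normalized scene light onto a 0 to 1 signal. The function names are hypothetical, and the sketch omits details such as quantization to integer code values and the HLG system gamma.

```python
import math

# PQ constants (exact rational values).
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PQ_PEAK = 10000.0  # cd/m^2 represented by a signal value of 1.0

def pq_inverse_eotf(luminance):
    """Display luminance in cd/m^2 -> nonlinear PQ signal in [0, 1]."""
    y_m1 = (max(luminance, 0.0) / PQ_PEAK) ** M1
    return ((C1 + C2 * y_m1) / (1 + C3 * y_m1)) ** M2

def pq_eotf(signal):
    """Nonlinear PQ signal in [0, 1] -> display luminance in cd/m^2."""
    e = signal ** (1 / M2)
    return PQ_PEAK * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)

# HLG constants; B and C are derived from A so the two segments join smoothly.
HLG_A = 0.17883277
HLG_B = 1 - 4 * HLG_A
HLG_C = 0.5 - HLG_A * math.log(4 * HLG_A)

def hlg_oetf(scene_light):
    """Normalized scene light in [0, 1] -> nonlinear HLG signal in [0, 1]."""
    if scene_light <= 1 / 12:
        return math.sqrt(3 * scene_light)  # square-root (gamma-like) segment
    return HLG_A * math.log(12 * scene_light - HLG_B) + HLG_C  # logarithmic segment

pq_100_nits = pq_inverse_eotf(100.0)
roundtrip = pq_eotf(pq_100_nits)
```

With these constants, a luminance of 100 cd/m² lands slightly above the midpoint of the PQ signal range, which leaves roughly half of the code values for highlights above typical SDR levels.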