Related Research Articles

In computing, a data warehouse (DW), also known as an enterprise data warehouse (EDW), is a system used for reporting and data analysis and is considered a core component of business intelligence. Data warehouses are central repositories of integrated data from one or more disparate sources. They store current and historical data in a single place, where it is used to create analytical reports for workers throughout the enterprise.

In computing, extract, transform, load (ETL) is a three-phase process in which data is extracted, transformed, and loaded into an output data container. The data can be collated from one or more sources and can be output to one or more destinations. ETL processing is typically executed using software applications, but it can also be done manually by system operators. ETL software typically automates the entire process and can be run manually or on recurring schedules, either as single jobs or aggregated into a batch of jobs.
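The three phases can be illustrated with a minimal sketch. The CSV content, the `orders` table, and the cleaning rules below are all hypothetical, chosen only to make each phase visible; a real ETL job would read from and write to actual systems.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real job would read files, APIs, or databases.
SOURCE_CSV = """order_id,amount,currency
1,10.50,usd
2,,usd
3,7.25,eur
"""

def extract(text):
    """Extract: read raw rows from the source as dictionaries of strings."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop rows with no amount, convert types, upper-case currency codes."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # assumed rule: rows without an amount are rejected
        cleaned.append((int(row["order_id"]), float(row["amount"]), row["currency"].upper()))
    return cleaned

def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(SOURCE_CSV)), conn)
loaded = conn.execute(
    "SELECT order_id, amount, currency FROM orders ORDER BY order_id"
).fetchall()
print(loaded)
```

Keeping the phases as separate functions makes it possible to schedule or test each one independently, which is roughly what ETL tools do at larger scale.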

Data profiling is the process of examining the data available from an existing information source and collecting statistics or informative summaries about that data. The purpose of these statistics may be to:

- Find out whether existing data can be easily used for other purposes

- Improve the ability to search data by tagging it with keywords or descriptions, or by assigning it to a category

- Assess data quality, including whether the data conforms to particular standards or patterns

- Assess the risk involved in integrating data in new applications, including the challenges of joins

- Discover metadata of the source database, including value patterns and distributions, key candidates, foreign-key candidates, and functional dependencies

- Assess whether known metadata accurately describes the actual values in the source database

- Understand data challenges early in any data-intensive project, so that late project surprises are avoided. Finding data problems late in the project can lead to delays and cost overruns.

- Have an enterprise view of all data, for uses such as master data management, where key data is needed, or data governance for improving data quality.
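Several of the statistics listed above can be collected with very little code. The following is a minimal sketch over a hypothetical in-memory table; the column names, the sample rows, and the `profile` helper are illustrative assumptions, not part of any particular profiling tool.

```python
from collections import Counter

# Hypothetical sample rows standing in for a source table.
rows = [
    {"customer_id": 1, "country": "DE", "email": "a@example.com"},
    {"customer_id": 2, "country": "DE", "email": None},
    {"customer_id": 3, "country": "FR", "email": "c@example.com"},
    {"customer_id": 4, "country": "de", "email": "c@example.com"},
]

def profile(rows):
    """Return per-column null count, distinct count, top value, and key candidacy."""
    report = {}
    for column in rows[0]:
        values = [row[column] for row in rows]
        non_null = [v for v in values if v is not None]
        counts = Counter(non_null)
        report[column] = {
            "nulls": len(values) - len(non_null),
            "distinct": len(counts),
            "most_common": counts.most_common(1)[0] if counts else None,
            # A column is treated as a key candidate if it has no nulls and no duplicates.
            "key_candidate": len(non_null) == len(values) and len(counts) == len(values),
        }
    return report

report = profile(rows)
for column, stats in report.items():
    print(column, stats)
```

In this sample, the profile flags `customer_id` as a key candidate, shows a missing `email`, and reveals three distinct `country` values where two were probably intended (`DE` and `de`), which is the kind of quality problem profiling is meant to surface early.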

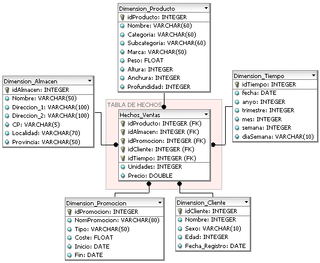

In computing, the star schema is the simplest style of data mart schema and is the approach most widely used to develop data warehouses and dimensional data marts. The star schema consists of one or more fact tables referencing any number of dimension tables. The star schema is an important special case of the snowflake schema, and is more effective for handling simpler queries.
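As an illustration, a small star schema can be sketched with Python's built-in `sqlite3` module. The table names (`fact_sales`, `dim_date`, `dim_product`) and the sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables hold descriptive attributes.
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);

-- The fact table references each dimension and stores the measures.
CREATE TABLE fact_sales (
    date_key INTEGER REFERENCES dim_date(date_key),
    product_key INTEGER REFERENCES dim_product(product_key),
    quantity INTEGER,
    revenue REAL
);

INSERT INTO dim_date VALUES (20240105, 2024, 1), (20240210, 2024, 2);
INSERT INTO dim_product VALUES (1, 'Widget', 'Hardware'), (2, 'Manual', 'Books');
INSERT INTO fact_sales VALUES
    (20240105, 1, 3, 30.0),
    (20240105, 2, 1, 12.0),
    (20240210, 1, 2, 20.0);
""")

# A typical star-schema query: one join per dimension, then aggregate the facts.
revenue_by_category = conn.execute("""
    SELECT p.category, SUM(f.revenue)
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_key = f.product_key
    GROUP BY p.category
    ORDER BY p.category
""").fetchall()
print(revenue_by_category)
```

Each dimension is reachable from the fact table in a single join, which is what keeps simple queries simple in this layout.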

William H. Inmon is an American computer scientist, recognized by many as the father of the data warehouse. Inmon wrote the first book, held the first conference, wrote the first column in a magazine, and was the first to offer classes in data warehousing. Inmon created the accepted definition of a data warehouse: a subject-oriented, nonvolatile, integrated, time-variant collection of data in support of management's decisions. Compared with the approach of the other pioneering architect of data warehousing, Ralph Kimball, Inmon's approach is often characterized as top-down.

In data warehousing, a fact table consists of the measurements, metrics, or facts of a business process. It is located at the center of a star schema or a snowflake schema, surrounded by dimension tables. Where multiple fact tables are used, they are arranged as a fact constellation schema. A fact table typically has two types of columns: those that contain facts and those that are foreign keys to dimension tables. The primary key of a fact table is usually a composite key made up of all of its foreign keys. Fact tables contain the content of the data warehouse and store different types of measures: additive, non-additive, and semi-additive.

In computing, a snowflake schema is a logical arrangement of tables in a multidimensional database such that the entity relationship diagram resembles a snowflake shape. The snowflake schema is represented by centralized fact tables which are connected to multiple dimensions. "Snowflaking" is a method of normalizing the dimension tables in a star schema. When it is completely normalized along all the dimension tables, the resultant structure resembles a snowflake with the fact table in the middle. The principle behind snowflaking is normalization of the dimension tables by removing low cardinality attributes and forming separate tables.
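The normalization step can be sketched in plain Python. The `dim_product` rows and the `snowflake` helper below are hypothetical; the helper moves one repeated, low-cardinality attribute into its own table and leaves a foreign key behind.

```python
# Hypothetical denormalized product dimension, as it might appear in a star schema.
dim_product = [
    {"product_key": 1, "name": "Widget", "category": "Hardware"},
    {"product_key": 2, "name": "Gadget", "category": "Hardware"},
    {"product_key": 3, "name": "Manual", "category": "Books"},
]

def snowflake(dimension, attribute):
    """Move a repeated attribute into its own table and replace it with a foreign key."""
    lookup = {}  # attribute value -> surrogate key, assigned in order of first appearance
    normalized = []
    for row in dimension:
        key = lookup.setdefault(row[attribute], len(lookup) + 1)
        slim = {k: v for k, v in row.items() if k != attribute}
        slim[attribute + "_key"] = key
        normalized.append(slim)
    sub_dimension = [{attribute + "_key": k, attribute: v} for v, k in lookup.items()]
    return normalized, sub_dimension

dim_product_sf, dim_category = snowflake(dim_product, "category")
print(dim_product_sf)
print(dim_category)
```

After the split, each category name is stored once in `dim_category`, at the cost of an extra join whenever a query needs the category.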

A dimension is a structure that categorizes facts and measures in order to enable users to answer business questions. Commonly used dimensions are people, products, place and time.

Ralph Kimball is an author on the subject of data warehousing and business intelligence. He is one of the original architects of data warehousing and is known for his long-held conviction that data warehouses must be designed to be understandable and fast. His bottom-up methodology, also known as dimensional modeling or the Kimball methodology, is one of the two main data warehousing methodologies, alongside that of Bill Inmon.

In accounting, inventory turnover is a measure of the number of times inventory is sold or used in a time period such as a year. It is calculated to see whether a business holds excessive inventory in comparison to its sales level. Inventory turnover equals the cost of goods sold divided by the average inventory. Inventory turnover is also known as inventory turns, merchandise turnover, stockturn, stock turns, turns, and stock turnover.
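The calculation can be written as a short function. This sketch assumes that average inventory is taken as the mean of the beginning and ending inventory for the period, which is one common convention; the figures are invented for illustration.

```python
def inventory_turnover(cost_of_goods_sold, beginning_inventory, ending_inventory):
    """Cost of goods sold divided by average inventory for the period."""
    # Assumption: average inventory is the mean of the opening and closing balances.
    average_inventory = (beginning_inventory + ending_inventory) / 2
    if average_inventory == 0:
        raise ValueError("average inventory must be non-zero")
    return cost_of_goods_sold / average_inventory

# Hypothetical figures: COGS of 600,000 against an average inventory of 100,000.
turns = inventory_turnover(
    cost_of_goods_sold=600_000,
    beginning_inventory=90_000,
    ending_inventory=110_000,
)
print(turns)  # 6.0
```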

According to Ralph Kimball, who coined the term, a degenerate dimension in a data warehouse is a dimension key in the fact table that does not have its own dimension table, because all the interesting attributes have been placed in analytic dimensions.
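A typical illustration is an order number stored directly on an order-line fact table. The sketch below uses hypothetical table and column names: `order_number` has no matching dimension table, yet it can still be used to group the fact rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT);

CREATE TABLE fact_order_line (
    order_number TEXT,  -- degenerate dimension: there is no dim_order table
    product_key INTEGER REFERENCES dim_product(product_key),
    quantity INTEGER
);

INSERT INTO dim_product VALUES (1, 'Widget'), (2, 'Manual');
INSERT INTO fact_order_line VALUES
    ('SO-1001', 1, 2),
    ('SO-1001', 2, 1),
    ('SO-1002', 1, 5);
""")

# The degenerate dimension groups line items that belong to the same order.
lines_per_order = conn.execute("""
    SELECT order_number, COUNT(*), SUM(quantity)
    FROM fact_order_line
    GROUP BY order_number
    ORDER BY order_number
""").fetchall()
print(lines_per_order)
```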

A slowly changing dimension (SCD) in data management and data warehousing is a dimension that contains relatively static data which can change slowly but unpredictably, rather than according to a regular schedule. Typical examples of slowly changing dimensions are entities such as the names of geographical locations, customers, or products.
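One widely used way to handle such changes, often called a Type 2 slowly changing dimension, keeps history by closing the current row and appending a new version. The sketch below assumes that approach; the `apply_scd2` helper and the column names (`customer_id`, `valid_from`, `valid_to`) are hypothetical.

```python
from datetime import date

def apply_scd2(dimension, customer_id, new_attrs, effective):
    """Expire the current row if its attributes changed, then append a new version."""
    current = next(
        (r for r in dimension if r["customer_id"] == customer_id and r["valid_to"] is None),
        None,
    )
    if current is not None:
        if all(current[k] == v for k, v in new_attrs.items()):
            return dimension  # nothing changed, so history stays as it is
        current["valid_to"] = effective  # close the old version
    dimension.append({
        "surrogate_key": len(dimension) + 1,
        "customer_id": customer_id,
        **new_attrs,
        "valid_from": effective,
        "valid_to": None,  # None marks the current version
    })
    return dimension

dim_customer = []
apply_scd2(dim_customer, 42, {"city": "Lyon"}, date(2023, 1, 1))
apply_scd2(dim_customer, 42, {"city": "Lyon"}, date(2023, 6, 1))    # no change, no new row
apply_scd2(dim_customer, 42, {"city": "Paris"}, date(2024, 3, 15))  # customer moved
for row in dim_customer:
    print(row)
```

Facts recorded before the move keep pointing at the first surrogate key, so historical reports still show the customer in Lyon.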

Dimensional modeling (DM) is part of the Business Dimensional Lifecycle methodology developed by Ralph Kimball, which includes a set of methods, techniques, and concepts for use in data warehouse design. As a bottom-up approach, it focuses on identifying the key business processes within a business and modeling and implementing these first, before adding further business processes. An alternative approach from Inmon advocates a top-down design of the model of all the enterprise data, using tools such as entity-relationship (ER) modeling.

In business intelligence, data classification has close ties to data clustering, but where data clustering is descriptive, data classification is predictive. In essence, data classification consists of using variables with known values to predict the unknown or future values of other variables. It can be used, for example, in direct marketing, insurance fraud detection, or medical diagnosis.

The dimensional fact model (DFM) is an ad hoc graphical formalism specifically devised to support the conceptual modeling phase of a data warehouse project. DFM is designed to be intuitive and can be used by analysts and non-technical users alike. A short period of work is sufficient to produce a clear and exhaustive representation of multidimensional concepts. It can be used from the initial steps of the data warehouse life cycle to rapidly devise a conceptual model to share with customers.

The Kimball Lifecycle is a methodology for developing data warehouses, developed by Ralph Kimball and a variety of colleagues.

Aggregates are used in dimensional models of the data warehouse to reduce the time it takes to query large sets of data. In its simplest form, an aggregate is a summary table that can be derived by running a SQL GROUP BY query. A more common use of aggregates is to take a dimension and change its granularity. When the granularity of a dimension changes, the fact table has to be partially summarized to fit the new grain, which creates new dimension and fact tables at that level of grain. Aggregates are sometimes referred to as pre-calculated summary data, since aggregations are usually precomputed, partially summarized data stored in new aggregated tables. Facts are aggregated either by eliminating dimensionality or by associating the facts with a rolled-up dimension. Rolled-up dimensions should be shrunken versions of the dimensions associated with the granular base facts, so that the aggregated dimension tables conform to the base dimension tables. Aggregates can improve data warehouse performance dramatically because they reduce the number of rows that must be accessed to answer a query.
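The simplest case, a summary table built with GROUP BY, can be sketched as follows. The daily fact table, the monthly aggregate table, and their column names are hypothetical; the date dimension is rolled up from day grain to month grain.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Base fact table at day grain.
CREATE TABLE fact_sales_daily (date_key TEXT, product_key INTEGER, revenue REAL);
INSERT INTO fact_sales_daily VALUES
    ('2024-01-05', 1, 30.0),
    ('2024-01-20', 1, 20.0),
    ('2024-01-20', 2, 12.0),
    ('2024-02-02', 1, 8.0);

-- Precomputed aggregate at month grain: the date dimension is rolled up.
CREATE TABLE agg_sales_monthly AS
SELECT substr(date_key, 1, 7) AS month_key, product_key, SUM(revenue) AS revenue
FROM fact_sales_daily
GROUP BY substr(date_key, 1, 7), product_key;
""")

monthly = conn.execute(
    "SELECT month_key, product_key, revenue FROM agg_sales_monthly "
    "ORDER BY month_key, product_key"
).fetchall()
base_rows = conn.execute("SELECT COUNT(*) FROM fact_sales_daily").fetchone()[0]
agg_rows = conn.execute("SELECT COUNT(*) FROM agg_sales_monthly").fetchone()[0]
print(monthly)
print(base_rows, agg_rows)
```

A monthly report can now read the smaller aggregate table instead of scanning every daily row; on realistic data volumes the reduction in rows is far larger than in this toy example.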

The Enterprise Bus Matrix is a data warehouse planning tool and model created by Ralph Kimball, and is part of the Data Warehouse Bus Architecture. The matrix is the logical definition of one of the core concepts of Kimball's approach to dimensional modeling: conformed dimensions.

A financial ratio or accounting ratio is a relative magnitude of two selected numerical values taken from an enterprise's financial statements. Many standard ratios are used in accounting to evaluate the overall financial condition of a corporation or other organization. Financial ratios may be used by managers within a firm, by current and potential shareholders (owners) of a firm, and by a firm's creditors. Financial analysts use financial ratios to compare the strengths and weaknesses of various companies. If shares in a company are traded in a financial market, the market price of the shares is used in certain financial ratios.

Joe Caserta is an American information specialist and author. He is best known as the founder and president of Caserta, a data and analytics consulting, architecture, and implementation firm founded in 2001. Management consulting firm McKinsey & Company acquired Caserta on June 1, 2022.
