In probability theory and statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is The parameter is the mean or expectation of the distribution, while the parameter is the variance. The standard deviation of the distribution is (sigma). A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate.

In probability theory and statistics, the exponential distribution or negative exponential distribution is the probability distribution of the distance between events in a Poisson point process, i.e., a process in which events occur continuously and independently at a constant average rate; the distance parameter could be any meaningful mono-dimensional measure of the process, such as time between production errors, or length along a roll of fabric in the weaving manufacturing process. It is a particular case of the gamma distribution. It is the continuous analogue of the geometric distribution, and it has the key property of being memoryless. In addition to being used for the analysis of Poisson point processes it is found in various other contexts.

In special relativity, a four-vector is an object with four components, which transform in a specific way under Lorentz transformations. Specifically, a four-vector is an element of a four-dimensional vector space considered as a representation space of the standard representation of the Lorentz group, the representation. It differs from a Euclidean vector in how its magnitude is determined. The transformations that preserve this magnitude are the Lorentz transformations, which include spatial rotations and boosts.

In the special theory of relativity, four-force is a four-vector that replaces the classical force.

Variational Bayesian methods are a family of techniques for approximating intractable integrals arising in Bayesian inference and machine learning. They are typically used in complex statistical models consisting of observed variables as well as unknown parameters and latent variables, with various sorts of relationships among the three types of random variables, as might be described by a graphical model. As typical in Bayesian inference, the parameters and latent variables are grouped together as "unobserved variables". Variational Bayesian methods are primarily used for two purposes:

- To provide an analytical approximation to the posterior probability of the unobserved variables, in order to do statistical inference over these variables.

- To derive a lower bound for the marginal likelihood of the observed data. This is typically used for performing model selection, the general idea being that a higher marginal likelihood for a given model indicates a better fit of the data by that model and hence a greater probability that the model in question was the one that generated the data.

In differential geometry, the four-gradient is the four-vector analogue of the gradient from vector calculus.

In differential geometry, a tensor density or relative tensor is a generalization of the tensor field concept. A tensor density transforms as a tensor field when passing from one coordinate system to another, except that it is additionally multiplied or weighted by a power W of the Jacobian determinant of the coordinate transition function or its absolute value. A tensor density with a single index is called a vector density. A distinction is made among (authentic) tensor densities, pseudotensor densities, even tensor densities and odd tensor densities. Sometimes tensor densities with a negative weight W are called tensor capacity. A tensor density can also be regarded as a section of the tensor product of a tensor bundle with a density bundle.

The Pearson distribution is a family of continuous probability distributions. It was first published by Karl Pearson in 1895 and subsequently extended by him in 1901 and 1916 in a series of articles on biostatistics.

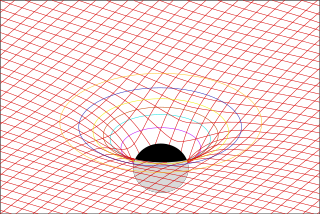

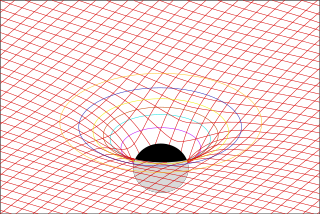

In general relativity, a geodesic generalizes the notion of a "straight line" to curved spacetime. Importantly, the world line of a particle free from all external, non-gravitational forces is a particular type of geodesic. In other words, a freely moving or falling particle always moves along a geodesic.

In probability theory and statistics, the generalized inverse Gaussian distribution (GIG) is a three-parameter family of continuous probability distributions with probability density function

In physics, Maxwell's equations in curved spacetime govern the dynamics of the electromagnetic field in curved spacetime or where one uses an arbitrary coordinate system. These equations can be viewed as a generalization of the vacuum Maxwell's equations which are normally formulated in the local coordinates of flat spacetime. But because general relativity dictates that the presence of electromagnetic fields induce curvature in spacetime, Maxwell's equations in flat spacetime should be viewed as a convenient approximation.

The Newman–Penrose (NP) formalism is a set of notation developed by Ezra T. Newman and Roger Penrose for general relativity (GR). Their notation is an effort to treat general relativity in terms of spinor notation, which introduces complex forms of the usual variables used in GR. The NP formalism is itself a special case of the tetrad formalism, where the tensors of the theory are projected onto a complete vector basis at each point in spacetime. Usually this vector basis is chosen to reflect some symmetry of the spacetime, leading to simplified expressions for physical observables. In the case of the NP formalism, the vector basis chosen is a null tetrad: a set of four null vectors—two real, and a complex-conjugate pair. The two real members often asymptotically point radially inward and radially outward, and the formalism is well adapted to treatment of the propagation of radiation in curved spacetime. The Weyl scalars, derived from the Weyl tensor, are often used. In particular, it can be shown that one of these scalars— in the appropriate frame—encodes the outgoing gravitational radiation of an asymptotically flat system.

Bayesian linear regression is a type of conditional modeling in which the mean of one variable is described by a linear combination of other variables, with the goal of obtaining the posterior probability of the regression coefficients and ultimately allowing the out-of-sample prediction of the regressandconditional on observed values of the regressors. The simplest and most widely used version of this model is the normal linear model, in which given is distributed Gaussian. In this model, and under a particular choice of prior probabilities for the parameters—so-called conjugate priors—the posterior can be found analytically. With more arbitrarily chosen priors, the posteriors generally have to be approximated.

Expected shortfall (ES) is a risk measure—a concept used in the field of financial risk measurement to evaluate the market risk or credit risk of a portfolio. The "expected shortfall at q% level" is the expected return on the portfolio in the worst of cases. ES is an alternative to value at risk that is more sensitive to the shape of the tail of the loss distribution.

A ratio distribution is a probability distribution constructed as the distribution of the ratio of random variables having two other known distributions. Given two random variables X and Y, the distribution of the random variable Z that is formed as the ratio Z = X/Y is a ratio distribution.

In financial mathematics, tail value at risk (TVaR), also known as tail conditional expectation (TCE) or conditional tail expectation (CTE), is a risk measure associated with the more general value at risk. It quantifies the expected value of the loss given that an event outside a given probability level has occurred.

In probability theory and statistics, the normal-inverse-gamma distribution is a four-parameter family of multivariate continuous probability distributions. It is the conjugate prior of a normal distribution with unknown mean and variance.

In mathematics, the Fox–Wright function (also known as Fox–Wright Psi function, not to be confused with Wright Omega function) is a generalisation of the generalised hypergeometric function pFq(z) based on ideas of Charles Fox (1928) and E. Maitland Wright (1935):

In the Newman–Penrose (NP) formalism of general relativity, independent components of the Ricci tensors of a four-dimensional spacetime are encoded into seven Ricci scalars which consist of three real scalars , three complex scalars and the NP curvature scalar . Physically, Ricci-NP scalars are related with the energy–momentum distribution of the spacetime due to Einstein's field equation.

In theoretical physics, relativistic Lagrangian mechanics is Lagrangian mechanics applied in the context of special relativity and general relativity.