Related Research Articles

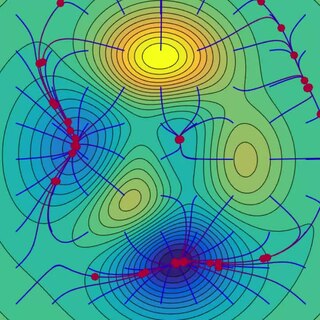

Numerical analysis is the study of algorithms that use numerical approximation for the problems of mathematical analysis. It is the study of numerical methods that attempt to find approximate solutions of problems rather than exact ones. Numerical analysis finds application in all fields of engineering and the physical sciences, and in the 21st century also the life and social sciences, medicine, business and even the arts. Current growth in computing power has enabled the use of more complex numerical analysis, providing detailed and realistic mathematical models in science and engineering. Examples of numerical analysis include: ordinary differential equations as found in celestial mechanics, numerical linear algebra in data analysis, and stochastic differential equations and Markov chains for simulating living cells in medicine and biology.

Quadratic programming (QP) is the process of solving certain mathematical optimization problems involving quadratic functions. Specifically, one seeks to optimize a multivariate quadratic function subject to linear constraints on the variables. Quadratic programming is a type of nonlinear programming.
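As a sketch of the problem class described above, one common way to write a quadratic program is shown below. The symbols $Q$, $c$, $A$ and $b$ are notation assumed here for illustration; other texts add equality constraints or use different sign conventions.

$$
\min_{x \in \mathbb{R}^n} \; \tfrac{1}{2}\, x^{\mathsf T} Q x + c^{\mathsf T} x
\quad \text{subject to} \quad A x \le b
$$

Here $Q$ is a symmetric $n \times n$ matrix, $c$ is a vector of length $n$, and $A x \le b$ collects the linear constraints. When $Q$ is positive semidefinite the problem is convex.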

Mathematical optimization or mathematical programming is the selection of a best element, with regard to some criterion, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries.

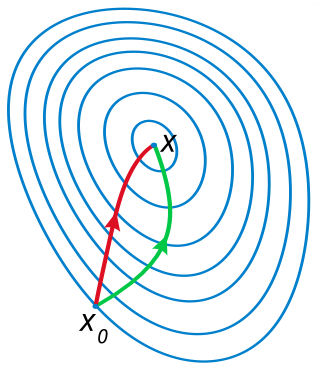

In mathematics, gradient descent is a first-order iterative optimization algorithm for finding a local minimum of a differentiable function. The idea is to take repeated steps in the opposite direction of the gradient of the function at the current point, because this is the direction of steepest descent. Conversely, stepping in the direction of the gradient will lead to a local maximum of that function; the procedure is then known as gradient ascent. It is particularly useful in machine learning for minimizing the cost or loss function. Gradient descent should not be confused with local search algorithms, although both are iterative methods for optimization.
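The repeated step against the gradient can be sketched in a few lines of Python. This is a minimal illustration, not a production routine: the function name `gradient_descent`, the fixed step size of 0.1, the stopping tolerance, and the example objective are all assumptions chosen for the example.

```python
def gradient_descent(grad, x0, step=0.1, tol=1e-8, max_iter=10_000):
    """Minimize a differentiable function given a callable for its gradient.

    A fixed step size is assumed here; practical codes usually use a line search.
    """
    x = list(x0)
    for _ in range(max_iter):
        g = grad(x)
        # Stop once the gradient norm is numerically zero.
        if sum(gi * gi for gi in g) ** 0.5 < tol:
            break
        # Step against the gradient, the direction of steepest descent.
        x = [xi - step * gi for xi, gi in zip(x, g)]
    return x


# Illustrative objective f(x, y) = (x - 1)^2 + 2 * (y + 3)^2, minimized at (1, -3).
def grad_f(p):
    x, y = p
    return [2.0 * (x - 1.0), 4.0 * (y + 3.0)]


minimum = gradient_descent(grad_f, [0.0, 0.0])
print(minimum)  # close to [1.0, -3.0]
```

Flipping the sign of the update (adding `step * gi` instead of subtracting it) would give gradient ascent.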

In calculus, Newton's method (also called Newton–Raphson) is an iterative method for finding the roots of a differentiable function F, which are solutions to the equation F(x) = 0. As such, Newton's method can be applied to the derivative f′ of a twice-differentiable function f to find the roots of the derivative (solutions to f′(x) = 0), also known as the critical points of f. These solutions may be minima, maxima, or saddle points; see section "Several variables" in Critical point (mathematics) and also section "Geometric interpretation" in this article. This is relevant in optimization, which aims to find (global) minima of the function f.
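A one-dimensional sketch of Newton's method applied to the derivative is given below. The function name `newton_critical_point`, the tolerance, and the example polynomial are assumptions made for illustration. The example shows that the iteration finds a critical point, which may be a minimum or a maximum depending on the starting point.

```python
def newton_critical_point(df, d2f, x0, tol=1e-12, max_iter=50):
    """Find a root of the first derivative `df` using Newton's iteration.

    `d2f` is the second derivative of the original function.
    """
    x = x0
    for _ in range(max_iter):
        curvature = d2f(x)
        if curvature == 0.0:
            raise ZeroDivisionError("second derivative vanished; Newton step undefined")
        step = df(x) / curvature
        x -= step
        if abs(step) < tol:
            break
    return x


# Illustrative function f(x) = x^4 - 3x^2 + 2.
# Its critical points are x = 0 (a local maximum) and x = +/- sqrt(1.5) (minima).
df = lambda x: 4.0 * x**3 - 6.0 * x
d2f = lambda x: 12.0 * x**2 - 6.0

near_minimum = newton_critical_point(df, d2f, 2.0)   # converges to sqrt(1.5)
near_maximum = newton_critical_point(df, d2f, 0.1)   # converges to 0, a local maximum
```

Checking the sign of `d2f` at the returned point distinguishes the two cases: positive at the minimum, negative at the maximum.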

Interior-point methods are a certain class of algorithms that solve linear and nonlinear convex optimization problems.

In numerical optimization, the Broyden–Fletcher–Goldfarb–Shanno (BFGS) algorithm is an iterative method for solving unconstrained nonlinear optimization problems. Like the related Davidon–Fletcher–Powell method, BFGS determines the descent direction by preconditioning the gradient with curvature information. It does so by gradually improving an approximation to the Hessian matrix of the loss function, obtained only from gradient evaluations via a generalized secant method.
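For reference, the standard BFGS update of the Hessian approximation can be written as follows. The notation is assumed here: $B_k$ is the current approximation, $s_k = x_{k+1} - x_k$ is the step, and $y_k = \nabla f(x_{k+1}) - \nabla f(x_k)$ is the change in gradient.

$$
B_{k+1} = B_k + \frac{y_k y_k^{\mathsf T}}{y_k^{\mathsf T} s_k}
- \frac{B_k s_k s_k^{\mathsf T} B_k}{s_k^{\mathsf T} B_k s_k}
$$

Multiplying both sides by $s_k$ gives $B_{k+1} s_k = y_k$, the secant condition that ties the approximation to gradient evaluations only.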

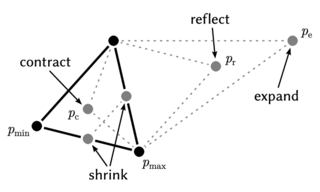

The Nelder–Mead method is a numerical method used to find the minimum or maximum of an objective function in a multidimensional space. It is a direct search method and is often applied to nonlinear optimization problems for which derivatives may not be known. However, the Nelder–Mead technique is a heuristic search method that can converge to non-stationary points on problems that can be solved by alternative methods.

In mathematical optimization, a trust region is the subset of the region of the objective function that is approximated using a model function. If an adequate model of the objective function is found within the trust region, then the region is expanded; conversely, if the approximation is poor, then the region is contracted.
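The expand-or-contract rule can be sketched as a small helper that compares the reduction the model predicted with the reduction actually achieved. The function name `update_trust_radius`, the thresholds 0.25 and 0.75, the scaling factors, and the `max_radius` cap are conventional textbook choices assumed here, not values taken from the text above.

```python
def update_trust_radius(actual_reduction, predicted_reduction, radius,
                        step_on_boundary, max_radius=10.0):
    """Return (new_radius, accept_step) from the model's agreement ratio.

    `predicted_reduction` is assumed to be positive, as it is when the step
    minimizes the model within the region.
    """
    rho = actual_reduction / predicted_reduction
    if rho < 0.25:
        # The model approximated the objective poorly: contract the region.
        new_radius = 0.25 * radius
    elif rho > 0.75 and step_on_boundary:
        # The model was accurate and the step was limited by the region: expand.
        new_radius = min(2.0 * radius, max_radius)
    else:
        new_radius = radius
    # Accept the step only if the objective actually decreased.
    return new_radius, rho > 0.0


print(update_trust_radius(0.9, 1.0, 1.0, True))   # good model: radius grows
print(update_trust_radius(0.1, 1.0, 1.0, True))   # poor model: radius shrinks
```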

Limited-memory BFGS (L-BFGS) is an optimization algorithm in the family of quasi-Newton methods that approximates the Broyden–Fletcher–Goldfarb–Shanno algorithm (BFGS) using a limited amount of computer memory. It is a popular algorithm for parameter estimation in machine learning. The algorithm's target problem is to minimize $f(\mathbf{x})$ over unconstrained values of the real vector $\mathbf{x}$, where $f$ is a differentiable scalar function.

Sequential quadratic programming (SQP) is an iterative method for constrained nonlinear optimization which may be considered a quasi-Newton method. SQP methods are used on mathematical problems for which the objective function and the constraints are twice continuously differentiable.

Quasi-Newton methods are methods used to find either zeros or local maxima and minima of functions, as an alternative to Newton's method. They can be used if the Jacobian or Hessian is unavailable or is too expensive to compute at every iteration. The "full" Newton's method requires the Jacobian in order to search for zeros, or the Hessian for finding extrema. Some iterative methods that reduce to Newton's method, such as SLSQP, may also be considered quasi-Newton methods.

In numerical analysis, Broyden's method is a quasi-Newton method for finding roots in k variables. It was originally described by C. G. Broyden in 1965.

The Symmetric Rank 1 (SR1) method is a quasi-Newton method to update the second derivative (Hessian) based on the derivatives (gradients) calculated at two points. It is a generalization of the secant method to a multidimensional problem. This update maintains the symmetry of the matrix but does not guarantee that the updated matrix is positive definite.
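The SR1 update is usually written as below, with notation assumed here: $B_k$ is the current Hessian approximation, $s_k = x_{k+1} - x_k$ is the difference between the two points, and $y_k = \nabla f(x_{k+1}) - \nabla f(x_k)$ is the difference between their gradients.

$$
B_{k+1} = B_k + \frac{(y_k - B_k s_k)(y_k - B_k s_k)^{\mathsf T}}{(y_k - B_k s_k)^{\mathsf T} s_k}
$$

The correction is a symmetric rank-one matrix, so symmetry is preserved, and $B_{k+1} s_k = y_k$ holds by construction. Because the denominator can be negative, positive definiteness is not guaranteed; it can also be close to zero, in which case implementations commonly skip the update.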

Hendrik "Henk" Albertus van der Vorst is a Dutch mathematician and Emeritus Professor of Numerical Analysis at Utrecht University. According to the Institute for Scientific Information (ISI), his paper on the BiCGSTAB method was the most cited paper in the field of mathematics in the 1990s. He has been a member of the Royal Netherlands Academy of Arts and Sciences (KNAW) since 2002 and is also a member of the Netherlands Academy of Technology and Innovation. In 2006 he was awarded a knighthood of the Order of the Netherlands Lion. Henk van der Vorst is a Fellow of the Society for Industrial and Applied Mathematics (SIAM).

Pattern search is a family of numerical optimization methods that does not require a gradient. As a result, it can be used on functions that are not continuous or differentiable. One such pattern search method is "convergence", which is based on the theory of positive bases. Optimization attempts to find the best match in a multidimensional analysis space of possibilities.

Augmented Lagrangian methods are a certain class of algorithms for solving constrained optimization problems. They have similarities to penalty methods in that they replace a constrained optimization problem by a series of unconstrained problems and add a penalty term to the objective; the difference is that the augmented Lagrangian method adds yet another term designed to mimic a Lagrange multiplier. The augmented Lagrangian is related to, but not identical to, the method of Lagrange multipliers.
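The series of unconstrained problems can be sketched for a single equality constraint c(x) = 0. Each outer iteration below minimizes f(x) + λ·c(x) + (ρ/2)·c(x)², where the quadratic term is the penalty and the λ term mimics the Lagrange multiplier, then updates λ. The function name `augmented_lagrangian`, the fixed penalty weight ρ = 10, the inner gradient-descent solver with its step size, and the test problem are all assumptions made for this illustration.

```python
def augmented_lagrangian(grad_f, c, grad_c, x0, rho=10.0, outer_iters=10,
                         inner_iters=500, step=0.04):
    """Minimize f subject to c(x) = 0 for one scalar equality constraint."""
    x = list(x0)
    lam = 0.0  # multiplier estimate
    for _ in range(outer_iters):
        # Inner loop: plain gradient descent on the unconstrained subproblem
        # f(x) + lam * c(x) + (rho / 2) * c(x)^2.
        for _ in range(inner_iters):
            weight = lam + rho * c(x)
            x = [xi - step * (gfi + weight * gci)
                 for xi, gfi, gci in zip(x, grad_f(x), grad_c(x))]
        # Multiplier update: shift lam by the scaled constraint violation.
        lam += rho * c(x)
    return x, lam


# Illustrative problem: minimize x^2 + y^2 subject to x + y - 1 = 0.
# The solution is (0.5, 0.5) with multiplier -1 under this sign convention.
solution, multiplier = augmented_lagrangian(
    grad_f=lambda p: [2.0 * p[0], 2.0 * p[1]],
    c=lambda p: p[0] + p[1] - 1.0,
    grad_c=lambda p: [1.0, 1.0],
    x0=[0.0, 0.0],
)
```

Unlike a pure penalty method, the penalty weight stays fixed in this sketch; the multiplier update is what drives the constraint violation toward zero.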

Yurii Nesterov is a Russian mathematician, an internationally recognized expert in convex optimization, especially in the development of efficient algorithms and numerical optimization analysis. He is currently a professor at the University of Louvain (UCLouvain).

MINOS is a Fortran software package for solving linear and nonlinear mathematical optimization problems. MINOS may be used for linear programming, quadratic programming, and more general objective functions and constraints, and for finding a feasible point for a set of linear or nonlinear equalities and inequalities.

References

- Dembo, Ron S.; Steihaug, Trond (1983). "Truncated-Newton algorithms for large-scale unconstrained optimization". Mathematical Programming. Springer. 26 (2): 190–212. doi:10.1007/BF02592055. S2CID 40537623. Convergence results for this algorithm can be found in Dembo, Ron S.; Eisenstat, Stanley C.; Steihaug, Trond (1982). "Inexact Newton methods". SIAM Journal on Numerical Analysis. 19 (2): 400–408. Bibcode:1982SJNA...19..400D. doi:10.1137/0719025. JSTOR 2156954.

- Martens, James (2010). Deep learning via Hessian-free optimization (PDF). Proc. International Conference on Machine Learning.

- Nash, Stephen G. (2000). "A survey of truncated-Newton methods". Journal of Computational and Applied Mathematics. 124 (1–2): 45–59. Bibcode:2000JCoAM.124...45N. doi:10.1016/S0377-0427(00)00426-X.

- Nash, Stephen G. (1985). "Preconditioning of truncated-Newton methods" (PDF). SIAM J. Sci. Stat. Comput. 6 (3): 599–616. doi:10.1137/0906042.