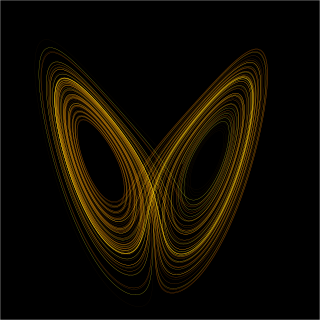

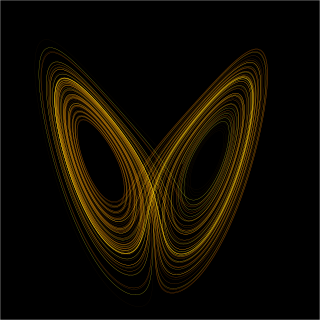

In mathematics, a dynamical system is a system in which a function describes the time dependence of a point in an ambient space, such as in a parametric curve. Examples include the mathematical models that describe the swinging of a clock pendulum, the flow of water in a pipe, the random motion of particles in the air, and the number of fish each springtime in a lake. The most general definition unifies several concepts in mathematics such as ordinary differential equations and ergodic theory by allowing different choices of the space and how time is measured. Time can be measured by integers, by real or complex numbers or can be a more general algebraic object, losing the memory of its physical origin, and the space may be a manifold or simply a set, without the need of a smooth space-time structure defined on it.
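As a minimal sketch of the discrete-time case, the following iterates a map on a state space and records the resulting orbit. The names `orbit` and `fish_model`, and the choice of a logistic growth rule for the fish population, are illustrative assumptions and not part of the definition above.

```python
from typing import Callable, List


def orbit(step: Callable[[float], float], x0: float, n: int) -> List[float]:
    """Return the first n+1 points of the orbit of x0 under the map `step`."""
    xs = [x0]
    for _ in range(n):
        xs.append(step(xs[-1]))
    return xs


def fish_model(x: float, r: float = 2.0) -> float:
    """Hypothetical yearly update: x is the population as a fraction of capacity."""
    return r * x * (1.0 - x)


if __name__ == "__main__":
    # Time is measured by integers (years); the state space is the interval [0, 1].
    print(orbit(fish_model, 0.1, 10))
```

Here time is the set of non-negative integers and the space is an interval; other choices of time and space give other kinds of dynamical systems.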

In probability and statistics, a Bernoulli process is a finite or infinite sequence of binary random variables, so it is a discrete-time stochastic process that takes only two values, canonically 0 and 1. The component Bernoulli variables Xi are identically distributed and independent. Prosaically, a Bernoulli process is repeated coin flipping, possibly with an unfair coin. Every variable Xi in the sequence is associated with a Bernoulli trial or experiment. They all have the same Bernoulli distribution. Much of what can be said about the Bernoulli process can also be generalized to more than two outcomes; this generalization is known as the Bernoulli scheme.
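A finite Bernoulli process can be simulated in a few lines. This is only a sketch: the function name `bernoulli_process`, the success probability `p`, and the fixed `seed` are assumptions made for illustration.

```python
import random
from typing import List


def bernoulli_process(p: float, n: int, seed: int = 0) -> List[int]:
    """Draw n independent, identically distributed Bernoulli(p) values (0 or 1)."""
    rng = random.Random(seed)
    # Each trial is an independent coin flip that lands 1 with probability p.
    return [1 if rng.random() < p else 0 for _ in range(n)]


if __name__ == "__main__":
    flips = bernoulli_process(p=0.3, n=20)
    print(flips, sum(flips) / len(flips))
```

With a large number of trials the sample mean should approach `p`, which is one way to sanity-check the simulation.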

In mathematics, a function from a set X to a set Y assigns to each element of X exactly one element of Y. The set X is called the domain of the function and the set Y is called the codomain of the function.

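In mathematics, Hall's marriage theorem, proved by Philip Hall (1935), is a theorem with two equivalent formulations. The combinatorial formulation gives a necessary and sufficient condition for a finite family of sets to have a transversal, that is, a choice of one element from each set with no element chosen twice. The graph-theoretic formulation gives a necessary and sufficient condition for a finite bipartite graph to have a matching that covers every vertex on one side.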
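In the combinatorial formulation, the condition in question (often called the marriage condition) is that every subfamily of k sets has a union containing at least k elements. A brute-force check of that condition might look as follows; the function name is hypothetical, and the exponential enumeration is meant only for small examples.

```python
from itertools import combinations
from typing import List, Set


def satisfies_hall_condition(family: List[Set[int]]) -> bool:
    """Check that every subfamily of k sets has a union of size at least k."""
    for k in range(1, len(family) + 1):
        for subfamily in combinations(family, k):
            if len(set().union(*subfamily)) < k:
                return False
    return True


if __name__ == "__main__":
    print(satisfies_hall_condition([{1, 2}, {2, 3}, {1, 3}]))
    print(satisfies_hall_condition([{1}, {1, 2}, {2}]))
```

By the theorem, the first family admits a transversal and the second does not, since its three sets contain only two distinct elements in total.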

In graph theory, a quiver is a directed graph where loops and multiple arrows between two vertices are allowed, i.e. a multidigraph. They are commonly used in representation theory: a representation V of a quiver assigns a vector space V(x) to each vertex x of the quiver and a linear map V(a) to each arrow a.

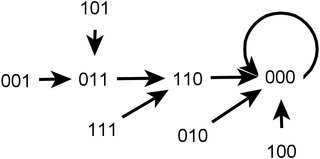

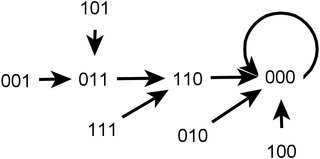

In the theory of computation, a branch of theoretical computer science, a deterministic finite automaton (DFA)—also known as deterministic finite acceptor (DFA), deterministic finite-state machine (DFSM), or deterministic finite-state automaton (DFSA)—is a finite-state machine that accepts or rejects a given string of symbols, by running through a state sequence uniquely determined by the string. Deterministic refers to the uniqueness of the computation run. In search of the simplest models to capture finite-state machines, Warren McCulloch and Walter Pitts were among the first researchers to introduce a concept similar to finite automata in 1943.
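The idea that the input string uniquely determines the state sequence can be sketched with a transition table. The example automaton below, which accepts binary strings containing an even number of 0s, and all identifier names are assumptions chosen for illustration.

```python
from typing import Dict, Set, Tuple


def run_dfa(
    transitions: Dict[Tuple[str, str], str],
    start: str,
    accepting: Set[str],
    word: str,
) -> bool:
    """Run the DFA on `word`; exactly one transition applies at each step."""
    state = start
    for symbol in word:
        state = transitions[(state, symbol)]
    return state in accepting


# Hypothetical example: accept binary strings with an even number of 0s.
EVEN_ZEROS = {
    ("even", "0"): "odd",
    ("even", "1"): "even",
    ("odd", "0"): "even",
    ("odd", "1"): "odd",
}

if __name__ == "__main__":
    print(run_dfa(EVEN_ZEROS, "even", {"even"}, "1001"))
```

Because the table has exactly one entry per (state, symbol) pair, there is never a choice to make, which is what "deterministic" means here.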

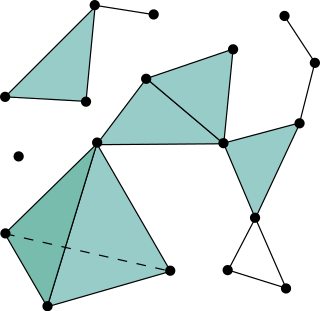

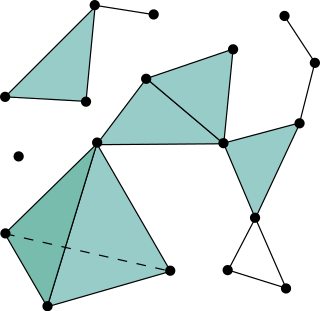

In combinatorics, an abstract simplicial complex (ASC), often called an abstract complex or just a complex, is a family of sets that is closed under taking subsets, i.e., every subset of a set in the family is also in the family. It is a purely combinatorial description of the geometric notion of a simplicial complex. For example, in a 2-dimensional simplicial complex, the sets in the family are the triangles, their edges, and their vertices.
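The closure property can be checked directly on small families. In this sketch the function names are hypothetical, and the empty set is ignored, since conventions differ on whether it counts as a face.

```python
from itertools import combinations
from typing import FrozenSet, Iterable, Set


def downward_closure(facets: Iterable[Iterable[int]]) -> Set[FrozenSet[int]]:
    """Return every non-empty subset of every given set."""
    faces: Set[FrozenSet[int]] = set()
    for facet in facets:
        items = tuple(facet)
        for k in range(1, len(items) + 1):
            for subset in combinations(items, k):
                faces.add(frozenset(subset))
    return faces


def is_abstract_simplicial_complex(family: Iterable[Iterable[int]]) -> bool:
    """True if the family (ignoring the empty set) is closed under taking subsets."""
    fam = {frozenset(s) for s in family if s}
    return downward_closure(fam) == fam


if __name__ == "__main__":
    triangle = downward_closure([{1, 2, 3}])
    print(len(triangle), is_abstract_simplicial_complex(triangle))
```

The closure of a single triangle contains the triangle itself, its three edges, and its three vertices.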

A finite-state transducer (FST) is a finite-state machine with two memory tapes, following the terminology for Turing machines: an input tape and an output tape. This contrasts with an ordinary finite-state automaton, which has a single tape. An FST is a type of finite-state automaton (FSA) that maps between two sets of symbols. An FST is more general than an FSA. An FSA defines a formal language by defining a set of accepted strings, while an FST defines relations between sets of strings.
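A deterministic transducer can be sketched by letting each transition emit an output string as it consumes an input symbol. The example below is a simplified, Mealy-style assumption without final states or nondeterminism; it outputs the running parity of the 1s read so far, and all names are illustrative.

```python
from typing import Dict, Tuple

Transitions = Dict[Tuple[str, str], Tuple[str, str]]


def transduce(transitions: Transitions, start: str, word: str) -> str:
    """Read `word` from the input tape and return what is written to the output tape."""
    state = start
    output = []
    for symbol in word:
        state, emitted = transitions[(state, symbol)]
        output.append(emitted)
    return "".join(output)


# Hypothetical example: after each input bit, emit the parity of the 1s seen so far.
RUNNING_PARITY: Transitions = {
    ("even", "0"): ("even", "0"),
    ("even", "1"): ("odd", "1"),
    ("odd", "0"): ("odd", "1"),
    ("odd", "1"): ("even", "0"),
}

if __name__ == "__main__":
    print(transduce(RUNNING_PARITY, "even", "1101"))
```

Where an acceptor only answers yes or no for a string, this machine relates each input string to an output string.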

In mathematics, a Markov decision process (MDP) is a discrete-time stochastic control process. It provides a mathematical framework for modeling decision making in situations where outcomes are partly random and partly under the control of a decision maker. MDPs are useful for studying optimization problems solved via dynamic programming. MDPs were known at least as early as the 1950s; a core body of research on Markov decision processes resulted from Ronald Howard's 1960 book, Dynamic Programming and Markov Processes. They are used in many disciplines, including robotics, automatic control, economics and manufacturing. The name of MDPs comes from the Russian mathematician Andrey Markov as they are an extension of Markov chains.
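One standard dynamic-programming method for MDPs is value iteration, sketched below on a toy two-state problem. The data layout (`P[state][action]` as a list of `(probability, next_state, reward)` triples), the discount factor, and the toy problem itself are assumptions made for illustration.

```python
from typing import Dict, List, Tuple

Outcome = Tuple[float, str, float]  # (probability, next_state, reward)
MDP = Dict[str, Dict[str, List[Outcome]]]


def q_value(outcomes: List[Outcome], V: Dict[str, float], gamma: float) -> float:
    """Expected discounted return of one action given current value estimates."""
    return sum(p * (r + gamma * V[s2]) for p, s2, r in outcomes)


def value_iteration(P: MDP, gamma: float = 0.9, tol: float = 1e-10):
    """Apply Bellman optimality updates until the values stop changing."""
    V = {s: 0.0 for s in P}
    while True:
        delta = 0.0
        for s in P:
            best = max(q_value(out, V, gamma) for out in P[s].values())
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            break
    policy = {s: max(P[s], key=lambda a: q_value(P[s][a], V, gamma)) for s in P}
    return V, policy


# Hypothetical toy problem: "go" reaches the rewarding state s1 half the time.
TOY: MDP = {
    "s0": {"stay": [(1.0, "s0", 0.0)], "go": [(0.5, "s1", 0.0), (0.5, "s0", 0.0)]},
    "s1": {"stay": [(1.0, "s1", 1.0)]},
}

if __name__ == "__main__":
    print(value_iteration(TOY))
```

The outcome of `go` is partly random and the choice between `stay` and `go` is under the decision maker's control, which mirrors the description above.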

A stochastic differential equation (SDE) is a differential equation in which one or more of the terms is a stochastic process, resulting in a solution which is also a stochastic process. SDEs have many applications throughout pure mathematics and are used to model the behaviour of stochastic systems such as stock prices, random growth models, or physical systems subjected to thermal fluctuations.
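A common way to approximate a solution numerically is the Euler–Maruyama scheme. The sketch below applies it to geometric Brownian motion, dX = mu X dt + sigma X dW, a frequently used stock-price model; the choice of this particular SDE and all parameter names are assumptions for illustration.

```python
import math
import random
from typing import List


def euler_maruyama_gbm(
    x0: float, mu: float, sigma: float, T: float, n: int, seed: int = 0
) -> List[float]:
    """Simulate one path of dX = mu*X dt + sigma*X dW on [0, T] with n steps."""
    rng = random.Random(seed)
    dt = T / n
    path = [x0]
    for _ in range(n):
        x = path[-1]
        # The Brownian increment over a step of length dt is Normal(0, dt).
        dW = rng.gauss(0.0, math.sqrt(dt))
        path.append(x + mu * x * dt + sigma * x * dW)
    return path


if __name__ == "__main__":
    print(euler_maruyama_gbm(x0=1.0, mu=0.05, sigma=0.2, T=1.0, n=5))
```

Each call with a different seed yields a different path, reflecting that the solution is itself a stochastic process. With `sigma = 0` the scheme reduces to the ordinary Euler method.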

In mathematics, subshifts of finite type are used to model dynamical systems, and in particular are the objects of study in symbolic dynamics and ergodic theory. They also describe the set of all possible sequences executed by a finite-state machine. They are the most widely studied shift spaces.
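A simple special case is a shift defined by a 0/1 adjacency matrix, where a sequence is allowed exactly when every pair of consecutive symbols is permitted by the matrix. The sketch below uses the golden mean shift (binary sequences with no two consecutive 1s) as an assumed example; the function names are hypothetical.

```python
from itertools import product
from typing import List, Sequence, Tuple

# Entry [a][b] is 1 when symbol b may follow symbol a. Here 1 may not follow 1.
GOLDEN_MEAN = [[1, 1], [1, 0]]


def is_allowed(word: Sequence[int], adjacency: List[List[int]]) -> bool:
    """True if every consecutive pair of symbols in `word` is permitted."""
    return all(adjacency[a][b] == 1 for a, b in zip(word, word[1:]))


def allowed_words(n: int, adjacency: List[List[int]]) -> List[Tuple[int, ...]]:
    """Enumerate all allowed words of length n (exponential; small n only)."""
    symbols = range(len(adjacency))
    return [w for w in product(symbols, repeat=n) if is_allowed(w, adjacency)]


if __name__ == "__main__":
    print([len(allowed_words(n, GOLDEN_MEAN)) for n in range(1, 6)])
```

The matrix can be read as the transition graph of a finite-state machine, and the allowed words are the sequences of states that the machine can pass through.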

In mathematics, ergodicity expresses the idea that a point of a moving system, either a dynamical system or a stochastic process, will eventually visit all parts of the space that the system moves in, in a uniform and random sense. This implies that the average behavior of the system can be deduced from the trajectory of a "typical" point. Equivalently, a sufficiently large collection of random samples from a process can represent the average statistical properties of the entire process. Ergodicity is a property of the system; it is a statement that the system cannot be reduced or factored into smaller components. Ergodic theory is the study of systems possessing ergodicity.
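The claim that averages along a typical trajectory match averages over the space can be illustrated numerically. The sketch below uses rotation of the circle by an irrational angle, a standard ergodic example, and compares it with the identity map, which is not ergodic; the observable and all names are assumptions chosen for illustration.

```python
import math
from typing import Callable


def time_average(
    observable: Callable[[float], float], x0: float, alpha: float, n: int
) -> float:
    """Average `observable` along the first n points of the orbit of x -> x + alpha mod 1."""
    x = x0
    total = 0.0
    for _ in range(n):
        total += observable(x)
        x = (x + alpha) % 1.0
    return total / n


def left_half(x: float) -> float:
    """Indicator of [0, 0.5); its average over the whole circle is 0.5."""
    return 1.0 if x < 0.5 else 0.0


GOLDEN_ANGLE = (math.sqrt(5) - 1) / 2  # an irrational rotation angle

if __name__ == "__main__":
    print(time_average(left_half, 0.1, GOLDEN_ANGLE, 100_000))
    print(time_average(left_half, 0.1, 0.0, 100_000))
```

For the irrational rotation the time average should be close to the space average of 0.5. For the identity map (`alpha = 0`) the orbit never leaves its starting point, so the time average only reflects where the point started.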

In mathematics, two functions are said to be topologically conjugate if there exists a homeomorphism that will conjugate the one into the other. Topological conjugacy, and the related but distinct notion of topological equivalence of flows, are important in the study of iterated functions and more generally dynamical systems, since, if the dynamics of one iterated function can be determined, then the dynamics of any topologically conjugate function follow trivially.
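A classical example is the conjugacy between the tent map T and the logistic map f(x) = 4x(1 - x) on [0, 1], given by the homeomorphism h(x) = sin²(πx/2), which satisfies h(T(x)) = f(h(x)). The sketch below checks this identity numerically on a grid; the function names are illustrative.

```python
import math


def tent(x: float) -> float:
    """Tent map on [0, 1]."""
    return 2 * x if x < 0.5 else 2 - 2 * x


def logistic(x: float) -> float:
    """Logistic map with parameter 4 on [0, 1]."""
    return 4 * x * (1 - x)


def h(x: float) -> float:
    """Homeomorphism of [0, 1] conjugating the tent map to the logistic map."""
    return math.sin(math.pi * x / 2) ** 2


def max_conjugacy_error(samples: int = 1001) -> float:
    """Largest value of |h(T(x)) - f(h(x))| over an evenly spaced grid."""
    grid = [i / (samples - 1) for i in range(samples)]
    return max(abs(h(tent(x)) - logistic(h(x))) for x in grid)


if __name__ == "__main__":
    print(max_conjugacy_error())
```

The reported error should be at the level of floating-point rounding, consistent with the identity holding exactly.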

In topology, a branch of mathematics, the ends of a topological space are, roughly speaking, the connected components of the "ideal boundary" of the space. That is, each end represents a topologically distinct way to move to infinity within the space. Adding a point at each end yields a compactification of the original space, known as the end compactification.

In the mathematical field of dynamical systems, a random dynamical system is a dynamical system in which the equations of motion have an element of randomness to them. Random dynamical systems are characterized by a state space S, a set of maps from S into itself that can be thought of as the set of all possible equations of motion, and a probability distribution Q on the set that represents the random choice of map. Motion in a random dynamical system can be informally thought of as a state evolving according to a succession of maps randomly chosen according to the distribution Q.
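The three ingredients (a state space S, a set of maps, and a distribution Q) can be sketched as follows. The state space [0, 1], the two affine maps, and the uniform choice of Q are assumptions made for illustration.

```python
import random
from typing import Callable, List, Sequence

# Hypothetical set of maps from S = [0, 1] into itself.
MAPS: List[Callable[[float], float]] = [
    lambda x: x / 2,
    lambda x: x / 2 + 0.5,
]
Q = [0.5, 0.5]  # probability of choosing each map


def random_orbit(
    maps: Sequence[Callable[[float], float]],
    weights: Sequence[float],
    x0: float,
    n: int,
    seed: int = 0,
) -> List[float]:
    """Evolve x0 by n maps drawn independently according to `weights`."""
    rng = random.Random(seed)
    xs = [x0]
    for _ in range(n):
        f = rng.choices(maps, weights=weights, k=1)[0]
        xs.append(f(xs[-1]))
    return xs


if __name__ == "__main__":
    print(random_orbit(MAPS, Q, 0.3, 10))
```

Each step draws one map from the set according to Q and applies it to the current state, which matches the informal description of motion given above.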

Sequential dynamical systems (SDSs) are a class of graph dynamical systems. They are discrete dynamical systems that generalize many aspects of, for example, classical cellular automata, and they provide a framework for studying asynchronous processes over graphs. The analysis of SDSs uses techniques from combinatorics, abstract algebra, graph theory, dynamical systems and probability theory.
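The difference between sequential and simultaneous updating can be sketched on a small graph. In the example below, the circle graph on four vertices, the NOR vertex function, and the update order are assumptions chosen for illustration.

```python
from typing import Callable, Dict, List, Sequence, Tuple


def nor(values: Sequence[int]) -> int:
    """Return 1 only if every input is 0."""
    return 0 if any(values) else 1


def sds_update(
    state: Sequence[int],
    neighbors: Dict[int, List[int]],
    order: Sequence[int],
    f: Callable[[Sequence[int]], int] = nor,
) -> Tuple[int, ...]:
    """Update vertices one at a time in `order`; later vertices see earlier changes."""
    x = list(state)
    for v in order:
        x[v] = f([x[v]] + [x[u] for u in neighbors[v]])
    return tuple(x)


def parallel_update(
    state: Sequence[int],
    neighbors: Dict[int, List[int]],
    f: Callable[[Sequence[int]], int] = nor,
) -> Tuple[int, ...]:
    """Cellular-automaton style update: all vertices read the old state."""
    return tuple(
        f([state[v]] + [state[u] for u in neighbors[v]]) for v in range(len(state))
    )


CIRCLE4 = {0: [3, 1], 1: [0, 2], 2: [1, 3], 3: [2, 0]}

if __name__ == "__main__":
    print(sds_update((0, 0, 0, 0), CIRCLE4, [0, 1, 2, 3]))
    print(parallel_update((0, 0, 0, 0), CIRCLE4))
```

Starting from the all-zero state, the sequential update produces a different result from the parallel one, because each vertex sees the values already changed earlier in the order.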

The Abelian sandpile model (ASM) is the more popular name of the original Bak–Tang–Wiesenfeld model (BTW). The BTW model was the first discovered example of a dynamical system displaying self-organized criticality. It was introduced by Per Bak, Chao Tang and Kurt Wiesenfeld in a 1987 paper.
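The toppling rule of the model can be sketched on a finite square grid: any cell holding four or more grains gives one grain to each of its four neighbours, and grains pushed over the edge are lost. The grid representation and the function name `stabilize` are assumptions for illustration.

```python
from typing import List


def stabilize(grid: List[List[int]]) -> List[List[int]]:
    """Topple cells with 4 or more grains until every cell holds fewer than 4."""
    g = [row[:] for row in grid]
    rows, cols = len(g), len(g[0])
    unstable = True
    while unstable:
        unstable = False
        for r in range(rows):
            for c in range(cols):
                if g[r][c] >= 4:
                    unstable = True
                    g[r][c] -= 4
                    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        rr, cc = r + dr, c + dc
                        # Grains that would leave the grid are discarded.
                        if 0 <= rr < rows and 0 <= cc < cols:
                            g[rr][cc] += 1
    return g


if __name__ == "__main__":
    for row in stabilize([[0, 0, 0], [0, 4, 0], [0, 0, 0]]):
        print(row)
```

The name "Abelian" refers to the fact that the final stable configuration does not depend on the order in which unstable cells are toppled, so the simple scan order used here is one valid choice among many.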

In the mathematics of infinite graphs, an end of a graph represents, intuitively, a direction in which the graph extends to infinity. Ends may be formalized mathematically as equivalence classes of infinite paths, as havens describing strategies for pursuit–evasion games on the graph, or as topological ends of topological spaces associated with the graph.

Stochastic cellular automata, also called probabilistic cellular automata (PCA), random cellular automata, or locally interacting Markov chains, are an important extension of cellular automata. Cellular automata are discrete-time dynamical systems of interacting entities whose states are discrete.
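One simple way to make a cellular automaton stochastic is to apply a deterministic local rule and then flip each cell independently with a small probability. The sketch below does this for a one-dimensional binary automaton with periodic boundary; the XOR-of-neighbours rule, the noise parameter `eps`, and the function name are assumptions for illustration.

```python
import random
from typing import List


def pca_step(cells: List[int], eps: float, rng: random.Random) -> List[int]:
    """Apply the XOR-of-neighbours rule, then flip each cell with probability eps."""
    n = len(cells)
    new = []
    for i in range(n):
        # Deterministic part: XOR of left and right neighbours (periodic boundary).
        value = cells[i - 1] ^ cells[(i + 1) % n]
        # Stochastic part: independent noise at each cell.
        if rng.random() < eps:
            value ^= 1
        new.append(value)
    return new


if __name__ == "__main__":
    rng = random.Random(0)
    state = [0, 0, 0, 1, 0, 0, 0, 0]
    for _ in range(5):
        print(state)
        state = pca_step(state, eps=0.05, rng=rng)
```

Setting `eps = 0` recovers the deterministic automaton, so the stochastic version can be viewed as a noisy perturbation of it.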

In mathematics, a Markov odometer is a certain type of topological dynamical system. It plays a fundamental role in ergodic theory and especially in orbit theory of dynamical systems, since a theorem of H. Dye asserts that every ergodic nonsingular transformation is orbit-equivalent to a Markov odometer.