Related Research Articles

Cognitive behavioral therapy (CBT) is a psycho-social intervention that aims to reduce symptoms of various mental health conditions, primarily depression and anxiety disorders. Cognitive behavioral therapy is one of the most effective means of treatment for substance abuse and co-occurring mental health disorders. CBT focuses on challenging and changing cognitive distortions and their associated behaviors to improve emotional regulation and develop personal coping strategies that target solving current problems. Though it was originally designed to treat depression, its uses have been expanded to include many issues and the treatment of many mental health conditions, including anxiety, substance use disorders, marital problems, ADHD, and eating disorders. CBT includes a number of cognitive or behavioral psychotherapies that treat defined psychopathologies using evidence-based techniques and strategies.

Evidence-based medicine (EBM) is "the conscientious, explicit and judicious use of current best evidence in making decisions about the care of individual patients." The aim of EBM is to integrate the experience of the clinician, the values of the patient, and the best available scientific information to guide decision-making about clinical management. The term was originally used to describe an approach to teaching the practice of medicine and improving decisions by individual physicians about individual patients.

Meta-analysis is the statistical combination of the results of multiple studies addressing a similar research question. An important part of this method involves computing an effect size across all of the studies; this involves extracting effect sizes and variance measures from various studies. Meta-analyses are integral in supporting research grant proposals, shaping treatment guidelines, and influencing health policies. They are also pivotal in summarizing existing research to guide future studies, thereby cementing their role as a fundamental methodology in metascience. Meta-analyses are often, but not always, important components of a systematic review procedure. For instance, a meta-analysis may be conducted on several clinical trials of a medical treatment, in an effort to obtain a better understanding of how well the treatment works.
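
As a rough illustration of combining effect sizes and variance measures, the sketch below pools study results with fixed-effect inverse-variance weighting. This is only one of several pooling models, chosen here for brevity. The function name `pooled_fixed_effect` and the three study values are hypothetical and are not taken from any real trial.

```python
import math


def pooled_fixed_effect(effects, variances):
    """Pool per-study effect sizes using inverse-variance weights.

    Assumes a fixed-effect model: every study estimates the same true
    effect, so each study is weighted by 1 / variance.
    """
    if not effects or len(effects) != len(variances):
        raise ValueError("need one variance per effect size")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")

    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    standard_error = math.sqrt(1.0 / total_weight)
    return pooled, standard_error


# Hypothetical effect sizes and variances from three studies.
effects = [0.30, 0.10, 0.20]
variances = [0.04, 0.01, 0.02]
pooled, se = pooled_fixed_effect(effects, variances)
print(f"pooled effect = {pooled:.3f}, standard error = {se:.3f}")
```

The most precise study (smallest variance) dominates the pooled estimate, which is why extracting variance measures alongside effect sizes matters.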

A placebo can be roughly defined as a sham medical treatment. Common placebos include inert tablets, inert injections, sham surgery, and other procedures.

A randomized controlled trial is a form of scientific experiment used to control factors not under direct experimental control. Examples of RCTs are clinical trials that compare the effects of drugs, surgical techniques, medical devices, diagnostic procedures or other medical treatments.

Clinical trials are prospective biomedical or behavioral research studies on human participants designed to answer specific questions about biomedical or behavioral interventions, including new treatments and known interventions that warrant further study and comparison. Clinical trials generate data on dosage, safety and efficacy. They are conducted only after they have received health authority/ethics committee approval in the country where approval of the therapy is sought. These authorities are responsible for vetting the risk/benefit ratio of the trial—their approval does not mean the therapy is 'safe' or effective, only that the trial may be conducted.

In a blind or blinded experiment, information which may influence the participants of the experiment is withheld until after the experiment is complete. Good blinding can reduce or eliminate experimental biases that arise from participants' expectations, the observer's effect on the participants, observer bias, confirmation bias, and other sources. A blind can be imposed on any participant of an experiment, including subjects, researchers, technicians, data analysts, and evaluators. In some cases, while blinding would be useful, it is impossible or unethical. For example, it is not possible to blind a patient to their treatment in a physical therapy intervention. A good clinical protocol ensures that blinding is as effective as possible within ethical and practical constraints.

The number needed to treat (NNT) or number needed to treat for an additional beneficial outcome (NNTB) is an epidemiological measure used in communicating the effectiveness of a health-care intervention, typically a treatment with medication. The NNT is the average number of patients who need to be treated to prevent one additional bad outcome. It is defined as the inverse of the absolute risk reduction, and computed as $1/(I_u - I_e)$, where $I_u$ is the incidence in the control (unexposed) group, and $I_e$ is the incidence in the treated (exposed) group. This calculation implicitly assumes monotonicity, that is, no individual can be harmed by treatment. The modern approach, based on counterfactual conditionals, relaxes this assumption and yields bounds on NNT.
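
A small worked example may make the calculation concrete. The sketch below computes the NNT as the inverse of the absolute risk reduction, writing `i_u` for the incidence in the control group and `i_e` for the incidence in the treated group. The function name and the event counts are hypothetical, and exact fractions are used only to avoid floating-point rounding in the illustration.

```python
import math
from fractions import Fraction


def number_needed_to_treat(control_events, control_total,
                           treated_events, treated_total):
    """Return the NNT as the inverse of the absolute risk reduction."""
    i_u = Fraction(control_events, control_total)   # control incidence
    i_e = Fraction(treated_events, treated_total)   # treated incidence
    absolute_risk_reduction = i_u - i_e
    if absolute_risk_reduction <= 0:
        # With no risk reduction the NNT is not defined as a benefit.
        raise ValueError("treated incidence is not lower than control")
    return 1 / absolute_risk_reduction


# Hypothetical trial: 20 of 100 controls and 15 of 100 treated patients
# experience the bad outcome, so the absolute risk reduction is 5%.
nnt = number_needed_to_treat(20, 100, 15, 100)
print(float(nnt))      # 20.0
print(math.ceil(nnt))  # 20, reported as a whole number of patients
```

When the result is not a whole number, it is commonly rounded up, since a fractional patient cannot be treated.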

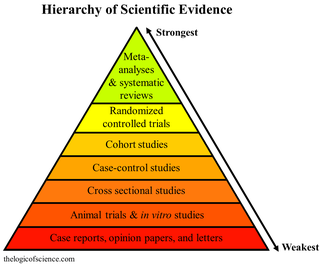

A hierarchy of evidence, comprising levels of evidence (LOEs), that is, evidence levels (ELs), is a heuristic used to rank the relative strength of results obtained from experimental research, especially medical research. There is broad agreement on the relative strength of large-scale, epidemiological studies. More than 80 different hierarchies have been proposed for assessing medical evidence. The design of the study and the endpoints measured affect the strength of the evidence. In clinical research, the best evidence for treatment efficacy is mainly from meta-analyses of randomized controlled trials (RCTs). Systematic reviews of completed, high-quality randomized controlled trials – such as those published by the Cochrane Collaboration – rank the same as systematic review of completed high-quality observational studies in regard to the study of side effects. Evidence hierarchies are often applied in evidence-based practices and are integral to evidence-based medicine (EBM).

Zelen's design is an experimental design for randomized clinical trials proposed by Harvard School of Public Health statistician Marvin Zelen (1927-2014). In this design, patients are randomized to either the treatment or control group before giving informed consent. Because the group to which a given patient is assigned is known, consent can be sought conditionally.

In a randomized experiment, allocation concealment hides the sorting of trial participants into treatment groups so that this knowledge cannot be exploited. Adequate allocation concealment serves to prevent study participants from influencing treatment allocations for subjects. Studies with poor allocation concealment are prone to selection bias.

In science, randomized experiments are the experiments that allow the greatest reliability and validity of statistical estimates of treatment effects. Randomization-based inference is especially important in experimental design and in survey sampling.
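
As a minimal sketch of what random assignment can look like in practice, the snippet below shuffles a participant list and deals participants into arms in turn. The function name `randomize`, the arm labels, and the participant identifiers are hypothetical. Real trials typically use more elaborate schemes (for example stratified or blocked randomization) together with allocation concealment, none of which is shown here.

```python
import random


def randomize(participants, arms=("treatment", "control"), seed=None):
    """Assign each participant to an arm by shuffling, then dealing in turn.

    Participant identifiers are assumed to be unique and hashable.
    A seed is accepted only so the illustration is reproducible.
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    # Dealing round-robin keeps arm sizes within one of each other.
    return {p: arms[i % len(arms)] for i, p in enumerate(shuffled)}


# Hypothetical participant identifiers.
allocation = randomize([f"P{i:02d}" for i in range(10)], seed=42)
for participant in sorted(allocation):
    print(participant, allocation[participant])
```

Because assignment depends only on the random shuffle, neither participants nor investigators can steer who receives which intervention.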

Treatment of ME/CFS is variable and uncertain, and the condition is primarily managed rather than cured.

Clinical trials are medical research studies conducted on human subjects. The human subjects are assigned to one or more interventions, and the investigators evaluate the effects of those interventions. The progress and results of clinical trials are analyzed statistically.

The Jadad scale, sometimes known as Jadad scoring or the Oxford quality scoring system, is a procedure to assess the methodological quality of a clinical trial by objective criteria. It is named after Canadian-Colombian physician Alex Jadad who in 1996 described a system for allocating such trials a score of between zero and five (rigorous). It is the most widely used such assessment in the world, and as of 2022, its seminal paper has been cited in over 23,000 scientific works.

Placebo-controlled studies are a way of testing a medical therapy in which, in addition to a group of subjects that receives the treatment to be evaluated, a separate control group receives a sham "placebo" treatment which is specifically designed to have no real effect. Placebos are most commonly used in blinded trials, where subjects do not know whether they are receiving real or placebo treatment. Often, there is also a further "natural history" group that does not receive any treatment at all.

In medicine, a stepped-wedge trial is a type of randomised controlled trial (RCT). An RCT is a scientific experiment that is designed to reduce bias when testing a new medical treatment, a social intervention, or another testable hypothesis.

The GRADE approach is a method of assessing the certainty in evidence and the strength of recommendations in health care. It provides a structured and transparent evaluation of the importance of outcomes of alternative management strategies, acknowledgment of patients' and the public's values and preferences, and comprehensive criteria for downgrading and upgrading certainty in evidence. It has important implications for those summarizing evidence for systematic reviews, health technology assessments, and clinical practice guidelines as well as other decision makers.

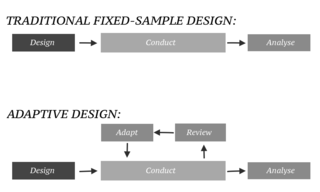

In an adaptive design of a clinical trial, the parameters and conduct of the trial for a candidate drug or vaccine may be changed based on an interim analysis. Adaptive design typically involves advanced statistics to interpret a clinical trial endpoint. This is in contrast to traditional single-arm clinical trials or randomized clinical trials (RCTs) that are static in their protocol and do not modify any parameters until the trial is completed. The adaptation process takes place at certain points in the trial, prescribed in the trial protocol. Importantly, this trial protocol is set before the trial begins, with the adaptation schedule and processes specified. Adaptations may include modifications to dosage, sample size, the drug undergoing trial, patient selection criteria, or the "cocktail" mix. The PANDA provides not only a summary of different adaptive designs, but also comprehensive information on adaptive design planning, conduct, analysis and reporting.

A platform trial is a type of prospective, disease-focused, adaptive, randomized clinical trial (RCT) that compares multiple, simultaneous and possibly differently-timed interventions against a single, constant control group. As a disease-focused trial design, platform trials attempt to answer the question "which therapy will best treat this disease". Platform trials are distinguished by two features: their use of a common control group, and the opportunity to alter the therapies under investigation during the active enrollment phase. Platform trials commonly take advantage of Bayesian statistics, but may incorporate elements of frequentist statistics and/or machine learning.
