In machine learning, a neural network is a model inspired by the structure and function of biological neural networks in animal brains.

A hidden Markov model (HMM) is a Markov model in which the observations are dependent on a latent Markov process (referred to as $X$). An HMM requires that there be an observable process $Y$ whose outcomes depend on the outcomes of $X$ in a known way. Since $X$ cannot be observed directly, the goal is to learn about the state of $X$ by observing $Y$. By definition of being a Markov model, an HMM has an additional requirement that the outcome of $Y$ at time $t = t_0$ must be "influenced" exclusively by the outcome of $X$ at $t = t_0$, and that the outcomes of $X$ and $Y$ at $t < t_0$ must be conditionally independent of $Y$ at $t = t_0$ given $X$ at time $t = t_0$. Estimation of the parameters in an HMM can be performed using maximum likelihood. For linear chain HMMs, the Baum–Welch algorithm can be used to estimate the parameters.
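To make the likelihood that maximum-likelihood estimation works with more concrete, the following is a minimal sketch of the forward algorithm for a discrete HMM. It is not Baum–Welch itself, only the likelihood computation that such an estimator would rely on. The function name `forward_likelihood` and the two-state, three-symbol parameters are hypothetical values chosen for illustration.

```python
def forward_likelihood(init, trans, emit, observations):
    """Return P(observations) under a discrete HMM using the forward algorithm.

    init[i]     : probability that the hidden chain starts in state i
    trans[i][j] : probability of moving from hidden state i to hidden state j
    emit[i][k]  : probability of observing symbol k while in hidden state i
    """
    n_states = len(init)
    # alpha[i] = P(observations so far, current hidden state = i)
    alpha = [init[i] * emit[i][observations[0]] for i in range(n_states)]
    for obs in observations[1:]:
        alpha = [
            emit[j][obs] * sum(alpha[i] * trans[i][j] for i in range(n_states))
            for j in range(n_states)
        ]
    # Summing over the final hidden state marginalizes the latent process out.
    return sum(alpha)


# Hypothetical model: 2 hidden states, 3 observable symbols (assumed values).
init = [0.6, 0.4]
trans = [[0.7, 0.3], [0.4, 0.6]]
emit = [[0.1, 0.4, 0.5], [0.6, 0.3, 0.1]]

likelihood = forward_likelihood(init, trans, emit, [0, 1, 2])
print(likelihood)
```

Each observation depends only on the hidden state at the same time step, which is why the update multiplies by a single emission probability per step.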

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalize to unseen data, and thus perform tasks without explicit instructions. Recently, artificial neural networks have been able to surpass many previous approaches in performance.

Unsupervised learning is a method in machine learning where, in contrast to supervised learning, algorithms learn patterns exclusively from unlabeled data. Within such an approach, a machine learning model tries to find any similarities, differences, patterns, and structure in data by itself. No prior human intervention is needed.

Nonlinear dimensionality reduction, also known as manifold learning, is any of various related techniques that aim to project high-dimensional data onto lower-dimensional latent manifolds, with the goal of either visualizing the data in the low-dimensional space, or learning the mapping itself. The techniques described below can be understood as generalizations of linear decomposition methods used for dimensionality reduction, such as singular value decomposition and principal component analysis.

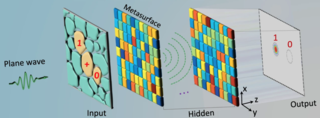

An optical neural network (ONN) is a physical implementation of an artificial neural network with optical components.

Neural coding is a neuroscience field concerned with characterising the hypothetical relationship between the stimulus and the neuronal responses, and the relationship among the electrical activities of the neurons in the ensemble. Based on the theory that sensory and other information is represented in the brain by networks of neurons, it is believed that neurons can encode both digital and analog information.

An autoencoder is a type of artificial neural network used to learn efficient codings of unlabeled data. An autoencoder learns two functions: an encoding function that transforms the input data, and a decoding function that recreates the input data from the encoded representation. The autoencoder learns an efficient representation (encoding) for a set of data, typically for dimensionality reduction.
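As an illustration of the encoding and decoding functions, here is a minimal sketch of a linear autoencoder trained by plain gradient descent, written without any libraries. It assumes two-dimensional inputs compressed to a single code value; the function name `train_linear_autoencoder`, the initial weights, the learning rate, and the toy data are all hypothetical choices, and practical autoencoders typically use nonlinear layers and a deep learning framework.

```python
def train_linear_autoencoder(data, steps=500, lr=0.05):
    """Fit a 2-D -> 1-D -> 2-D linear autoencoder by gradient descent.

    Returns the encoder weights, decoder weights, and the loss per step.
    """
    w = [0.1, 0.1]  # encoder weights: code z = w . x
    v = [0.1, 0.1]  # decoder weights: reconstruction = v * z
    n = len(data)
    history = []
    for _ in range(steps):
        grad_w = [0.0, 0.0]
        grad_v = [0.0, 0.0]
        loss = 0.0
        for x in data:
            z = w[0] * x[0] + w[1] * x[1]             # encode
            err = [v[k] * z - x[k] for k in range(2)]  # decode, then compare
            loss += (err[0] ** 2 + err[1] ** 2) / n    # mean squared error
            dz = 2 * (err[0] * v[0] + err[1] * v[1])   # d(loss)/d(z)
            for k in range(2):
                grad_v[k] += 2 * err[k] * z / n
                grad_w[k] += dz * x[k] / n
        history.append(loss)
        w = [w[k] - lr * grad_w[k] for k in range(2)]
        v = [v[k] - lr * grad_v[k] for k in range(2)]
    return w, v, history


# Toy data lying on the line y = 2x, so one code value is enough (assumption).
data = [(s, 2 * s) for s in (-1.0, -0.5, 0.0, 0.5, 1.0)]
w, v, history = train_linear_autoencoder(data)

code = w[0] * 1.0 + w[1] * 2.0            # encode the point (1, 2)
reconstruction = [v[0] * code, v[1] * code]  # decode it again
print(history[0], history[-1], reconstruction)
```

Because the toy data has only one underlying degree of freedom, a single code value can reconstruct it, which is the sense in which the learned encoding performs dimensionality reduction.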

Spiking neural networks (SNNs) are artificial neural networks (ANN) that more closely mimic natural neural networks. In addition to neuronal and synaptic state, SNNs incorporate the concept of time into their operating model. The idea is that neurons in the SNN do not transmit information at each propagation cycle, but rather transmit information only when a membrane potential—an intrinsic quality of the neuron related to its membrane electrical charge—reaches a specific value, called the threshold. When the membrane potential reaches the threshold, the neuron fires, and generates a signal that travels to other neurons which, in turn, increase or decrease their potentials in response to this signal. A neuron model that fires at the moment of threshold crossing is also called a spiking neuron model.
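The threshold behaviour described above can be sketched with a discrete-time leaky integrate-and-fire neuron. This is one simple spiking neuron model among many, not the definition of an SNN; the function name `simulate_lif` and the `threshold`, `leak`, and `reset` values are assumptions made for illustration.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    Returns a list with 1 at every step where the neuron fires, else 0.
    """
    potential = reset
    spikes = []
    for current in input_current:
        # The membrane potential decays (leaks) and integrates the new input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)   # threshold reached: the neuron fires
            potential = reset  # the potential is reset after the spike
        else:
            spikes.append(0)   # below threshold: nothing is transmitted
    return spikes


# A constant input of 0.3 per step (assumed value) for ten steps.
spikes = simulate_lif([0.3] * 10)
print(spikes)  # [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
```

The neuron stays silent on most steps and emits a signal only when the accumulated potential crosses the threshold, which is how timing enters the model.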

Hierarchical temporal memory (HTM) is a biologically constrained machine intelligence technology developed by Numenta. Originally described in the 2004 book On Intelligence by Jeff Hawkins with Sandra Blakeslee, HTM is primarily used today for anomaly detection in streaming data. The technology is based on neuroscience and the physiology and interaction of pyramidal neurons in the neocortex of the mammalian brain.

There are many types of artificial neural networks (ANN).

Deep learning is the subset of machine learning methods based on neural networks with representation learning. The adjective "deep" refers to the use of multiple layers in the network. Methods used can be supervised, semi-supervised, or unsupervised.

The wake-sleep algorithm is an unsupervised learning algorithm for deep generative models, especially Helmholtz machines. The algorithm is similar to the expectation-maximization algorithm, and optimizes the model likelihood for observed data. The name of the algorithm derives from its use of two learning phases, the “wake” phase and the “sleep” phase, which are performed alternately. It can be conceived as a model for learning in the brain, but it is also applied in machine learning.

In machine learning, feature learning or representation learning is a set of techniques that allows a system to automatically discover the representations needed for feature detection or classification from raw data. This replaces manual feature engineering and allows a machine to both learn the features and use them to perform a specific task.

Quantum machine learning is the integration of quantum algorithms within machine learning programs.

Multimodal learning, in the context of machine learning, is a type of deep learning using a combination of various modalities of data, such as text, audio, or images, in order to create a more robust model of the real-world phenomena in question. In contrast, singular modal learning would analyze text or imaging data independently. Multimodal machine learning combines these fundamentally different statistical analyses using specialized modeling strategies and algorithms, resulting in a model that comes closer to representing the real world.

The following outline is provided as an overview of and topical guide to machine learning:

In machine learning, a variational autoencoder (VAE) is an artificial neural network architecture introduced by Diederik P. Kingma and Max Welling. It is part of the families of probabilistic graphical models and variational Bayesian methods.

A latent space, also known as a latent feature space or embedding space, is an embedding of a set of items within a manifold in which items resembling each other are positioned closer to one another. Position within the latent space can be viewed as being defined by a set of latent variables that emerge from the resemblances among the objects.

Ila Fiete is an Indian–American physicist and computational neuroscientist as well as a Professor in the Department of Brain and Cognitive Sciences within the McGovern Institute for Brain Research at the Massachusetts Institute of Technology. Fiete builds theoretical models and analyses neural data to uncover how neural circuits perform computations and how the brain represents and manipulates information involved in memory and reasoning.