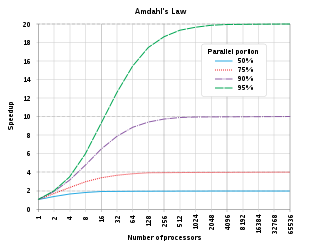

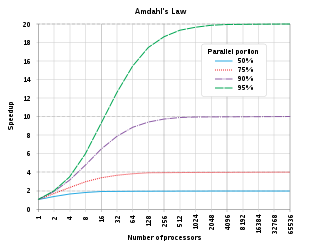

In computer architecture, Amdahl's law is a formula which gives the theoretical speedup in latency of the execution of a task at fixed workload that can be expected of a system whose resources are improved. It states that "the overall performance improvement gained by optimizing a single part of a system is limited by the fraction of time that the improved part is actually used". It is named after computer scientist Gene Amdahl, and was presented at the American Federation of Information Processing Societies (AFIPS) Spring Joint Computer Conference in 1967.

Queueing theory is the mathematical study of waiting lines, or queues. A queueing model is constructed so that queue lengths and waiting time can be predicted. Queueing theory is generally considered a branch of operations research because the results are often used when making business decisions about the resources needed to provide a service.

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. As power consumption by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multi-core processors.

In mathematical queueing theory, Little's law is a theorem by John Little which states that the long-term average number L of customers in a stationary system is equal to the long-term average effective arrival rate λ multiplied by the average time W that a customer spends in the system. Expressed algebraically the law is

Scalability is the property of a system to handle a growing amount of work. One definition for software systems specifies that this may be done by adding resources to the system.

In direct-current circuit theory, Norton's theorem, also called the Mayer–Norton theorem, is a simplification that can be applied to networks made of linear time-invariant resistances, voltage sources, and current sources. At a pair of terminals of the network, it can be replaced by a current source and a single resistor in parallel.

In computing, scheduling is the action of assigning resources to perform tasks. The resources may be processors, network links or expansion cards. The tasks may be threads, processes or data flows.

Gene Myron Amdahl was an American computer architect and high-tech entrepreneur, chiefly known for his work on mainframe computers at IBM and later his own companies, especially Amdahl Corporation. He formulated Amdahl's law, which states a fundamental limitation of parallel computing.

Theoretical computer science (TCS) is a subset of general computer science and mathematics that focuses on mathematical aspects of computer science such as the theory of computation, formal language theory, the lambda calculus and type theory.

Amdahl Corporation was an information technology company which specialized in IBM mainframe-compatible computer products, some of which were regarded as supercomputers competing with those from Cray Research. Founded in 1970 by Gene Amdahl, a former IBM computer engineer best known as chief architect of System/360, it has been a wholly owned subsidiary of Fujitsu since 1997. The company was located in Sunnyvale, California.

MOSIX is a proprietary distributed operating system. Although early versions were based on older UNIX systems, since 1999 it focuses on Linux clusters and grids. In a MOSIX cluster/grid there is no need to modify or to link applications with any library, to copy files or login to remote nodes, or even to assign processes to different nodes – it is all done automatically, like in an SMP.

In computer architecture, Gustafson's law gives the speedup in the execution time of a task that theoretically gains from parallel computing, using a hypothetical run of the task on a single-core machine as the baseline. To put it another way, it is the theoretical "slowdown" of an already parallelized task if running on a serial machine. It is named after computer scientist John L. Gustafson and his colleague Edwin H. Barsis, and was presented in the article Reevaluating Amdahl's Law in 1988.

A discrete-event simulation (DES) models the operation of a system as a (discrete) sequence of events in time. Each event occurs at a particular instant in time and marks a change of state in the system. Between consecutive events, no change in the system is assumed to occur; thus the simulation time can directly jump to the occurrence time of the next event, which is called next-event time progression.

In computing, computer performance is the amount of useful work accomplished by a computer system. Outside of specific contexts, computer performance is estimated in terms of accuracy, efficiency and speed of executing computer program instructions. When it comes to high computer performance, one or more of the following factors might be involved:

In multiprocessor computer systems, software lockout is the issue of performance degradation due to the idle wait times spent by the CPUs in kernel-level critical sections. Software lockout is the major cause of scalability degradation in a multiprocessor system, posing a limit on the maximum useful number of processors. To mitigate the phenomenon, the kernel must be designed to have its critical sections as short as possible, therefore decomposing each data structure in smaller substructures.

In queueing theory, a discipline within the mathematical theory of probability, mean value analysis (MVA) is a recursive technique for computing expected queue lengths, waiting time at queueing nodes and throughput in equilibrium for a closed separable system of queues. The first approximate techniques were published independently by Schweitzer and Bard, followed later by an exact version by Lavenberg and Reiser published in 1980.

Within theoretical computer science, the Sun–Ni law is a memory-bounded speedup model which states that as computing power increases the corresponding increase in problem size is constrained by the system’s memory capacity. In general, as a system grows in computational power, the problems run on the system increase in size. Analogous to Amdahl's law, which says that the problem size remains constant as system sizes grow, and Gustafson's law, which proposes that the problem size should scale but be bound by a fixed amount of time, the Sun–Ni law states the problem size should scale but be bound by the memory capacity of the system. Sun–Ni law was initially proposed by Xian-He Sun and Lionel Ni at the Proceedings of IEEE Supercomputing Conference 1990.

MQX is a real-time operating system (RTOS) developed by Precise Software Technologies, Inc., and currently sold by Synopsys, Embedded Access, Inc., and NXP Semiconductors.

Oyekunle Ayinde "Kunle" Olukotun is a British-born Nigerian computer scientist who is the Cadence Design Systems Professor of the Stanford School of Engineering, Professor of Electrical Engineering and Computer Science at Stanford University and the director of the Stanford Pervasive Parallelism Lab. Olukotun is known as the “father of the multi-core processor”, and the leader of the Stanford Hydra Chip Multiprocessor research project. Olukotun's achievements include designing the first general-purpose multi-core CPU, innovating single-chip multiprocessor and multi-threaded processor design, and pioneering multicore CPUs and GPUs, transactional memory technology and domain-specific languages programming models. Olukotun's research interests include computer architecture, parallel programming environments and scalable parallel systems, domain specific languages and high-level compilers.