In computing, a legacy system is an old method, technology, computer system, or application program, "of, relating to, or being a previous or outdated computer system", yet still in use. Often referencing a system as "legacy" means that it paved the way for the standards that would follow it. This can also imply that the system is out of date or in need of replacement.

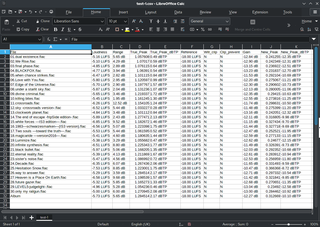

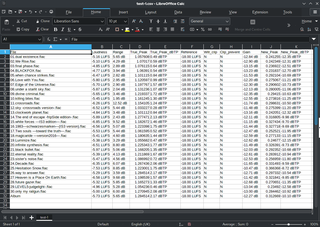

A spreadsheet is a computer application for computation, organization, analysis and storage of data in tabular form. Spreadsheets were developed as computerized analogs of paper accounting worksheets. The program operates on data entered in cells of a table. Each cell may contain either numeric or text data, or the results of formulas that automatically calculate and display a value based on the contents of other cells. The term spreadsheet may also refer to one such electronic document.

Software testing is the act of examining the artifacts and the behavior of the software under test by verification and validation. Software testing can also provide an objective, independent view of the software to allow the business to appreciate and understand the risks of software implementation. Test techniques include, but are not limited to:

In computer networking, a thin client, sometimes called slim client or lean client, is a simple (low-performance) computer that has been optimized for establishing a remote connection with a server-based computing environment. They are sometimes known as network computers, or in their simplest form as zero clients. The server does most of the work, which can include launching software programs, performing calculations, and storing data. This contrasts with a rich client or a conventional personal computer; the former is also intended for working in a client–server model but has significant local processing power, while the latter aims to perform its function mostly locally.

A software bug is an error, flaw or fault in the design, development, or operation of computer software that causes it to produce an incorrect or unexpected result, or to behave in unintended ways. The process of finding and correcting bugs is termed "debugging" and often uses formal techniques or tools to pinpoint bugs. Since the 1950s, some computer systems have been designed to detect or auto-correct various software errors during operations.

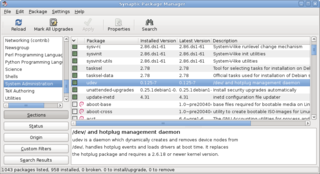

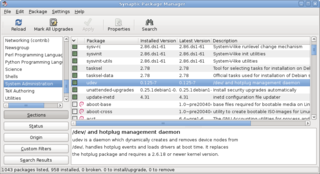

A package manager or package-management system is a collection of software tools that automates the process of installing, upgrading, configuring, and removing computer programs for a computer in a consistent manner.

Genera is a commercial operating system and integrated development environment for Lisp machines created by Symbolics. It is essentially a fork of an earlier operating system originating on the Massachusetts Institute of Technology (MIT) AI Lab's Lisp machines which Symbolics had used in common with Lisp Machines, Inc. (LMI), and Texas Instruments (TI). Genera was also sold by Symbolics as Open Genera, which runs Genera on computers based on a Digital Equipment Corporation (DEC) Alpha processor using Tru64 UNIX. In 2021 a new version was released as Portable Genera which runs on Tru64 UNIX on Alpha, Linux on x86-64 and Arm64 Linux, and macOS on x86-64 and Arm64. It is released and licensed as proprietary software.

A filename extension, file name extension or file extension is a suffix to the name of a computer file. The extension indicates a characteristic of the file contents or its intended use. A filename extension is typically delimited from the rest of the filename with a period, but in some systems it is separated with spaces.

In computing, a crash, or system crash, occurs when a computer program such as a software application or an operating system stops functioning properly and exits. On some operating systems or individual applications, a crash reporting service will report the crash and any details relating to it, usually to the developer(s) of the application. If the program is a critical part of the operating system, the entire system may crash or hang, often resulting in a kernel panic or fatal system error.

Test-driven development (TDD) is a software development process relying on software requirements being converted to test cases before software is fully developed, and tracking all software development by repeatedly testing the software against all test cases. This is as opposed to software being developed first and test cases created later.

In systems engineering and software engineering, requirements analysis focuses on the tasks that determine the needs or conditions to meet the new or altered product or project, taking account of the possibly conflicting requirements of the various stakeholders, analyzing, documenting, validating and managing software or system requirements.

Skeleton programming is a style of computer programming based on simple high-level program structures and so called dummy code. Program skeletons resemble pseudocode, but allow parsing, compilation and testing of the code. Dummy code is inserted in a program skeleton to simulate processing and avoid compilation error messages. It may involve empty function declarations, or functions that return a correct result only for a simple test case where the expected response of the code is known.

Software rot is either a slow deterioration of software quality over time or its diminishing responsiveness that will eventually lead to software becoming faulty, unusable, or in need of upgrade. This is not a physical phenomenon; the software does not actually decay, but rather suffers from a lack of being responsive and updated with respect to the changing environment in which it resides.

In manufacturing and design, a mockup, or mock-up, is a scale or full-size model of a design or device, used for teaching, demonstration, design evaluation, promotion, and other purposes. A mockup may be a prototype if it provides at least part of the functionality of a system and enables testing of a design.

Desktop virtualization is a software technology that separates the desktop environment and associated application software from the physical client device that is used to access it.

In programming and software design, an event is an action or occurrence recognized by software, often originating asynchronously from the external environment, that may be handled by the software. Computer events can be generated or triggered by the system, by the user, or in other ways. Typically, events are handled synchronously with the program flow; that is, the software may have one or more dedicated places where events are handled, frequently an event loop.

In software development, the V-model represents a development process that may be considered an extension of the waterfall model, and is an example of the more general V-model. Instead of moving down in a linear way, the process steps are bent upwards after the coding phase, to form the typical V shape. The V-Model demonstrates the relationships between each phase of the development life cycle and its associated phase of testing. The horizontal and vertical axes represent time or project completeness (left-to-right) and level of abstraction, respectively.

In computer science, data type limitations and software bugs can cause errors in time and date calculation or display. These are most commonly manifestations of arithmetic overflow, but can also be the result of other issues. The most well-known consequence of this type is the Y2K problem, but many other milestone dates or times exist that have caused or will cause problems depending on various programming deficiencies.

Turbo is a set of software products and services developed by the Code Systems Corporation for application virtualization, portable application creation, and digital distribution. Code Systems Corporation is an American corporation headquartered in Seattle, Washington, and is best known for its Turbo products that include Browser Sandbox, Turbo Studio, TurboServer, and Turbo.

The Point Cloud Library (PCL) is an open-source library of algorithms for point cloud processing tasks and 3D geometry processing, such as occur in three-dimensional computer vision. The library contains algorithms for filtering, feature estimation, surface reconstruction, 3D registration, model fitting, object recognition, and segmentation. Each module is implemented as a smaller library that can be compiled separately. PCL has its own data format for storing point clouds - PCD, but also allows datasets to be loaded and saved in many other formats. It is written in C++ and released under the BSD license.