Related Research Articles

Software testing is the act of examining the artifacts and the behavior of the software under test by verification and validation. Software testing can also provide an objective, independent view of the software to allow the business to appreciate and understand the risks of software implementation. Test techniques include, but are not limited to:

In computer programming, unit testing is a software testing method by which individual units of source code—sets of one or more computer program modules together with associated control data, usage procedures, and operating procedures—are tested to determine whether they are fit for use. It is a standard step in development and implementation approaches such as Agile.

Test-driven development (TDD) is a software development process relying on software requirements being converted to test cases before software is fully developed, and tracking all software development by repeatedly testing the software against all test cases. This is as opposed to software being developed first and test cases created later.

A programming tool or software development tool is a computer program that software developers use to create, debug, maintain, or otherwise support other programs and applications. The term usually refers to relatively simple programs, that can be combined to accomplish a task, much as one might use multiple hands to fix a physical object. The most basic tools are a source code editor and a compiler or interpreter, which are used ubiquitously and continuously. Other tools are used more or less depending on the language, development methodology, and individual engineer, often used for a discrete task, like a debugger or profiler. Tools may be discrete programs, executed separately – often from the command line – or may be parts of a single large program, called an integrated development environment (IDE). In many cases, particularly for simpler use, simple ad hoc techniques are used instead of a tool, such as print debugging instead of using a debugger, manual timing instead of a profiler, or tracking bugs in a text file or spreadsheet instead of a bug tracking system.

In software testing, test automation is the use of software separate from the software being tested to control the execution of tests and the comparison of actual outcomes with predicted outcomes. Test automation can automate some repetitive but necessary tasks in a formalized testing process already in place, or perform additional testing that would be difficult to do manually. Test automation is critical for continuous delivery and continuous testing.

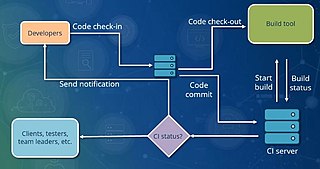

In software engineering, continuous integration (CI) is the practice of merging all developers' working copies to a shared mainline several times a day. Nowadays it is typically implemented in such a way that it triggers an automated build with testing. Grady Booch first proposed the term CI in his 1991 method, although he did not advocate integrating several times a day. Extreme programming (XP) adopted the concept of CI and did advocate integrating more than once per day – perhaps as many as tens of times per day.

Dynamic program analysis is analysis of computer software that involves executing the program in question. Dynamic program analysis includes familiar techniques from software engineering such as unit testing, debugging, and measuring code coverage, but also includes lesser-known techniques like program slicing and invariant inference. Dynamic program analysis is widely applied in security in the form of runtime memory error detection, fuzzing, dynamic symbolic execution, and taint tracking.

Dynamic testing is a term used in software engineering to describe the testing of the dynamic behavior of code.

A software regression is a type of software bug where a feature that has worked before stops working. This may happen after changes are applied to the software's source code, including the addition of new features and bug fixes. They may also be introduced by changes to the environment in which the software is running, such as system upgrades, system patching or a change to daylight saving time. A software performance regression is a situation where the software still functions correctly, but performs more slowly or uses more memory or resources than before. Various types of software regressions have been identified in practice, including the following:

A test strategy is an outline that describes the testing approach of the software development cycle. The purpose of a test strategy is to provide a rational deduction from organizational, high-level objectives to actual test activities to meet those objectives from a quality assurance perspective. The creation and documentation of a test strategy should be done in a systematic way to ensure that all objectives are fully covered and understood by all stakeholders. It should also frequently be reviewed, challenged and updated as the organization and the product evolve over time. Furthermore, a test strategy should also aim to align different stakeholders of quality assurance in terms of terminology, test and integration levels, roles and responsibilities, traceability, planning of resources, etc.

Parasoft is an independent software vendor specializing in automated software testing and application security with headquarters in Monrovia, California. It was founded in 1987 by four graduates of the California Institute of Technology who planned to commercialize the parallel computing software tools they had been working on for the Caltech Cosmic Cube, which was the first working hypercube computer built.

In computer programming and software development, debugging is the process of finding and resolving bugs within computer programs, software, or systems.

Continuous testing is the process of executing automated tests as part of the software delivery pipeline to obtain immediate feedback on the business risks associated with a software release candidate. Continuous testing was originally proposed as a way of reducing waiting time for feedback to developers by introducing development environment-triggered tests as well as more traditional developer/tester-triggered tests.

Bisection is a method used in software development to identify change sets that result in a specific behavior change. It is mostly employed for finding the patch that introduced a bug. Another application area is finding the patch that indirectly fixed a bug.

Parasoft C/C++test is an integrated set of tools for testing C and C++ source code that software developers use to analyze, test, find defects, and measure the quality and security of their applications. It supports software development practices that are part of development testing, including static code analysis, dynamic code analysis, unit test case generation and execution, code coverage analysis, regression testing, runtime error detection, requirements traceability, and code review. It's a commercial tool that supports operation on Linux, Windows, and Solaris platforms as well as support for on-target embedded testing and cross compilers.

Development testing is a software development process that involves synchronized application of a broad spectrum of defect prevention and detection strategies in order to reduce software development risks, time, and costs.

In computer programming and software testing, smoke testing is preliminary testing or sanity testing to reveal simple failures severe enough to, for example, reject a prospective software release. Smoke tests are a subset of test cases that cover the most important functionality of a component or system, used to aid assessment of whether main functions of the software appear to work correctly. When used to determine if a computer program should be subjected to further, more fine-grained testing, a smoke test may be called a pretest or an intake test. Alternatively, it is a set of tests run on each new build of a product to verify that the build is testable before the build is released into the hands of the test team. In the DevOps paradigm, use of a build verification test step is one hallmark of the continuous integration maturity stage.

In software development, a neutral build is a software build that reflects the current state of the source code checked into the source code version control system by the developers, and done in a neutral environment.

Automatic bug-fixing is the automatic repair of software bugs without the intervention of a human programmer. It is also commonly referred to as automatic patch generation, automatic bug repair, or automatic program repair. The typical goal of such techniques is to automatically generate correct patches to eliminate bugs in software programs without causing software regression.

This article discusses a set of tactics useful in software testing. It is intended as a comprehensive list of tactical approaches to Software Quality Assurance (more widely colloquially known as Quality Assurance and general application of the test method.

References

- ↑ Pezzè, Mauro; Young, Michal (2008). Software testing and analysis: process, principles, and techniques. Wiley.

Testing activities that focus on regression problems are called (non) regression testing. Usually "non" is omitted

- ↑ Basu, Anirban (2015). Software Quality Assurance, Testing and Metrics. PHI Learning. ISBN 978-81-203-5068-7.

- ↑ National Research Council Committee on Aging Avionics in Military Aircraft: Aging Avionics in Military Aircraft. The National Academies Press, 2001, page 2: ″Each technology-refresh cycle requires regression testing.″

- ↑ Boulanger, Jean-Louis (2015). CENELEC 50128 and IEC 62279 Standards. Wiley. ISBN 978-1119122487.

- ↑ Kolawa, Adam; Huizinga, Dorota (2007). Automated Defect Prevention: Best Practices in Software Management. Wiley-IEEE Computer Society Press. p. 73. ISBN 978-0-470-04212-0.

- ↑ Automate Regression Tests When Feasible, Automated Testing: Selected Best Practices, Elfriede Dustin, Safari Books Online

- ↑ daVeiga, Nada (2008-02-06). "Change Code Without Fear: Utilize a Regression Safety Net". Dr. Dobb's Journal .

- ↑ Dudney, Bill (2004-12-08). "Developer Testing Is 'In': An interview with Alberto Savoia and Kent Beck" . Retrieved 2007-11-29.

- 1 2 3 4 Duggal, Gaurav; Suri, Bharti (2008-03-29). Understanding Regression Testing Techniques. National Conference on Challenges and Opportunities. Mandi Gobindgarh, Punjab, India. CiteSeerX 10.1.1.460.5875 .

- 1 2 3 Yoo, S.; Harman, M. (2010). "Regression testing minimization, selection and prioritization: a survey". Software Testing, Verification and Reliability. 22 (2): 67–120. doi:10.1002/stvr.430.

- ↑ Kolawa, Adam. "Regression Testing, Programmer to Programmer". Wrox.