Music is generally defined as the art of arranging sound to create some combination of form, harmony, melody, rhythm, or otherwise expressive content. Definitions of music vary across cultures, though music is found in all human societies and is considered a cultural universal. While scholars agree that music is defined by a few specific elements, there is no consensus on their precise definitions. The creation of music is commonly divided into musical composition, musical improvisation, and musical performance, though the topic itself extends into academic disciplines, criticism, philosophy, psychology, and therapeutic contexts. Music may be performed or improvised using a vast range of instruments, including the human voice.

Music notation, or musical notation, is any system used to visually represent aurally perceived music, whether played on instruments or sung by the human voice, through written, printed, or otherwise produced symbols, including symbols for silences such as rests.

An overtone is any resonant frequency above the fundamental frequency of a sound. In other words, overtones are all pitches higher than the lowest pitch within an individual sound; the fundamental is the lowest pitch. While the fundamental is usually heard most prominently, overtones are actually present in any pitch except a true sine wave. The relative volume or amplitude of various overtone partials is one of the key identifying features of timbre, or the individual characteristic of a sound.
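To make the link between overtones and timbre concrete, here is a minimal additive-synthesis sketch. It assumes an idealized harmonic tone in which partial k sits at exactly k times the fundamental (real instruments are often slightly inharmonic), and the function names are hypothetical. Two tones share the same 220 Hz fundamental but differ in their overtone amplitudes, which is the difference the paragraph identifies as timbre; a single-partial tone is a pure sine wave with no overtones.

```python
import math

def harmonic_partials(f0, amplitudes):
    """Return (frequency, amplitude) pairs for an idealized harmonic tone.

    Assumption: partial k (1-based) is exactly k * f0. Partial 1 is the
    fundamental; every later partial is an overtone.
    """
    return [(k * f0, a) for k, a in enumerate(amplitudes, start=1)]

def synthesize(partials, sample_rate, n_samples):
    """Additive synthesis: sum one sine wave per partial, sample by sample."""
    return [
        sum(a * math.sin(2 * math.pi * f * n / sample_rate) for f, a in partials)
        for n in range(n_samples)
    ]

# Same fundamental, different overtone balance -> same pitch, different timbre.
pure_sine = harmonic_partials(220.0, [1.0])                  # fundamental only
brighter = harmonic_partials(220.0, [1.0, 0.5, 0.33, 0.25])  # plus three overtones

overtones = [f for f, _ in brighter[1:]]
print(overtones)  # [440.0, 660.0, 880.0]

samples = synthesize(brighter, sample_rate=8000, n_samples=800)  # 0.1 s of audio
```

Changing only the amplitude list, while keeping `f0` fixed, changes the timbre but not the perceived pitch.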

Time stretching is the process of changing the speed or duration of an audio signal without affecting its pitch. Pitch scaling is the opposite: the process of changing the pitch without affecting the speed. Pitch shift is pitch scaling implemented in an effects unit and intended for live performance. Pitch control is a simpler process which affects pitch and speed simultaneously by slowing down or speeding up a recording.
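The difference between these operations can be shown with simple arithmetic. The sketch below assumes 12-tone equal temperament, where a shift of n semitones multiplies frequency by 2^(n/12); all function names are hypothetical. Pitch control (varispeed) ties pitch and duration together, time stretching changes only duration, and pitch scaling changes only pitch. A naive linear-interpolation resampler is included to show why reading a recording faster raises its pitch and shortens it at the same time; real time-stretching and pitch-scaling algorithms are considerably more involved and are not shown here.

```python
def semitones_to_ratio(semitones):
    """Frequency ratio for a shift of `semitones` in 12-tone equal temperament."""
    return 2.0 ** (semitones / 12.0)

def pitch_control(duration_s, semitones):
    """Varispeed: faster playback raises pitch AND shortens duration together."""
    ratio = semitones_to_ratio(semitones)
    return duration_s / ratio, ratio          # (new duration, frequency ratio)

def time_stretch(duration_s, stretch_factor):
    """Time stretching: duration changes, frequency ratio stays 1.0."""
    return duration_s * stretch_factor, 1.0

def pitch_scale(duration_s, semitones):
    """Pitch scaling: frequency ratio changes, duration stays the same."""
    return duration_s, semitones_to_ratio(semitones)

def resample_varispeed(samples, rate):
    """Naive pitch control: read the input `rate` times faster.

    Intermediate positions are filled by linear interpolation between
    neighbouring samples, so a rate of 2.0 halves the length and doubles
    every frequency in the signal.
    """
    out = []
    pos = 0.0
    while pos <= len(samples) - 1:
        i = int(pos)
        frac = pos - i
        nxt = samples[i + 1] if i + 1 < len(samples) else samples[i]
        out.append(samples[i] * (1 - frac) + nxt * frac)
        pos += rate
    return out

print(pitch_control(60.0, 12))  # (30.0, 2.0): one octave up, half as long
print(time_stretch(60.0, 1.5))  # (90.0, 1.0): longer, same pitch
print(pitch_scale(60.0, 12))    # (60.0, 2.0): one octave up, same length
```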

Orchestration is the study or practice of writing music for an orchestra or of adapting music composed for another medium for an orchestra. Also called "instrumentation", orchestration is the assignment of different instruments to play the different parts of a musical work. For example, a work for solo piano could be adapted and orchestrated so that an orchestra could perform the piece, or a concert band piece could be orchestrated for a symphony orchestra.

Music theory is the study of the practices and possibilities of music. The Oxford Companion to Music describes three interrelated uses of the term "music theory": the first is the "rudiments" needed to understand music notation; the second is learning scholars' views on music from antiquity to the present; the third is a sub-topic of musicology that "seeks to define processes and general principles in music". The musicological approach to theory differs from music analysis "in that it takes as its starting-point not the individual work or performance but the fundamental materials from which it is built."

In music, timbre, also known as tone color or tone quality, is the perceived sound quality of a musical note, sound or tone. Timbre distinguishes different types of sound production, such as choir voices and musical instruments. It also enables listeners to distinguish different instruments in the same category.

Pitch is a perceptual property of sounds that allows them to be ordered on a frequency-related scale; more commonly, pitch is the quality that makes it possible to judge sounds as "higher" and "lower" in the sense associated with musical melodies. Pitch is a major auditory attribute of musical tones, along with duration, loudness, and timbre.
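One widely used frequency-related scale for ordering pitches is the MIDI note number, which assumes 12-tone equal temperament and a reference of A4 = 440 Hz (note 69): p = 69 + 12 log2(f / 440). The sketch below applies that mapping and names the nearest note using the common convention that MIDI note 60 is C4; both the reference frequency and the octave-naming convention are assumptions, and other tunings or conventions exist. Doubling a frequency raises the result by exactly 12, i.e. one octave.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def frequency_to_midi(freq_hz, a4_hz=440.0):
    """Continuous MIDI note number for a frequency (A4 = 69, 12-TET assumed)."""
    return 69.0 + 12.0 * math.log2(freq_hz / a4_hz)

def nearest_note_name(freq_hz, a4_hz=440.0):
    """Round to the nearest equal-tempered note and name it (MIDI 60 -> C4)."""
    midi = round(frequency_to_midi(freq_hz, a4_hz))
    octave = midi // 12 - 1
    return f"{NOTE_NAMES[midi % 12]}{octave}"

print(nearest_note_name(440.0))   # A4
print(nearest_note_name(261.63))  # C4
print(frequency_to_midi(880.0))   # 81.0, one octave (12 steps) above A4
```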

Sheet music is a handwritten or printed form of musical notation that uses musical symbols to indicate the pitches, rhythms, or chords of a song or instrumental piece. Like its analogs, printed books or pamphlets in English, Arabic, or other languages, sheet music typically uses paper as its medium. Since the 1980s, however, musical notation has also been presented on computer screens, and scorewriter programs have been developed that can notate a song or piece electronically and, in some cases, "play back" the notated music using a synthesizer or virtual instruments.

Articles related to music include:

Music information retrieval (MIR) is the interdisciplinary science of retrieving information from music. Those involved in MIR may have a background in academic musicology, psychoacoustics, psychology, signal processing, informatics, machine learning, optical music recognition, computational intelligence or some combination of these.

In music, texture is how the tempo, melodic, and harmonic materials are combined in a musical composition, determining the overall quality of the sound in a piece. Texture is often described in terms of density, or thickness, and range, or width, between the lowest and highest pitches, both in relative terms and, more specifically, according to the number of voices, or parts, and the relationships between them. For example, a thick texture contains many 'layers' of instruments: one layer could be a string section, another a brass section. Thickness also depends on the number and richness of the instruments playing, and ranges from light to thick. A piece's texture may be changed by the number and character of parts playing at once, the timbre of the instruments or voices playing these parts, and the harmony, tempo, and rhythms used. Textures categorized by the number and relationship of parts are analyzed by labeling their primary textural elements: primary melody (PM), secondary melody (SM), parallel supporting melody (PSM), static support (SS), harmonic support (HS), rhythmic support (RS), and harmonic and rhythmic support (HRS).
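The two measurable descriptors above, density and range, can be computed for a single vertical slice of a score. This is a rough sketch under simplifying assumptions: density is approximated as the number of parts sounding at that moment, range as the distance in semitones between the lowest and highest sounding MIDI pitches, and the part names and pitches are hypothetical. It does not attempt the relational analysis (PM, SM, HS, and so on) described in the paragraph.

```python
def texture_profile(voices):
    """Summarize one vertical slice of texture.

    `voices` maps a part name to a MIDI pitch, or None if the part is resting.
    Density = number of sounding parts (a simplification of "thickness").
    Range = semitones between the lowest and highest sounding pitch ("width").
    """
    sounding = [p for p in voices.values() if p is not None]
    if not sounding:
        return {"density": 0, "range_semitones": 0}
    return {"density": len(sounding), "range_semitones": max(sounding) - min(sounding)}

thin = texture_profile({"flute": 79, "cello": None})
thick = texture_profile(
    {"violin I": 76, "violin II": 72, "viola": 67, "cello": 48, "horn": 60}
)
print(thin)   # {'density': 1, 'range_semitones': 0}
print(thick)  # {'density': 5, 'range_semitones': 28}
```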

Accompaniment is the musical part that provides rhythmic and/or harmonic support for the melody or main themes of a song or instrumental piece. Styles and types of accompaniment vary widely across genres. In homophonic music, the main accompaniment approach used in popular music, a clear vocal melody is supported by subordinate chords. In popular and traditional music, the accompaniment parts typically provide the "beat" and outline the chord progression of the song or instrumental piece.

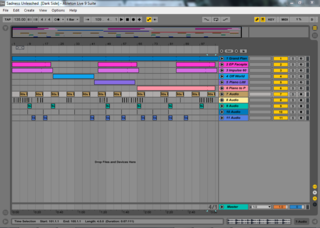

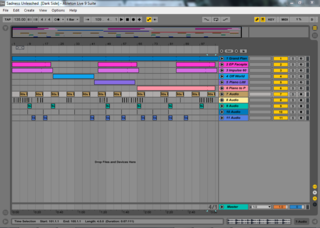

Ableton Live, also known as Live or sometimes colloquially as "Ableton", is a digital audio workstation for macOS and Windows developed by the German company Ableton.

The numbered musical notation is a cipher notation system used in Mainland China, Taiwan, Hong Kong, and to some extent in Japan, Indonesia, Malaysia, Australia, Ireland, the United Kingdom, the United States, and English-speaking Canada. It dates back to a system designed by Pierre Galin, known as the Galin-Paris-Chevé system. It is also known as Ziffernsystem, meaning "number system" or "cipher system" in German.
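In numbered notation the digits 1 to 7 stand for the degrees of the major scale of the current key (movable do), 0 marks a rest, and dots above or below a digit raise or lower it by an octave. The sketch below decodes such symbols into MIDI pitches. The ASCII token format is a hypothetical stand-in for the printed dots (a trailing `'` for a dot above, a trailing `,` for a dot below), and `tonic_midi` plays the role of the key mark such as "1 = C"; rhythm, which the printed system also encodes, is ignored here.

```python
MAJOR_SCALE_OFFSETS = [0, 2, 4, 5, 7, 9, 11]  # semitones above the tonic for degrees 1-7

def decode_numbered(tokens, tonic_midi=60):
    """Decode numbered-notation tokens into MIDI pitches (None = rest).

    Hypothetical token format: a digit 0-7, optionally followed by "'"
    (one per octave up, standing in for a dot above) or "," (one per
    octave down, standing in for a dot below).
    """
    pitches = []
    for tok in tokens:
        degree = int(tok[0])
        if degree == 0:
            pitches.append(None)           # 0 is a rest
            continue
        if not 1 <= degree <= 7:
            raise ValueError(f"not a scale degree: {tok!r}")
        octave_shift = tok.count("'") - tok.count(",")
        pitches.append(tonic_midi + MAJOR_SCALE_OFFSETS[degree - 1] + 12 * octave_shift)
    return pitches

melody = decode_numbered(["1", "2", "3", "0", "5,", "1'"])  # key "1 = C", C4 = 60
print(melody)  # [60, 62, 64, None, 55, 72]
```

Because the digits are relative to the tonic, the same tokens decoded with `tonic_midi=62` ("1 = D") transpose the melody up a whole tone.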

A pitch detection algorithm (PDA) is an algorithm designed to estimate the pitch or fundamental frequency of a quasiperiodic or oscillating signal, usually a digital recording of speech or a musical note or tone. This can be done in the time domain, the frequency domain, or both.
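As an illustration of the time-domain approach, here is a minimal autocorrelation-based pitch estimator. It is one simple member of the family, not a description of any particular published PDA: it multiplies the signal with a delayed copy of itself for each candidate lag in a search range (the `fmin`/`fmax` defaults are assumptions), picks the lag with the largest sum, and converts that period back to a frequency. The sum is deliberately left unnormalized, so shorter lags have more terms; this favours the true period over its multiples and reduces octave errors on clean input. Windowing, peak interpolation, and voicing decisions are omitted.

```python
import math

def autocorrelation_pitch(samples, sample_rate, fmin=50.0, fmax=1000.0):
    """Estimate the fundamental frequency of `samples` in Hz.

    Searches lags between sample_rate / fmax and sample_rate / fmin and
    returns sample_rate / best_lag, so the resolution is limited to whole
    sample periods.
    """
    min_lag = max(1, int(sample_rate / fmax))
    max_lag = min(int(sample_rate / fmin), len(samples) - 1)
    best_lag, best_score = None, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Unnormalized autocorrelation at this lag.
        score = sum(samples[n] * samples[n + lag] for n in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag

sr = 8000
tone = [math.sin(2 * math.pi * 200.0 * n / sr) for n in range(1024)]
print(autocorrelation_pitch(tone, sr))  # 200.0 (period of 40 samples)
```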

Computer audition (CA), or machine listening, is the general field of study of algorithms and systems for audio interpretation by machines. Since the notion of what it means for a machine to "hear" is very broad and somewhat vague, computer audition attempts to bring together several disciplines that originally dealt with specific problems or had a concrete application in mind. The engineer Paris Smaragdis, interviewed in Technology Review, talks about these systems: "software that uses sound to locate people moving through rooms, monitor machinery for impending breakdowns, or activate traffic cameras to record accidents."

Impro-Visor is an educational tool for creating and playing a lead sheet, with a particular orientation toward representing jazz solos.

This is a glossary of jazz and popular music terms that are likely to be encountered in printed popular music songbooks, fake books and vocal scores, big band scores, jazz and rock concert reviews, and album liner notes. This glossary includes terms for musical instruments, playing or singing techniques, amplifiers, effects units, sound reinforcement equipment, and recording gear and techniques that are widely used in jazz and popular music. Most of the terms are in English, but in some cases terms from other languages are encountered.

Harmonic pitch class profiles (HPCP) are a group of features that a computer program extracts from an audio signal, based on the pitch class profile, a descriptor proposed in the context of a chord recognition system. HPCP are an enhanced pitch distribution feature: sequences of feature vectors that, to a certain extent, describe tonality by measuring the relative intensity of each of the 12 pitch classes of the equal-tempered scale within an analysis frame. The twelve pitch spelling attributes are often also referred to as chroma, and HPCP features are closely related to what are called chroma features or chromagrams.
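The core idea of a pitch class profile, folding every spectral peak in a frame onto one of 12 pitch classes regardless of octave, can be sketched as follows. This is a simplified plain pitch class profile, not a full HPCP implementation: it assumes spectral peaks have already been found (the `frame_peaks` values are made up for illustration), assigns each peak's squared magnitude to its nearest equal-tempered pitch class relative to A = 440 Hz, and omits the harmonic weighting and interpolation window that HPCP adds. The result is normalized so the strongest bin is 1.0.

```python
import math

# Bin order: bin 0 is A (the 440 Hz reference), then ascending semitones.
PITCH_CLASSES = ["A", "A#", "B", "C", "C#", "D", "D#", "E", "F", "F#", "G", "G#"]

def pitch_class_profile(peaks, ref_hz=440.0):
    """Fold (frequency_hz, magnitude) peaks from one frame into a 12-bin profile.

    Each peak adds magnitude**2 to its nearest pitch class; octave is ignored.
    No harmonic weighting is applied (a simplification relative to HPCP).
    """
    profile = [0.0] * 12
    for freq, mag in peaks:
        if freq <= 0:
            continue
        semitones = 12.0 * math.log2(freq / ref_hz)
        profile[round(semitones) % 12] += mag ** 2
    peak = max(profile)
    return [v / peak for v in profile] if peak > 0 else profile

# Hypothetical peaks for an A minor chord: A4, C5, E5, plus A5 an octave up.
frame_peaks = [(440.0, 1.0), (523.25, 0.8), (659.26, 0.6), (880.0, 0.3)]
chroma = pitch_class_profile(frame_peaks)
print([name for name, v in zip(PITCH_CLASSES, chroma) if v > 0])  # ['A', 'C', 'E']
```

Note that A4 and A5 land in the same bin, which is what makes the vector describe tonality rather than register.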