Related Research Articles

Rendering or image synthesis is the process of generating a photorealistic or non-photorealistic image from a 2D or 3D model by means of a computer program. The resulting image is referred to as the render. Multiple models can be defined in a scene file containing objects in a strictly defined language or data structure. The scene file contains geometry, viewpoint, texture, lighting, and shading information describing the virtual scene. The data contained in the scene file is then passed to a rendering program to be processed and output to a digital image or raster graphics image file. The term "rendering" is analogous to the concept of an artist's impression of a scene. The term "rendering" is also used to describe the process of calculating effects in a video editing program to produce the final video output.

In digital signal processing, spatial anti-aliasing is a technique for minimizing the distortion artifacts (aliasing) when representing a high-resolution image at a lower resolution. Anti-aliasing is used in digital photography, computer graphics, digital audio, and many other applications.

In computer graphics, photon mapping is a two-pass global illumination rendering algorithm developed by Henrik Wann Jensen between 1995 and 2001 that approximately solves the rendering equation for integrating light radiance at a given point in space. Rays from the light source and rays from the camera are traced independently until some termination criterion is met, then they are connected in a second step to produce a radiance value. The algorithm is used to realistically simulate the interaction of light with different types of objects. Specifically, it is capable of simulating the refraction of light through a transparent substance such as glass or water, diffuse interreflection between illuminated objects, the subsurface scattering of light in translucent materials, and some of the effects caused by particulate matter such as smoke or water vapor. Photon mapping can also be extended to more accurate simulations of light, such as spectral rendering. Progressive photon mapping (PPM) starts with ray tracing and then adds more and more photon mapping passes to provide a progressively more accurate render.

The GeForce 3 series (NV20) is the third generation of Nvidia's GeForce graphics processing units (GPUs). Introduced in February 2001, it advanced the GeForce architecture by adding programmable pixel and vertex shaders, multisample anti-aliasing and improved the overall efficiency of the rendering process.

In computer graphics, mipmaps or pyramids are pre-calculated, optimized sequences of images, each of which is a progressively lower resolution representation of the previous. The height and width of each image, or level, in the mipmap is a factor of two smaller than the previous level. Mipmaps do not have to be square. They are intended to increase rendering speed and reduce aliasing artifacts. A high-resolution mipmap image is used for high-density samples, such as for objects close to the camera; lower-resolution images are used as the object appears farther away. This is a more efficient way of downfiltering (minifying) a texture than sampling all texels in the original texture that would contribute to a screen pixel; it is faster to take a constant number of samples from the appropriately downfiltered textures. Mipmaps are widely used in 3D computer games, flight simulators, other 3D imaging systems for texture filtering, and 2D and 3D GIS software. Their use is known as mipmapping. The letters MIP in the name are an acronym of the Latin phrase multum in parvo, meaning "much in little".

Ray casting is the methodological basis for 3D CAD/CAM solid modeling and image rendering. It is essentially the same as ray tracing for computer graphics where virtual light rays are "cast" or "traced" on their path from the focal point of a camera through each pixel in the camera sensor to determine what is visible along the ray in the 3D scene. The term "Ray Casting" was introduced by Scott Roth while at the General Motors Research Labs from 1978–1980. His paper, "Ray Casting for Modeling Solids", describes modeled solid objects by combining primitive solids, such as blocks and cylinders, using the set operators union (+), intersection (&), and difference (-). The general idea of using these binary operators for solid modeling is largely due to Voelcker and Requicha's geometric modelling group at the University of Rochester. See Solid modeling for a broad overview of solid modeling methods. This figure on the right shows a U-Joint modeled from cylinders and blocks in a binary tree using Roth's ray casting system, circa 1979.

In 3D computer graphics, hidden-surface determination is the process of identifying what surfaces and parts of surfaces can be seen from a particular viewing angle. A hidden-surface determination algorithm is a solution to the visibility problem, which was one of the first major problems in the field of 3D computer graphics. The process of hidden-surface determination is sometimes called hiding, and such an algorithm is sometimes called a hider. When referring to line rendering it is known as hidden-line removal. Hidden-surface determination is necessary to render a scene correctly, so that one may not view features hidden behind the model itself, allowing only the naturally viewable portion of the graphic to be visible.

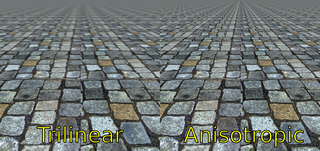

In 3D computer graphics, anisotropic filtering is a method of enhancing the image quality of textures on surfaces of computer graphics that are at oblique viewing angles with respect to the camera where the projection of the texture appears to be non-orthogonal.

In computer graphics, texture filtering or texture smoothing is the method used to determine the texture color for a texture mapped pixel, using the colors of nearby texels. There are two main categories of texture filtering, magnification filtering and minification filtering. Depending on the situation texture filtering is either a type of reconstruction filter where sparse data is interpolated to fill gaps (magnification), or a type of anti-aliasing (AA), where texture samples exist at a higher frequency than required for the sample frequency needed for texture fill (minification). Put simply, filtering describes how a texture is applied at many different shapes, size, angles and scales. Depending on the chosen filter algorithm the result will show varying degrees of blurriness, detail, spatial aliasing, temporal aliasing and blocking. Depending on the circumstances filtering can be performed in software or in hardware for real time or GPU accelerated rendering or in a mixture of both. For most common interactive graphical applications modern texture filtering is performed by dedicated hardware which optimizes memory access through memory cacheing and pre-fetch and implements a selection of algorithms available to the user and developer.

Reyes rendering is a computer software architecture used in 3D computer graphics to render photo-realistic images. It was developed in the mid-1980s by Loren Carpenter and Robert L. Cook at Lucasfilm's Computer Graphics Research Group, which is now Pixar. It was first used in 1982 to render images for the Genesis effect sequence in the movie Star Trek II: The Wrath of Khan. Pixar's RenderMan was one implementation of the Reyes algorithm, until its removal in 2016. According to the original paper describing the algorithm, the Reyes image rendering system is "An architecture for fast high-quality rendering of complex images." Reyes was proposed as a collection of algorithms and data processing systems. However, the terms "algorithm" and "architecture" have come to be used synonymously in this context and are used interchangeably in this article.

Beam tracing is an algorithm to simulate wave propagation. It was developed in the context of computer graphics to render 3D scenes, but it has been also used in other similar areas such as acoustics and electromagnetism simulations.

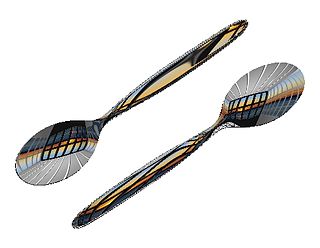

In computer graphics, environment mapping, or reflection mapping, is an efficient image-based lighting technique for approximating the appearance of a reflective surface by means of a precomputed texture. The texture is used to store the image of the distant environment surrounding the rendered object.

Path tracing is a computer graphics Monte Carlo method of rendering images of three-dimensional scenes such that the global illumination is faithful to reality. Fundamentally, the algorithm is integrating over all the illuminance arriving to a single point on the surface of an object. This illuminance is then reduced by a surface reflectance function (BRDF) to determine how much of it will go towards the viewpoint camera. This integration procedure is repeated for every pixel in the output image. When combined with physically accurate models of surfaces, accurate models of real light sources, and optically correct cameras, path tracing can produce still images that are indistinguishable from photographs.

The Xenos is a custom graphics processing unit (GPU) designed by ATI, used in the Xbox 360 video game console developed and produced for Microsoft. Developed under the codename "C1", it is in many ways related to the R520 architecture and therefore very similar to an ATI Radeon X1800 XT series of PC graphics cards as far as features and performance are concerned. However, the Xenos introduced new design ideas that were later adopted in the TeraScale microarchitecture, such as the unified shader architecture. The package contains two separate dies, the GPU and an eDRAM, featuring a total of 337 million transistors.

Radiance is a suite of tools for performing lighting simulation originally written by Greg Ward. It includes a renderer as well as many other tools for measuring the simulated light levels. It uses ray tracing to perform all lighting calculations, accelerated by the use of an octree data structure. It pioneered the concept of high-dynamic-range imaging, where light levels are (theoretically) open-ended values instead of a decimal proportion of a maximum or integer fraction of a maximum. It also implements global illumination using the Monte Carlo method to sample light falling on a point.

In computer graphics, a texture mapping unit (TMU) is a component in modern graphics processing units (GPUs). They are able to rotate, resize, and distort a bitmap image to be placed onto an arbitrary plane of a given 3D model as a texture, in a process called texture mapping. In modern graphics cards it is implemented as a discrete stage in a graphics pipeline, whereas when first introduced it was implemented as a separate processor, e.g. as seen on the Voodoo2 graphics card.

Multisample anti-aliasing (MSAA) is a type of spatial anti-aliasing, a technique used in computer graphics to remove jaggies.

Computer graphics lighting is the collection of techniques used to simulate light in computer graphics scenes. While lighting techniques offer flexibility in the level of detail and functionality available, they also operate at different levels of computational demand and complexity. Graphics artists can choose from a variety of light sources, models, shading techniques, and effects to suit the needs of each application.

The study of image formation encompasses the radiometric and geometric processes by which 2D images of 3D objects are formed. In the case of digital images, the image formation process also includes analog to digital conversion and sampling.

This is a glossary of terms relating to computer graphics.

References

- ↑ Amanatides, John (1984). "Ray tracing with cones". ACM SIGGRAPH Computer Graphics. 18 (3): 129. CiteSeerX 10.1.1.129.582 . doi:10.1145/964965.808589.

- ↑ Matt Pharr, Wenzel Jakob, Greg Humphreys. "Physically based rendering: From theory to implementation - 7.1 Sampling Theory". https://www.pbr-book.org/3ed-2018/Sampling_and_Reconstruction/Sampling_Theory

- ↑ Matt Pettineo. "Experimenting with Reconstruction Filters for MSAA Resolve". https://therealmjp.github.io/posts/msaa-resolve-filters/

- ↑ Homan Igehy. "Tracing Ray Differentials". http://www.graphics.stanford.edu/papers/trd/