Rendering or image synthesis is the process of generating a photorealistic or non-photorealistic image from a 2D or 3D model by means of a computer program. The resulting image is referred to as the render. Multiple models can be defined in a scene file containing objects in a strictly defined language or data structure. The scene file contains geometry, viewpoint, texture, lighting, and shading information describing the virtual scene. The data contained in the scene file is then passed to a rendering program to be processed and output to a digital image or raster graphics image file. The term "rendering" is analogous to the concept of an artist's impression of a scene. The term "rendering" is also used to describe the process of calculating effects in a video editing program to produce the final video output.

Scanline rendering is an algorithm for visible surface determination, in 3D computer graphics, that works on a row-by-row basis rather than a polygon-by-polygon or pixel-by-pixel basis. All of the polygons to be rendered are first sorted by the top y coordinate at which they first appear, then each row or scan line of the image is computed using the intersection of a scanline with the polygons on the front of the sorted list, while the sorted list is updated to discard no-longer-visible polygons as the active scan line is advanced down the picture.

Texture mapping is a method for mapping a texture on a computer-generated graphic. Texture here can be high frequency detail, surface texture, or color.

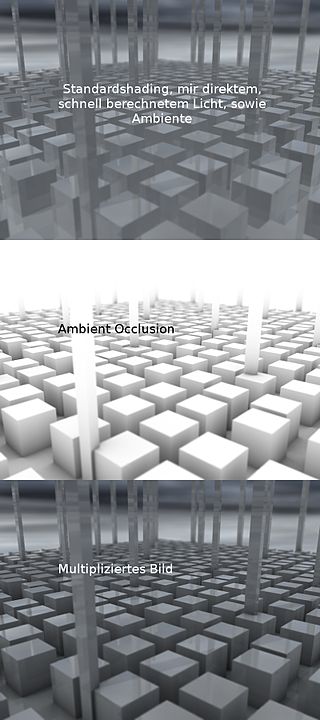

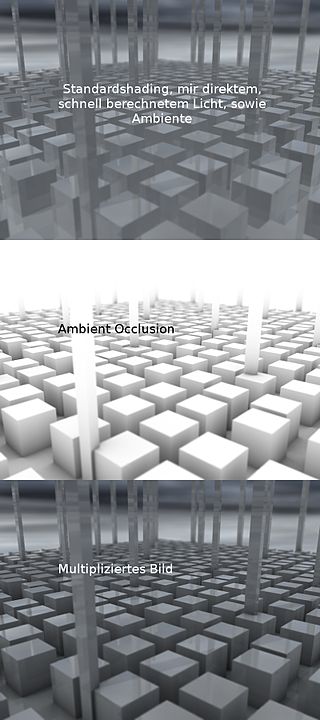

Shading refers to the depiction of depth perception in 3D models or illustrations by varying the level of darkness. Shading tries to approximate local behavior of light on the object's surface and is not to be confused with techniques of adding shadows, such as shadow mapping or shadow volumes, which fall under global behavior of light.

The GeForce 3 series (NV20) is the third generation of Nvidia's GeForce graphics processing units (GPUs). Introduced in February 2001, it advanced the GeForce architecture by adding programmable pixel and vertex shaders, multisample anti-aliasing and improved the overall efficiency of the rendering process.

In computer graphics, a shader is a computer program that calculates the appropriate levels of light, darkness, and color during the rendering of a 3D scene—a process known as shading. Shaders have evolved to perform a variety of specialized functions in computer graphics special effects and video post-processing, as well as general-purpose computing on graphics processing units.

In 3D computer graphics, modeling, and animation, ambient occlusion is a shading and rendering technique used to calculate how exposed each point in a scene is to ambient lighting. For example, the interior of a tube is typically more occluded than the exposed outer surfaces, and becomes darker the deeper inside the tube one goes.

A shading language is a graphics programming language adapted to programming shader effects. Shading languages usually consist of special data types like "vector", "matrix", "color" and "normal".

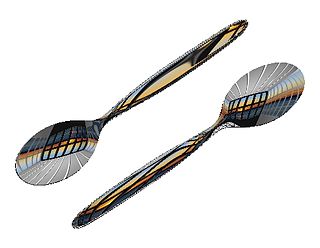

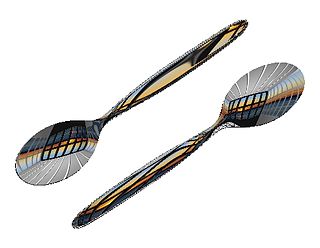

In computer graphics, environment mapping, or reflection mapping, is an efficient image-based lighting technique for approximating the appearance of a reflective surface by means of a precomputed texture. The texture is used to store the image of the distant environment surrounding the rendered object.

In computer graphics, per-pixel lighting refers to any technique for lighting an image or scene that calculates illumination for each pixel on a rendered image. This is in contrast to other popular methods of lighting such as vertex lighting, which calculates illumination at each vertex of a 3D model and then interpolates the resulting values over the model's faces to calculate the final per-pixel color values.

Shadow mapping or shadowing projection is a process by which shadows are added to 3D computer graphics. This concept was introduced by Lance Williams in 1978, in a paper entitled "Casting curved shadows on curved surfaces." Since then, it has been used both in pre-rendered and realtime scenes in many console and PC games.

Multisample anti-aliasing (MSAA) is a type of spatial anti-aliasing, a technique used in computer graphics to remove jaggies.

Computer graphics lighting is the collection of techniques used to simulate light in computer graphics scenes. While lighting techniques offer flexibility in the level of detail and functionality available, they also operate at different levels of computational demand and complexity. Graphics artists can choose from a variety of light sources, models, shading techniques, and effects to suit the needs of each application.

Tiled rendering is the process of subdividing a computer graphics image by a regular grid in optical space and rendering each section of the grid, or tile, separately. The advantage to this design is that the amount of memory and bandwidth is reduced compared to immediate mode rendering systems that draw the entire frame at once. This has made tile rendering systems particularly common for low-power handheld device use. Tiled rendering is sometimes known as a "sort middle" architecture, because it performs the sorting of the geometry in the middle of the graphics pipeline instead of near the end.

Order-independent transparency (OIT) is a class of techniques in rasterisational computer graphics for rendering transparency in a 3D scene, which do not require rendering geometry in sorted order for alpha compositing.

PICA200 is a graphics processing unit (GPU) designed by Digital Media Professionals Inc. (DMP), a Japanese GPU design startup company, for use in embedded devices such as vehicle systems, mobile phones, cameras, and game consoles. The PICA200 is an IP Core which can be licensed to other companies to incorporate into their SOCs. It was most notably licensed for use in the Nintendo 3DS.

Fast approximate anti-aliasing (FXAA) is a screen-space anti-aliasing algorithm created by Timothy Lottes at Nvidia.

Luminous Engine, originally called Luminous Studio, is a multi-platform game engine developed and used internally by Square Enix and later on by Luminous Productions. The engine was developed for and targeted at eighth-generation hardware and DirectX 11-compatible platforms, such as Xbox One, the PlayStation 4, and versions of Microsoft Windows. It was conceived during the development of Final Fantasy XIII-2 to be compatible with next generation consoles that their existing platform, Crystal Tools, could not handle.

This is a glossary of terms relating to computer graphics.

Deep learning super sampling (DLSS) is a family of real-time deep learning image enhancement and upscaling technologies developed by Nvidia that are exclusive to its RTX line of graphics cards, and available in a number of video games. The goal of these technologies is to allow the majority of the graphics pipeline to run at a lower resolution for increased performance, and then infer a higher resolution image from this that approximates the same level of detail as if the image had been rendered at this higher resolution. This allows for higher graphical settings and/or frame rates for a given output resolution, depending on user preference.