Related Research Articles

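Gamma correction or gamma is a nonlinear operation used to encode and decode luminance or tristimulus values in video or still image systems. Gamma correction is, in the simplest cases, defined by the power-law expression $V_\text{out} = A V_\text{in}^{\gamma}$, in which the non-negative input value $V_\text{in}$ is raised to the power $\gamma$ and multiplied by the constant $A$ to give the output value $V_\text{out}$.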
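As a minimal sketch of the power-law relationship, the Python snippet below computes an output value as a constant times the input raised to the power gamma. The function names and the default gamma of 2.2 are illustrative assumptions and are not taken from any particular standard; real systems such as sRGB add a linear segment near black that is not modeled here.

```python
def gamma_transform(v_in, gamma, a=1.0):
    """Apply the simple power law v_out = a * v_in ** gamma.

    v_in is assumed to be a non-negative, normalized value (typically 0..1).
    """
    if v_in < 0.0:
        raise ValueError("v_in must be non-negative")
    return a * v_in ** gamma


def gamma_encode(linear, gamma=2.2):
    """Encode a linear value with exponent 1/gamma (gamma=2.2 is an assumed default)."""
    return gamma_transform(linear, 1.0 / gamma)


def gamma_decode(encoded, gamma=2.2):
    """Decode an encoded value back to linear with exponent gamma."""
    return gamma_transform(encoded, gamma)
```

Encoding with an exponent below 1 lifts dark and mid-tone values (for instance, a linear value of 0.18 maps to an encoded value above 0.18), and decoding with the reciprocal exponent approximately restores the original value.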

In photography and videography, multi-exposure HDR capture is a technique that creates high dynamic range (HDR) images by taking and combining multiple exposures of the same subject matter at different exposure levels. Combining multiple images in this way results in an image with a greater dynamic range than what would be possible by taking one single image. The technique can also be used to capture video by taking and combining multiple exposures for each frame of the video. The term "HDR" is used frequently to refer to the process of creating HDR images from multiple exposures. Many smartphones have an automated HDR feature that relies on computational imaging techniques to capture and combine multiple exposures.

The CIELAB color space, also referred to as L*a*b*, is a color space defined by the International Commission on Illumination in 1976. It expresses color as three values: L* for perceptual lightness and a* and b* for the four unique colors of human vision: red, green, blue and yellow. CIELAB was intended as a perceptually uniform space, where a given numerical change corresponds to a similar perceived change in color. While the LAB space is not truly perceptually uniform, it nevertheless is useful in industry for detecting small differences in color.

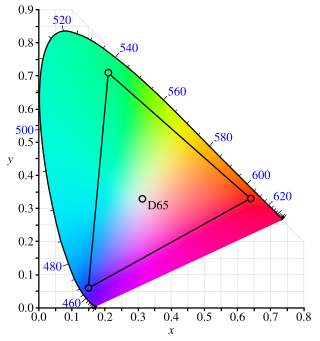

The Adobe RGB (1998) color space or opRGB is a color space developed by Adobe Inc. in 1998. It was designed to encompass most of the colors achievable on CMYK color printers while using RGB primary colors on a device such as a computer display. The Adobe RGB (1998) color space encompasses roughly 50% of the visible colors specified by the CIELAB color space, improving upon the gamut of the sRGB color space primarily in cyan-green hues. It was subsequently standardized by the IEC as IEC 61966-2-5:1999 under the name opRGB and is used in HDMI.

The ProPhoto RGB color space, also known as ROMM RGB, is an output-referred RGB color space developed by Kodak. It offers an especially large gamut designed with photographic output in mind. The ProPhoto RGB color space encompasses over 90% of possible surface colors in the CIE L*a*b* color space, and 100% of likely occurring real-world surface colors documented by Michael Pointer in 1980, making ProPhoto even larger than the Wide-gamut RGB color space. The ProPhoto RGB primaries were also chosen to minimize hue rotations associated with non-linear tone scale operations. One downside of this color space is that approximately 13% of the representable colors are imaginary colors, which do not correspond to any visible color.

A camera raw image file contains unprocessed or minimally processed data from the image sensor of either a digital camera, a motion picture film scanner, or other image scanner. Raw files are so named because they are not yet processed, and contain large amounts of potentially redundant data. Normally, the image is processed by a raw converter, in a wide-gamut internal color space where precise adjustments can be made before conversion to a viewable file format such as JPEG or PNG for storage, printing, or further manipulation. There are dozens of raw formats in use by different manufacturers of digital image capture equipment.

High dynamic range (HDR), also known as wide dynamic range, extended dynamic range, or expanded dynamic range, is a dynamic range higher than usual.

xvYCC or extended-gamut YCbCr is a color space that can be used in the video electronics of television sets to support a gamut 1.8 times as large as that of the sRGB color space. xvYCC was proposed by Sony, specified by the IEC in October 2005 and published in January 2006 as IEC 61966-2-4. xvYCC extends the ITU-R BT.709 tone curve by defining over-ranged values. xvYCC-encoded video retains the same color primaries and white point as BT.709, and uses either a BT.601 or BT.709 RGB-to-YCC conversion matrix and encoding. This allows it to travel through existing digital limited range YCC data paths, and any colors within the normal gamut will be compatible. It works by allowing negative RGB inputs and expanding the output chroma. These are used to encode more saturated colors by using a greater part of the RGB values that can be encoded in the YCbCr signal compared with those used in Broadcast Safe Level. The extra-gamut colors can then be displayed by a device whose underlying technology is not limited by the standard primaries.

RGBE or Radiance HDR is an image format invented by Gregory Ward Larson for the Radiance rendering system. It stores each pixel as one byte for each of the red, green, and blue values plus a one-byte shared exponent, for a total of four bytes per pixel.
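The shared-exponent idea can be sketched in a few lines of Python. This is an illustrative conversion for a single pixel under stated assumptions, not a reader or writer for the file format: the function names are hypothetical, inputs are assumed to be non-negative floats within the representable exponent range, mantissas are truncated rather than rounded, and the exponent byte uses a bias of 128 (one common convention).

```python
import math


def float_to_rgbe(r, g, b):
    """Pack three non-negative floats into four byte values (R, G, B, E)."""
    v = max(r, g, b)
    if v < 1e-32:
        # Too dark to represent: all-zero pixel.
        return (0, 0, 0, 0)
    # frexp gives v = mantissa * 2**exponent with 0.5 <= mantissa < 1.
    mantissa, exponent = math.frexp(v)
    # Scale so that the largest channel lands in the range 128..255.
    scale = mantissa * 256.0 / v
    return (int(r * scale), int(g * scale), int(b * scale), exponent + 128)


def rgbe_to_float(r, g, b, e):
    """Unpack four byte values (R, G, B, E) into three floats."""
    if e == 0:
        return (0.0, 0.0, 0.0)
    # Undo the exponent bias (128) and the 8-bit mantissa scaling.
    f = math.ldexp(1.0, e - (128 + 8))
    return (r * f, g * f, b * f)
```

Because all three channels share the exponent of the brightest one, a channel much darker than the brightest channel in the same pixel keeps fewer significant bits.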

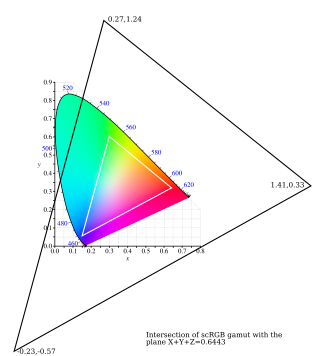

scRGB is a wide color gamut RGB color space created by Microsoft and HP that uses the same color primaries and white/black points as the sRGB color space but allows coordinates below zero and greater than one. The full range is −0.5 through just less than +7.5.
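The stated range is what a 16-bit unsigned code yields under a linear mapping with a scale of 8192 and an offset of 4096. The sketch below assumes that mapping; the constants and function names are assumptions for illustration, chosen to be consistent with the range given above, and should be checked against the scRGB specification before being relied on.

```python
SCALE = 8192    # assumed code units per 1.0 of linear value
OFFSET = 4096   # assumed code that represents a linear value of 0.0


def scrgb16_decode(code):
    """Map a 16-bit code (0..65535) to a linear scRGB component."""
    if not 0 <= code <= 0xFFFF:
        raise ValueError("code must fit in 16 unsigned bits")
    return (code - OFFSET) / SCALE


def scrgb16_encode(value):
    """Map a linear scRGB component to a 16-bit code, clamping out-of-range input."""
    code = round(value * SCALE) + OFFSET
    return min(max(code, 0), 0xFFFF)
```

Under these assumptions code 0 decodes to −0.5, code 4096 to 0.0, code 12288 to 1.0, and the largest code, 65535, to a value just below 7.5.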

ITU-R Recommendation BT.2020, more commonly known by the abbreviations Rec. 2020 or BT.2020, defines various aspects of ultra-high-definition television (UHDTV) with standard dynamic range (SDR) and wide color gamut (WCG), including picture resolutions, frame rates with progressive scan, bit depths, color primaries, RGB and luma-chroma color representations, chroma subsamplings, and an opto-electronic transfer function. The first version of Rec. 2020 was posted on the International Telecommunication Union (ITU) website on August 23, 2012, and two further editions have been published since then.

Luminance HDR, formerly Qtpfsgui, is graphics software used for the creation and manipulation of high-dynamic-range images. Released under the terms of the GPL, it is available for Linux, Windows and Mac OS X. Luminance HDR supports several High Dynamic Range (HDR) as well as Low Dynamic Range (LDR) file formats.

The Academy Color Encoding System (ACES) is a color image encoding system created under the auspices of the Academy of Motion Picture Arts and Sciences. ACES is characterised by a color accurate workflow, with "seamless interchange of high quality motion picture images regardless of source".

Standard-dynamic-range video is a video technology which represents light intensity based on the brightness, contrast and color characteristics and limitations of a cathode ray tube (CRT) display. SDR video is able to represent a video or picture's colors with a maximum luminance around 100 cd/m², a black level around 0.1 cd/m² and Rec. 709 / sRGB color gamut. It uses the gamma curve as its electro-optical transfer function.

ICTCP, ICtCp, or ITP is a color representation format specified in the Rec. ITU-R BT.2100 standard that is used as a part of the color image pipeline in video and digital photography systems for high dynamic range (HDR) and wide color gamut (WCG) imagery. It was developed by Dolby Laboratories from the IPT color space by Ebner and Fairchild. The format is derived from an associated RGB color space by a coordinate transformation that includes two matrix transformations and an intermediate nonlinear transfer function that is informally known as gamma pre-correction. The transformation produces three signals called I, CT, and CP. The ICTCP transformation can be used with RGB signals derived from either the perceptual quantizer (PQ) or hybrid log–gamma (HLG) nonlinearity functions, but is most commonly associated with the PQ function.

ITU-R Recommendation BT.2100, more commonly known by the abbreviations Rec. 2100 or BT.2100, introduced high-dynamic-range television (HDR-TV) by recommending the use of the perceptual quantizer (PQ) or hybrid log–gamma (HLG) transfer functions instead of the traditional "gamma" previously used for SDR-TV.

High-dynamic-range television (HDR-TV) is a technology that uses high dynamic range (HDR) to improve the quality of display signals. It is contrasted with the retroactively-named standard dynamic range (SDR). HDR changes the way the luminance and colors of videos and images are represented in the signal, and allows brighter and more detailed highlight representation, darker and more detailed shadows, and more intense colors.

JPEG XT is an image compression standard which specifies backward-compatible extensions of the base JPEG standard.

Transfer functions used in pictures and videos describe the relationship between the electrical signal, scene light, and displayed light.

References

- LibTIFF Homepage. Archived 2004-09-16 at the Wayback Machine.

- High Dynamic Range Imaging, by Erik Reinhard, Greg Ward, Sumanta Pattanaik, and Paul Debevec.

- Greg Ward Larson on LogLuv Encoding for TIFF Images.

- LogLuv encoding for full-gamut, high-dynamic range images. Appears to be the same paper as: Ward Larson, Gregory (1998). "LogLuv Encoding for Full-Gamut, High-Dynamic Range Images". Journal of Graphics Tools. 3 (1): 15–31. doi:10.1080/10867651.1998.10487485.

- A comparison of different HDR image encoding formats.