In information theory, the entropy of a random variable is the average level of "information", "surprise", or "uncertainty" inherent to the variable's possible outcomes. Given a discrete random variable , which takes values in the alphabet and is distributed according to :

The principle of maximum entropy states that the probability distribution which best represents the current state of knowledge about a system is the one with largest entropy, in the context of precisely stated prior data.

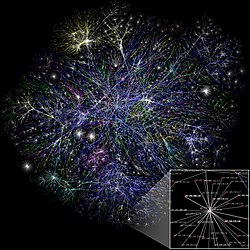

In mathematics, random graph is the general term to refer to probability distributions over graphs. Random graphs may be described simply by a probability distribution, or by a random process which generates them. The theory of random graphs lies at the intersection between graph theory and probability theory. From a mathematical perspective, random graphs are used to answer questions about the properties of typical graphs. Its practical applications are found in all areas in which complex networks need to be modeled – many random graph models are thus known, mirroring the diverse types of complex networks encountered in different areas. In a mathematical context, random graph refers almost exclusively to the Erdős–Rényi random graph model. In other contexts, any graph model may be referred to as a random graph.

Quantum statistical mechanics is statistical mechanics applied to quantum mechanical systems. In quantum mechanics a statistical ensemble is described by a density operator S, which is a non-negative, self-adjoint, trace-class operator of trace 1 on the Hilbert space H describing the quantum system. This can be shown under various mathematical formalisms for quantum mechanics. One such formalism is provided by quantum logic.

In statistical mechanics, the microcanonical ensemble is a statistical ensemble that represents the possible states of a mechanical system whose total energy is exactly specified. The system is assumed to be isolated in the sense that it cannot exchange energy or particles with its environment, so that the energy of the system does not change with time.

In statistical mechanics, a canonical ensemble is the statistical ensemble that represents the possible states of a mechanical system in thermal equilibrium with a heat bath at a fixed temperature. The system can exchange energy with the heat bath, so that the states of the system will differ in total energy.

In information theory, the Rényi entropy is a quantity that generalizes various notions of entropy, including Hartley entropy, Shannon entropy, collision entropy, and min-entropy. The Rényi entropy is named after Alfréd Rényi, who looked for the most general way to quantify information while preserving additivity for independent events. In the context of fractal dimension estimation, the Rényi entropy forms the basis of the concept of generalized dimensions.

In statistics and information theory, a maximum entropy probability distribution has entropy that is at least as great as that of all other members of a specified class of probability distributions. According to the principle of maximum entropy, if nothing is known about a distribution except that it belongs to a certain class, then the distribution with the largest entropy should be chosen as the least-informative default. The motivation is twofold: first, maximizing entropy minimizes the amount of prior information built into the distribution; second, many physical systems tend to move towards maximal entropy configurations over time.

In the study of graphs and networks, the degree of a node in a network is the number of connections it has to other nodes and the degree distribution is the probability distribution of these degrees over the whole network.

In network theory, a giant component is a connected component of a given random graph that contains a significant fraction of the entire graph's vertices.

In information theory, information dimension is an information measure for random vectors in Euclidean space, based on the normalized entropy of finely quantized versions of the random vectors. This concept was first introduced by Alfréd Rényi in 1959.

In statistical mechanics, thermal fluctuations are random deviations of an atomic system from its average state, that occur in a system at equilibrium. All thermal fluctuations become larger and more frequent as the temperature increases, and likewise they decrease as temperature approaches absolute zero.

In machine learning, the kernel embedding of distributions comprises a class of nonparametric methods in which a probability distribution is represented as an element of a reproducing kernel Hilbert space (RKHS). A generalization of the individual data-point feature mapping done in classical kernel methods, the embedding of distributions into infinite-dimensional feature spaces can preserve all of the statistical features of arbitrary distributions, while allowing one to compare and manipulate distributions using Hilbert space operations such as inner products, distances, projections, linear transformations, and spectral analysis. This learning framework is very general and can be applied to distributions over any space on which a sensible kernel function may be defined. For example, various kernels have been proposed for learning from data which are: vectors in , discrete classes/categories, strings, graphs/networks, images, time series, manifolds, dynamical systems, and other structured objects. The theory behind kernel embeddings of distributions has been primarily developed by Alex Smola, Le Song , Arthur Gretton, and Bernhard Schölkopf. A review of recent works on kernel embedding of distributions can be found in.

Maximal entropy random walk (MERW) is a popular type of biased random walk on a graph, in which transition probabilities are chosen accordingly to the principle of maximum entropy, which says that the probability distribution which best represents the current state of knowledge is the one with largest entropy. While standard random walk chooses for every vertex uniform probability distribution among its outgoing edges, locally maximizing entropy rate, MERW maximizes it globally by assuming uniform probability distribution among all paths in a given graph.

In network science, the configuration model is a method for generating random networks from a given degree sequence. It is widely used as a reference model for real-life social networks, because it allows the modeler to incorporate arbitrary degree distributions.

In applied mathematics, the soft configuration model (SCM) is a random graph model subject to the principle of maximum entropy under constraints on the expectation of the degree sequence of sampled graphs. Whereas the configuration model (CM) uniformly samples random graphs of a specific degree sequence, the SCM only retains the specified degree sequence on average over all network realizations; in this sense the SCM has very relaxed constraints relative to those of the CM ("soft" rather than "sharp" constraints). The SCM for graphs of size has a nonzero probability of sampling any graph of size , whereas the CM is restricted to only graphs having precisely the prescribed connectivity structure.

A set of networks that satisfies given structural characteristics can be treated as a network ensemble. Brought up by Ginestra Bianconi in 2007, the entropy of a network ensemble measures the level of the order or uncertainty of a network ensemble.

In network science, the network entropy is a disorder measure derived from information theory to describe the level of randomness and the amount of information encoded in a graph. It is a relevant metric to quantitatively characterize real complex networks and can also be used to quantify network complexity

A Stein discrepancy is a statistical divergence between two probability measures that is rooted in Stein's method. It was first formulated as a tool to assess the quality of Markov chain Monte Carlo samplers, but has since been used in diverse settings in statistics, machine learning and computer science.

In computational and mathematical biology, a biological lattice-gas cellular automaton (BIO-LGCA) is a discrete model for moving and interacting biological agents, a type of cellular automaton. The BIO-LGCA is based on the lattice-gas cellular automaton (LGCA) model used in fluid dynamics. A BIO-LGCA model describes cells and other motile biological agents as point particles moving on a discrete lattice, thereby interacting with nearby particles. Contrary to classic cellular automaton models, particles in BIO-LGCA are defined by their position and velocity. This allows to model and analyze active fluids and collective migration mediated primarily through changes in momentum, rather than density. BIO-LGCA applications include cancer invasion and cancer progression.