In numerical analysis and scientific computing, a sparse matrix or sparse array is a matrix in which most of the elements are zero. There is no strict definition regarding the proportion of zero-value elements for a matrix to qualify as sparse but a common criterion is that the number of non-zero elements is roughly equal to the number of rows or columns. By contrast, if most of the elements are non-zero, the matrix is considered dense. The number of zero-valued elements divided by the total number of elements is sometimes referred to as the sparsity of the matrix.

In computer science, array programming refers to solutions that allow the application of operations to an entire set of values at once. Such solutions are commonly used in scientific and engineering settings.

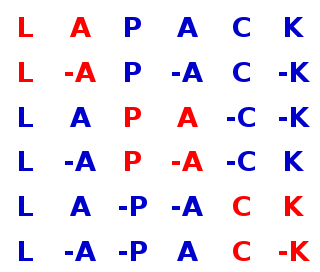

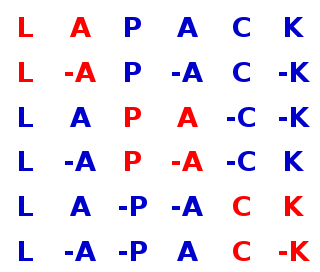

LAPACK is a standard software library for numerical linear algebra. It provides routines for solving systems of linear equations and linear least squares, eigenvalue problems, and singular value decomposition. It also includes routines to implement the associated matrix factorizations such as LU, QR, Cholesky and Schur decomposition. LAPACK was originally written in FORTRAN 77, but moved to Fortran 90 in version 3.2 (2008). The routines handle both real and complex matrices in both single and double precision. LAPACK relies on an underlying BLAS implementation to provide efficient and portable computational building blocks for its routines.

Basic Linear Algebra Subprograms (BLAS) is a specification that prescribes a set of low-level routines for performing common linear algebra operations such as vector addition, scalar multiplication, dot products, linear combinations, and matrix multiplication. They are the de facto standard low-level routines for linear algebra libraries; the routines have bindings for both C and Fortran. Although the BLAS specification is general, BLAS implementations are often optimized for speed on a particular machine, so using them can bring substantial performance benefits. BLAS implementations will take advantage of special floating point hardware such as vector registers or SIMD instructions.

dnAnalytics is an open-source numerical library for .NET written in C# and F#. It features functionality similar to BLAS and LAPACK.

AMD Core Math Library (ACML) is an end-of-life software development library released by AMD, replaced by many open source libraries, including AMD libm 4.0. This library provides mathematical routines optimized for AMD processors.

Kazushige Gotō is a software engineer specializing in high performance, hand-written, machine code.

Automatically Tuned Linear Algebra Software (ATLAS) is a software library for linear algebra. It provides a mature open source implementation of BLAS APIs for C and Fortran77.

Trilinos is a collection of open-source software libraries, called packages, intended to be used as building blocks for the development of scientific applications. The word "Trilinos" is Greek and conveys the idea of "a string of pearls", suggesting a number of software packages linked together by a common infrastructure. Trilinos was developed at Sandia National Laboratories from a core group of existing algorithms and utilizes the functionality of software interfaces such as the BLAS, LAPACK, and MPI . In 2004, Trilinos received an R&D100 Award.

OpenCL is a framework for writing programs that execute across heterogeneous platforms consisting of central processing units (CPUs), graphics processing units (GPUs), digital signal processors (DSPs), field-programmable gate arrays (FPGAs) and other processors or hardware accelerators. OpenCL specifies programming languages for programming these devices and application programming interfaces (APIs) to control the platform and execute programs on the compute devices. OpenCL provides a standard interface for parallel computing using task- and data-based parallelism.

Armadillo is a linear algebra software library for the C++ programming language. It aims to provide efficient and streamlined base calculations, while at the same time having a straightforward and easy-to-use interface. Its intended target users are scientists and engineers.

Intel oneAPI Math Kernel Library is a library of optimized math routines for science, engineering, and financial applications. Core math functions include BLAS, LAPACK, ScaLAPACK, sparse solvers, fast Fourier transforms, and vector math.

MADNESS is a high-level software environment for the solution of integral and differential equations in many dimensions using adaptive and fast harmonic analysis methods with guaranteed precision based on multiresolution analysis and separated representations .

IT++ is a C++ library of classes and functions for linear algebra, numerical optimization, signal processing, communications, and statistics. It is being developed by researchers in these areas and is widely used by researchers, both in the communications industry and universities. The IT++ library originates from the former Department of Information Theory at the Chalmers University of Technology, Gothenburg, Sweden.

In scientific computing, GotoBLAS and GotoBLAS2 are open source implementations of the BLAS API with many hand-crafted optimizations for specific processor types. GotoBLAS was developed by Kazushige Goto at the Texas Advanced Computing Center. As of 2003, it was used in seven of the world's ten fastest supercomputers.

jblas is a linear algebra library, created by Mikio Braun, for the Java programming language built upon BLAS and LAPACK. Unlike most other Java linear algebra libraries, jblas is designed to be used with native code through the Java Native Interface (JNI) and comes with precompiled binaries. When used on one of the targeted architectures, it will automatically select the correct binary to use and load it. This allows it to be used out of the box and avoid a potentially tedious compilation process. jblas provides an easier to use high level API on top of the archaic API provided by BLAS and LAPACK, removing much of the tediousness.

In scientific computing, BLIS is an open-source framework for implementing a superset of BLAS functionality for specific processor types that was recently awarded the J. H. Wilkinson Prize for Numerical Software. It exposes that functionality through two traditional Application Programming Interfaces (APIs): the BLAS interface and the CBLAS interface. BLIS also includes two APIs native to the framework: a typed (BLAS-like) API and an object API. These native interfaces provide access to BLAS-like functionality that is not supported by, but closely related to, operations found in the BLAS . The framework is developed and supported by the Science of High-Performance Computing (SHPC) group of the Oden Institute for Computational Engineering and Sciences at The University of Texas at Austin and the Matthews Research Group at Southern Methodist University.

GraphBLAS is an API specification that defines standard building blocks for graph algorithms in the language of linear algebra. GraphBLAS is built upon the notion that a sparse matrix can be used to represent graphs as either an adjacency matrix or an incidence matrix. The GraphBLAS specification describes how graph operations can be efficiently implemented via linear algebraic methods over different semirings.