Rendering or image synthesis is the process of generating a photorealistic or non-photorealistic image from a 2D or 3D model by means of a computer program. The resulting image is referred to as the render. Multiple models can be defined in a scene file containing objects in a strictly defined language or data structure. The scene file contains geometry, viewpoint, texture, lighting, and shading information describing the virtual scene. The data contained in the scene file is then passed to a rendering program to be processed and output to a digital image or raster graphics image file. The term "rendering" is analogous to the concept of an artist's impression of a scene. The term "rendering" is also used to describe the process of calculating effects in a video editing program to produce the final video output.

Shadow volume is a technique used in 3D computer graphics to add shadows to a rendered scene. They were first proposed by Frank Crow in 1977 as the geometry describing the 3D shape of the region occluded from a light source. A shadow volume divides the virtual world in two: areas that are in shadow and areas that are not.

A sunbeam, in meteorological optics, is a beam of sunlight that appears to radiate from the position of the Sun. Shining through openings in clouds or between other objects such as mountains and buildings, these beams of particle-scattered sunlight are essentially parallel shafts separated by darker shadowed volumes. Their apparent convergence in the sky is a visual illusion from linear perspective. The same illusion causes the apparent convergence of parallel lines on a long straight road or hallway at a distant vanishing point. The scattering particles that make sunlight visible may be air molecules or particulates.

In scientific visualization and computer graphics, volume rendering is a set of techniques used to display a 2D projection of a 3D discretely sampled data set, typically a 3D scalar field.

In computer graphics, a shader is a computer program that calculates the appropriate levels of light, darkness, and color during the rendering of a 3D scene - a process known as shading. Shaders have evolved to perform a variety of specialized functions in computer graphics special effects and video post-processing, as well as general-purpose computing on graphics processing units.

Reyes rendering is a computer software architecture used in 3D computer graphics to render photo-realistic images. It was developed in the mid-1980s by Loren Carpenter and Robert L. Cook at Lucasfilm's Computer Graphics Research Group, which is now Pixar. It was first used in 1982 to render images for the Genesis effect sequence in the movie Star Trek II: The Wrath of Khan. Pixar's RenderMan was one implementation of the Reyes algorithm, until its removal in 2016. According to the original paper describing the algorithm, the Reyes image rendering system is "An architecture for fast high-quality rendering of complex images." Reyes was proposed as a collection of algorithms and data processing systems. However, the terms "algorithm" and "architecture" have come to be used synonymously in this context and are used interchangeably in this article.

Real-time computer graphics or real-time rendering is the sub-field of computer graphics focused on producing and analyzing images in real time. The term can refer to anything from rendering an application's graphical user interface (GUI) to real-time image analysis, but is most often used in reference to interactive 3D computer graphics, typically using a graphics processing unit (GPU). One example of this concept is a video game that rapidly renders changing 3D environments to produce an illusion of motion.

Distributed ray tracing, also called distribution ray tracing and stochastic ray tracing, is a refinement of ray tracing that allows for the rendering of "soft" phenomena.

Subsurface scattering (SSS), also known as subsurface light transport (SSLT), is a mechanism of light transport in which light that penetrates the surface of a translucent object is scattered by interacting with the material and exits the surface at a different point. The light will generally penetrate the surface and be reflected a number of times at irregular angles inside the material before passing back out of the material at a different angle than it would have had if it had been reflected directly off the surface. Subsurface scattering is important for realistic 3D computer graphics, being necessary for the rendering of materials such as marble, skin, leaves, wax and milk. If subsurface scattering is not implemented, the material may look unnatural, like plastic or metal.

Path tracing is a computer graphics Monte Carlo method of rendering images of three-dimensional scenes such that the global illumination is faithful to reality. Fundamentally, the algorithm is integrating over all the illuminance arriving to a single point on the surface of an object. This illuminance is then reduced by a surface reflectance function (BRDF) to determine how much of it will go towards the viewpoint camera. This integration procedure is repeated for every pixel in the output image. When combined with physically accurate models of surfaces, accurate models of real light sources, and optically correct cameras, path tracing can produce still images that are indistinguishable from photographs.

In computer graphics, per-pixel lighting refers to any technique for lighting an image or scene that calculates illumination for each pixel on a rendered image. This is in contrast to other popular methods of lighting such as vertex lighting, which calculates illumination at each vertex of a 3D model and then interpolates the resulting values over the model's faces to calculate the final per-pixel color values.

Shadow mapping or shadowing projection is a process by which shadows are added to 3D computer graphics. This concept was introduced by Lance Williams in 1978, in a paper entitled "Casting curved shadows on curved surfaces." Since then, it has been used both in pre-rendered and realtime scenes in many console and PC games.

Volume ray casting, sometimes called volumetric ray casting, volumetric ray tracing, or volume ray marching, is an image-based volume rendering technique. It computes 2D images from 3D volumetric data sets. Volume ray casting, which processes volume data, must not be mistaken with ray casting in the sense used in ray tracing, which processes surface data. In the volumetric variant, the computation doesn't stop at the surface but "pushes through" the object, sampling the object along the ray. Unlike ray tracing, volume ray casting does not spawn secondary rays. When the context/application is clear, some authors simply call it ray casting. Because raymarching does not necessarily require an exact solution to ray intersection and collisions, it is suitable for real time computing for many applications for which ray tracing is unsuitable.

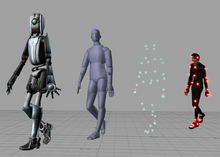

3D rendering is the 3D computer graphics process of converting 3D models into 2D images on a computer. 3D renders may include photorealistic effects or non-photorealistic styles.

Reflection in computer graphics is used to emulate reflective objects like mirrors and shiny surfaces.

The term post-processing is used in the video/film business for quality-improvement image processing methods used in video playback devices, such as stand-alone DVD-Video players; video playing software; and transcoding software. It is also commonly used in real-time 3D rendering to add additional effects.

Luminous Engine, originally called Luminous Studio, is a multi-platform game engine developed and used internally by Square Enix and later on by Luminous Productions. The engine was developed for and targeted at eighth-generation hardware and DirectX 11-compatible platforms, such as Xbox One, the PlayStation 4, and versions of Microsoft Windows. It was conceived during the development of Final Fantasy XIII-2 to be compatible with next generation consoles that their existing platform, Crystal Tools, could not handle.

Ray marching is a class of rendering methods for 3D computer graphics where rays are traversed iteratively, effectively dividing each ray into smaller ray segments, sampling some function at each step. This function can encode volumetric data for volume ray casting, distance fields for accelerated intersection finding of surfaces, among other information.

Volumetric path tracing is a method for rendering images in computer graphics which was first introduced by Lafortune and Willems. This method enhances the rendering of the lighting in a scene by extending the path tracing method with the effect of light scattering. It is used for photorealistic effects of participating media like fire, explosions, smoke, clouds, fog or soft shadows.

This is a glossary of terms relating to computer graphics.