The Moving Picture Experts Group (MPEG) is an alliance of working groups established jointly by ISO and IEC that sets standards for media coding, including compression coding of audio, video, graphics, and genomic data, as well as transmission and file formats for various applications. Together with JPEG, MPEG is organized under ISO/IEC JTC 1/SC 29 – Coding of audio, picture, multimedia and hypermedia information.

MPEG-2 is a standard for "the generic coding of moving pictures and associated audio information". It describes a combination of lossy video compression and lossy audio data compression methods, which permit storage and transmission of movies using currently available storage media and transmission bandwidth. While MPEG-2 is not as efficient as newer standards such as H.264/AVC and H.265/HEVC, backwards compatibility with existing hardware and software means it is still widely used, for example in over-the-air digital television broadcasting and in the DVD-Video standard.

MPEG-4 is a group of international standards for the compression of digital audio and visual data, multimedia systems, and file storage formats. It was originally introduced in late 1998 as a group of audio and video coding formats and related technology agreed upon by the ISO/IEC Moving Picture Experts Group (MPEG) under the formal standard ISO/IEC 14496 – Coding of audio-visual objects. Uses of MPEG-4 include compression of audiovisual data for Internet video, CD distribution, voice, and broadcast television applications. The MPEG-4 standard was developed by a group led by Touradj Ebrahimi and Fernando Pereira.

Advanced Audio Coding (AAC) is an audio coding standard for lossy digital audio compression. It was designed to be the successor of the MP3 format and generally achieves higher sound quality than MP3 at the same bit rate.

Advanced Video Coding (AVC), also referred to as H.264 or MPEG-4 Part 10, is a video compression standard based on block-oriented, motion-compensated coding. It is by far the most commonly used format for the recording, compression, and distribution of video content, used by 91% of video industry developers as of September 2019. It supports a maximum resolution of 8K UHD.
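
To make "block-oriented, motion-compensated coding" concrete, the following is a minimal sketch of exhaustive block matching with a sum-of-absolute-differences (SAD) cost. It is not taken from the H.264 specification: the function names, the 4x4 block size, the search range of 2 pixels, and the synthetic frames are all assumptions chosen for illustration, and real encoders use far more elaborate search and sub-pixel interpolation.

```python
def sad(cur, ref, bx, by, dx, dy, n):
    """Sum of absolute differences between the n x n block of `cur` at
    (bx, by) and the block of `ref` displaced by (dx, dy)."""
    total = 0
    for y in range(n):
        for x in range(n):
            total += abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
    return total


def best_motion_vector(cur, ref, bx, by, n=4, search=2):
    """Exhaustive search over displacements in [-search, search].
    Returns (cost, dx, dy) for the lowest-cost candidate."""
    h, w = len(ref), len(ref[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            # Skip candidates that fall outside the reference frame.
            if bx + dx < 0 or bx + dx + n > w or by + dy < 0 or by + dy + n > h:
                continue
            cost = sad(cur, ref, bx, by, dx, dy, n)
            if best is None or cost < best[0]:
                best = (cost, dx, dy)
    return best


# Synthetic 8x8 frames (assumption): every reference pixel is unique, and the
# current frame is the reference shifted right by one pixel.
ref = [[y * 8 + x for x in range(8)] for y in range(8)]
cur = [[ref[y][max(x - 1, 0)] for x in range(8)] for y in range(8)]

cost, dx, dy = best_motion_vector(cur, ref, bx=2, by=2)
print(cost, dx, dy)  # 0 -1 0: the block is found one pixel to the left in ref
```

An encoder built on this idea would transmit the motion vector plus the (ideally small) residual between the block and its prediction, rather than the raw pixels.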

MPEG-4 Part 3, or MPEG-4 Audio, is the third part of the ISO/IEC MPEG-4 international standard developed by the Moving Picture Experts Group. It specifies audio coding methods. The first version of ISO/IEC 14496-3 was published in 1999.

Harmonic Vector Excitation Coding, abbreviated as HVXC, is a speech coding algorithm specified in the MPEG-4 Part 3 standard for very low bit rate speech coding. HVXC supports bit rates of 2 and 4 kbit/s in both fixed and variable bit rate modes, at a sampling frequency of 8 kHz. It can also operate at lower bit rates, such as 1.2 to 1.7 kbit/s, using a variable bit rate technique. The total algorithmic delay for the encoder and decoder is 36 ms.
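
The figures above can be turned into a quick back-of-the-envelope calculation. The sketch below is only arithmetic on the stated numbers; the 20 ms frame length and the 16-bit PCM reference are assumptions introduced for illustration, since neither is given in the text.

```python
SAMPLE_RATE_HZ = 8000        # from the text
ALGORITHMIC_DELAY_MS = 36    # from the text
FRAME_MS = 20                # assumption: frame length is not stated above
PCM_BITS_PER_SAMPLE = 16     # assumption: uncompressed reference format


def bits_per_frame(bit_rate_bps, frame_ms=FRAME_MS):
    """Bit budget available for one frame at a fixed bit rate."""
    return bit_rate_bps * frame_ms // 1000


def compression_ratio(bit_rate_bps):
    """Ratio of the assumed PCM bit rate to the coded bit rate."""
    return SAMPLE_RATE_HZ * PCM_BITS_PER_SAMPLE / bit_rate_bps


# Number of input samples spanned by the algorithmic delay.
delay_samples = SAMPLE_RATE_HZ * ALGORITHMIC_DELAY_MS // 1000

print(bits_per_frame(2000), bits_per_frame(4000))        # 40 80
print(compression_ratio(2000), compression_ratio(4000))  # 64.0 32.0
print(delay_samples)                                     # 288
```

Under these assumptions, a 2 kbit/s stream has only 40 bits to describe each frame, which is why the codec relies on a parametric model of speech rather than waveform coding.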

High-Efficiency Advanced Audio Coding (HE-AAC) is an audio coding format for lossy data compression of digital audio defined as an MPEG-4 Audio profile in ISO/IEC 14496-3. It is an extension of Low Complexity AAC (AAC-LC) optimized for low-bitrate applications such as streaming audio. The usage profile HE-AAC v1 uses spectral band replication (SBR) to enhance the modified discrete cosine transform (MDCT) compression efficiency in the frequency domain. The usage profile HE-AAC v2 couples SBR with Parametric Stereo (PS) to further enhance the compression efficiency of stereo signals.
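
The layering of the three profiles can be summarized as a small lookup table. This is a sketch of the relationship described above, not an API of any real codec library; the names `PROFILE_TOOLS`, `added_tools`, and `is_superset` are hypothetical.

```python
# Tool sets per profile, as described in the paragraph above.
PROFILE_TOOLS = {
    "AAC-LC": ("MDCT core",),
    "HE-AAC v1": ("MDCT core", "SBR"),
    "HE-AAC v2": ("MDCT core", "SBR", "PS"),
}


def added_tools(profile, base="AAC-LC"):
    """Return the tools that `profile` layers on top of `base`."""
    base_tools = set(PROFILE_TOOLS[base])
    return [tool for tool in PROFILE_TOOLS[profile] if tool not in base_tools]


def is_superset(profile, other):
    """True if `profile` includes every tool used by `other`."""
    return set(PROFILE_TOOLS[other]) <= set(PROFILE_TOOLS[profile])


print(added_tools("HE-AAC v1"))                # ['SBR']
print(added_tools("HE-AAC v2"))                # ['SBR', 'PS']
print(is_superset("HE-AAC v2", "HE-AAC v1"))   # True
```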

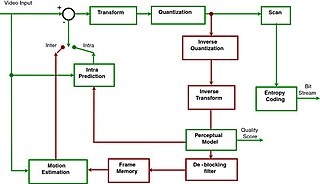

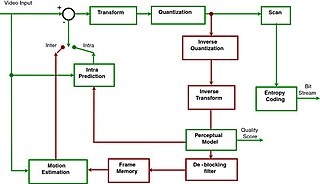

MPEG-4 Part 2, or MPEG-4 Visual, is a video encoding specification designed by the Moving Picture Experts Group (MPEG). It belongs to the ISO/IEC MPEG-4 family of standards. It uses block-wise motion compensation and a discrete cosine transform (DCT), similar to earlier standards such as MPEG-1 Part 2 and H.262/MPEG-2 Part 2.
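
As an illustration of the transform step, here is a minimal, unoptimized sketch of an orthonormal two-dimensional DCT-II applied to one block. The 8x8 block size and the flat test block are assumptions made for the example; production codecs use fast integer or fixed-point implementations rather than this direct formula.

```python
import math


def dct_1d(values):
    """Orthonormal DCT-II of a sequence of numbers."""
    n = len(values)
    out = []
    for k in range(n):
        s = sum(
            values[i] * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
            for i in range(n)
        )
        scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        out.append(scale * s)
    return out


def dct_2d(block):
    """Separable 2D DCT: transform every row, then every column."""
    rows = [dct_1d(row) for row in block]
    cols = [dct_1d(list(col)) for col in zip(*rows)]
    # `cols` is indexed [column][row]; transpose back to [row][column].
    return [list(row) for row in zip(*cols)]


# A flat 8x8 block (assumption): all energy should land in the DC coefficient.
flat = [[10] * 8 for _ in range(8)]
coeffs = dct_2d(flat)
print(round(coeffs[0][0], 6))  # 80.0
```

For smooth image regions most of the energy concentrates in a few low-frequency coefficients, which is what makes the subsequent quantization and entropy coding effective.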

MPEG-4 Audio Lossless Coding, also known as MPEG-4 ALS, is an extension to the MPEG-4 Part 3 audio standard to allow lossless audio compression. The extension was finalized in December 2005 and published as ISO/IEC 14496-3:2005/Amd 2:2006 in 2006. The latest description of MPEG-4 ALS was published as subpart 11 of the MPEG-4 Audio standard in December 2019.

MPEG-4 SLS, or MPEG-4 Scalable to Lossless as per ISO/IEC 14496-3:2005/Amd 3:2006 (Scalable Lossless Coding), is an extension to the MPEG-4 Part 3 (MPEG-4 Audio) standard to allow lossless audio compression scalable to lossy MPEG-4 General Audio coding methods (e.g., variations of AAC). It was developed jointly by the Institute for Infocomm Research (I2R) and Fraunhofer, which commercializes its implementation of a limited subset of the standard under the name HD-AAC. As of September 2009, standardization of the HD-AAC profile for MPEG-4 Audio was under development.

MPEG Surround, also known as Spatial Audio Coding (SAC), is a lossy compression format for surround sound that provides a method for extending mono or stereo audio services to multi-channel audio in a backwards-compatible fashion. The total bit rates used for the core and the MPEG Surround data are typically only slightly higher than the bit rates used for coding of the core. MPEG Surround adds a side-information stream to the core bit stream, containing spatial image data. Legacy stereo playback systems ignore this side information, while players supporting MPEG Surround decoding output the reconstructed multi-channel audio.
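
The backwards-compatible layering can be sketched with a toy model. Everything below is hypothetical: the frame layout, the field name `spatial_side_info`, and the per-channel gain upmix are illustrative assumptions and are not the MPEG Surround bitstream syntax or its actual reconstruction algorithm.

```python
def legacy_stereo_decode(frame):
    """A legacy player only understands the core payload and skips the rest."""
    return frame["core"]


def surround_decode(frame, channels=("L", "R", "C", "Ls", "Rs")):
    """Toy upmix (assumption): scale a mono mix of the core by per-channel
    gains carried in the side information."""
    left, right = frame["core"]
    gains = frame.get("spatial_side_info")
    if gains is None:
        # No side information present: fall back to plain stereo.
        return {"L": list(left), "R": list(right)}
    mono = [(l + r) / 2 for l, r in zip(left, right)]
    return {ch: [gains[ch] * s for s in mono] for ch in channels}


frame = {
    "core": ([1.0, 2.0], [3.0, 4.0]),  # stereo downmix samples
    "spatial_side_info": {"L": 1.0, "R": 1.0, "C": 0.5, "Ls": 0.25, "Rs": 0.25},
}

print(legacy_stereo_decode(frame))   # ([1.0, 2.0], [3.0, 4.0])
print(surround_decode(frame)["C"])   # [1.0, 1.5]
```

The point of the sketch is structural: both decoders consume the same frame, and the side information is small relative to the core samples it accompanies.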

TDVision Systems, Inc., was a company that designed products and system architectures for stereoscopic video coding, stereoscopic video games, and head mounted displays. The company was founded by Manuel Gutierrez Novelo and Isidoro Pessah in Mexico in 2001 and moved to the United States in 2004.

2D-plus-Depth is a stereoscopic video coding format that is used for 3D displays, such as Philips WOWvx. Philips discontinued work on the WOWvx line in 2009, citing "current market developments". The technology is now used by SeeCubic, a company led by former key 3D engineers and scientists from Philips, which offers autostereoscopic 3D displays that use the 2D-plus-Depth format for 3D video input.
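
A display that receives 2D-plus-Depth input has to synthesize additional views from the image and its depth map. The following is a minimal sketch of that idea for a single scanline, assuming 8-bit depth values where 255 is nearest to the viewer and a linear depth-to-disparity mapping; both conventions, the function name, and the `max_disparity` parameter are assumptions, and real renderers also fill the holes left by disocclusion.

```python
def render_shifted_view(row, depth_row, max_disparity=2, hole=None):
    """Synthesize one scanline of a second view by shifting each pixel
    left in proportion to its depth value (0 = far, 255 = near)."""
    width = len(row)
    out = [hole] * width
    # Paint far pixels first so that nearer pixels overwrite them.
    order = sorted(range(width), key=lambda x: depth_row[x])
    for x in order:
        disparity = round(depth_row[x] / 255 * max_disparity)
        target = x - disparity
        if 0 <= target < width:
            out[target] = row[x]
    return out


row = ["a", "b", "c", "d", "e"]
depth = [0, 0, 255, 0, 0]  # only pixel "c" is close to the viewer
print(render_shifted_view(row, depth))  # ['c', 'b', None, 'd', 'e']
```

The `None` entry marks a hole: background that was hidden behind the near pixel in the original view and therefore has no source data.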

Multiview Video Coding (MVC) is a stereoscopic video coding standard for video compression that allows video sequences captured simultaneously from multiple camera angles to be encoded in a single video stream. It uses the 2D-plus-Delta method and is an amendment to the H.264 video compression standard, developed jointly by MPEG and VCEG with contributions from a number of companies, such as Panasonic and LG Electronics.

3D video coding is one of the processing stages required to deliver stereoscopic content to the home. Three techniques are used to achieve stereoscopic video:

- Color shifting (anaglyph)

- Pixel subsampling

- Enhanced video stream coding
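
The first technique in the list, color shifting, can be sketched in a few lines. The example assumes the common red-cyan convention (red channel from the left-eye image, green and blue from the right-eye image) and represents images as nested lists of `(r, g, b)` tuples; both choices are assumptions made for illustration.

```python
def anaglyph(left, right):
    """Combine two equally sized RGB images into a red-cyan anaglyph:
    red comes from the left view, green and blue from the right view."""
    return [
        [(lp[0], rp[1], rp[2]) for lp, rp in zip(left_row, right_row)]
        for left_row, right_row in zip(left, right)
    ]


left = [[(200, 10, 10), (100, 20, 20)]]
right = [[(5, 150, 250), (6, 160, 240)]]
print(anaglyph(left, right))  # [[(200, 150, 250), (100, 160, 240)]]
```

Because both views share one frame, this approach needs no extra bandwidth, at the cost of color fidelity.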

The ISO base media file format (ISOBMFF) is a container file format that defines a general structure for files that contain time-based multimedia data such as video and audio. It is standardized in ISO/IEC 14496-12, a.k.a. MPEG-4 Part 12, and was formerly also published as ISO/IEC 15444-12, a.k.a. JPEG 2000 Part 12.
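
The general structure that ISOBMFF defines is a sequence of "boxes", each starting with a 32-bit big-endian size and a four-character type. The sketch below walks the top-level boxes of an in-memory buffer. The function name `iter_boxes` and the hand-built sample bytes are assumptions for illustration; the sketch does not descend into nested boxes or interpret any payload.

```python
import struct


def iter_boxes(data):
    """Yield (type, offset, size) for each top-level box in `data`."""
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack_from(">I4s", data, offset)
        header = 8
        if size == 1:
            # A size of 1 means a 64-bit "largesize" follows the type field.
            size = struct.unpack_from(">Q", data, offset + 8)[0]
            header = 16
        elif size == 0:
            # A size of 0 means the box extends to the end of the data.
            size = len(data) - offset
        if size < header or offset + size > len(data):
            raise ValueError(f"malformed box at offset {offset}")
        yield box_type.decode("ascii"), offset, size
        offset += size


# Hand-built sample (assumption): a 16-byte 'ftyp' box and an empty 'free' box.
sample = struct.pack(">I4s4sI", 16, b"ftyp", b"isom", 0) + struct.pack(">I4s", 8, b"free")
print(list(iter_boxes(sample)))  # [('ftyp', 0, 16), ('free', 16, 8)]
```

To inspect a real file, the same function could be applied to its bytes, for example `iter_boxes(open(path, "rb").read())`, where `path` is a placeholder for a local MP4 file.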

DVB 3D-TV is a deprecated standard, partially released at the end of 2010, that included techniques and procedures for sending a three-dimensional video signal over existing DVB transmission standards. A commercial requirements document for 3D TV broadcasters and set-top box manufacturers was published, but it contained no technical information.

MPEG media transport (MMT), specified as ISO/IEC 23008-1, is a digital container standard developed by the Moving Picture Experts Group (MPEG) that supports High Efficiency Video Coding (HEVC) video. MMT was designed to transfer data over all-Internet Protocol (All-IP) networks.