Logic programming is a programming paradigm which is largely based on formal logic. Any program written in a logic programming language is a set of sentences in logical form, expressing facts and rules about some problem domain. Major logic programming language families include Prolog, answer set programming (ASP) and Datalog. In all of these languages, rules are written in the form of clauses:

Planner is a programming language designed by Carl Hewitt at MIT, and first published in 1969. First, subsets such as Micro-Planner and Pico-Planner were implemented, and then essentially the whole language was implemented as Popler by Julian Davies at the University of Edinburgh in the POP-2 programming language. Derivations such as QA4, Conniver, QLISP and Ether were important tools in artificial intelligence research in the 1970s, which influenced commercial developments such as Knowledge Engineering Environment (KEE) and Automated Reasoning Tool (ART).

In computer science, denotational semantics is an approach of formalizing the meanings of programming languages by constructing mathematical objects that describe the meanings of expressions from the languages. Other approaches providing formal semantics of programming languages including axiomatic semantics and operational semantics.

Computer science is the study of the theoretical foundations of information and computation and their implementation and application in computer systems. One well known subject classification system for computer science is the ACM Computing Classification System devised by the Association for Computing Machinery.

In computer science, concurrency is the ability of different parts or units of a program, algorithm, or problem to be executed out-of-order or in partial order, without affecting the final outcome. This allows for parallel execution of the concurrent units, which can significantly improve overall speed of the execution in multi-processor and multi-core systems. In more technical terms, concurrency refers to the decomposability property of a program, algorithm, or problem into order-independent or partially-ordered components or units.

In computer science, message passing is a technique for invoking behavior on a computer. The invoking program sends a message to a process and relies on that process and its supporting infrastructure to then select and run some appropriate code. Message passing differs from conventional programming where a process, subroutine, or function is directly invoked by name. Message passing is key to some models of concurrency and object-oriented programming.

The actor model in computer science is a mathematical model of concurrent computation that treats actor as the universal primitive of concurrent computation. In response to a message it receives, an actor can: make local decisions, create more actors, send more messages, and determine how to respond to the next message received. Actors may modify their own private state, but can only affect each other indirectly through messaging.

In theoretical computer science, Actor model theory concerns theoretical issues for the Actor model.

In computer science, Actor model implementation concerns implementation issues for the Actor model.

In computer science, the Actor model and process calculi are two closely related approaches to the modelling of concurrent digital computation. See Actor model and process calculi history.

In computer science, future, promise, delay, and deferred refer to constructs used for synchronizing program execution in some concurrent programming languages. They describe an object that acts as a proxy for a result that is initially unknown, usually because the computation of its value is not yet complete.

In computer science, the Actor model, first published in 1973, is a mathematical model of concurrent computation.

In computer science, unbounded nondeterminism or unbounded indeterminacy is a property of concurrency by which the amount of delay in servicing a request can become unbounded as a result of arbitration of contention for shared resources while still guaranteeing that the request will eventually be serviced. Unbounded nondeterminism became an important issue in the development of the denotational semantics of concurrency, and later became part of research into the theoretical concept of hypercomputation.

In denotational semantics and domain theory, power domains are domains of nondeterministic and concurrent computations.

Indeterminacy in concurrent computation is concerned with the effects of indeterminacy in concurrent computation. Computation is an area in which indeterminacy is becoming increasingly important because of the massive increase in concurrency due to networking and the advent of many-core computer architectures. These computer systems make use of arbiters which give rise to indeterminacy.

The Actor model and process calculi share an interesting history and co-evolution.

The denotational semantics of the Actor model is the subject of denotational domain theory for Actors. The historical development of this subject is recounted in [Hewitt 2008b].

In computer science, the Actor model, first published in 1973, is a mathematical model of concurrent computation. This article reports on the middle history of the Actor model in which major themes were initial implementations, initial applications, and development of the first proof theory and denotational model. It is the follow on article to Actor model early history which reports on the early history of the Actor model which concerned the basic development of the concepts. The article Actor model later history reports on developments after the ones reported in this article.

Gul Agha is a professor of computer science at the University of Illinois at Urbana-Champaign, and director of the Open Systems Laboratory. He is known for his work on the actor model of concurrent computation, and was also Editor-in-Chief of ACM Computing Surveys from 1999 to 2007. Agha completed his B.S. with honors from the California Institute of Technology in the year 1977 and received his Ph.D. in Computer and Communication Science from the University of Michigan in 1986, under the supervision of John Holland. However, much of his doctoral research was carried out in Carl Hewitt's Message-Passing Semantics Group at Massachusetts Institute of Technology (MIT). Agha's dissertation was published by the MIT Press as Actors: a model of concurrent computation in distributed systems, a book which, according to the ACM Guide to Computing Literature, has been cited over 3000 times. Agha was born and completed his early schooling in Sindh, Pakistan. He received his B.S. with honors from the California Institute of Technology in 1977.

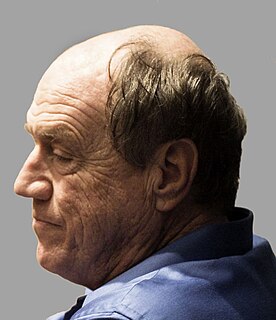

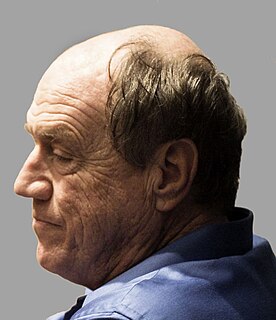

Carl Eddie Hewitt is an American computer scientist who designed the Planner programming language for automated planning and the actor model of concurrent computation, which have been influential in the development of logic, functional and object-oriented programming. Planner was the first programming language based on procedural plans invoked using pattern-directed invocation from assertions and goals. The actor model influenced the development of the Scheme programming language, the π-calculus, and served as an inspiration for several other programming languages.