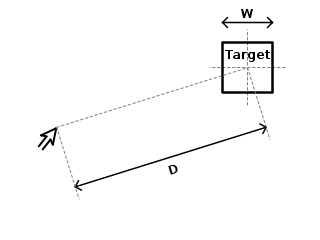

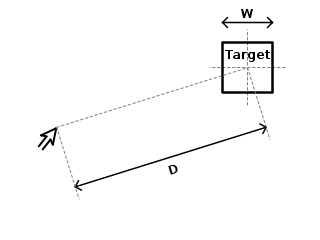

Fitts's law is a predictive model of human movement primarily used in human–computer interaction and ergonomics. The law predicts that the time required to rapidly move to a target area is a function of the ratio between the distance to the target and the width of the target. Fitts's law is used to model the act of pointing, either by physically touching an object with a hand or finger, or virtually, by pointing to an object on a computer monitor using a pointing device. It was initially developed by Paul Fitts.
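The relationship between distance, width, and movement time can be made concrete with a short sketch. It assumes the widely used Shannon formulation, MT = a + b · log2(D/W + 1), which the summary above does not spell out. The constants `a` and `b` are normally fitted to measurements for a given device and user population; the default values below are hypothetical placeholders, and the function names are illustrative only.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Index of difficulty in bits, Shannon formulation: log2(D / W + 1)."""
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return math.log2(distance / width + 1)


def movement_time(distance: float, width: float,
                  a: float = 0.1, b: float = 0.15) -> float:
    """Predicted movement time in seconds.

    a (intercept, s) and b (slope, s/bit) are hypothetical values here;
    in practice they are estimated by regression on observed pointing data.
    """
    return a + b * index_of_difficulty(distance, width)


# A target 300 px away and 100 px wide: log2(300 / 100 + 1) = 2.0 bits.
easy = movement_time(300, 100)
# Same distance, narrower target: higher difficulty, longer predicted time.
hard = movement_time(300, 20)
```

Because only the ratio D/W enters the formula, doubling both the distance and the width leaves the prediction unchanged.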

The following outline is provided as an overview of and topical guide to human–computer interaction:

Swarm robotics is the study of how to design independent systems of robots without centralized control. The emergent swarming behavior of robotic swarms is created through the interactions between individual robots and the environment. This idea emerged from the field of artificial swarm intelligence, as well as from studies of insects, ants, and other areas of nature where swarm behavior occurs.
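As a minimal illustration of behavior arising without a central controller, the sketch below lets each robot on a one-dimensional line move toward the centroid of the robots it can sense. The aggregation rule, the function name `swarm_step`, and the parameters `radius` and `rate` are assumptions chosen for this example; they are not taken from any particular swarm robotics system.

```python
def swarm_step(positions, radius=2.0, rate=0.5):
    """Advance every robot one step using only local information.

    Each robot sees the robots within `radius` of itself (itself included)
    and moves a fraction `rate` of the way toward their centroid.
    No robot has access to the full swarm state.
    """
    new_positions = []
    for p in positions:
        neighbours = [q for q in positions if abs(q - p) <= radius]
        centroid = sum(neighbours) / len(neighbours)
        new_positions.append(p + rate * (centroid - p))
    return new_positions


# Three robots spread over two units of distance.
swarm = [0.0, 1.0, 2.0]
after_one_step = swarm_step(swarm)
```

Repeating the step pulls the group together even though the rule only ever refers to a robot's own neighbourhood, which is the sense in which the clustering is emergent.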

Cognitive ergonomics is a scientific discipline that studies, evaluates, and designs tasks, jobs, products, environments and systems and how they interact with humans and their cognitive abilities. It is defined by the International Ergonomics Association as "concerned with mental processes, such as perception, memory, reasoning, and motor response, as they affect interactions among humans and other elements of a system. Cognitive ergonomics is responsible for how work is done in the mind, meaning, the quality of work is dependent on the person's understanding of situations. Situations could include the goals, means, and constraints of work. The relevant topics include mental workload, decision-making, skilled performance, human-computer interaction, human reliability, work stress and training as these may relate to human-system design." Cognitive ergonomics studies cognition in work and operational settings, in order to optimize human well-being and system performance. It is a subset of the larger field of human factors and ergonomics.

A mobile robot is an automatic machine that is capable of locomotion. Mobile robotics is usually considered to be a subfield of robotics and information engineering.

Thomas B. Sheridan is an American professor emeritus of mechanical engineering and applied psychology at the Massachusetts Institute of Technology. He is a pioneer of robotics and remote control technology.

Supervisory control is a general term for control of many individual controllers or control loops, such as within a distributed control system. It refers to a high level of overall monitoring of individual process controllers, which is not necessary for the operation of each controller, but gives the operator an overall plant process view, and allows integration of operation between controllers.
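The split between local loops and a supervisory layer can be sketched as follows. This is a toy model under stated assumptions: the class names, the proportional update, and the idealised process (the measurement responds directly to the control action) are all hypothetical and only illustrate that each loop runs without the supervisor, while the supervisor provides an overview and coordinates setpoints.

```python
from dataclasses import dataclass


@dataclass
class LocalController:
    """One control loop; it operates without any help from the supervisor."""
    name: str
    setpoint: float
    measurement: float
    gain: float = 0.5  # hypothetical proportional gain

    def step(self) -> float:
        # Idealised process: the measurement moves toward the setpoint
        # by a fixed fraction of the current error.
        self.measurement += self.gain * (self.setpoint - self.measurement)
        return self.measurement


class Supervisor:
    """High-level layer: monitors all loops and coordinates their setpoints."""

    def __init__(self, controllers):
        self.controllers = {c.name: c for c in controllers}

    def overview(self):
        # Plant-wide view: (setpoint, measurement) for every loop.
        return {n: (c.setpoint, c.measurement)
                for n, c in self.controllers.items()}

    def set_setpoint(self, name: str, value: float) -> None:
        # The supervisor changes targets; it never computes control actions.
        self.controllers[name].setpoint = value


tank = LocalController("tank_level", setpoint=10.0, measurement=0.0)
heater = LocalController("heater_temp", setpoint=60.0, measurement=20.0)
plant = Supervisor([tank, heater])

tank.step()                              # loops run on their own
heater.step()
plant.set_setpoint("heater_temp", 80.0)  # supervisory adjustment
heater.step()
```

Removing the `Supervisor` object would leave both loops working, which mirrors the point that supervision is not required for the operation of each controller.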

Neuroergonomics is the application of neuroscience to ergonomics. Traditional ergonomic studies rely predominantly on psychological explanations to address human factors issues such as: work performance, operational safety, and workplace-related risks. Neuroergonomics, in contrast, addresses the biological substrates of ergonomic concerns, with an emphasis on the role of the human nervous system.

The following outline is provided as an overview of and topical guide to automation:

In computer science, programming by demonstration (PbD) is an end-user development technique for teaching a computer or a robot new behaviors by demonstrating the task directly instead of programming it through machine commands.

Adaptable robotics is a field of robotics focused on creating robotic systems capable of adjusting their hardware and software components to perform a wide range of tasks while adapting to varying environments. Robotics was introduced into industry in the 1960s. Since then, the need for robots with new forms of actuation, adaptability, sensing and perception, and even the ability to learn has given rise to the field of adaptable robotics. Significant developments such as the PUMA robot, manipulation research, soft robotics, swarm robotics, AI, cobots, bio-inspired approaches, and other ongoing research have advanced the field considerably. Adaptable robots are usually associated with their development kit, typically used to create autonomous mobile robots. In some cases, an adaptable kit will still be functional even when certain components break.

Ubiquitous robot is a term used in an analogous way to ubiquitous computing. It covers software useful for "integrating robotic technologies with technologies from the fields of ubiquitous and pervasive computing, sensor networks, and ambient intelligence".

Robotics is the interdisciplinary study and practice of the design, construction, operation, and use of robots.

A mobile manipulator is a robot system built from a robotic manipulator arm mounted on a mobile platform.

Adaptive collaborative control is the decision-making approach used in hybrid models consisting of finite-state machines with functional models as subcomponents to simulate the behavior of systems formed through the partnerships of multiple agents for the execution of tasks and the development of work products. The term “collaborative control” originated from work developed in the late 1990s and early 2000s by Fong, Thorpe, and Baur (1999). According to Fong et al., robots must be self-reliant, aware, and adaptive in order to function in collaborative control. In the literature, the adjective “adaptive” is not always included, but it belongs to the formal term because adaptivity is an important element of collaborative control. The adaptation of traditional applications of control theory in teleoperations initially sought to move away from the “humans as controllers/robots as tools” model and to have humans and robots work as peers, collaborating to perform tasks and to achieve common goals. Early implementations of adaptive collaborative control centered on vehicle teleoperation. Recent uses of adaptive collaborative control cover training, analysis, and engineering applications in teleoperations between humans and multiple robots, multiple robots collaborating among themselves, unmanned vehicle control, and fault-tolerant controller design.

Human performance modeling (HPM) is a method of quantifying human behavior, cognition, and processes. It is a tool used by human factors researchers and practitioners for both the analysis of human function and for the development of systems designed for optimal user experience and interaction. It is a complementary approach to other usability testing methods for evaluating the impact of interface features on operator performance.

Human-Robot Collaboration is the study of collaborative processes in which human and robot agents work together to achieve shared goals. Many new applications for robots require them to work alongside people as capable members of human-robot teams. These include robots for homes, hospitals, and offices, space exploration and manufacturing. Human-Robot Collaboration (HRC) is an interdisciplinary research area comprising classical robotics, human-computer interaction, artificial intelligence, process design, layout planning, ergonomics, cognitive sciences, and psychology.

Semi-automation is a process or procedure that is performed by the combined activities of man and machine with both human and machine steps typically orchestrated by a centralized computer controller.

Saverio Mascolo is an Italian information engineer, academic and researcher. He is the former Head of the Department of Electrical Engineering and Information Science and a professor of Automatic Control at the Department of Ingegneria Elettrica e dell'Informazione (DEI) at Politecnico di Bari, Italy.

The out-of-the-loop performance problem arises when an operator suffers a performance decrement as a consequence of automation. The potential loss of skills and of situation awareness caused by vigilance and complacency problems might make operators of automated systems unable to operate manually in case of system failure. Highly automated systems reduce the operator to a monitoring role, which diminishes the operator's chances of understanding the system. The problem is related to mind wandering.