In general terms, throughput is the rate of production or the rate at which something is processed.

The Advanced Research Projects Agency Network (ARPANET) was the first wide-area packet-switched network with distributed control and one of the first networks to implement the TCP/IP protocol suite. Both technologies became the technical foundation of the Internet. The ARPANET was established by the Advanced Research Projects Agency (ARPA) of the United States Department of Defense.

Network congestion in data networking and queueing theory is the reduced quality of service that occurs when a network node or link is carrying more data than it can handle. Typical effects include queueing delay, packet loss or the blocking of new connections. A consequence of congestion is that an incremental increase in offered load leads either only to a small increase or even a decrease in network throughput.

Weighted round robin (WRR) is a network scheduler for data flows, but also used to schedule processes.

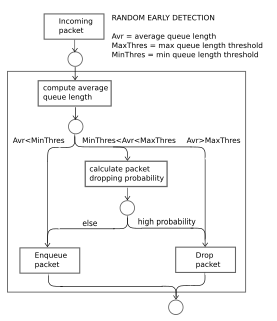

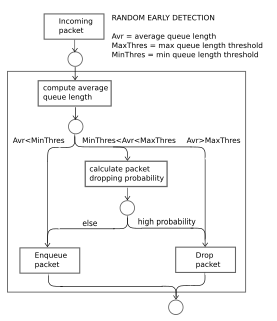

Random early detection (RED), also known as random early discard or random early drop is a queuing discipline for a network scheduler suited for congestion avoidance.

A load-balanced switch is a switch architecture which guarantees 100% throughput with no central arbitration at all, at the cost of sending each packet across the crossbar twice. Load-balanced switches are a subject of research for large routers scaled past the point of practical central arbitration.

Proportional-fair scheduling is a compromise-based scheduling algorithm. It is based upon maintaining a balance between two competing interests: Trying to maximize total throughput of the network while at the same time allowing all users at least a minimal level of service. This is done by assigning each data flow a data rate or a scheduling priority that is inversely proportional to its anticipated resource consumption.

Network coding is a field of research founded in a series of papers from the late 1990s to the early 2000s. However, the concept of network coding, in particular linear network coding, appeared much earlier. In a 1978 paper, a scheme for improving the throughput of a two-way communication through a satellite was proposed. In this scheme, two users trying to communicate with each other transmit their data streams to a satellite, which combines the two streams by summing them modulo 2 and then broadcasts the combined stream. Each of the two users, upon receiving the broadcast stream, can decode the other stream by using the information of their own stream.

Lawrence Gilman Roberts was an American engineer who received the Draper Prize in 2001 "for the development of the Internet", and the Principe de Asturias Award in 2002.

Fair queuing is a family of scheduling algorithms used in some process and network schedulers. The algorithm is designed to achieve fairness when a limited resource is shared, for example to prevent flows with large packets or processes that generate small jobs from consuming more throughput or CPU time than other flows or processes.

A computer network is a set of computers sharing resources located on or provided by network nodes. The computers use common communication protocols over digital interconnections to communicate with each other. These interconnections are made up of telecommunication network technologies, based on physically wired, optical, and wireless radio-frequency methods that may be arranged in a variety of network topologies.

Head-of-line blocking in computer networking is a performance-limiting phenomenon that occurs when a line of packets is held up by the first packet. Examples include input buffered network switches, out-of-order delivery and multiple requests in HTTP pipelining.

In routers and switches, active queue management (AQM) is the policy of dropping packets inside a buffer associated with a network interface controller (NIC) before that buffer becomes full, often with the goal of reducing network congestion or improving end-to-end latency. This task is performed by the network scheduler, which for this purpose uses various algorithms such as random early detection (RED), Explicit Congestion Notification (ECN), or controlled delay (CoDel). RFC 7567 recommends active queue management as a best practice.

Nicholas (Nick) William McKeown FREng, is the SVP/GM of the Network and Edge Group at Intel and a professor in the Electrical Engineering and Computer Science departments at Stanford University. He has also started technology companies in Silicon Valley.

Precoding is a generalization of beamforming to support multi-stream transmission in multi-antenna wireless communications. In conventional single-stream beamforming, the same signal is emitted from each of the transmit antennas with appropriate weighting such that the signal power is maximized at the receiver output. When the receiver has multiple antennas, single-stream beamforming cannot simultaneously maximize the signal level at all of the receive antennas. In order to maximize the throughput in multiple receive antenna systems, multi-stream transmission is generally required.

Virtual output queueing (VOQ) is a technique used in certain network switch architectures where, rather than keeping all traffic in a single queue, separate queues are maintained for each possible output location. It addresses a common problem known as head-of-line blocking.

Bufferbloat is a cause of high latency in packet-switched networks caused by excess buffering of packets. Bufferbloat can also cause packet delay variation, as well as reduce the overall network throughput. When a router or switch is configured to use excessively large buffers, even very high-speed networks can become practically unusable for many interactive applications like voice over IP (VoIP), online gaming, and even ordinary web surfing.

Design of robust and reliable networks and network services relies on an understanding of the traffic characteristics of the network. Throughout history, different models of network traffic have been developed and used for evaluating existing and proposed networks and services.