In mathematics, when X is a finite set with at least two elements, the permutations of X (i.e. the bijective functions from X to X) fall into two classes of equal size: the even permutations and the odd permutations. If any total ordering of X is fixed, the parity (oddness or evenness) of a permutation σ of X can be defined as the parity of the number of inversions for σ, i.e., of pairs of elements x, y of X such that x < y and σ(x) > σ(y).
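The inversion-count definition can be sketched in a few lines of Python. This is only an illustration, assuming X = {0, 1, ..., n − 1} with its usual ordering and a permutation stored as a sequence `perm` with `perm[x]` = σ(x); the function names are hypothetical.

```python
from itertools import combinations, permutations

def inversion_count(perm):
    """Count pairs x < y with perm[x] > perm[y]."""
    return sum(1 for x, y in combinations(range(len(perm)), 2) if perm[x] > perm[y])

def parity(perm):
    """Return 'even' or 'odd' according to the number of inversions."""
    return "even" if inversion_count(perm) % 2 == 0 else "odd"

print(parity([0, 1, 2]))  # even (identity, 0 inversions)
print(parity([1, 0, 2]))  # odd (one transposition, 1 inversion)
print(parity([1, 2, 0]))  # even (3-cycle, 2 inversions)

# The two classes have equal size: 12 of the 24 permutations of 4 elements are even.
even_count = sum(1 for p in permutations(range(4)) if parity(p) == "even")
print(even_count)  # 12
```

Counting pairs directly takes quadratic time, which is fine for small examples.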

In mathematics, the discriminant of a polynomial is a quantity that depends on the coefficients and allows deducing some properties of the roots without computing them. More precisely, it is a polynomial function of the coefficients of the original polynomial. The discriminant is widely used in polynomial factoring, number theory, and algebraic geometry.
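As a small illustration of a discriminant being a polynomial in the coefficients, the sketch below evaluates the standard closed forms for degree 2 and degree 3. The function names are hypothetical, and the coefficient order (leading coefficient first) is an assumption of this example.

```python
def discriminant_quadratic(a, b, c):
    """Discriminant of a*x**2 + b*x + c."""
    return b * b - 4 * a * c

def discriminant_cubic(a, b, c, d):
    """Discriminant of a*x**3 + b*x**2 + c*x + d."""
    return (b * b * c * c - 4 * a * c ** 3 - 4 * b ** 3 * d
            - 27 * a * a * d * d + 18 * a * b * c * d)

print(discriminant_quadratic(1, -2, 1))  # 0: (x - 1)**2 has a repeated root
print(discriminant_quadratic(1, 0, 1))   # -4: x**2 + 1 has no real root
print(discriminant_cubic(1, 0, -1, 0))   # 4: x**3 - x has three distinct real roots
```

In each case the sign or vanishing of the result says something about the roots without solving for them.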

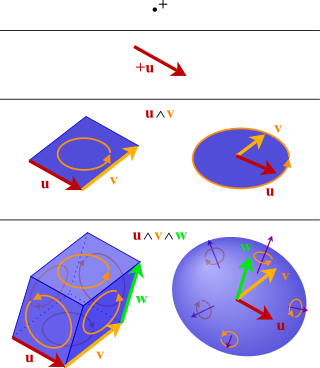

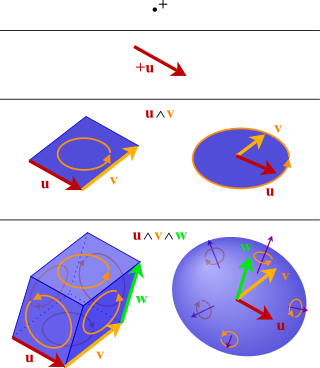

In mathematics, the exterior algebra or Grassmann algebra of a vector space V is an associative algebra that contains V and has a product, called the exterior product or wedge product and denoted by ∧, such that v ∧ v = 0 for every vector v in V. The exterior algebra is named after Hermann Grassmann, and the names of the product come from the "wedge" symbol ∧ and the fact that the product of two elements of V is "outside" V.

In mathematics, a Lie superalgebra is a generalisation of a Lie algebra to include a ℤ/2ℤ-grading. Lie superalgebras are important in theoretical physics, where they are used to describe the mathematics of supersymmetry.

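In linear algebra, a Vandermonde matrix, named after Alexandre-Théophile Vandermonde, is a matrix with the terms of a geometric progression in each row: an m × n matrix

$$V = \begin{pmatrix} 1 & x_1 & x_1^2 & \cdots & x_1^{n-1} \\ 1 & x_2 & x_2^2 & \cdots & x_2^{n-1} \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 1 & x_m & x_m^2 & \cdots & x_m^{n-1} \end{pmatrix}$$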
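A minimal sketch of building such a matrix, assuming the common convention in which the row for the value x reads 1, x, x², and so on (some references use the transpose or start from a different power). The function name `vandermonde` and its parameters are hypothetical.

```python
def vandermonde(xs, n_cols):
    """Return the matrix whose row for x is [x**0, x**1, ..., x**(n_cols - 1)]."""
    return [[x ** j for j in range(n_cols)] for x in xs]

for row in vandermonde([2, 3, 5], 3):
    print(row)
# [1, 2, 4]
# [1, 3, 9]
# [1, 5, 25]
```

Each row is a geometric progression with ratio equal to the corresponding entry of `xs`.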

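In transcendental number theory, the Lindemann–Weierstrass theorem is a result that is very useful in establishing the transcendence of numbers. It states the following: if $\alpha_1, \ldots, \alpha_n$ are algebraic numbers that are linearly independent over the rational numbers $\mathbb{Q}$, then $e^{\alpha_1}, \ldots, e^{\alpha_n}$ are algebraically independent over $\mathbb{Q}$.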

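Let $R$ be a partial differential ring with commuting derivatives $\Delta_m = \{\partial_1, \ldots, \partial_m\}$. The Weyl algebra associated to $(R, \Delta_m)$ is the noncommutative ring $R[\partial_1, \ldots, \partial_m]$ satisfying the relations $\partial_i r = r \partial_i + \partial_i(r)$ for all $r \in R$.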

In mathematics, the symmetric algebra S(V) (also denoted Sym(V)) on a vector space V over a field K is a commutative algebra over K that contains V, and is, in some sense, minimal for this property. Here, "minimal" means that S(V) satisfies the following universal property: for every linear map f from V to a commutative algebra A, there is a unique algebra homomorphism g : S(V) → A such that f = g ∘ i, where i is the inclusion map of V in S(V).

In abstract algebra, a representation of an associative algebra is a module for that algebra. Here an associative algebra is a (not necessarily unital) ring. If the algebra is not unital, it may be made so in a standard way; there is no essential difference between modules for the resulting unital ring, in which the identity acts by the identity mapping, and representations of the algebra.

In mathematics, a symmetric polynomial is a polynomial P(X1, X2, ..., Xn) in n variables, such that if any of the variables are interchanged, one obtains the same polynomial. Formally, P is a symmetric polynomial if for any permutation σ of the subscripts 1, 2, ..., n one has P(Xσ(1), Xσ(2), ..., Xσ(n)) = P(X1, X2, ..., Xn).
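The defining condition can be spot-checked numerically. The sketch below evaluates a polynomial, given as a Python function, on every reordering of one sample point. The helper name `looks_symmetric` is hypothetical. A failed check disproves symmetry, while a passed check on a single sample is only evidence, not a proof.

```python
from itertools import permutations

def looks_symmetric(p, sample):
    """True if p takes the same value on every reordering of the sample point."""
    base = p(*sample)
    return all(p(*perm) == base for perm in permutations(sample))

def p_sym(x, y, z):
    return x * y + y * z + z * x  # unchanged by any swap of variables

def p_not(x, y, z):
    return x * x * y              # changes when x and y are swapped

print(looks_symmetric(p_sym, (2, 3, 5)))  # True
print(looks_symmetric(p_not, (2, 3, 5)))  # False
```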

In mathematics, specifically in commutative algebra, the elementary symmetric polynomials are one type of basic building block for symmetric polynomials, in the sense that any symmetric polynomial can be expressed as a polynomial in elementary symmetric polynomials. That is, any symmetric polynomial P is given by an expression involving only addition and multiplication of constants and elementary symmetric polynomials. There is one elementary symmetric polynomial of degree d in n variables for each positive integer d ≤ n, and it is formed by adding together all distinct products of d distinct variables.
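The construction "add together all distinct products of d distinct variables" translates directly into code. The following sketch evaluates the elementary symmetric polynomials at a numeric point; the function name is hypothetical and `math.prod` requires Python 3.8 or later.

```python
from itertools import combinations
from math import prod

def elementary_symmetric(d, xs):
    """Sum of the products of all d-element subsets of the values xs."""
    return sum(prod(subset) for subset in combinations(xs, d))

xs = [1, 2, 3]
e1 = elementary_symmetric(1, xs)
e2 = elementary_symmetric(2, xs)
e3 = elementary_symmetric(3, xs)
print(e1, e2, e3)  # 6 11 6

# A symmetric polynomial written in terms of them:
# x1**2 + x2**2 + x3**2 = e1**2 - 2*e2
print(sum(x * x for x in xs), e1 * e1 - 2 * e2)  # 14 14
```

The values 6, 11, 6 are also the coefficients of (t + 1)(t + 2)(t + 3) = t³ + 6t² + 11t + 6.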

In mathematics, Schur polynomials, named after Issai Schur, are certain symmetric polynomials in n variables, indexed by partitions, that generalize the elementary symmetric polynomials and the complete homogeneous symmetric polynomials. In representation theory they are the characters of polynomial irreducible representations of the general linear groups. The Schur polynomials form a linear basis for the space of all symmetric polynomials. Any product of Schur polynomials can be written as a linear combination of Schur polynomials with non-negative integral coefficients; the values of these coefficients are given combinatorially by the Littlewood–Richardson rule. More generally, skew Schur polynomials are associated with pairs of partitions and have similar properties to Schur polynomials.

In mathematics, symmetrization is a process that converts any function in n variables to a symmetric function in n variables. Similarly, antisymmetrization converts any function in n variables into an antisymmetric function.
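One common way to carry out both processes is to sum the function over all orderings of its arguments, with a sign attached to each ordering in the antisymmetric case. The sketch below assumes that convention (some authors also divide by n!). All names are hypothetical.

```python
from itertools import permutations

def sign(perm):
    """+1 for an even permutation, -1 for an odd one, via inversion count."""
    n = len(perm)
    inversions = sum(1 for i in range(n) for j in range(i + 1, n) if perm[i] > perm[j])
    return -1 if inversions % 2 else 1

def symmetrize(f, n):
    """Return g(args) = sum of f over all reorderings of args."""
    def g(*args):
        return sum(f(*(args[i] for i in p)) for p in permutations(range(n)))
    return g

def antisymmetrize(f, n):
    """Return g(args) = signed sum of f over all reorderings of args."""
    def g(*args):
        return sum(sign(p) * f(*(args[i] for i in p)) for p in permutations(range(n)))
    return g

def f(x, y):
    return x - 2 * y  # neither symmetric nor antisymmetric

s = symmetrize(f, 2)      # s(x, y) = -x - y
a = antisymmetrize(f, 2)  # a(x, y) = 3x - 3y
print(s(3, 5), s(5, 3))   # -8 -8
print(a(3, 5), a(5, 3))   # -6 6
```

Swapping the arguments leaves `s` unchanged and flips the sign of `a`.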

In mathematics, specifically in commutative algebra, the power sum symmetric polynomials are a type of basic building block for symmetric polynomials, in the sense that every symmetric polynomial with rational coefficients can be expressed as a sum and difference of products of power sum symmetric polynomials with rational coefficients. However, not every symmetric polynomial with integral coefficients is generated by integral combinations of products of power-sum polynomials: they are a generating set over the rationals, but not over the integers.
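A small numeric illustration of why rational coefficients are needed: the degree-2 elementary symmetric polynomial satisfies e2 = (p1² − p2)/2, which involves the coefficient 1/2. The sketch below checks this identity at one point using exact fractions; the function names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

def power_sum(k, xs):
    """p_k(xs) = sum of the k-th powers of the values."""
    return sum(x ** k for x in xs)

def e2_from_power_sums(xs):
    """Evaluate e2 through the identity e2 = (p1**2 - p2) / 2."""
    p1, p2 = power_sum(1, xs), power_sum(2, xs)
    return Fraction(p1 * p1 - p2, 2)

def e2_direct(xs):
    """Evaluate e2 as the sum of all products of two distinct values."""
    return sum(a * b for a, b in combinations(xs, 2))

xs = [1, 2, 3]
print(power_sum(1, xs), power_sum(2, xs))     # 6 14
print(e2_from_power_sums(xs), e2_direct(xs))  # 11 11
```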

In mathematics, Macdonald polynomials Pλ(x; t, q) are a family of orthogonal symmetric polynomials in several variables, introduced by Macdonald in 1987. He later introduced a non-symmetric generalization in 1995. Macdonald originally associated his polynomials with weights λ of finite root systems and used just one variable t, but later realized that it is more natural to associate them with affine root systems rather than finite root systems, in which case the variable t can be replaced by several different variables t = (t1, ..., tk), one for each of the k orbits of roots in the affine root system. The Macdonald polynomials are polynomials in n variables x = (x1, ..., xn), where n is the rank of the affine root system. They generalize many other families of orthogonal polynomials, such as Jack polynomials, Hall–Littlewood polynomials, and Askey–Wilson polynomials, which in turn include most of the named 1-variable orthogonal polynomials as special cases. Koornwinder polynomials are Macdonald polynomials of certain non-reduced root systems. Macdonald polynomials have deep relationships with affine Hecke algebras and Hilbert schemes, which were used to prove several conjectures made by Macdonald about them.

In algebra, the Vandermonde polynomial of an ordered set of n variables X1, ..., Xn, named after Alexandre-Théophile Vandermonde, is the polynomial:

$$V_n = \prod_{1 \le i < j \le n} (X_j - X_i)$$
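A minimal sketch of evaluating this polynomial at a numeric point, assuming the common sign convention in which it is the product of (Xj − Xi) over all pairs i < j (some authors use the opposite sign). The function name is hypothetical and `math.prod` requires Python 3.8 or later.

```python
from itertools import combinations
from math import prod

def vandermonde_polynomial(xs):
    """Product of (xs[j] - xs[i]) over all index pairs i < j."""
    return prod(xs[j] - xs[i] for i, j in combinations(range(len(xs)), 2))

print(vandermonde_polynomial([1, 2, 4]))  # 6
print(vandermonde_polynomial([2, 1, 4]))  # -6: swapping two variables flips the sign
print(vandermonde_polynomial([3, 3, 4]))  # 0: vanishes when two variables coincide
```

The sign change under a swap is why the order of the variables matters in the definition.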

In mathematics, a function of n variables is symmetric if its value is the same no matter the order of its arguments. For example, a function f(x1, x2) of two arguments is a symmetric function if and only if f(x1, x2) = f(x2, x1) for all x1 and x2 such that (x1, x2) and (x2, x1) are in the domain of f. The most commonly encountered symmetric functions are polynomial functions, which are given by the symmetric polynomials.

In ring theory, a branch of mathematics, a ring R is a polynomial identity ring if there is, for some N > 0, an element P ≠ 0 of the free algebra, Z⟨X1, X2, ..., XN⟩, over the ring of integers in N variables X1, X2, ..., XN such that P(r1, r2, ..., rN) = 0 for all N-tuples r1, r2, ..., rN taken from R.

In mathematics, the ring of polynomial functions on a vector space V over a field k gives a coordinate-free analog of a polynomial ring. It is denoted by k[V]. If V is finite dimensional and is viewed as an algebraic variety, then k[V] is precisely the coordinate ring of V.

In mathematics, especially representation theory and combinatorics, a Frobenius characteristic map is an isometric isomorphism between the ring of characters of symmetric groups and the ring of symmetric functions. It builds a bridge between the representation theory of the symmetric groups and algebraic combinatorics, making it possible to study representation-theoretic problems with the help of symmetric functions and vice versa. The map is named after the German mathematician Ferdinand Georg Frobenius.