MP3 is a coding format for digital audio developed largely by the Fraunhofer Society in Germany under the leadership of Karlheinz Brandenburg, with support from other digital scientists in the United States and elsewhere. Originally defined as the third audio format of the MPEG-1 standard, it was retained and further extended, with additional bit rates and support for more audio channels, as the third audio format of the subsequent MPEG-2 standard. A third version, known as MPEG-2.5, extends the format to better support lower bit rates; it is commonly implemented but is not a recognized standard.

Speech coding is an application of data compression to digital audio signals containing speech. It models the speech signal by estimating speech-specific parameters with audio signal processing techniques, then applies generic data compression algorithms to represent the resulting parameters in a compact bitstream.

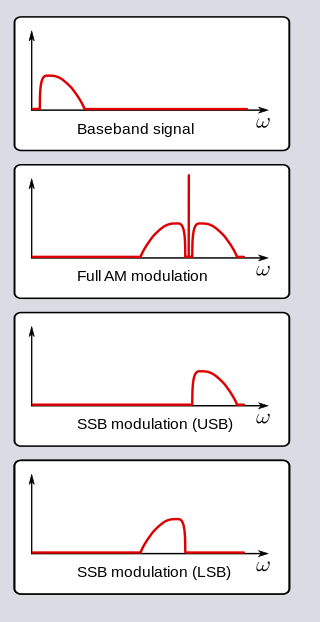

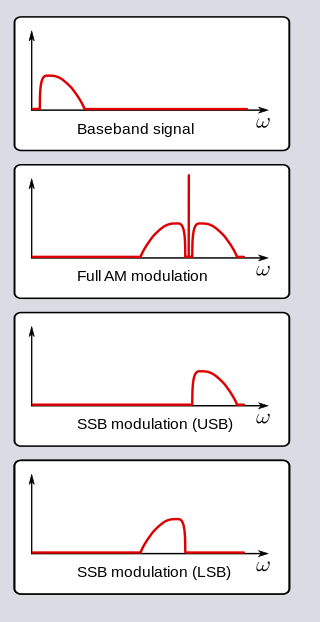

In radio communications, single-sideband modulation (SSB) or single-sideband suppressed-carrier modulation (SSB-SC) is a type of modulation used to transmit information, such as an audio signal, by radio waves. A refinement of amplitude modulation, it uses transmitter power and bandwidth more efficiently. Amplitude modulation produces an output signal the bandwidth of which is twice the maximum frequency of the original baseband signal. Single-sideband modulation avoids this bandwidth increase, and the power wasted on a carrier, at the cost of increased device complexity and more difficult tuning at the receiver.
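The bandwidth and carrier-power claims above can be checked numerically on a single test tone. The following is a minimal sketch, not a transmitter design: the window length `N`, the carrier and message frequencies `FC` and `FM`, and the helper `tone_amplitude` are all illustrative assumptions. It builds a full-carrier AM signal and an upper-sideband signal using the phasing method (message times cosine carrier, minus the 90-degree-shifted message times sine carrier), then measures the amplitude at the lower sideband, carrier, and upper sideband frequencies.

```python
import math

def tone_amplitude(signal, k):
    """Amplitude of the sinusoid at DFT bin k, computed with a single-bin DFT."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(signal))
    return 2 * math.hypot(re, im) / n

N = 256         # samples in the analysis window (assumed)
FC, FM = 40, 5  # carrier and message frequencies, in DFT bins (assumed)

phase_c = [2 * math.pi * FC * i / N for i in range(N)]
phase_m = [2 * math.pi * FM * i / N for i in range(N)]

# Full-carrier AM: (1 + m(t)) * cos(wc t), with m(t) = cos(wm t).
am = [(1 + math.cos(pm)) * math.cos(pc) for pm, pc in zip(phase_m, phase_c)]

# Upper-sideband SSB-SC, phasing method: m(t) cos(wc t) - m_hat(t) sin(wc t),
# where m_hat(t) = sin(wm t) is the 90-degree-shifted copy of the cosine message.
usb = [math.cos(pm) * math.cos(pc) - math.sin(pm) * math.sin(pc)
       for pm, pc in zip(phase_m, phase_c)]

for name, sig in (("AM", am), ("USB", usb)):
    amps = [round(tone_amplitude(sig, k), 3) for k in (FC - FM, FC, FC + FM)]
    print(name, "lower/carrier/upper amplitudes:", amps)
```

In this toy case the AM signal carries energy at all three frequencies, so it occupies twice the message bandwidth and spends power on the carrier, while the SSB signal carries energy only at the upper sideband.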

A vocoder is a category of speech coding that analyzes and synthesizes the human voice signal for audio data compression, multiplexing, voice encryption or voice transformation.

Linear predictive coding (LPC) is a method used mostly in audio signal processing and speech processing for representing the spectral envelope of a digital speech signal in compressed form, using the information of a linear predictive model.
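One common way to fit a linear predictive model is the autocorrelation method solved with the Levinson-Durbin recursion. The text above does not name a specific estimation method, so this choice, along with the function names `autocorr` and `levinson_durbin` and the test signal, is an assumption made for illustration. The sketch returns predictor coefficients `a` such that each sample is approximated as a weighted sum of the previous samples.

```python
def autocorr(x, max_lag):
    """Autocorrelation r[0..max_lag] of a finite sequence."""
    return [sum(x[i] * x[i + lag] for i in range(len(x) - lag))
            for lag in range(max_lag + 1)]

def levinson_durbin(r, order):
    """Solve the normal equations for the predictor
    x_hat[n] = sum(a[k] * x[n - 1 - k] for k in range(order)).
    Returns (a, residual_energy)."""
    a = []
    err = r[0]
    for m in range(1, order + 1):
        # Reflection coefficient for model order m.
        acc = r[m] - sum(a[k] * r[m - 1 - k] for k in range(m - 1))
        k_m = acc / err
        # Update the lower-order coefficients, then append the new one.
        a = [a[k] - k_m * a[m - 2 - k] for k in range(m - 1)] + [k_m]
        err *= (1.0 - k_m * k_m)
    return a, err

# Illustrative signal: a decaying exponential, which a first-order
# predictor with coefficient 0.9 describes almost exactly.
signal = [0.9 ** n for n in range(200)]
coeffs, residual = levinson_durbin(autocorr(signal, 2), order=2)
print(coeffs)
```

For this signal the first coefficient comes out very close to 0.9 and the second very close to 0, so two numbers summarize 200 samples. That is the sense in which the model represents the signal in compressed form.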

Advanced Audio Coding (AAC) is an audio coding standard for lossy digital audio compression. Designed to be the successor of the MP3 format, AAC generally achieves higher sound quality than MP3 encoders at the same bit rate.

A polyphase quadrature filter, or PQF, is a filter bank which splits an input signal into a given number N of equidistant sub-bands. These sub-bands are subsampled by a factor of N, so they are critically sampled. An important application of polyphase filters is the filtering and decimation of wideband input signals, e.g. from a high-rate ADC, which cannot be processed directly by an FPGA or, in some cases, by an ASIC. If the interface between the ADC and the FPGA/ASIC demultiplexes the ADC samples into N internal registers, the polyphase filter transforms the canonical structure of the decimating FIR filter into N parallel branches clocked at 1/N of the ADC clock, allowing digital processing when N = Clock(ADC)/Clock(FPGA).
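The decimator restructuring described above can be sketched in software. This is a minimal illustration under assumed names (`fir_then_decimate`, `polyphase_decimate`) and arbitrary test data, not hardware code: it splits the FIR taps into N branches, runs each branch only at the output rate, and produces the same samples as filtering at the full rate and then discarding N-1 of every N outputs.

```python
def fir_then_decimate(x, h, n):
    """Reference: filter at the full input rate, then keep every n-th output."""
    y = [sum(h[k] * x[i - k] for k in range(len(h)) if i - k >= 0)
         for i in range(len(x))]
    return y[::n]

def polyphase_decimate(x, h, n):
    """Same result, computed with n branches that each run at 1/n of the input rate."""
    out_len = (len(x) + n - 1) // n
    out = [0.0] * out_len
    for p in range(n):
        branch_taps = h[p::n]  # taps h[p], h[p + n], h[p + 2n], ...
        for m in range(out_len):
            acc = 0.0
            for j, tap in enumerate(branch_taps):
                idx = (m - j) * n - p  # branch p sees input samples x[m*n - p]
                if idx >= 0:
                    acc += tap * x[idx]
            out[m] += acc
    return out

# Arbitrary test data (assumed): 50 input samples, 10 taps, decimation by 4.
x = [float((7 * i) % 11 - 5) for i in range(50)]
h = [0.1 * (k + 1) for k in range(10)]
direct = fir_then_decimate(x, h, 4)
poly = polyphase_decimate(x, h, 4)
print(max(abs(a - b) for a, b in zip(direct, poly)))
```

Each branch touches only every fourth input sample and only a quarter of the taps, which is why each branch can be clocked at a quarter of the input rate.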

MPEG-4 Part 3 or MPEG-4 Audio is the third part of the ISO/IEC MPEG-4 international standard developed by Moving Picture Experts Group. It specifies audio coding methods. The first version of ISO/IEC 14496-3 was published in 1999.

Digital Radio Mondiale (DRM) is a set of digital audio broadcasting technologies designed to work over the bands currently used for analogue radio broadcasting, including AM broadcasting (particularly shortwave) and FM broadcasting. DRM is more spectrally efficient than AM and FM, allowing more stations, at higher quality, into a given amount of bandwidth, using the xHE-AAC audio coding format. Various other MPEG-4 and Opus codecs are also compatible, but the standard now specifies xHE-AAC.

A spectrum analyzer measures the magnitude of an input signal versus frequency within the full frequency range of the instrument. The primary use is to measure the power of the spectrum of known and unknown signals. The input signal that most common spectrum analyzers measure is electrical; however, spectral compositions of other signals, such as acoustic pressure waves and optical light waves, can be considered through the use of an appropriate transducer. Spectrum analyzers for other types of signals also exist, such as optical spectrum analyzers which use direct optical techniques such as a monochromator to make measurements.

Harmonic and Individual Lines and Noise (HILN) is a parametric codec for audio. The basic premise of the encoder is that most audio, and particularly speech, can be synthesized from only sinusoids and noise. The encoder describes individual sinusoids by amplitude and frequency; harmonic tones by fundamental frequency, amplitude, and the spectral envelope of the partials; and noise by amplitude and spectral envelope. This type of encoder is capable of encoding audio at between 6 and 16 kilobits per second for a typical audio bandwidth of 8 kHz. The frame length of this encoder is 32 ms.
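The figures above imply a small bit budget for each frame. The short calculation below works it out. The function name `bits_per_frame` is illustrative, and the 16 kHz sampling rate is an assumption: it is the minimum rate that can carry an 8 kHz audio bandwidth and is not stated in the text.

```python
FRAME_MS = 32  # frame length given in the text

def bits_per_frame(bit_rate_bps, frame_ms=FRAME_MS):
    """Bits available to describe one frame at a given bit rate."""
    return bit_rate_bps * frame_ms // 1000

low = bits_per_frame(6_000)    # budget at 6 kbit/s
high = bits_per_frame(16_000)  # budget at 16 kbit/s

# Assumed sampling rate: twice the 8 kHz audio bandwidth.
ASSUMED_SAMPLE_RATE_HZ = 16_000
samples_per_frame = ASSUMED_SAMPLE_RATE_HZ * FRAME_MS // 1000

print(low, high, samples_per_frame)
```

Under these assumptions each frame of 512 samples is described with between 192 and 512 bits, that is, at most about one bit per sample. That budget is only plausible because the encoder transmits model parameters rather than the waveform itself.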

Spectral band replication (SBR) is a technology to enhance audio or speech codecs, especially at low bit rates, and is based on harmonic redundancy in the frequency domain.

Harmonic Vector Excitation Coding, abbreviated as HVXC, is a speech coding algorithm specified in the MPEG-4 Part 3 standard for very low bit rate speech coding. HVXC supports bit rates of 2 and 4 kbit/s in the fixed and variable bit rate modes at a sampling frequency of 8 kHz. It also operates at lower bit rates, such as 1.2–1.7 kbit/s, using a variable bit rate technique. The total algorithmic delay for the encoder and decoder is 36 ms.

Code-excited linear prediction (CELP) is a linear predictive speech coding algorithm originally proposed by Manfred R. Schroeder and Bishnu S. Atal in 1985. At the time, it provided significantly better quality than existing low bit-rate algorithms, such as residual-excited linear prediction (RELP) and linear predictive coding (LPC) vocoders. Along with its variants, such as algebraic CELP, relaxed CELP, low-delay CELP and vector sum excited linear prediction, it is currently the most widely used speech coding algorithm. It is also used in MPEG-4 Audio speech coding. CELP is commonly used as a generic term for a class of algorithms and not for a particular codec.

High-Efficiency Advanced Audio Coding (HE-AAC) is an audio coding format for lossy data compression of digital audio defined as an MPEG-4 Audio profile in ISO/IEC 14496-3. It is an extension of Low Complexity AAC (AAC-LC) optimized for low-bitrate applications such as streaming audio. The usage profile HE-AAC v1 uses spectral band replication (SBR) to enhance the modified discrete cosine transform (MDCT) compression efficiency in the frequency domain. The usage profile HE-AAC v2 couples SBR with Parametric Stereo (PS) to further enhance the compression efficiency of stereo signals.

Secure voice is a term in cryptography for the encryption of voice communication over a range of communication types such as radio, telephone or IP.

A pitch detection algorithm (PDA) is an algorithm designed to estimate the pitch or fundamental frequency of a quasiperiodic or oscillating signal, usually a digital recording of speech or a musical note or tone. This can be done in the time domain, the frequency domain, or both.
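A simple time-domain approach is to find the lag at which the signal best matches a shifted copy of itself. The sketch below uses plain autocorrelation for that purpose. The function name `estimate_pitch`, the 50 to 400 Hz search range, and the synthetic test tone are assumptions for illustration; practical detectors add normalization, interpolation, and voicing decisions that are omitted here.

```python
import math

def estimate_pitch(x, fs, f_min=50.0, f_max=400.0):
    """Estimate the fundamental frequency (Hz) from the autocorrelation peak."""
    lag_lo = int(fs / f_max)  # shortest period considered, in samples
    lag_hi = int(fs / f_min)  # longest period considered, in samples

    def r(lag):
        return sum(x[i] * x[i + lag] for i in range(len(x) - lag))

    best_lag = max(range(lag_lo, lag_hi + 1), key=r)
    return fs / best_lag

# Synthetic test tone (assumed): 200 Hz fundamental plus a weaker 400 Hz harmonic.
FS = 8000
tone = [math.sin(2 * math.pi * 200 * n / FS) + 0.5 * math.sin(2 * math.pi * 400 * n / FS)
        for n in range(800)]
f0 = estimate_pitch(tone, FS)
print(f0)
```

The unnormalized autocorrelation sums fewer products at longer lags, so among the candidate lags at multiples of the true period the shortest one scores highest. This helps the sketch avoid reporting a subharmonic on this clean test tone.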

Parametric stereo is an audio compression algorithm used as an audio coding format for digital audio. It is considered an Audio Object Type of MPEG-4 Part 3 that serves to enhance the coding efficiency of low bandwidth stereo audio media. Parametric Stereo digitally codes a stereo audio signal by storing the audio as monaural alongside a small amount of extra information. This extra information describes how the monaural signal will behave across both stereo channels, which allows for the signal to exist in true stereo upon playback.
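The idea of a mono signal plus a small amount of side information can be shown with a deliberately reduced toy model. In this sketch the side information is a single left/right level ratio for the whole signal. That is a strong simplification chosen for illustration, and the names `ps_encode` and `ps_decode` are hypothetical; the actual MPEG-4 tool works per frequency band and uses further cues that are not modeled here.

```python
import math

def ps_encode(left, right):
    """Downmix to mono and keep one parameter: the left/right level ratio.
    Assumes the right channel is not silent."""
    mono = [(l + r) / 2 for l, r in zip(left, right)]
    energy_l = sum(l * l for l in left)
    energy_r = sum(r * r for r in right)
    return mono, math.sqrt(energy_l / energy_r)

def ps_decode(mono, level_ratio):
    """Rebuild two channels whose level ratio matches the stored parameter
    and whose average equals the mono signal."""
    gain_r = 2.0 / (1.0 + level_ratio)
    gain_l = level_ratio * gain_r
    return [gain_l * m for m in mono], [gain_r * m for m in mono]

# Toy source (assumed): one tone panned mostly to the left.
source = [math.sin(2 * math.pi * 5 * n / 100) for n in range(100)]
left = [0.8 * s for s in source]
right = [0.2 * s for s in source]

mono, ratio = ps_encode(left, right)
left_out, right_out = ps_decode(mono, ratio)
print(ratio, max(abs(a - b) for a, b in zip(left, left_out)))
```

For a single amplitude-panned source this toy model reconstructs both channels exactly from one mono channel and one number. Real stereo content is not this simple, which is why the approach is lossy and targets low-bit-rate use.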

In mathematics and signal processing, the constant-Q transform and variable-Q transform, known simply as CQT and VQT, transform a data series to the frequency domain. They are related to the Fourier transform and very closely related to the complex Morlet wavelet transform. Their design is suited to musical representation.
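What makes the transform "constant-Q" is that the bin center frequencies are spaced geometrically, so the ratio of center frequency to bandwidth is the same for every bin. The sketch below computes that layout using the commonly cited formulation with a fixed number of bins per octave. The function name `cqt_bins` and the parameter values (55 Hz lowest bin, 12 bins per octave, 44.1 kHz sampling) are assumptions chosen to match a musical semitone scale.

```python
import math

def cqt_bins(f_min, bins_per_octave, n_bins, fs):
    """Return (Q, [(center_frequency_hz, window_length_samples), ...])."""
    # Q is the ratio of center frequency to the spacing between adjacent bins.
    q = 1.0 / (2 ** (1.0 / bins_per_octave) - 1.0)
    bins = []
    for k in range(n_bins):
        f_k = f_min * 2 ** (k / bins_per_octave)  # geometric spacing
        window = math.ceil(q * fs / f_k)          # Q periods of f_k, in samples
        bins.append((f_k, window))
    return q, bins

q, bins = cqt_bins(f_min=55.0, bins_per_octave=12, n_bins=25, fs=44100)
print(round(q, 3))
for f_k, window in bins[::12]:
    print(round(f_k, 2), window)
```

Twelve bins above the lowest one, the center frequency has doubled and the analysis window has halved. Low notes therefore get long windows and fine frequency resolution, while high notes get short windows and fine time resolution, which fits how musical pitch is organized.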

Constrained Energy Lapped Transform (CELT) is an open, royalty-free lossy audio compression format and a free software codec with especially low algorithmic delay for use in low-latency audio communication. The algorithms are openly documented and may be used free of software patent restrictions. Development of the format was maintained by the Xiph.Org Foundation and later coordinated by the Opus working group of the Internet Engineering Task Force (IETF).