In speech science and phonetics, a formant is the broad spectral maximum that results from an acoustic resonance of the human vocal tract. In acoustics, a formant is usually defined as a broad peak, or local maximum, in the spectrum. For harmonic sounds, with this definition, the formant frequency is sometimes taken as that of the harmonic that is most augmented by a resonance. The difference between these two definitions resides in whether "formants" characterise the production mechanisms of a sound or the produced sound itself. In practice, the frequency of a spectral peak differs slightly from the associated resonance frequency, except when, by luck, harmonics are aligned with the resonance frequency, or when the sound source is mostly non-harmonic, as in whispering and vocal fry.

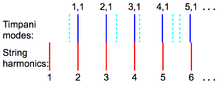

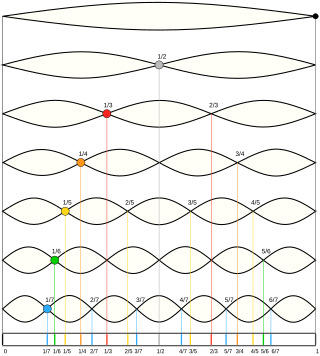

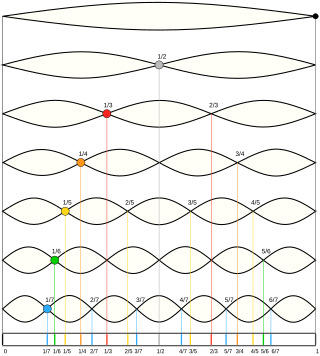

In physics, acoustics, and telecommunications, a harmonic is a sinusoidal wave with a frequency that is a positive integer multiple of the fundamental frequency of a periodic signal. The fundamental frequency is also called the 1st harmonic; the other harmonics are known as higher harmonics. As all harmonics are periodic at the fundamental frequency, the sum of harmonics is also periodic at that frequency. The set of harmonics forms a harmonic series.
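The periodicity claim can be checked numerically. The following is a minimal sketch, not a reference implementation: the function name `harmonic_sum`, the 100 Hz fundamental, and the amplitudes are all assumptions chosen for illustration.

```python
import math

def harmonic_sum(t, f0, amplitudes):
    """Sum of harmonics k*f0 (k = 1, 2, ...) evaluated at time t (seconds)."""
    return sum(a * math.sin(2 * math.pi * k * f0 * t)
               for k, a in enumerate(amplitudes, start=1))

f0 = 100.0                      # assumed fundamental frequency in Hz
amplitudes = [1.0, 0.5, 0.25]   # assumed amplitudes of harmonics 1 to 3
period = 1.0 / f0               # period of the fundamental

# Largest difference between the waveform and its copy shifted by one period.
max_difference = max(
    abs(harmonic_sum(t, f0, amplitudes) - harmonic_sum(t + period, f0, amplitudes))
    for t in (0.0, 0.0013, 0.0042, 0.0077)
)
```

Because every harmonic completes a whole number of cycles in one fundamental period, `max_difference` is zero up to floating-point rounding.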

In music, timbre, also known as tone color or tone quality, is the perceived sound quality of a musical note, sound or tone. Timbre distinguishes different types of sound production, such as choir voices and musical instruments. It also enables listeners to distinguish different instruments in the same category.

Pitch is a perceptual property that allows sounds to be ordered on a frequency-related scale, or more commonly, pitch is the quality that makes it possible to judge sounds as "higher" and "lower" in the sense associated with musical melodies. Pitch is a major auditory attribute of musical tones, along with duration, loudness, and timbre.

Auditory illusions are false perceptions of a real sound or outside stimulus. They are the auditory equivalent of an optical illusion: the listener hears either sounds that are not present in the stimulus, or sounds that should not be possible given how they were created.

The tritone paradox is an auditory illusion in which a sequentially played pair of Shepard tones separated by an interval of a tritone, or half octave, is heard as ascending by some people and as descending by others. Different populations tend to favor one of a limited set of different spots around the chromatic circle as central to the set of "higher" tones. Roger Shepard in 1963 had argued that such tone pairs would be heard ambiguously as either ascending or descending. However, psychology of music researcher Diana Deutsch in 1986 discovered that when the judgments of individual listeners were considered separately, their judgments depended on the positions of the tones along the chromatic circle. For example, one listener would hear the tone pair C–F♯ as ascending and the tone pair G–C♯ as descending. Yet another listener would hear the tone pair C–F♯ as descending and the tone pair G–C♯ as ascending. Furthermore, the way these tone pairs were perceived varied depending on the listener's language or dialect.
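The "half octave" relation can be made concrete with a little pitch-class arithmetic. This sketch assumes twelve-tone equal temperament and uses a hypothetical helper, `pitch_class_after`; it shows only that moving up or down a tritone lands on the same note name, which is the ambiguity the illusion relies on.

```python
import math

SEMITONES_PER_OCTAVE = 12
TRITONE = 6  # semitones, i.e. half an octave

# Equal-tempered frequency ratio of a tritone (assumption: 12-tone equal temperament).
ratio = 2 ** (TRITONE / SEMITONES_PER_OCTAVE)

NOTE_NAMES = ["C", "C♯", "D", "D♯", "E", "F", "F♯", "G", "G♯", "A", "A♯", "B"]

def pitch_class_after(name, semitones):
    """Note name reached by moving `semitones` steps around the chromatic circle."""
    index = (NOTE_NAMES.index(name) + semitones) % SEMITONES_PER_OCTAVE
    return NOTE_NAMES[index]

up = pitch_class_after("C", +TRITONE)    # six semitones upward from C
down = pitch_class_after("C", -TRITONE)  # six semitones downward from C
```

Both `up` and `down` are F♯, and `ratio` equals the square root of 2. Since the two directions lead to the same pitch class, the direction a listener reports has to come from something other than the note names themselves.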

In psychoacoustics, a pure tone is a sound with a sinusoidal waveform; that is, a sine wave of constant frequency, phase-shift, and amplitude. By extension, in signal processing a single-frequency tone or pure tone is a purely sinusoidal signal. A pure tone has the property – unique among real-valued wave shapes – that its wave shape is unchanged by linear time-invariant systems; that is, only the phase and amplitude change between such a system's pure-tone input and its output.
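The invariance property can be illustrated with a small discrete-time experiment. This sketch passes a sampled sine through an arbitrary FIR filter (one kind of linear time-invariant system) and compares the output with a sinusoid of the same frequency, scaled and shifted according to the filter's frequency response. The filter coefficients, the tone frequency, and the helper names are assumptions made for the example.

```python
import cmath
import math

def fir_filter(x, h):
    """Causal FIR filter: y[n] = sum_k h[k] * x[n - k], with zero initial state."""
    return [sum(h[k] * x[n - k] for k in range(len(h)) if n - k >= 0)
            for n in range(len(x))]

def frequency_response(h, omega):
    """H(e^{j*omega}) = sum_k h[k] * exp(-j*omega*k)."""
    return sum(hk * cmath.exp(-1j * omega * k) for k, hk in enumerate(h))

omega = 2 * math.pi * 0.05   # assumed tone frequency: 0.05 cycles per sample
h = [0.5, 0.3, 0.2]          # assumed (arbitrary) real filter coefficients
n_samples = 200

x = [math.sin(omega * n) for n in range(n_samples)]
y = fir_filter(x, h)

H = frequency_response(h, omega)
gain, phase = abs(H), cmath.phase(H)
predicted = [gain * math.sin(omega * n + phase) for n in range(n_samples)]

# Skip the first len(h) - 1 samples, where the filter is still filling up.
max_error = max(abs(y[n] - predicted[n]) for n in range(len(h) - 1, n_samples))
```

After the start-up transient, `max_error` is at the level of floating-point rounding: the output is still a sine at the input frequency, and only its amplitude (`gain`) and phase (`phase`) have changed.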

An equal-loudness contour is a measure of sound pressure level, over the frequency spectrum, for which a listener perceives a constant loudness when presented with pure steady tones. The unit of measurement for loudness levels is the phon and is arrived at by reference to equal-loudness contours. By definition, two sine waves of differing frequencies are said to have equal-loudness level measured in phons if they are perceived as equally loud by the average young person without significant hearing impairment.

A pitch detection algorithm (PDA) is an algorithm designed to estimate the pitch or fundamental frequency of a quasiperiodic or oscillating signal, usually a digital recording of speech or a musical note or tone. This can be done in the time domain, the frequency domain, or both.
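As one illustration of a time-domain approach, the sketch below estimates the fundamental frequency from the lag at which the signal's autocorrelation is largest. It is a deliberately simple example under stated assumptions, not a description of any particular published PDA: the function name `estimate_f0`, the 50 to 500 Hz search range, and the synthetic test signal are all hypothetical.

```python
import math

def estimate_f0(samples, sample_rate, f_min=50.0, f_max=500.0):
    """Estimate the fundamental frequency (Hz) from the autocorrelation peak.

    Only lags corresponding to frequencies between f_min and f_max are searched.
    """
    lag_min = int(sample_rate / f_max)
    lag_max = int(sample_rate / f_min)
    best_lag, best_score = lag_min, float("-inf")
    for lag in range(lag_min, lag_max + 1):
        # Un-normalised autocorrelation at this lag.
        score = sum(samples[n] * samples[n + lag]
                    for n in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag

# Synthetic test signal (assumed): 200 Hz fundamental plus a weaker 2nd harmonic.
sample_rate = 8000
signal = [math.sin(2 * math.pi * 200 * n / sample_rate)
          + 0.5 * math.sin(2 * math.pi * 400 * n / sample_rate)
          for n in range(2000)]

f0_estimate = estimate_f0(signal, sample_rate)
```

The un-normalised sum uses fewer terms at longer lags, so it mildly favours the shortest repeating period over its multiples. That helps in this clean synthetic case; real recordings generally need normalisation, windowing, and interpolation between lags for finer frequency resolution.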

In audiology and psychoacoustics the concept of critical bands, introduced by Harvey Fletcher in 1933 and refined in 1940, describes the frequency bandwidth of the "auditory filter" created by the cochlea, the sense organ of hearing within the inner ear. Roughly, the critical band is the band of audio frequencies within which a second tone will interfere with the perception of the first tone by auditory masking.

Volley theory states that groups of neurons of the auditory system respond to a sound by firing action potentials slightly out of phase with one another so that when combined, a greater frequency of sound can be encoded and sent to the brain to be analyzed. The theory was proposed by Ernest Wever and Charles Bray in 1930 as a supplement to the frequency theory of hearing. It was later discovered that this only occurs in response to sounds that are about 500 Hz to 5000 Hz.

The temporal theory of hearing, also called frequency theory or timing theory, states that human perception of sound depends on temporal patterns with which neurons respond to sound in the cochlea. Therefore, in this theory, the pitch of a pure tone is determined by the period of neuron firing patterns—either of single neurons, or groups as described by the volley theory. Temporal theory competes with the place theory of hearing, which instead states that pitch is signaled according to the locations of vibrations along the basilar membrane.

Bandwidth extension of a signal is the deliberate process of expanding the frequency range (bandwidth) in which the signal contains appreciable and useful content, and/or the frequency range in which its effects are such. Significant advances in recent years have led to the technology being adopted commercially in several areas, including psychoacoustic bass enhancement of small loudspeakers and high-frequency enhancement of coded speech and audio.

Computational auditory scene analysis (CASA) is the study of auditory scene analysis by computational means. In essence, CASA systems are "machine listening" systems that aim to separate mixtures of sound sources in the same way that human listeners do. CASA differs from the field of blind signal separation in that it is based on the mechanisms of the human auditory system, and thus uses no more than two microphone recordings of an acoustic environment. It is related to the cocktail party problem.

In audio signal processing, auditory masking occurs when the perception of one sound is affected by the presence of another sound.

In physics, sound is a vibration that propagates as an acoustic wave through a transmission medium such as a gas, liquid or solid. In human physiology and psychology, sound is the reception of such waves and their perception by the brain. Only acoustic waves that have frequencies lying between about 20 Hz and 20 kHz, the audio frequency range, elicit an auditory percept in humans. In air at atmospheric pressure, these represent sound waves with wavelengths of 17 meters (56 ft) to 1.7 centimeters (0.67 in). Sound waves above 20 kHz are known as ultrasound and are not audible to humans. Sound waves below 20 Hz are known as infrasound. Different animal species have varying hearing ranges.
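The quoted wavelengths follow from the relation wavelength = speed / frequency. The sketch below assumes a speed of sound of 343 m/s, a commonly used value for dry air at about 20 °C; that figure and the helper name `wavelength_m` are not taken from the text above.

```python
SPEED_OF_SOUND_AIR = 343.0  # m/s, assumed value for dry air at about 20 °C

def wavelength_m(frequency_hz, speed=SPEED_OF_SOUND_AIR):
    """Wavelength in metres of a sound wave with the given frequency."""
    return speed / frequency_hz

longest = wavelength_m(20.0)        # lower limit of the audio range
shortest = wavelength_m(20_000.0)   # upper limit of the audio range
```

With these assumptions `longest` is about 17 m and `shortest` is about 0.017 m (1.7 cm), matching the figures given for the audible range.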

Psychoacoustics is the branch of psychophysics involving the scientific study of the perception of sound by the human auditory system. It is the branch of science studying the psychological responses associated with sound including noise, speech, and music. Psychoacoustics is an interdisciplinary field including psychology, acoustics, electronic engineering, physics, biology, physiology, and computer science.

Ernst Terhardt is a German engineer and psychoacoustician who made significant contributions in diverse areas of audio communication including pitch perception, music cognition, and Fourier transformation. He was professor in the area of acoustic communication at the Institute of Electroacoustics, Technical University of Munich, Germany.

Temporal envelope (ENV) and temporal fine structure (TFS) are changes in the amplitude and frequency of sound perceived by humans over time. These temporal changes are responsible for several aspects of auditory perception, including loudness, pitch and timbre perception and spatial hearing.

Brian C.J. Moore FMedSci, FRS is an Emeritus Professor of Auditory Perception in the University of Cambridge and an Emeritus Fellow of Wolfson College, Cambridge. His research focuses on psychoacoustics, audiology, and the development and assessment of hearing aids.