Related Research Articles

In computing, scheduling is the action of assigning resources to perform tasks. The resources may be processors, network links or expansion cards. The tasks may be threads, processes or data flows.

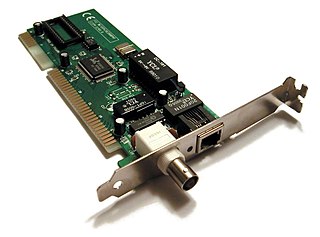

A network interface controller is a computer hardware component that connects a computer to a computer network.

Explicit Congestion Notification (ECN) is an extension to the Internet Protocol and to the Transmission Control Protocol and is defined in RFC 3168 (2001). ECN allows end-to-end notification of network congestion without dropping packets. ECN is an optional feature that may be used between two ECN-enabled endpoints when the underlying network infrastructure also supports it.

Network congestion in data networking and queueing theory is the reduced quality of service that occurs when a network node or link is carrying more data than it can handle. Typical effects include queueing delay, packet loss or the blocking of new connections. A consequence of congestion is that an incremental increase in offered load leads either only to a small increase or even a decrease in network throughput.

Deficit Round Robin (DRR), also Deficit Weighted Round Robin (DWRR), is a scheduling algorithm for the network scheduler. DRR is, like weighted fair queuing (WFQ), a packet-based implementation of the ideal Generalized Processor Sharing (GPS) policy. It was proposed by M. Shreedhar and G. Varghese in 1995 as an efficient and fair algorithm.

FAST TCP is a TCP congestion avoidance algorithm especially targeted at long-distance, high latency links, developed at the Netlab, California Institute of Technology and now being commercialized by FastSoft. FastSoft was acquired by Akamai Technologies in 2012.

Class-based queuing (CBQ) is a queuing discipline for the network scheduler that allows traffic to share bandwidth equally, after being grouped by classes. The classes can be based upon a variety of parameters, such as priority, interface, or originating program.

Netfilter is a framework provided by the Linux kernel that allows various networking-related operations to be implemented in the form of customized handlers. Netfilter offers various functions and operations for packet filtering, network address translation, and port translation, which provide the functionality required for directing packets through a network and prohibiting packets from reaching sensitive locations within a network.

Random early detection (RED), also known as random early discard or random early drop is a queuing discipline for a network scheduler suited for congestion avoidance.

Linux Virtual Server (LVS) is load balancing software for Linux kernel–based operating systems.

Transmission Control Protocol (TCP) uses a congestion control algorithm that includes various aspects of an additive increase/multiplicative decrease (AIMD) scheme, along with other schemes including slow start and congestion window (CWND), to achieve congestion avoidance. The TCP congestion-avoidance algorithm is the primary basis for congestion control in the Internet. Per the end-to-end principle, congestion control is largely a function of internet hosts, not the network itself. There are several variations and versions of the algorithm implemented in protocol stacks of operating systems of computers that connect to the Internet.

IP traceback is any method for reliably determining the origin of a packet on the Internet. The IP protocol does not provide for the authentication of the source IP address of an IP packet, enabling the source address to be falsified in a strategy called IP address spoofing, and creating potential internet security and stability problems.

TCP Vegas is a TCP congestion avoidance algorithm that emphasizes packet delay, rather than packet loss, as a signal to help determine the rate at which to send packets. It was developed at the University of Arizona by Lawrence Brakmo and Larry L. Peterson and introduced in 1994.

Bandwidth management is the process of measuring and controlling the communications on a network link, to avoid filling the link to capacity or overfilling the link, which would result in network congestion and poor performance of the network. Bandwidth is described by bit rate and measured in units of bits per second (bit/s) or bytes per second (B/s).

Compound TCP (CTCP) is a Microsoft algorithm that was introduced as part of the Windows Vista and Window Server 2008 TCP stack. It is designed to aggressively adjust the sender's congestion window to optimise TCP for connections with large bandwidth-delay products while trying not to harm fairness. It is also available for Linux, as well as for Windows XP and Windows Server 2003 via a hotfix.

In routers and switches, active queue management (AQM) is the policy of dropping packets inside a buffer associated with a network interface controller (NIC) before that buffer becomes full, often with the goal of reducing network congestion or improving end-to-end latency. This task is performed by the network scheduler, which for this purpose uses various algorithms such as random early detection (RED), Explicit Congestion Notification (ECN), or controlled delay (CoDel). RFC 7567 recommends active queue management as a best practice.

SYN cookie is a technique used to resist SYN flood attacks. The technique's primary inventor Daniel J. Bernstein defines SYN cookies as "particular choices of initial TCP sequence numbers by TCP servers." In particular, the use of SYN cookies allows a server to avoid dropping connections when the SYN queue fills up. Instead of storing additional connections, a SYN queue entry is encoded into the sequence number sent in the SYN+ACK response. If the server then receives a subsequent ACK response from the client with the incremented sequence number, the server is able to reconstruct the SYN queue entry using information encoded in the TCP sequence number and proceed as usual with the connection.

Robust random early detection (RRED) is a queueing discipline for a network scheduler. The existing random early detection (RED) algorithm and its variants are found vulnerable to emerging attacks, especially the Low-rate Denial-of-Service attacks (LDoS). Experiments have confirmed that the existing RED-like algorithms are notably vulnerable under LDoS attacks due to the oscillating TCP queue size caused by the attacks.

CoDel is an active queue management (AQM) algorithm in network routing, developed by Van Jacobson and Kathleen Nichols and published as RFC8289. It is designed to overcome bufferbloat in networking hardware, such as routers, by setting limits on the delay network packets experience as they pass through buffers in this equipment. CoDel aims to improve on the overall performance of the random early detection (RED) algorithm by addressing some of its fundamental misconceptions, as perceived by Jacobson, and by being easier to manage.

A network scheduler, also called packet scheduler, queueing discipline (qdisc) or queueing algorithm, is an arbiter on a node in a packet switching communication network. It manages the sequence of network packets in the transmit and receive queues of the protocol stack and network interface controller. There are several network schedulers available for the different operating systems, that implement many of the existing network scheduling algorithms.

References

- ↑ Wu-chang Feng; Dilip D. Kandlur; Debanjan Saha; Kang G. Shin (April 1999). "BLUE: A New Class of Active Queue Management Algorithms" (PDF). Computer Science Technical Report. University of Michigan (CSE–TR–387–99). Retrieved June 8, 2013.

- ↑ Wu-Chang Feng; Dilip D. Kandlur; Debanjan Saha; Kang G. Shin (April 2001). "Stochastic fair blue: A queue management algorithm for enforcing fairness". Proceedings IEEE INFOCOM 2001. Conference on Computer Communications. Twentieth Annual Joint Conference of the IEEE Computer and Communications Society (Cat. No.01CH37213) (PDF). Vol. 3. pp. 1520–1529. CiteSeerX 10.1.1.11.4235 . doi:10.1109/INFCOM.2001.916648. ISBN 978-0-7803-7016-6. S2CID 5902623 . Retrieved June 8, 2013.

- ↑ Changwang Zhang; Jianping Yin & Zhiping Cai (2009). RSFB: a Resilient Stochastic Fair Blue algorithm against spoofing DDoS attacks (PDF). International Symposium on Communication and Information Technology (ISCIT). pp. 1566–1567. ISBN 978-1-4244-4521-9 . Retrieved June 8, 2013. Abstract

- ↑ Wu-chang Feng. "Blue". Web page. Retrieved June 8, 2013.

- ↑ Kernel Newbies - Linux 2.6.39 - Networking

- ↑ "SFB Linux kernel network scheduler module". kernel.org . Retrieved 2013-09-07.

- ↑ Juliusz Chroboczek. "Stochastic Fair Blue for the Linux kernel" . Retrieved June 8, 2013.