| c-chart | |
|---|---|
| Originally proposed by | Walter A. Shewhart |
| Process observations | |
| Rational subgroup size | n > 1 |
| Measurement type | Number of nonconformances in a sample |
| Quality characteristic type | Attributes data |
| Underlying distribution | Poisson distribution |
| Performance | |
| Size of shift to detect | ≥ 1.5σ |
| Process variation chart | |
| Not applicable | |
| Process mean chart | |
| Center line | $\bar{c}$, the mean number of nonconformances per sample |
| Control limits | $\bar{c} \pm 3\sqrt{\bar{c}}$ |
| Plotted statistic | $c_i$, the number of nonconformances in sample $i$ |

In statistical quality control, the c-chart is a type of control chart used to monitor "count"-type data, typically the total number of nonconformities per unit. [1] It is also occasionally used to monitor the total number of events occurring in a given unit of time.

Statistical process control (SPC) is a method of quality control which employs statistical methods to monitor and control a process. This helps to ensure that the process operates efficiently, producing more specification-conforming products with less waste. SPC can be applied to any process where the "conforming product" output can be measured. Key tools used in SPC include run charts, control charts, a focus on continuous improvement, and the design of experiments. Manufacturing lines are a typical example of a process where SPC is applied.

Control charts, also known as Shewhart charts or process-behavior charts, are a statistical process control tool used to determine if a manufacturing or business process is in a state of control. More precisely, control charts are the graphical device for Statistical Process Monitoring (SPM). Traditional control charts are mostly designed to monitor process parameters when the underlying form of the process distribution is known. However, more advanced techniques available in the 21st century allow incoming data streams to be monitored even without any knowledge of the underlying process distribution. Such distribution-free control charts are becoming increasingly popular.

The c-chart differs from the p-chart in that it accounts for the possibility of more than one nonconformity per inspection unit, and in that (unlike the p-chart and u-chart) it requires a fixed sample size. The p-chart models "pass"/"fail"-type inspection only, while the c-chart (and u-chart) can distinguish between, for example, two items that fail inspection because of one fault each and the same two items failing inspection with five faults each. In both cases the p-chart shows two nonconforming items, while the c-chart shows 2 faults in the former case and 10 faults in the latter.
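The distinction can be illustrated with a minimal sketch. The function names and the two small samples are hypothetical and chosen only to mirror the two-item example; each list holds the number of faults found on each inspected item.

```python
def nonconforming_items(fault_counts):
    """Pass/fail view (p-chart style): count items with at least one fault."""
    return sum(1 for faults in fault_counts if faults > 0)


def total_nonconformities(fault_counts):
    """Count view (c-chart style): count every fault on every item."""
    return sum(fault_counts)


# Hypothetical samples: two items with one fault each, then five faults each.
one_fault_each = [1, 1]
five_faults_each = [5, 5]

for label, sample in [("one fault each", one_fault_each),
                      ("five faults each", five_faults_each)]:
    print(label,
          "| nonconforming items:", nonconforming_items(sample),
          "| total nonconformities:", total_nonconformities(sample))
```

The pass/fail count is the same for both samples, whereas the total count separates them.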

In statistical quality control, the p-chart is a type of control chart used to monitor the proportion of nonconforming units in a sample, where the sample proportion nonconforming is defined as the ratio of the number of nonconforming units to the sample size, n.

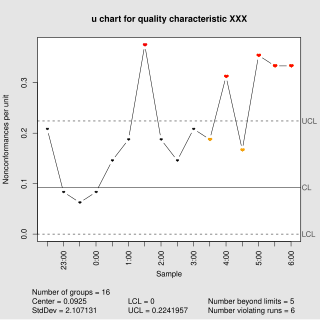

In statistical quality control, the u-chart is a type of control chart used to monitor "count"-type data where the sample size is greater than one, typically the average number of nonconformities per unit.

Nonconformities may also be tracked by type or location, which can help in tracking down assignable causes.

Examples of processes suitable for monitoring with a c-chart include:

- Monitoring the number of voids per inspection unit in injection molding or casting processes

- Monitoring the number of discrete components that must be re-soldered per printed circuit board

- Monitoring the number of product returns per day


In probability theory and statistics, the Poisson distribution, named after French mathematician Siméon Denis Poisson, is a discrete probability distribution that expresses the probability of a given number of events occurring in a fixed interval of time or space if these events occur with a known constant rate and independently of the time since the last event. The Poisson distribution can also be used for the number of events in other specified intervals such as distance, area or volume.

The Poisson distribution is the basis for the chart and requires the following assumptions: [2]

- The number of opportunities or potential locations for nonconformities is very large

- The probability of nonconformity at any location is small and constant

- The inspection procedure is the same for each sample and is carried out consistently from sample to sample
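The first two assumptions describe the regime in which a binomial count (many opportunities, each with a small constant probability) is well approximated by a Poisson count. The sketch below checks this numerically; the values of `n` and `p` are hypothetical and were picked only for illustration.

```python
import math


def binomial_pmf(k, n, p):
    """Probability of exactly k nonconformities among n independent opportunities."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def poisson_pmf(k, lam):
    """Probability of exactly k events for a Poisson distribution with mean lam."""
    return math.exp(-lam) * lam**k / math.factorial(k)


# Hypothetical inspection unit: many opportunities, small constant probability.
n, p = 10_000, 0.0005
lam = n * p  # expected number of nonconformities per unit

# Largest pointwise difference between the two distributions for k = 0..20.
max_gap = max(abs(binomial_pmf(k, n, p) - poisson_pmf(k, lam)) for k in range(21))
print(f"mean = {lam}, largest pmf difference = {max_gap:.2e}")
```

When the number of opportunities is small or the per-location probability is not small, the gap grows and limits based on the Poisson distribution become less trustworthy.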

The control limits for this chart type are $\bar{c} \pm 3\sqrt{\bar{c}}$, where $\bar{c}$ is the estimate of the long-term process mean established during control-chart setup.
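As a rough sketch of the setup calculation, the code below estimates the process mean from baseline counts and derives the conventional three-sigma limits, mean ± 3·√mean, using the fact that a Poisson variable's variance equals its mean. The function names and the baseline data are hypothetical, and truncating a negative lower limit to zero is a common convention assumed here rather than something stated in the text.

```python
import math


def c_chart_limits(baseline_counts):
    """Return (lower limit, center line, upper limit) from baseline counts."""
    if not baseline_counts:
        raise ValueError("need at least one baseline count")
    c_bar = sum(baseline_counts) / len(baseline_counts)  # estimated process mean
    spread = 3 * math.sqrt(c_bar)  # three Poisson standard deviations
    lower = max(0.0, c_bar - spread)  # assumption: counts cannot go below zero
    upper = c_bar + spread
    return lower, c_bar, upper


def out_of_control(counts, lower, upper):
    """Indices of samples whose count falls outside the control limits."""
    return [i for i, c in enumerate(counts) if c < lower or c > upper]


# Hypothetical nonconformity counts from eight same-sized baseline samples.
baseline = [4, 6, 3, 5, 7, 5, 4, 6]
lcl, center, ucl = c_chart_limits(baseline)
print(f"LCL = {lcl:.3f}, center = {center:.3f}, UCL = {ucl:.3f}")

# New samples are then compared against the fixed limits.
print(out_of_control([5, 12, 0], lcl, ucl))
```

Because the limits are derived from the baseline alone, every monitored sample must use the same inspection-unit size as the baseline samples.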