Related Research Articles

Corpus linguistics is the study of a language as that language is expressed in its text corpus, its body of "real world" text. Corpus linguistics proposes that a reliable analysis of a language is more feasible with corpora collected in the field—the natural context ("realia") of that language—with minimal experimental interference. The large collections of text allow linguistics to run quantitative analyses on linguistic concepts, otherwise harder to quantify.

Computer-assisted language learning (CALL), British, or computer-aided instruction (CAI)/computer-aided language instruction (CALI), American, is briefly defined in a seminal work by Levy as "the search for and study of applications of the computer in language teaching and learning". CALL embraces a wide range of information and communications technology applications and approaches to teaching and learning foreign languages, from the "traditional" drill-and-practice programs that characterised CALL in the 1960s and 1970s to more recent manifestations of CALL, e.g. as used in a virtual learning environment and Web-based distance learning. It also extends to the use of corpora and concordancers, interactive whiteboards, computer-mediated communication (CMC), language learning in virtual worlds, and mobile-assisted language learning (MALL).

Computer-aided translation (CAT), also referred to as computer-assisted translation or computer-aided human translation (CAHT), is the use of software to assist a human translator in the translation process. The translation is created by a human, and certain aspects of the process are facilitated by software; this is in contrast with machine translation (MT), in which the translation is created by a computer, optionally with some human intervention.

A concordancer is a computer program that automatically constructs a concordance. The output of a concordancer may serve as input to a translation memory system for computer-assisted translation, or as an early step in machine translation.

A concordance is an alphabetical list of the principal words used in a book or body of work, listing every instance of each word with its immediate context. Historically, concordances have been compiled only for works of special importance, such as the Vedas, Bible, Qur'an or the works of Shakespeare, James Joyce or classical Latin and Greek authors, because of the time, difficulty, and expense involved in creating a concordance in the pre-computer era.

Digital humanities (DH) is an area of scholarly activity at the intersection of computing or digital technologies and the disciplines of the humanities. It includes the systematic use of digital resources in the humanities, as well as the analysis of their application. DH can be defined as new ways of doing scholarship that involve collaborative, transdisciplinary, and computationally engaged research, teaching, and publishing. It brings digital tools and methods to the study of the humanities with the recognition that the printed word is no longer the main medium for knowledge production and distribution.

Martin Kay was a computer scientist, known especially for his work in computational linguistics.

Intelligent Computer Assisted Language Learning (ICALL), or Intelligent Computer Assisted Language Instruction (ICALI), involves the application of computing technologies to the teaching and learning of second or foreign languages. ICALL combines Artificial intelligence with Computer Assisted Language Learning (CALL) systems to provide software that interacts intelligently with students, responding flexibly and dynamically to student's learning progress.

Data-driven learning (DDL) is an approach to foreign language learning. Whereas most language learning is guided by teachers and textbooks, data-driven learning treats language as data and students as researchers undertaking guided discovery tasks. Underpinning this pedagogical approach is the data - information - knowledge paradigm. It is informed by a pattern-based approach to grammar and vocabulary, and a lexicogrammatical approach to language in general. Thus the basic task in DDL is to identify patterns at all levels of language. From their findings, foreign language students can see how an aspect of language is typically used, which in turn informs how they can use it in their own speaking and writing. Learning how to frame language questions and use the resources to obtain data and interpret it is fundamental to learner autonomy. When students arrive at their own conclusions through such procedures, they use their higher order thinking skills and are creating knowledge.

Computational humor is a branch of computational linguistics and artificial intelligence which uses computers in humor research. It is a relatively new area, with the first dedicated conference organized in 1996.

Digital history is the use of digital media to further historical analysis, presentation, and research. It is a branch of the digital humanities and an extension of quantitative history, cliometrics, and computing. Digital history is commonly digital public history, concerned primarily with engaging online audiences with historical content, or, digital research methods, that further academic research. Digital history outputs include: digital archives, online presentations, data visualizations, interactive maps, timelines, audio files, and virtual worlds to make history more accessible to the user. Recent digital history projects focus on creativity, collaboration, and technical innovation, text mining, corpus linguistics, network analysis, 3D modeling, and big data analysis. By utilizing these resources, the user can rapidly develop new analyses that can link to, extend, and bring to life existing histories

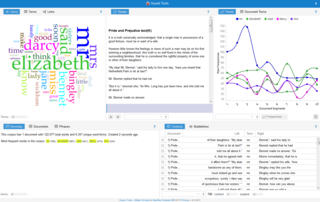

WordSmith Tools is a software package primarily for linguists, in particular for work in the field of corpus linguistics. It is a collection of modules for searching patterns in a language. The software handles many languages.

The following outline is provided as an overview of and topical guide to natural-language processing:

COCOA was an early text file utility and associated file format for digital humanities, then known as humanities computing. It was approximately 4000 punched cards of FORTRAN and created in the late 1960s and early 1970s at University College London and the Atlas Computer Laboratory in Harwell, Oxfordshire. Functionality included word-counting and concordance building.

A corpus manager is a tool for multilingual corpus analysis, which allows effective searching in corpora.

Susan Hockey is an Emeritus Professor of Library and Information Studies at University College London. She has written about the history of digital humanities, the development of text analysis applications, electronic textual mark-up, teaching computing in the humanities, and the role of libraries in managing digital resources. In 2014, University College London created a Digital Humanities lecture series in her honour.

KH Coder is an open source software for computer assisted qualitative data analysis, particularly quantitative content analysis and text mining. It can be also used for computational linguistics. It supports processing and etymological information of text in several languages, such as Japanese, English, French, German, Italian, Portuguese and Spanish. Specifically, it can contribute factual examination co-event system hub structure, computerized arranging guide, multidimensional scaling and comparative calculations. Word frequency statistics, part-of-speech analysis, grouping, correlation analysis, and visualization are among the features offered by KH Coder.

The Oxford Concordance Program (OCP) was first released in 1981 and was a result of a project started in 1978 by Oxford University Computing Services (OUCS) to create a machine independent text analysis program for producing word lists, indexes and concordances in a variety of languages and alphabets.

Lou Burnard is an internationally recognised expert in digital humanities, particularly in the area of text encoding and digital libraries. He was assistant director of Oxford University Computing Services (OUCS) from 2001 to September 2010, when he officially retired from OUCS. Before that, he was manager of the Humanities Computing Unit at OUCS for five years. He has worked in ICT support for research in the humanities since the 1990s. He was one of the founding editors of the Text Encoding Initiative (TEI) and continues to play an active part in its maintenance and development, as a consultant to the TEI Technical Council and as an elected TEI board member. He has played a key role in the establishment of many other activities and initiatives in this area, such as the UK Arts and Humanities Data Service and the British National Corpus, and has published and lectured widely. Since 2008 he has worked as a Member of the Conseil Scientifique for the CNRS-funded "Adonis" TGE.

CorCenCC or the National Corpus of Contemporary Welsh is a language resource for Welsh speakers, Welsh learners, Welsh language researchers, and anyone who is interested in the Welsh language. CorCenCC is a freely accessible collection of multiple language samples, gathered from real-life communication, and presented in the searchable online CorCenCC text corpus. The corpus is accompanied by an online teaching and learning toolkit – Y Tiwtiadur – which draws directly on the data from the corpus to provide resources for Welsh language learning at all ages and levels.

References

- ↑ Concordancing tools - Lancs University website

- ↑ https://www.fujitsu.com/uk/Images/ICL-Technical-Journal-v01i03.pdf Susan Hockey, 1979. Computing in the Humanities - ICL Technical Journal Vol 1 Issue 3 pp 289

- ↑ "Susan Hockey".

- ↑ https://www.laurenceanthony.net/research/20130827_linguistic_research_paper/linguistic_research_paper_final.pdf Laurence Anthony (2013), A critical look at software tools in corpus linguistics, Linguistic Research 30(2), 141-161

- ↑ https://www.academia.edu/3735394/Software_review_CLOC review CLOC by Lou Burnard Computers and the humanities 14 (1980) 259-260