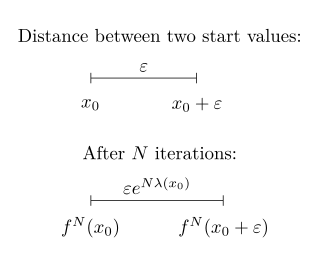

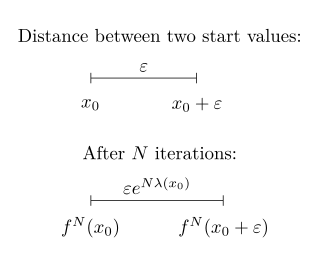

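In mathematics, the Lyapunov exponent or Lyapunov characteristic exponent of a dynamical system is a quantity that characterizes the rate of separation of infinitesimally close trajectories. Quantitatively, two trajectories in phase space with initial separation vector $\delta \mathbf{Z}_0$ diverge, as long as the separation stays small enough for a linearized approximation to hold, at a rate given by $|\delta \mathbf{Z}(t)| \approx e^{\lambda t}\,|\delta \mathbf{Z}_0|$, where $\lambda$ is the Lyapunov exponent.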
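
As a rough numerical illustration, the exponent of a one-dimensional map can be estimated as the orbit average of $\ln|f'(x)|$. The sketch below uses the logistic map $x \mapsto r x (1 - x)$. The map, the parameter values, and the function name `lyapunov_logistic` are all chosen here for illustration and are not part of the text above.

```python
import math

def lyapunov_logistic(r, x0=0.2, burn_in=1000, steps=100_000):
    """Estimate the Lyapunov exponent of x -> r*x*(1-x) as the orbit average of ln|f'(x)|."""
    x = x0
    for _ in range(burn_in):                   # discard the initial transient
        x = r * x * (1.0 - x)
    total = 0.0
    for _ in range(steps):
        deriv = abs(r * (1.0 - 2.0 * x))       # |f'(x)| for the logistic map
        total += math.log(max(deriv, 1e-300))  # floor guards against log(0)
        x = r * x * (1.0 - x)
    return total / steps

stable = lyapunov_logistic(2.5)   # orbit settles on a fixed point: negative exponent
chaotic = lyapunov_logistic(3.9)  # chaotic regime: positive exponent
print(stable, chaotic)
```

A negative estimate means nearby trajectories converge, and a positive one means they separate exponentially on average.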

Simultaneous equations models are a type of statistical model in which the dependent variables are functions of other dependent variables, rather than just independent variables. This means some of the explanatory variables are jointly determined with the dependent variable, which in economics usually is the consequence of some underlying equilibrium mechanism. Take the typical supply and demand model: whilst typically one would determine the quantity supplied and demanded to be a function of the price set by the market, it is also possible for the reverse to be true, where producers observe the quantity that consumers demand and then set the price.
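
To make the joint determination concrete, consider a hypothetical linear supply and demand system. The symbols $\alpha_0, \alpha_1, \beta_0, \beta_1, u_d, u_s$ are introduced here for illustration, with $\alpha_1 + \beta_1 \neq 0$. Solving the two structural equations for the equilibrium gives the reduced form:

$$
\begin{aligned}
\text{demand:}\quad q &= \alpha_0 - \alpha_1 p + u_d, \\
\text{supply:}\quad q &= \beta_0 + \beta_1 p + u_s, \\[4pt]
p &= \frac{\alpha_0 - \beta_0 + u_d - u_s}{\alpha_1 + \beta_1}, \\
q &= \frac{\alpha_1 \beta_0 + \beta_1 \alpha_0 + \beta_1 u_d + \alpha_1 u_s}{\alpha_1 + \beta_1}.
\end{aligned}
$$

The equilibrium price depends on both disturbances, so in this sketch $p$ is correlated with the error term of each structural equation. This is why regressing quantity on price by ordinary least squares would not recover either the demand or the supply slope.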

In statistics, multicollinearity is a phenomenon in which one predictor variable in a multiple regression model can be linearly predicted from the others with a substantial degree of accuracy. In this situation, the coefficient estimates of the multiple regression may change erratically in response to small changes in the model or the data. Multicollinearity does not reduce the predictive power or reliability of the model as a whole, at least within the sample data set; it only affects calculations regarding individual predictors. That is, a multivariable regression model with collinear predictors can indicate how well the entire bundle of predictors predicts the outcome variable, but it may not give valid results about any individual predictor, or about which predictors are redundant with respect to others.
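
One common diagnostic is the variance inflation factor (VIF). With only two predictors it reduces to $1/(1 - r^2)$, where $r$ is their sample correlation. The following is a minimal sketch on made-up data. The helper names and the numbers are illustrative assumptions.

```python
import math

def pearson_r(a, b):
    """Sample correlation coefficient between two equal-length lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((ai - ma) * (bi - mb) for ai, bi in zip(a, b))
    va = sum((ai - ma) ** 2 for ai in a)
    vb = sum((bi - mb) ** 2 for bi in b)
    return cov / math.sqrt(va * vb)

def vif_two_predictors(x1, x2):
    """VIF of either predictor when the model has exactly two predictors."""
    r = pearson_r(x1, x2)
    return 1.0 / (1.0 - r * r)

x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x2_collinear = [2.1, 3.9, 6.2, 7.8, 10.1, 11.9]  # roughly 2 * x1 (made-up values)
x2_unrelated = [3.0, 1.0, 2.0, 2.0, 1.0, 3.0]    # uncorrelated with x1 by construction

vif_high = vif_two_predictors(x1, x2_collinear)
vif_low = vif_two_predictors(x1, x2_unrelated)
print(vif_high, vif_low)
```

A VIF near 1 indicates that the predictor's coefficient variance is not inflated by the other predictor. Very large values signal the erratic coefficient estimates described above.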

In statistics, econometrics, epidemiology and related disciplines, the method of instrumental variables (IV) is used to estimate causal relationships when controlled experiments are not feasible or when a treatment is not successfully delivered to every unit in a randomized experiment. Intuitively, IVs are used when an explanatory variable of interest is correlated with the error term, in which case ordinary least squares and ANOVA give biased results. A valid instrument induces changes in the explanatory variable but has no independent effect on the dependent variable, allowing a researcher to uncover the causal effect of the explanatory variable on the dependent variable.
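
The idea can be sketched with a small simulation, assuming a single instrument `z`, a single endogenous regressor `x`, and a true coefficient of 2. The data-generating process is invented for illustration. With one instrument, the IV estimate is the ratio of two sample covariances.

```python
import random

def cov(a, b):
    """Sample covariance (divisor n) between two equal-length lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    return sum((ai - ma) * (bi - mb) for ai, bi in zip(a, b)) / n

rng = random.Random(0)
n = 20_000
z = [rng.gauss(0, 1) for _ in range(n)]          # instrument: shifts x, unrelated to u
u = [rng.gauss(0, 1) for _ in range(n)]          # error term, also drives x
x = [zi + ui for zi, ui in zip(z, u)]            # x is correlated with the error
y = [2.0 * xi + ui for xi, ui in zip(x, u)]      # assumed true causal effect: 2.0

beta_ols = cov(x, y) / cov(x, x)   # biased, because cov(x, u) != 0
beta_iv = cov(z, y) / cov(z, x)    # uses only the variation in x induced by z
print(beta_ols, beta_iv)
```

In this setup the least squares estimate lands near 2.5 while the IV estimate lands near 2, reflecting the bias that the instrument removes.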

Panel (data) analysis is a statistical method, widely used in social science, epidemiology, and econometrics to analyze two-dimensional panel data. The data are usually collected over time and over the same individuals and then a regression is run over these two dimensions. Multidimensional analysis is an econometric method in which data are collected over more than two dimensions.

In probability theory and statistics, a unit root is a feature of some stochastic processes that can cause problems in statistical inference involving time series models. A linear stochastic process has a unit root if 1 is a root of the process's characteristic equation. Such a process is non-stationary but does not always have a trend.
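
For an autoregressive process $y_t = \phi_1 y_{t-1} + \dots + \phi_p y_{t-p} + \varepsilon_t$, one way to write the characteristic equation is $1 - \phi_1 z - \dots - \phi_p z^p = 0$. Substituting $z = 1$ shows that a unit root is present exactly when the coefficients sum to one. The check below is a minimal sketch of that test. The function names and tolerance are illustrative choices.

```python
def char_poly_at_one(phi):
    """Value of 1 - phi_1*z - ... - phi_p*z**p at z = 1."""
    return 1.0 - sum(phi)

def has_unit_root(phi, tol=1e-12):
    """True if z = 1 solves the AR characteristic equation, up to tolerance."""
    return abs(char_poly_at_one(phi)) < tol

print(has_unit_root([1.0]))        # random walk y_t = y_{t-1} + e_t
print(has_unit_root([0.5]))        # stationary AR(1)
print(has_unit_root([1.5, -0.5]))  # AR(2) whose coefficients sum to one
```

This only checks known coefficients. Deciding whether an observed series has a unit root requires a statistical test, which is outside the scope of this sketch.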

In statistics, Poisson regression is a generalized linear model form of regression analysis used to model count data and contingency tables. Poisson regression assumes the response variable Y has a Poisson distribution, and assumes the logarithm of its expected value can be modeled by a linear combination of unknown parameters. A Poisson regression model is sometimes known as a log-linear model, especially when used to model contingency tables.
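
As an illustration of the log link, the sketch below fits $\log E[Y \mid x] = b_0 + b_1 x$ by Newton's method for one covariate. The data are made up, and `fit_poisson` is a hypothetical helper rather than any library's API. With a binary covariate, the fitted means should equal the two group means.

```python
import math

def fit_poisson(x, y, iters=25):
    """Maximum likelihood fit of log E[y] = b0 + b1*x via Newton's method."""
    b0, b1 = math.log(sum(y) / len(y)), 0.0       # start from the intercept-only fit
    for _ in range(iters):
        mu = [math.exp(b0 + b1 * xi) for xi in x]
        g0 = sum(yi - mi for yi, mi in zip(y, mu))               # score for b0
        g1 = sum(xi * (yi - mi) for xi, yi, mi in zip(x, y, mu)) # score for b1
        h00 = sum(mu)                                            # information matrix
        h01 = sum(xi * mi for xi, mi in zip(x, mu))
        h11 = sum(xi * xi * mi for xi, mi in zip(x, mu))
        det = h00 * h11 - h01 * h01
        b0 += (h11 * g0 - h01 * g1) / det         # Newton step: inverse information times score
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1

x = [0, 0, 0, 1, 1, 1]
y = [1, 2, 3, 3, 4, 5]            # group means are 2 (x = 0) and 4 (x = 1)
b0, b1 = fit_poisson(x, y)
print(math.exp(b0), math.exp(b1)) # baseline mean and multiplicative effect of x
```

Because the model is linear on the log scale, $e^{b_1}$ reads as a rate ratio: here the expected count doubles when `x` goes from 0 to 1.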

In statistics, a fixed effects model is a statistical model in which the model parameters are fixed or non-random quantities. This is in contrast to random effects models and mixed models in which all or some of the model parameters are random variables. In many applications including econometrics and biostatistics a fixed effects model refers to a regression model in which the group means are fixed (non-random) as opposed to a random effects model in which the group means are a random sample from a population. Generally, data can be grouped according to several observed factors. The group means could be modeled as fixed or random effects for each grouping. In a fixed effects model each group mean is a group-specific fixed quantity.
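
A common way to estimate a slope in the presence of group-specific fixed quantities is the within transformation: subtract each group's mean from its observations before running the regression. The sketch below uses made-up data generated as $y = a_g + 2x$ with group intercepts $a_A = 10$ and $a_B = 0$. The helper names are illustrative.

```python
def ols_slope(x, y):
    """Slope from a simple least squares regression of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)

def demean_by_group(values, groups):
    """Subtract each group's mean from its members (the within transformation)."""
    sums, counts = {}, {}
    for v, g in zip(values, groups):
        sums[g] = sums.get(g, 0.0) + v
        counts[g] = counts.get(g, 0) + 1
    return [v - sums[g] / counts[g] for v, g in zip(values, groups)]

groups = ["A", "A", "A", "B", "B", "B"]
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [12.0, 14.0, 16.0, 8.0, 10.0, 12.0]   # y = a_g + 2*x with a_A = 10, a_B = 0

pooled = ols_slope(x, y)                  # ignores the group means
within = ols_slope(demean_by_group(x, groups), demean_by_group(y, groups))
print(pooled, within)
```

In this example the pooled slope is negative because the group intercepts are correlated with `x`, while the within estimate recovers the slope of 2 used to generate the data.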

Proportional hazards models are a class of survival models in statistics. Survival models relate the time that passes, before some event occurs, to one or more covariates that may be associated with that quantity of time. In a proportional hazards model, the unique effect of a unit increase in a covariate is multiplicative with respect to the hazard rate. For example, taking a drug may halve one's hazard rate for a stroke occurring, or, changing the material from which a manufactured component is constructed may double its hazard rate for failure. Other types of survival models such as accelerated failure time models do not exhibit proportional hazards. The accelerated failure time model describes a situation where the biological or mechanical life history of an event is accelerated.
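
The multiplicative structure can be written as $h(t \mid x) = h_0(t)\, e^{\beta x}$. The sketch below assumes a Weibull-type baseline hazard, chosen arbitrarily for illustration, and checks that the hazard ratio between treated and untreated units is the same at every time point.

```python
import math

def weibull_baseline(t, shape=1.5):
    """Illustrative baseline hazard h0(t) = shape * t**(shape - 1)."""
    return shape * t ** (shape - 1.0)

def hazard(t, x, beta, baseline=weibull_baseline):
    """Proportional hazards form: baseline hazard scaled by exp(beta * x)."""
    return baseline(t) * math.exp(beta * x)

beta_drug = math.log(0.5)   # a coefficient that halves the hazard, as in the stroke example
ratios = [hazard(t, 1, beta_drug) / hazard(t, 0, beta_drug) for t in (0.5, 1.0, 4.0)]
print(ratios)               # the ratio does not depend on t
```

The baseline hazard changes with time, but it cancels in the ratio. That cancellation is the "proportional" property.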

A mixed model, mixed-effects model or mixed error-component model is a statistical model containing both fixed effects and random effects. These models are useful in a wide variety of disciplines in the physical, biological and social sciences. They are particularly useful in settings where repeated measurements are made on the same statistical units, or where measurements are made on clusters of related statistical units. Because of their advantage in dealing with missing values, mixed effects models are often preferred over more traditional approaches such as repeated measures analysis of variance.

In statistics, a random effects model, also called a variance components model, is a statistical model where the model parameters are random variables. It is a kind of hierarchical linear model, which assumes that the data being analysed are drawn from a hierarchy of different populations whose differences relate to that hierarchy. A random effects model is a special case of a mixed model.

In statistics and econometrics, a distributed lag model is a model for time series data in which a regression equation is used to predict current values of a dependent variable based on both the current values of an explanatory variable and the lagged values of this explanatory variable.
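
For a finite number of lags, the regression equation takes the form $y_t = a + w_0 x_t + w_1 x_{t-1} + \dots + w_q x_{t-q}$. The following sketch evaluates it for given coefficients. The function name, the weights, and the data are illustrative assumptions, and estimating the weights from data is not shown.

```python
def distributed_lag_predict(x, intercept, weights):
    """Predict y_t = intercept + sum_k weights[k] * x[t-k] for each t with a full lag window."""
    q = len(weights) - 1
    return [
        intercept + sum(w * x[t - k] for k, w in enumerate(weights))
        for t in range(q, len(x))
    ]

x = [1.0, 2.0, 4.0, 3.0]
weights = [0.5, 0.3, 0.2]            # effect of x_t, x_{t-1}, x_{t-2}
preds = distributed_lag_predict(x, intercept=1.0, weights=weights)
long_run = sum(weights)              # total effect of a permanent unit change in x
print(preds, long_run)
```

The first two observations have no prediction because their lag window is incomplete.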

The Heckman correction is a statistical technique to correct bias from non-randomly selected samples or otherwise incidentally truncated dependent variables, a pervasive issue in quantitative social sciences when using observational data. Conceptually, this is achieved by explicitly modelling the individual sampling probability of each observation together with the conditional expectation of the dependent variable. The resulting likelihood function is mathematically similar to the tobit model for censored dependent variables, a connection first drawn by James Heckman in 1974. Heckman also developed a two-step control function approach to estimate this model, which avoids the computational burden of having to estimate both equations jointly, albeit at the cost of inefficiency. Heckman received the Nobel Memorial Prize in Economic Sciences in 2000 for his work in this field.
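
In the two-step approach as it is usually presented, a probit model for selection is estimated first. The outcome regression then includes the inverse Mills ratio $\lambda(z) = \phi(z)/\Phi(z)$, evaluated at the fitted selection index, as an additional regressor. The sketch below computes only that ratio. The function names are illustrative, and the probit and outcome regressions are not shown.

```python
import math

def norm_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def norm_cdf(z):
    """Standard normal distribution function, via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def inverse_mills(z):
    """Inverse Mills ratio: density divided by distribution function."""
    return norm_pdf(z) / norm_cdf(z)

for z in (-3.0, 0.0, 3.0):
    print(z, inverse_mills(z))
```

The ratio is large when selection into the sample is unlikely and shrinks toward zero as selection becomes nearly certain, so the correction term matters most for observations that were unlikely to be selected.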

In statistics, errors-in-variables models or measurement error models are regression models that account for measurement errors in the independent variables. In contrast, standard regression models assume that those regressors have been measured exactly, or observed without error; as such, those models account only for errors in the dependent variables, or responses.
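
A small simulation illustrates what goes wrong when measurement error in a regressor is ignored. With classical additive noise, the least squares slope shrinks toward zero by the factor $\sigma_x^2 / (\sigma_x^2 + \sigma_\eta^2)$. The data-generating process below (true slope 2, unit variances) is made up for illustration.

```python
import random

def ols_slope(x, y):
    """Slope from a simple least squares regression of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)

rng = random.Random(1)
n = 20_000
x_true = [rng.gauss(0, 1) for _ in range(n)]          # regressor measured exactly
y = [2.0 * xi + rng.gauss(0, 0.5) for xi in x_true]   # assumed true slope: 2.0
x_obs = [xi + rng.gauss(0, 1) for xi in x_true]       # regressor observed with error

slope_clean = ols_slope(x_true, y)   # close to 2
slope_noisy = ols_slope(x_obs, y)    # attenuated toward 2 * 1/(1+1) = 1
print(slope_clean, slope_noisy)
```

Errors-in-variables models aim to undo this attenuation by modelling the measurement error explicitly.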

In econometrics, the Arellano–Bond estimator is a generalized method of moments estimator used to estimate dynamic models of panel data. It was proposed in 1991 by Manuel Arellano and Stephen Bond, based on the earlier work by Alok Bhargava and John Denis Sargan in 1983, for addressing certain endogeneity problems. The GMM-SYS estimator is a system that contains both the levels and the first difference equations. It provides an alternative to the standard first difference GMM estimator.

Partial (pooled) likelihood estimation for panel data is a quasi-maximum likelihood method for panel analysis that assumes that the density of $y_{it}$ given $x_{it}$ is correctly specified for each time period, but allows for misspecification in the conditional density of $y_i := (y_{i1}, \ldots, y_{iT})$ given $x_i := (x_{i1}, \ldots, x_{iT})$.

Control functions are statistical methods to correct for endogeneity problems by modelling the endogeneity in the error term. The approach thereby differs in important ways from other models that try to account for the same econometric problem. Instrumental variables, for example, attempt to model the endogenous variable X as an often invertible model with respect to a relevant and exogenous instrument Z. Panel analysis uses special data properties to difference out unobserved heterogeneity that is assumed to be fixed over time.

In statistics, fixed-effect Poisson models are used for static panel data when the outcome variable is count data. Hausman, Hall, and Griliches pioneered the method in the mid 1980s. Their outcome of interest was the number of patents filed by firms, where they wanted to develop methods to control for the firm fixed effects. Linear panel data models use the linear additivity of the fixed effects to difference them out and circumvent the incidental parameter problem. Even though Poisson models are inherently nonlinear, the use of the linear index and the exponential link function leads to multiplicative separability, more specifically $E[y_{it} \mid x_{i1}, \ldots, x_{iT}, c_i] = \exp(c_i + x_{it}\beta) = a_i \exp(x_{it}\beta)$, where $a_i = \exp(c_i)$ is the fixed effect of unit $i$.

In applied statistics, fractional models are, to some extent, related to binary response models. However, instead of estimating the probability of being in one bin of a dichotomous variable, the fractional model typically deals with variables that take on all possible values in the unit interval. One can easily generalize this model to take on values on any other interval by appropriate transformations. Examples range from participation rates in 401(k) plans to television ratings of NBA games.

A dynamic unobserved effects model is a statistical model used in econometrics for panel analysis. It is characterized by the influence of previous values of the dependent variable on its present value, and by the presence of unobservable explanatory variables.