Converting a grammar to Chomsky normal form

To convert a grammar to Chomsky normal form, a sequence of simple transformations is applied in a certain order; this is described in most textbooks on automata theory. [4] : 87–94 [5] [6] [7] The presentation here follows Hopcroft, Ullman (1979), but is adapted to use the transformation names from Lange, Leiß (2009). [8] [note 2] Each of the following transformations establishes one of the properties required for Chomsky normal form.

START: Eliminate the start symbol from right-hand sides

Introduce a new start symbol S0, and a new rule

- S0 → S,

where S is the previous start symbol. This does not change the grammar's produced language, and S0 will not occur on any rule's right-hand side.
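As a minimal sketch of START, assume a grammar is stored as a Python dict that maps each nonterminal to a set of right-hand sides, each a tuple of symbols (with the empty tuple standing for ε). This representation, the function name `add_start_symbol`, and the default name `"S0"` are illustrative assumptions, not part of the cited presentations.

```python
def add_start_symbol(rules, start, new_start="S0"):
    """Return (new_rules, new_start) with the rule new_start -> start added.

    `rules` maps each nonterminal to a set of right-hand sides (tuples of symbols).
    The input dict is left unmodified.
    """
    if new_start in rules:
        raise ValueError(f"{new_start!r} is already a nonterminal")
    new_rules = {lhs: set(rhss) for lhs, rhss in rules.items()}
    new_rules[new_start] = {(start,)}
    return new_rules, new_start


# Hypothetical example grammar: S -> a S b | ε
rules = {"S": {("a", "S", "b"), ()}}
new_rules, s0 = add_start_symbol(rules, "S")
```

Because the new symbol is fresh, it cannot occur on any right-hand side, which is the property START is meant to establish.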

TERM: Eliminate rules with nonsolitary terminals

To eliminate each rule

- A → X1 ... a ... Xn

in which a terminal symbol a is not the only symbol on the right-hand side, introduce, for every such terminal, a new nonterminal symbol Na and a new rule

- Na → a.

Change every rule

- A → X1 ... a ... Xn

to

- A → X1 ... Na ... Xn.

If several terminal symbols occur on the right-hand side, simultaneously replace each of them by its associated nonterminal symbol. This does not change the grammar's produced language. [4] : 92
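The TERM step can be sketched as follows, under the assumption that a grammar is a dict from nonterminals to sets of right-hand-side tuples and that every nonterminal appears as a key (so any other symbol is taken to be a terminal). The function name `term` and the wrapper naming scheme `N_a` are hypothetical, and the sketch assumes these names do not clash with existing nonterminals.

```python
def term(rules):
    """Replace every nonsolitary terminal a by a wrapper nonterminal N_a."""
    nonterminals = set(rules)
    new_rules = {}
    wrappers = {}  # terminal -> name of its wrapper nonterminal
    for lhs, rhss in rules.items():
        new_rules[lhs] = set()
        for rhs in rhss:
            if len(rhs) < 2:
                # A solitary symbol (or ε) is left unchanged.
                new_rules[lhs].add(rhs)
                continue
            new_rhs = []
            for sym in rhs:
                if sym in nonterminals:
                    new_rhs.append(sym)
                else:
                    # Assumed naming scheme; must not clash with existing names.
                    wrappers.setdefault(sym, f"N_{sym}")
                    new_rhs.append(wrappers[sym])
            new_rules[lhs].add(tuple(new_rhs))
    # Add one rule N_a -> a per wrapped terminal.
    for terminal, wrapper in wrappers.items():
        new_rules[wrapper] = {(terminal,)}
    return new_rules


# Hypothetical example grammar: S -> a S b | a b | c
rules = {"S": {("a", "S", "b"), ("a", "b"), ("c",)}}
result = term(rules)
```

All terminals of a right-hand side are replaced in one pass, which corresponds to the simultaneous replacement described above.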

BIN: Eliminate right-hand sides with more than 2 nonterminals

Replace each rule

- A → X1X2 ... Xn

with more than 2 nonterminals X1,...,Xn by rules

- A → X1A1,

- A1 → X2A2,

- ... ,

- Aₙ₋₂ → Xₙ₋₁Xₙ,

where Ai are new nonterminal symbols. Again, this does not change the grammar's produced language. [4] : 93
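A possible sketch of BIN, again assuming the dict-of-sets grammar representation, is given here. The function name `binarize` and the naming of fresh nonterminals (`A_1`, `A_2`, ...) are illustrative assumptions, and the sketch assumes these fresh names are not already in use.

```python
def binarize(rules):
    """Split every right-hand side longer than 2 symbols into a chain of pairs."""
    new_rules = {lhs: set() for lhs in rules}
    counter = 0  # global counter, so fresh names are unique across rules
    for lhs, rhss in rules.items():
        for rhs in rhss:
            head = lhs
            while len(rhs) > 2:
                counter += 1
                fresh = f"{lhs}_{counter}"  # assumed not to clash with existing names
                # head -> X1 fresh, then continue with fresh -> X2 ... Xn
                new_rules.setdefault(head, set()).add((rhs[0], fresh))
                head, rhs = fresh, rhs[1:]
            new_rules.setdefault(head, set()).add(rhs)
    return new_rules


# Hypothetical rule A -> X1 X2 X3 X4
rules = {"A": {("X1", "X2", "X3", "X4")}}
result = binarize(rules)
```

For a right-hand side of length n, the loop introduces n-2 fresh nonterminals, matching the chain A → X1A1, ..., given above.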

DEL: Eliminate ε-rules

An ε-rule is a rule of the form

- A → ε,

where A is not S0, the grammar's start symbol.

To eliminate all rules of this form, first determine the set of all nonterminals that derive ε. Hopcroft and Ullman (1979) call such nonterminals nullable, and compute them as follows:

- If a rule A → ε exists, then A is nullable.

- If a rule A → X1 ... Xn exists, and every single Xi is nullable, then A is nullable, too.
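The two rules above amount to a fixed-point computation. A minimal sketch follows, assuming a grammar is a dict from nonterminals to sets of right-hand-side tuples, with the empty tuple standing for ε; the function name `nullable_nonterminals` is a hypothetical choice.

```python
def nullable_nonterminals(rules):
    """Return the set of nonterminals that can derive the empty string."""
    nullable = set()
    changed = True
    while changed:  # repeat until no new nullable nonterminal is found
        changed = False
        for lhs, rhss in rules.items():
            if lhs in nullable:
                continue
            # The empty tuple is the ε-rule: all() over it is vacuously True.
            if any(all(sym in nullable for sym in rhs) for rhs in rhss):
                nullable.add(lhs)
                changed = True
    return nullable


# S0 -> AbB | C,  B -> AA | AC,  C -> b | c,  A -> a | ε
rules = {
    "S0": {("A", "b", "B"), ("C",)},
    "B": {("A", "A"), ("A", "C")},
    "C": {("b",), ("c",)},
    "A": {("a",), ()},
}
nullable = nullable_nonterminals(rules)
```

Terminals never enter the set, so any right-hand side containing a terminal cannot make its left-hand side nullable.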

Obtain an intermediate grammar by replacing each rule

- A → X1 ... Xn

by all versions with some nullable Xi omitted. The transformed grammar is then obtained by deleting from this intermediate grammar every ε-rule whose left-hand side is not the start symbol. [4] : 90

For example, in the following grammar, with start symbol S0,

- S0 → AbB | C

- B → AA | AC

- C → b | c

- A → a | ε

the nonterminal A, and hence also B, is nullable, while neither C nor S0 is. Hence the following intermediate grammar is obtained: [note 3]

- S0 → AbB | Ab | bB | b | C

- B → AA | A | A | ε | AC | C

- C → b | c

- A → a | ε

In this grammar, all ε-rules have been "inlined at the call site". [note 4] In the next step, they can hence be deleted, yielding the grammar:

- S0 → AbB | Ab | bB | b | C

- B → AA | A | AC | C

- C → b | c

- A → a

This grammar produces the same language as the original example grammar, viz. {ab,aba,abaa,abab,abac,abb,abc,b,ba,baa,bab,bac,bb,bc,c}, but has no ε-rules.
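The DEL step on this example can be reproduced with a short sketch. It assumes the dict-of-sets grammar representation (empty tuple for ε) and takes the set of nullable nonterminals as an input; the function name `eliminate_epsilon_rules` is hypothetical.

```python
from itertools import product


def eliminate_epsilon_rules(rules, nullable, start):
    """Expand rules over all omissions of nullable symbols, then drop ε-rules."""
    new_rules = {}
    for lhs, rhss in rules.items():
        expanded = set()
        for rhs in rhss:
            # A nullable symbol may be kept or omitted; any other symbol is kept.
            choices = [((sym,), ()) if sym in nullable else ((sym,),) for sym in rhs]
            for pick in product(*choices):
                expanded.add(tuple(sym for part in pick for sym in part))
        if lhs != start:
            expanded.discard(())  # delete the ε-rule unless lhs is the start symbol
        new_rules[lhs] = expanded
    return new_rules


# S0 -> AbB | C,  B -> AA | AC,  C -> b | c,  A -> a | ε
rules = {
    "S0": {("A", "b", "B"), ("C",)},
    "B": {("A", "A"), ("A", "C")},
    "C": {("b",), ("c",)},
    "A": {("a",), ()},
}
result = eliminate_epsilon_rules(rules, nullable={"A", "B"}, start="S0")
```

Storing right-hand sides in a set merges duplicate alternatives automatically, such as the two ways of obtaining B → A from B → AA.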

UNIT: Eliminate unit rules

A unit rule is a rule of the form

- A → B,

where A, B are nonterminal symbols. To remove it, for each rule

- B → X1 ... Xn,

where X1 ... Xn is a string of nonterminals and terminals, add rule

- A → X1 ... Xn

unless this is a unit rule that has already been (or is being) removed. Skipping the nonterminal symbol B in the resulting grammar is possible because B is a member of the unit closure of the nonterminal symbol A. [9]
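One way to sketch UNIT is to compute the unit closure of each nonterminal directly and copy over the non-unit rules of every member. This is a variant of the rule-by-rule description above rather than a literal transcription; the dict-of-sets representation and the function name `eliminate_unit_rules` are assumptions.

```python
def eliminate_unit_rules(rules):
    """Replace unit rules by the non-unit rules reachable through them."""
    nonterminals = set(rules)

    def is_unit(rhs):
        return len(rhs) == 1 and rhs[0] in nonterminals

    new_rules = {}
    for a in rules:
        # Unit closure of a: nonterminals reachable from a via unit rules only.
        closure, stack = {a}, [a]
        while stack:
            b = stack.pop()
            for rhs in rules[b]:
                if is_unit(rhs) and rhs[0] not in closure:
                    closure.add(rhs[0])
                    stack.append(rhs[0])
        # a inherits every non-unit right-hand side of its closure members.
        new_rules[a] = {rhs for b in closure for rhs in rules[b] if not is_unit(rhs)}
    return new_rules


# S0 -> AbB | Ab | bB | b | C,  B -> AA | A | AC | C,  C -> b | c,  A -> a
rules = {
    "S0": {("A", "b", "B"), ("A", "b"), ("b", "B"), ("b",), ("C",)},
    "B": {("A", "A"), ("A",), ("A", "C"), ("C",)},
    "C": {("b",), ("c",)},
    "A": {("a",)},
}
result = eliminate_unit_rules(rules)
```

Tracking the closure as a set also handles cyclic unit rules such as A → B, B → A without looping forever.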

Order of transformations

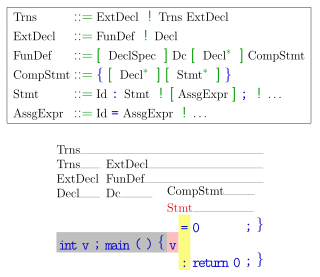

Transformation X always preserves (✓) resp. may destroy (✗) the result of Y:

| X \ Y | START | TERM | BIN | DEL | UNIT |
|---|---|---|---|---|---|
| START | | ✓ | ✓ | ✗ | ✗ |
| TERM | ✓ | | ✗ | ✓ | ✓ |
| BIN | ✓ | ✓ | | ✓ | ✓ |
| DEL | ✓ | ✓ | ✓ | | ✗ |
| UNIT | ✓ | ✓ | ✓ | (✓)* | |

*UNIT preserves the result of DEL if START had been called before.

When choosing the order in which the above transformations are to be applied, it has to be considered that some transformations may destroy the result achieved by other ones. For example, START will re-introduce a unit rule if it is applied after UNIT. The table shows which orderings are admitted.

Moreover, the worst-case bloat in grammar size [note 5] depends on the transformation order. Using |G| to denote the size of the original grammar G, the size blow-up in the worst case may range from |G|^2 to 2^(2|G|), depending on the transformation algorithm used. [8] : 7 The blow-up in grammar size depends on the order between DEL and BIN. It may be exponential when DEL is done first, but is linear otherwise. UNIT can incur a quadratic blow-up in the size of the grammar. [8] : 5 The orderings START, TERM, BIN, DEL, UNIT and START, BIN, DEL, UNIT, TERM lead to the least (i.e. quadratic) blow-up.