The Internet protocol suite, commonly known as TCP/IP, is a framework for organizing the set of communication protocols used in the Internet and similar computer networks according to functional criteria. The foundational protocols in the suite are the Transmission Control Protocol (TCP), the User Datagram Protocol (UDP), and the Internet Protocol (IP). Early versions of this networking model were known as the Department of Defense (DoD) model because the research and development were funded by the United States Department of Defense through DARPA.

Quality of service (QoS) is the description or measurement of the overall performance of a service, such as a telephony or computer network, or a cloud computing service, particularly the performance seen by the users of the network. To quantitatively measure quality of service, several related aspects of the network service are often considered, such as packet loss, bit rate, throughput, transmission delay, availability, jitter, etc.

The Transmission Control Protocol (TCP) is one of the main protocols of the Internet protocol suite. It originated in the initial network implementation in which it complemented the Internet Protocol (IP). Therefore, the entire suite is commonly referred to as TCP/IP. TCP provides reliable, ordered, and error-checked delivery of a stream of octets (bytes) between applications running on hosts communicating via an IP network. Major internet applications such as the World Wide Web, email, remote administration, and file transfer rely on TCP, which is part of the Transport layer of the TCP/IP suite. SSL/TLS often runs on top of TCP.

In computer networking, the transport layer is a conceptual division of methods in the layered architecture of protocols in the network stack in the Internet protocol suite and the OSI model. The protocols of this layer provide end-to-end communication services for applications. It provides services such as connection-oriented communication, reliability, flow control, and multiplexing.

Virtual private network (VPN) is a network architecture for virtually extending a private network across one or multiple other networks which are either untrusted or need to be isolated.

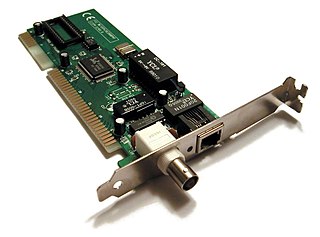

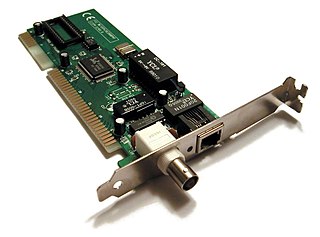

A network interface controller is a computer hardware component that connects a computer to a computer network.

Explicit Congestion Notification (ECN) is an extension to the Internet Protocol and to the Transmission Control Protocol and is defined in RFC 3168 (2001). ECN allows end-to-end notification of network congestion without dropping packets. ECN is an optional feature that may be used between two ECN-enabled endpoints when the underlying network infrastructure also supports it.

Deep packet inspection (DPI) is a type of data processing that inspects in detail the data being sent over a computer network, and may take actions such as alerting, blocking, re-routing, or logging it accordingly. Deep packet inspection is often used for baselining application behavior, analyzing network usage, troubleshooting network performance, ensuring that data is in the correct format, checking for malicious code, eavesdropping, and internet censorship, among other purposes. There are multiple headers for IP packets; network equipment only needs to use the first of these for normal operation, but use of the second header is normally considered to be shallow packet inspection despite this definition.

TCP offload engine (TOE) is a technology used in some network interface cards (NIC) to offload processing of the entire TCP/IP stack to the network controller. It is primarily used with high-speed network interfaces, such as gigabit Ethernet and 10 Gigabit Ethernet, where processing overhead of the network stack becomes significant. TOEs are often used as a way to reduce the overhead associated with Internet Protocol (IP) storage protocols such as iSCSI and Network File System (NFS).

Netfilter is a framework provided by the Linux kernel that allows various networking-related operations to be implemented in the form of customized handlers. Netfilter offers various functions and operations for packet filtering, network address translation, and port translation, which provide the functionality required for directing packets through a network and prohibiting packets from reaching sensitive locations within a network.

Linux Virtual Server (LVS) is load balancing software for Linux kernel–based operating systems.

Transmission Control Protocol (TCP) uses a congestion control algorithm that includes various aspects of an additive increase/multiplicative decrease (AIMD) scheme, along with other schemes including slow start and a congestion window (CWND), to achieve congestion avoidance. The TCP congestion-avoidance algorithm is the primary basis for congestion control in the Internet. Per the end-to-end principle, congestion control is largely a function of internet hosts, not the network itself. There are several variations and versions of the algorithm implemented in protocol stacks of operating systems of computers that connect to the Internet.

In computer networking, STREAMS is the native framework in Unix System V for implementing character device drivers, network protocols, and inter-process communication. In this framework, a stream is a chain of coroutines that pass messages between a program and a device driver. STREAMS originated in Version 8 Research Unix, as Streams.

Bandwidth management is the process of measuring and controlling the communications on a network link, to avoid filling the link to capacity or overfilling the link, which would result in network congestion and poor performance of the network. Bandwidth is described by bit rate and measured in units of bits per second (bit/s) or bytes per second (B/s).

A network socket is a software structure within a network node of a computer network that serves as an endpoint for sending and receiving data across the network. The structure and properties of a socket are defined by an application programming interface (API) for the networking architecture. Sockets are created only during the lifetime of a process of an application running in the node.

In computing, Microsoft's Windows Vista and Windows Server 2008 introduced in 2007/2008 a new networking stack named Next Generation TCP/IP stack, to improve on the previous stack in several ways. The stack includes native implementation of IPv6, as well as a complete overhaul of IPv4. The new TCP/IP stack uses a new method to store configuration settings that enables more dynamic control and does not require a computer restart after a change in settings. The new stack, implemented as a dual-stack model, depends on a strong host-model and features an infrastructure to enable more modular components that one can dynamically insert and remove.

In computer networking, tcpcrypt is a transport layer communication encryption protocol. Unlike prior protocols like TLS (SSL), tcpcrypt is implemented as a TCP extension. It was designed by a team of six security and networking experts: Andrea Bittau, Mike Hamburg, Mark Handley, David Mazières, Dan Boneh and Quinn Slack. Tcpcrypt has been published as an Internet Draft. Experimental user-space implementations are available for Linux, Mac OS X, FreeBSD and Windows. There is also a Linux kernel implementation.

The Stream Control Transmission Protocol (SCTP) is a computer networking communications protocol in the transport layer of the Internet protocol suite. Originally intended for Signaling System 7 (SS7) message transport in telecommunication, the protocol provides the message-oriented feature of the User Datagram Protocol (UDP), while ensuring reliable, in-sequence transport of messages with congestion control like the Transmission Control Protocol (TCP). Unlike UDP and TCP, the protocol supports multihoming and redundant paths to increase resilience and reliability.

A network scheduler, also called packet scheduler, queueing discipline (qdisc) or queueing algorithm, is an arbiter on a node in a packet switching communication network. It manages the sequence of network packets in the transmit and receive queues of the protocol stack and network interface controller. There are several network schedulers available for the different operating systems, that implement many of the existing network scheduling algorithms.

Multipath TCP (MPTCP) is an ongoing effort of the Internet Engineering Task Force's (IETF) Multipath TCP working group, that aims at allowing a Transmission Control Protocol (TCP) connection to use multiple paths to maximize throughput and increase redundancy.