Information retrieval (IR) in computing and information science is the task of identifying and retrieving information system resources that are relevant to an information need. The information need can be specified in the form of a search query. In the case of document retrieval, queries can be based on full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds.

A citation is a reference to a source. More precisely, a citation is an abbreviated alphanumeric expression embedded in the body of an intellectual work that denotes an entry in the bibliographic references section of the work for the purpose of acknowledging the relevance of the works of others to the topic of discussion at the spot where the citation appears.

Scientific citation is the practice of providing detailed references in a scientific publication, typically a paper or book, to previously published communications that have a bearing on the subject of the new publication. The purpose of citations in original work is to allow readers of the paper to refer to cited work to assist them in judging the new work, to source background information vital for future development, and to acknowledge the contributions of earlier workers. Citations in, say, a review paper bring together many sources, often recent, in one place.

Apache Lucene is a free and open-source search engine software library, originally written in Java by Doug Cutting. It is supported by the Apache Software Foundation and is released under the Apache License 2.0. Lucene is widely used as a standard foundation for production search applications.

A recommender system, or a recommendation system, is a subclass of information filtering system that provides suggestions for items that are most pertinent to a particular user. Recommender systems are particularly useful when an individual needs to choose an item from a potentially overwhelming number of items that a service may offer.

A paraphrase or rephrase is the rendering of the same text in different words without losing the meaning of the text itself. More often than not, a paraphrased text can convey its meaning better than the original words. In other words, it is a copy of the text in meaning, but which is different from the original. For example, when someone tells a story they heard in their own words, they paraphrase, with the meaning being the same. The term itself is derived via Latin paraphrasis, from Ancient Greek παράφρασις (paráphrasis) 'additional manner of expression'. The act of paraphrasing is also called paraphrasis.

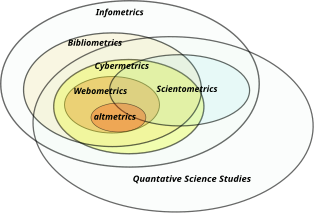

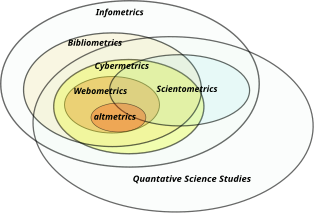

Scientometrics is a subfield of informetrics that studies quantitative aspects of scholarly literature. Major research issues include the measurement of the impact of research papers and academic journals, the understanding of scientific citations, and the use of such measurements in policy and management contexts. In practice there is a significant overlap between scientometrics and other scientific fields such as information systems, information science, science of science policy, sociology of science, and metascience. Critics have argued that overreliance on scientometrics has created a system of perverse incentives, producing a publish or perish environment that leads to low-quality research.

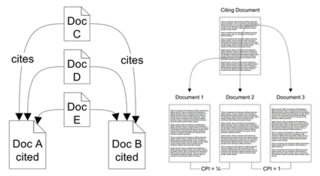

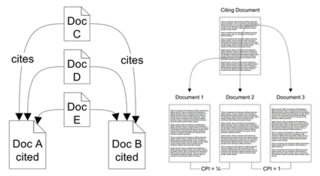

Citation analysis is the examination of the frequency, patterns, and graphs of citations in documents. It uses the directed graph of citations (links from one document to another) to reveal properties of the documents. A typical aim is to identify the most important documents in a collection. A classic example is the citations between academic articles and books. As another example, judges support their judgements by referring back to judgements made in earlier cases. A further example is provided by patents, which cite prior art: earlier patents relevant to the current claim. The digitization of patent data and increasing computing power have led to a community of practice that uses these citation data to measure innovation attributes, trace knowledge flows, and map innovation networks.

Semantic similarity is a metric defined over a set of documents or terms, where the idea of distance between items is based on the likeness of their meaning or semantic content as opposed to lexicographical similarity. Semantic similarity measures are mathematical tools used to estimate the strength of the semantic relationship between units of language, concepts or instances, through a numerical description obtained by comparing the information that supports their meaning or describes their nature. The term semantic similarity is often confused with semantic relatedness. Semantic relatedness includes any relation between two terms, while semantic similarity only includes "is a" relations. For example, "car" is similar to "bus", but is also related to "road" and "driving".
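
The restriction to "is a" relations can be illustrated with a minimal sketch, assuming a tiny hand-written taxonomy. The `PARENT` table, the helper names, and the scoring formula `1 / (1 + path length)` are all hypothetical choices made for illustration; they are not taken from any particular lexical resource or library.

```python
# Hypothetical "is a" taxonomy: each term maps to its direct parent.
PARENT = {
    "car": "vehicle",
    "bus": "vehicle",
    "vehicle": "artifact",
    "road": "artifact",
    "artifact": "entity",
}

def ancestors(term):
    """Return the chain from a term up to the taxonomy root, term first."""
    path = [term]
    while path[-1] in PARENT:
        path.append(PARENT[path[-1]])
    return path

def path_similarity(a, b):
    """Score two terms by the number of "is a" steps between them.

    Returns 1 / (1 + steps) via the closest shared ancestor,
    or 0.0 if the terms share no ancestor.
    """
    path_a, path_b = ancestors(a), ancestors(b)
    steps_in_b = {node: i for i, node in enumerate(path_b)}
    for steps_in_a, node in enumerate(path_a):
        if node in steps_in_b:
            return 1 / (1 + steps_in_a + steps_in_b[node])
    return 0.0

car_bus = path_similarity("car", "bus")    # shared parent: "vehicle"
car_road = path_similarity("car", "road")  # shared ancestor: "artifact"
```

Under this toy taxonomy, "car" scores higher against "bus" than against "road", because the two vehicles meet at a closer common ancestor. Relatedness of the "car"/"driving" kind is not captured at all, since it is not an "is a" relation.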

Google Scholar is a freely accessible web search engine that indexes the full text or metadata of scholarly literature across an array of publishing formats and disciplines. Released in beta in November 2004, the Google Scholar index includes peer-reviewed online academic journals and books, conference papers, theses and dissertations, preprints, abstracts, technical reports, and other scholarly literature, including court opinions and patents.

Informetrics is the study of quantitative aspects of information. It is an extension and evolution of traditional bibliometrics and scientometrics. Informetrics uses bibliometric and scientometric methods to study mainly the problems of literature information management and the evaluation of science and technology. It is an independent discipline that uses quantitative methods from mathematics and statistics to study the processes, phenomena, and laws of information. Informetrics has gained more attention as a common scientific method for academic evaluation, the identification of research hotspots in a discipline, and trend analysis.

In information retrieval, tf–idf, short for term frequency–inverse document frequency, is a measure of the importance of a word to a document in a collection or corpus, adjusted for the fact that some words appear more frequently in general. It is often used as a weighting factor in information retrieval searches, text mining, and user modeling. A survey conducted in 2015 showed that 83% of text-based recommender systems in digital libraries used tf–idf.
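
As a rough illustration of the weighting, the sketch below computes one common tf–idf variant: term frequency normalized by document length, multiplied by the natural logarithm of the inverse document frequency. Several variants are in use, so this particular formula, the function name `tf_idf`, and the toy documents are assumptions made for the example.

```python
import math
from collections import Counter

def tf_idf(docs):
    """Return one {term: weight} dict per tokenized document.

    Assumed variant: tf = count / document length,
    idf = ln(number of documents / documents containing the term).
    """
    n_docs = len(docs)
    # Document frequency: in how many documents each term occurs.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights

# Toy corpus, already split into tokens.
docs = [
    "the cat sat on the mat".split(),
    "the dog sat on the log".split(),
    "the cat chased the dog".split(),
]
scores = tf_idf(docs)
```

In this toy corpus "the" occurs in every document, so its inverse document frequency is ln(1) = 0 and its weight vanishes, while "mat", which occurs in only one document, receives the highest weight in the first document.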

Bibliographic coupling, like co-citation, is a similarity measure that uses citation analysis to establish a similarity relationship between documents. Bibliographic coupling occurs when two works reference a common third work in their bibliographies. It indicates that the two works probably treat related subject matter.
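
A minimal sketch of this idea counts the references two works share. The function name `coupling_strength`, the use of a raw count as the strength, and the sample reference lists are illustrative assumptions.

```python
def coupling_strength(refs_a, refs_b):
    """Number of works that appear in both reference lists."""
    return len(set(refs_a) & set(refs_b))

# Hypothetical bibliographies: work -> works it references.
references = {
    "A": ["X", "Y", "Z"],
    "B": ["Y", "Z", "W"],
    "C": ["Q"],
}

ab = coupling_strength(references["A"], references["B"])  # share Y and Z
ac = coupling_strength(references["A"], references["C"])  # share nothing
```

Here works A and B are coupled with strength 2, while A and C are not coupled. Because a bibliography is fixed at publication, this value does not change over time.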

JabRef is an open-source, cross-platform citation and reference management software. It is used to collect, organize and search bibliographic information.

Plagiarism detection or content similarity detection is the process of locating instances of plagiarism or copyright infringement within a work or document. The widespread use of computers and the advent of the Internet have made it easier to plagiarize the work of others.

Co-citation is the frequency with which two documents are cited together by other documents. If at least one other document cites two documents in common, these documents are said to be co-cited. The more co-citations two documents receive, the higher their co-citation strength, and the more likely they are semantically related. Like bibliographic coupling, co-citation is a semantic similarity measure for documents that makes use of citation analyses.
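
The counting described above can be sketched as follows, assuming the citation data is available as a mapping from each citing document to the documents it cites. The function name `cocitation_counts` and the sample data are hypothetical.

```python
from collections import Counter
from itertools import combinations

def cocitation_counts(references):
    """Count, for each pair of documents, how many documents cite both."""
    counts = Counter()
    for cited in references.values():
        # Each citing document contributes once per pair it cites together.
        for pair in combinations(sorted(set(cited)), 2):
            counts[pair] += 1
    return counts

# Hypothetical data: citing document -> documents it cites.
references = {
    "P1": ["A", "B"],
    "P2": ["A", "B", "C"],
    "P3": ["B", "C"],
}
counts = cocitation_counts(references)
```

In this example A and B are cited together by P1 and P2, giving a co-citation strength of 2, while A and C are co-cited only by P2. Unlike bibliographic coupling, these counts can grow as new citing documents appear.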

A citation graph, in information science and bibliometrics, is a directed graph that describes the citations within a collection of documents.
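
One simple way to represent such a graph is an adjacency mapping from each document to the set of documents it cites. The sketch below builds that mapping from (citing, cited) pairs and counts incoming citations per document; the function names and the sample edges are assumptions made for illustration.

```python
def build_citation_graph(citations):
    """Build {document: set of documents it cites} from (citing, cited) pairs."""
    graph = {}
    for citing, cited in citations:
        graph.setdefault(citing, set()).add(cited)
        # Make sure documents that are only cited also appear as nodes.
        graph.setdefault(cited, set())
    return graph

def citation_counts(graph):
    """Return the in-degree of each document: how often it is cited."""
    counts = {doc: 0 for doc in graph}
    for cited_docs in graph.values():
        for cited in cited_docs:
            counts[cited] += 1
    return counts

# Hypothetical edges: B cites A, C cites A, C cites B.
edges = [("B", "A"), ("C", "A"), ("C", "B")]
graph = build_citation_graph(edges)
in_degree = citation_counts(graph)
```

The edges point from the citing document to the cited one, so a document's in-degree is its citation count. In this example A is cited twice, B once, and C not at all.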

In natural language processing, entity linking, also referred to as named-entity linking (NEL), named-entity disambiguation (NED), named-entity recognition and disambiguation (NERD) or named-entity normalization (NEN), is the task of assigning a unique identity to entities mentioned in text. For example, given the sentence "Paris is the capital of France", the idea is to determine that "Paris" refers to the city of Paris and not to Paris Hilton or any other entity that could be referred to as "Paris". Entity linking differs from named-entity recognition (NER) in that NER identifies the occurrence of a named entity in text but does not identify which specific entity it is.

Paraphrase or paraphrasing in computational linguistics is the natural language processing task of detecting and generating paraphrases. Applications of paraphrasing are varied and include information retrieval, question answering, text summarization, and plagiarism detection. Paraphrasing is also useful in the evaluation of machine translation, as well as in semantic parsing and the generation of new samples to expand existing corpora.

Ronald Rousseau is a Belgian mathematician and information scientist. He has obtained an international reputation for his research on indicators and citation analysis in the fields of bibliometrics and scientometrics.