A citation is a reference to a source. More precisely, a citation is an abbreviated alphanumeric expression embedded in the body of an intellectual work that denotes an entry in the bibliographic references section of the work for the purpose of acknowledging the relevance of the works of others to the topic of discussion at the spot where the citation appears.

Scientific citation is providing detailed reference in a scientific publication, typically a paper or book, to previous published communications which have a bearing on the subject of the new publication. The purpose of citations in original work is to allow readers of the paper to refer to cited work to assist them in judging the new work, source background information vital for future development, and acknowledge the contributions of earlier workers. Citations in, say, a review paper bring together many sources, often recent, in one place.

The impact factor (IF) or journal impact factor (JIF) of an academic journal is a scientometric index calculated by Clarivate that reflects the yearly mean number of citations of articles published in the last two years in a given journal, as indexed by Clarivate's Web of Science.

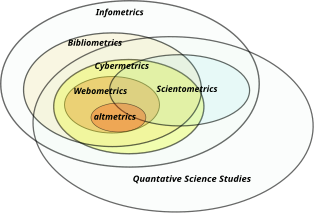

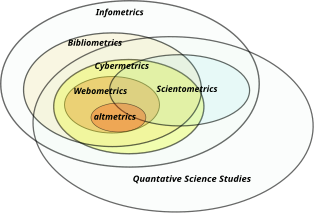

Bibliometrics is the application of statistical methods to the study of bibliographic data, especially in scientific and library and information science contexts, and is closely associated with scientometrics to the point that both fields largely overlap.

Scientometrics is a subfield of informetrics that studies quantitative aspects of scholarly literature. Major research issues include the measurement of the impact of research papers and academic journals, the understanding of scientific citations, and the use of such measurements in policy and management contexts. In practice there is a significant overlap between scientometrics and other scientific fields such as information systems, information science, science of science policy, sociology of science, and metascience. Critics have argued that overreliance on scientometrics has created a system of perverse incentives, producing a publish or perish environment that leads to low-quality research.

Citation analysis is the examination of the frequency, patterns, and graphs of citations in documents. It uses the directed graph of citations — links from one document to another document — to reveal properties of the documents. A typical aim would be to identify the most important documents in a collection. A classic example is that of the citations between academic articles and books. For another example, judges of law support their judgements by referring back to judgements made in earlier cases. An additional example is provided by patents which contain prior art, citation of earlier patents relevant to the current claim. The digitization of patent data and increasing computing power have led to a community of practice that uses these citation data to measure innovation attributes, trace knowledge flows, and map innovation networks.

Google Scholar is a freely accessible web search engine that indexes the full text or metadata of scholarly literature across an array of publishing formats and disciplines. Released in beta in November 2004, the Google Scholar index includes peer-reviewed online academic journals and books, conference papers, theses and dissertations, preprints, abstracts, technical reports, and other scholarly literature, including court opinions and patents.

Informetrics is the study of quantitative aspects of information, it is an extension and evolution of traditional bibliometrics and scientometrics. Informetrics uses bibliometrics and scientometrics methods to study mainly the problems of literature information management and evaluation of science and technology. Informetrics is an independent discipline that uses quantitative methods from mathematics and statistics to study the process, phenomena, and law of informetrics. Informetrics has gained more attention as it is a common scientific method for academic evaluation, research hotspots in discipline, and trend analysis.

Citation impact or citation rate is a measure of how many times an academic journal article or book or author is cited by other articles, books or authors. Citation counts are interpreted as measures of the impact or influence of academic work and have given rise to the field of bibliometrics or scientometrics, specializing in the study of patterns of academic impact through citation analysis. The importance of journals can be measured by the average citation rate, the ratio of number of citations to number articles published within a given time period and in a given index, such as the journal impact factor or the citescore. It is used by academic institutions in decisions about academic tenure, promotion and hiring, and hence also used by authors in deciding which journal to publish in. Citation-like measures are also used in other fields that do ranking, such as Google's PageRank algorithm, software metrics, college and university rankings, and business performance indicators.

The h-index is an author-level metric that measures both the productivity and citation impact of the publications, initially used for an individual scientist or scholar. The h-index correlates with success indicators such as winning the Nobel Prize, being accepted for research fellowships and holding positions at top universities. The index is based on the set of the scientist's most cited papers and the number of citations that they have received in other publications. The index has more recently been applied to the productivity and impact of a scholarly journal as well as a group of scientists, such as a department or university or country. The index was suggested in 2005 by Jorge E. Hirsch, a physicist at UC San Diego, as a tool for determining theoretical physicists' relative quality and is sometimes called the Hirsch index or Hirsch number.

Journal ranking is widely used in academic circles in the evaluation of an academic journal's impact and quality. Journal rankings are intended to reflect the place of a journal within its field, the relative difficulty of being published in that journal, and the prestige associated with it. They have been introduced as official research evaluation tools in several countries.

The Eigenfactor score, developed by Jevin West and Carl Bergstrom at the University of Washington, is a rating of the total importance of a scientific journal. Journals are rated according to the number of incoming citations, with citations from highly ranked journals weighted to make a larger contribution to the eigenfactor than those from poorly ranked journals. As a measure of importance, the Eigenfactor score scales with the total impact of a journal. All else equal, journals generating higher impact to the field have larger Eigenfactor scores. Citation metrics like eigenfactor or PageRank-based scores reduce the effect of self-referential groups.

In scholarly and scientific publishing, altmetrics are non-traditional bibliometrics proposed as an alternative or complement to more traditional citation impact metrics, such as impact factor and h-index. The term altmetrics was proposed in 2010, as a generalization of article level metrics, and has its roots in the #altmetrics hashtag. Although altmetrics are often thought of as metrics about articles, they can be applied to people, journals, books, data sets, presentations, videos, source code repositories, web pages, etc.

Johan Lambert Trudo Maria Bollen is a scientist investigating complex systems and networks, the relation between social media and a variety of socio-economic phenomena such as the financial markets, public health, and social well-being, as well as Science of Science with a focus on impact metrics derived from usage data. He presently works as associate professor at the Indiana University School of Informatics of Indiana University Bloomington and a fellow at the SparcS Institute of Wageningen University and Research Centre in the Netherlands. He is best known for his work on scholarly impact metrics, measuring public well-being from large-scale social media data, and correlating Twitter mood to stock market prices.

Altmetric, or altmetric.com, is a data science company that tracks where published research is mentioned online, and provides tools and services to institutions, publishers, researchers, funders and other organisations to monitor this activity, commonly referred to as altmetrics. Altmetric was recognized by European Commissioner Máire Geoghegan-Quinn in 2014 as a company challenging the traditional reputation systems.

Author-level metrics are citation metrics that measure the bibliometric impact of individual authors, researchers, academics, and scholars. Many metrics have been developed that take into account varying numbers of factors.

Metascience is the use of scientific methodology to study science itself. Metascience seeks to increase the quality of scientific research while reducing inefficiency. It is also known as "research on research" and "the science of science", as it uses research methods to study how research is done and find where improvements can be made. Metascience concerns itself with all fields of research and has been described as "a bird's eye view of science". In the words of John Ioannidis, "Science is the best thing that has happened to human beings ... but we can do it better."

There are a number of approaches to ranking academic publishing groups and publishers. Rankings rely on subjective impressions by the scholarly community, on analyses of prize winners of scientific associations, discipline, a publisher's reputation, and its impact factor.

The Leiden Manifesto for research metrics (LM) is a list of "ten principles to guide research evaluation", published as a comment in Volume 520, Issue 7548 of Nature, on 22 April 2015. It was formulated by public policy professor Diana Hicks, scientometrics professor Paul Wouters, and their colleagues at the 19th International Conference on Science and Technology Indicators, held between 3–5 September 2014 in Leiden, The Netherlands.

The open science movement has expanded the uses scientific output beyond specialized academic circles.