Computer vision tasks include methods for acquiring, processing, analyzing, and understanding digital images, and for extracting high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the form of decisions. Understanding in this context means the transformation of visual images into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory.

A geographic information system (GIS) consists of integrated computer hardware and software that store, manage, analyze, edit, output, and visualize geographic data. Much of this often happens within a spatial database; however, a spatial database is not essential to meet the definition of a GIS. In a broader sense, one may consider such a system also to include human users and support staff, procedures and workflows, the body of knowledge of relevant concepts and methods, and institutional organizations.

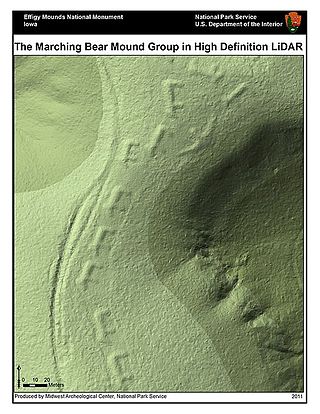

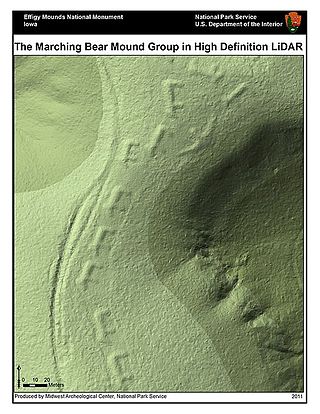

Lidar is a method for determining ranges by targeting an object or a surface with a laser and measuring the time for the reflected light to return to the receiver. Lidar may operate in a fixed direction or it may scan multiple directions, in which case it is known as lidar scanning or 3D laser scanning, a special combination of 3-D scanning and laser scanning. Lidar has terrestrial, airborne, and mobile applications.
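The time-of-flight principle described above reduces to a single formula: the range is half the round-trip path, d = c·t / 2. The following is a minimal sketch under simplifying assumptions (propagation at the vacuum speed of light, a single clean return). The function name and the sample timing value are illustrative and do not come from any particular lidar system.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in metres for a measured round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the target and back, so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


# Hypothetical measurement: a return detected 1 microsecond after emission.
distance_m = range_from_round_trip(1e-6)
print(f"{distance_m:.3f} m")
```

A real instrument would also need to correct for the refractive index of air and for timing offsets in the receiver, which this sketch ignores.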

Topography is the study of the forms and features of land surfaces. The topography of an area may refer to the land forms and features themselves, or a description or depiction in maps.

Imaging is the representation or reproduction of an object's form; especially a visual representation.

Remote sensing is the acquisition of information about an object or phenomenon without making physical contact with the object, in contrast to in situ or on-site observation. The term is applied especially to acquiring information about Earth and other planets. Remote sensing is used in numerous fields, including geophysics, geography, land surveying and most Earth science disciplines. It also has military, intelligence, commercial, economic, planning, and humanitarian applications, among others.

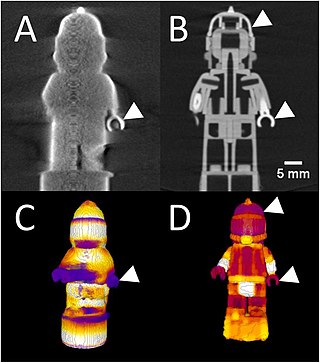

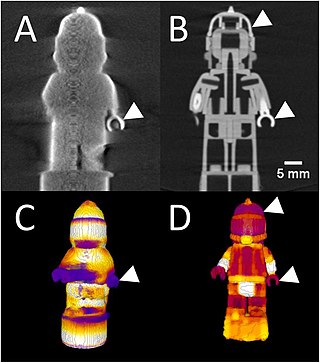

Microscope image processing is a broad term that covers the use of digital image processing techniques to process, analyze, and present images obtained from a microscope. Such processing is now commonplace in diverse fields such as medicine, biological research, cancer research, drug testing, and metallurgy. A number of microscope manufacturers now specifically design in features that allow their microscopes to interface with an image processing system.

Ground truth is information that is known to be real or true, provided by direct observation and measurement as opposed to information provided by inference.

Image analysis or imagery analysis is the extraction of meaningful information from images, mainly from digital images by means of digital image processing techniques. Image analysis tasks can be as simple as reading bar-coded tags or as sophisticated as identifying a person from their face.

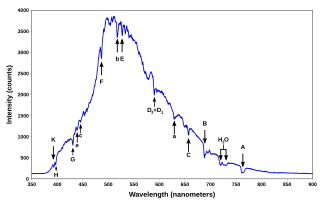

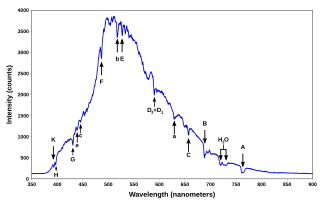

Spectral signature is the variation of reflectance or emittance of a material with respect to wavelength. The spectral signature of a star, for example, indicates the composition of its stellar atmosphere. The spectral signature of an object is a function of the incident EM wavelength and of the material's interaction with that section of the electromagnetic spectrum.

Multispectral imaging captures image data within specific wavelength ranges across the electromagnetic spectrum. The wavelengths may be separated by filters or detected with instruments that are sensitive to particular wavelengths, including light from frequencies beyond the visible range, i.e. infrared and ultraviolet. It can allow extraction of additional information that the human eye fails to capture with its visible receptors for red, green, and blue. It was originally developed for military target identification and reconnaissance. Early space-based imaging platforms incorporated multispectral imaging technology to map details of the Earth related to coastal boundaries, vegetation, and landforms. Multispectral imaging has also found use in document and painting analysis.

Neural network software is used to simulate, research, develop, and apply artificial neural networks, software concepts adapted from biological neural networks, and in some cases, a wider array of adaptive systems such as artificial intelligence and machine learning.

Hyperspectral imaging collects and processes information from across the electromagnetic spectrum. The goal of hyperspectral imaging is to obtain the spectrum for each pixel in the image of a scene, with the purpose of finding objects, identifying materials, or detecting processes. There are three general types of spectral imagers: push broom scanners and the related whisk broom scanners, which read images over time; band sequential scanners, which acquire images of an area at different wavelengths; and snapshot hyperspectral imagers, which use a staring array to generate an image in an instant.
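The idea of obtaining a spectrum for each pixel can be made concrete with a small data-cube sketch. It assumes a push broom style acquisition in which each scan line arrives as a row of per-pixel spectra and the lines are stacked over time. The type aliases, function names, and sample values are hypothetical and chosen only for illustration.

```python
from typing import List

Spectrum = List[float]     # one value per spectral band
ScanLine = List[Spectrum]  # one spectrum per across-track pixel
Cube = List[ScanLine]      # one scan line per along-track time step


def assemble_cube(scan_lines: List[ScanLine]) -> Cube:
    """Stack scan lines acquired over time into a (row, column, band) cube."""
    if not scan_lines or not scan_lines[0]:
        raise ValueError("need at least one non-empty scan line")
    width = len(scan_lines[0])
    bands = len(scan_lines[0][0])
    for line in scan_lines:
        if len(line) != width or any(len(s) != bands for s in line):
            raise ValueError("all scan lines must share width and band count")
    # Copy the data so that later edits to the inputs do not alter the cube.
    return [[list(spectrum) for spectrum in line] for line in scan_lines]


def pixel_spectrum(cube: Cube, row: int, col: int) -> Spectrum:
    """Return the full spectrum recorded at one pixel of the scene."""
    return cube[row][col]


# Hypothetical 2 x 2 scene with 3 bands per pixel.
lines = [
    [[0.10, 0.20, 0.30], [0.11, 0.21, 0.31]],  # scan line at time step 0
    [[0.40, 0.50, 0.60], [0.41, 0.51, 0.61]],  # scan line at time step 1
]
cube = assemble_cube(lines)
print(pixel_spectrum(cube, 1, 0))
```

Practical hyperspectral cubes hold hundreds of bands and are normally stored in array libraries rather than nested lists. The nested-list form is used here only to keep the sketch dependency-free.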

Gerd Binnig is a German physicist. He is most famous for having won the Nobel Prize in Physics jointly with Heinrich Rohrer in 1986 for the invention of the scanning tunneling microscope.

Microsoft PixelSense was an interactive surface computing platform that allowed one or more people to use and touch real-world objects and share digital content at the same time. The PixelSense platform consisted of software and hardware products that combined vision-based multitouch PC hardware, 360-degree multiuser application design, and Windows software to create a natural user interface (NUI).

Remote sensing software is a software application that processes remote sensing data. Remote sensing applications are similar to graphics software, but they enable generating geographic information from satellite and airborne sensor data. Remote sensing applications read specialized file formats that contain sensor image data, georeferencing information, and sensor metadata. Some of the more popular remote sensing file formats include GeoTIFF, NITF, JPEG 2000, ECW, MrSID, HDF, and NetCDF.

Multispectral remote sensing is the collection and analysis of reflected, emitted, or back-scattered energy from an object or an area of interest in multiple bands or regions of the electromagnetic spectrum. Subcategories of multispectral remote sensing include hyperspectral remote sensing, in which hundreds of bands are collected and analyzed, and ultraspectral remote sensing, in which many hundreds of bands are used. The main purpose of multispectral imaging is to allow the image to be classified using multispectral classification, which is a much faster method of image analysis than human interpretation.
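The text does not name a specific classifier, so the sketch below uses minimum-distance-to-mean classification, one simple and well-known approach, as a stand-in. Each pixel is assigned to the class whose mean spectrum is closest in Euclidean distance. The class names, band order, and reflectance values are hypothetical and are not taken from any real sensor.

```python
import math
from typing import Dict, Sequence


def classify_pixel(pixel: Sequence[float],
                   class_means: Dict[str, Sequence[float]]) -> str:
    """Return the name of the class whose mean spectrum is nearest the pixel."""
    if not class_means:
        raise ValueError("at least one class mean is required")
    # math.dist computes the Euclidean distance between two equal-length points.
    return min(class_means, key=lambda name: math.dist(pixel, class_means[name]))


# Hypothetical mean reflectances in three bands (red, green, near-infrared).
CLASS_MEANS = {
    "water": [0.05, 0.04, 0.02],
    "vegetation": [0.04, 0.08, 0.45],
    "soil": [0.20, 0.25, 0.30],
}

# Hypothetical pixels to label.
pixels = [[0.05, 0.09, 0.40], [0.06, 0.05, 0.03], [0.22, 0.24, 0.28]]
labels = [classify_pixel(p, CLASS_MEANS) for p in pixels]
print(labels)
```

In practice the class means would be estimated from training pixels with known labels, and more robust classifiers are often preferred. The point here is only that per-pixel labelling is a mechanical comparison that can be automated.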

Dragon is a remote sensing image processing software package. It provides capabilities for displaying, analyzing, and interpreting digital images from Earth satellites and raster data files that represent spatially distributed data. All the Dragon packages are derived from the code created by Goldin-Rudahl.

The Aphelion Imaging Software Suite is a software suite that includes three base products (Aphelion Lab, Aphelion Dev, and Aphelion SDK) for image processing and image analysis applications. The suite also includes a set of extension programs to implement specific vertical applications that benefit from imaging techniques.

Land cover maps are tools that provide vital information about the Earth's land use and cover patterns. They aid policy development, urban planning, and forest and agricultural monitoring.