Related Research Articles

While Hypertext Markup Language (HTML) has been in use since 1991, HTML 4.0 from December 1997 was the first standardized version where international characters were given reasonably complete treatment. When an HTML document includes special characters outside the range of seven-bit ASCII, two goals are worth considering: the information's integrity, and universal browser display.

Multipurpose Internet Mail Extensions (MIME) is an Internet standard that extends the format of email messages to support text in character sets other than ASCII, as well as attachments of audio, video, images, and application programs. Message bodies may consist of multiple parts, and header information may be specified in non-ASCII character sets. Email messages with MIME formatting are typically transmitted with standard protocols, such as the Simple Mail Transfer Protocol (SMTP), the Post Office Protocol (POP), and the Internet Message Access Protocol (IMAP).

In computing, plain text is a loose term for data that represent only characters of readable material but not its graphical representation nor other objects. It may also include a limited number of "whitespace" characters that affect simple arrangement of text, such as spaces, line breaks, or tabulation characters. Plain text is different from formatted text, where style information is included; from structured text, where structural parts of the document such as paragraphs, sections, and the like are identified; and from binary files in which some portions must be interpreted as binary objects.

Unicode, formally The Unicode Standard, is an information technology standard for the consistent encoding, representation, and handling of text expressed in most of the world's writing systems. The standard, which is maintained by the Unicode Consortium, defines as of the current version (15.0) 149,186 characters covering 161 modern and historic scripts, as well as symbols, thousands of emoji, and non-visual control and formatting codes.

Web pages authored using HyperText Markup Language (HTML) may contain multilingual text represented with the Unicode universal character set. Key to the relationship between Unicode and HTML is the relationship between the "document character set", which defines the set of characters that may be present in an HTML document and assigns numbers to them, and the "external character encoding", or "charset", used to encode a given document as a sequence of bytes.

UTF-8 is a variable-length character encoding standard used for electronic communication. Defined by the Unicode Standard, the name is derived from UnicodeTransformation Format – 8-bit.

8-bit clean is an attribute of computer systems, communication channels, and other devices and software, that handle 8-bit character encodings correctly. Such encoding include the ISO 8859 series and the UTF-8 encoding of Unicode.

The byte order mark (BOM) is a particular usage of the special Unicode character, U+FEFFZERO WIDTH NO-BREAK SPACE, whose appearance as a magic number at the start of a text stream can signal several things to a program reading the text:

Mojibake is the garbled text that is the result of text being decoded using an unintended character encoding. The result is a systematic replacement of symbols with completely unrelated ones, often from a different writing system.

In computer programming, Base64 is a group of binary-to-text encoding schemes that represent binary data in sequences of 24 bits that can be represented by four 6-bit Base64 digits.

Windows-1252 or CP-1252 is a single-byte character encoding of the Latin alphabet that was used by default in Microsoft Windows for English and many Romance and Germanic languages including Spanish, Portuguese, French, and German. This character-encoding scheme is used throughout the Americas, Western Europe, Oceania, and much of Africa. All modern operating systems, including Windows, now use Unicode code points and text encodings by default, which are portable across all of the world's major languages.

UTF-7 is an obsolete variable-length character encoding for representing Unicode text using a stream of ASCII characters. It was originally intended to provide a means of encoding Unicode text for use in Internet E-mail messages that was more efficient than the combination of UTF-8 with quoted-printable.

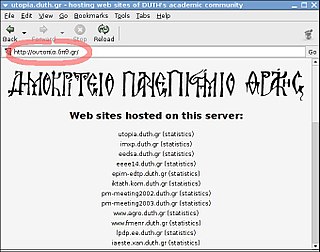

An internationalized domain name (IDN) is an Internet domain name that contains at least one label displayed in software applications, in whole or in part, in non-latin script or alphabet or in the Latin alphabet-based characters with diacritics or ligatures. These writing systems are encoded by computers in multibyte Unicode. Internationalized domain names are stored in the Domain Name System (DNS) as ASCII strings using Punycode transcription.

In the context of an HTTP transaction, basic access authentication is a method for an HTTP user agent to provide a user name and password when making a request. In basic HTTP authentication, a request contains a header field in the form of Authorization: Basic <credentials>, where credentials is the Base64 encoding of ID and password joined by a single colon :.

The data URI scheme is a uniform resource identifier (URI) scheme that provides a way to include data in-line in Web pages as if they were external resources. It is a form of file literal or here document. This technique allows normally separate elements such as images and style sheets to be fetched in a single Hypertext Transfer Protocol (HTTP) request, which may be more efficient than multiple HTTP requests, and used by several browser extensions to package images as well as other multimedia contents in a single HTML file for page saving. As of 2022, data URIs are fully supported by most major browsers, and partially supported in Internet Explorer.

Many email clients now offer some support for Unicode. Some clients will automatically choose between a legacy encoding and Unicode depending on the mail's content, either automatically or when the user requests it.

Windows code pages are sets of characters or code pages used in Microsoft Windows from the 1980s and 1990s. Windows code pages were gradually superseded when Unicode was implemented in Windows, although they are still supported both within Windows and other platforms, and still apply when Alt code shortcuts are used.

A media type is a two-part identifier for file formats and format contents transmitted on the Internet. The Internet Assigned Numbers Authority (IANA) is the official authority for the standardization and publication of these classifications. Media types were originally defined in Request for Comments RFC 2045 (MIME) Part One: Format of Internet Message Bodies in November 1996 as a part of the MIME specification, for denoting type of email message content and attachments; hence the original name, MIME type. Media types are also used by other internet protocols such as HTTP and document file formats such as HTML, for similar purposes.

The Web Hypertext Application Technology Working Group (WHATWG) is a community of people interested in evolving HTML and related technologies. The WHATWG was founded by individuals from Apple Inc., the Mozilla Foundation and Opera Software, leading Web browser vendors, in 2004.

Character encoding detection, charset detection, or code page detection is the process of heuristically guessing the character encoding of a series of bytes that represent text. The technique is recognised to be unreliable and is only used when specific metadata, such as a HTTP Content-Type: header is either not available, or is assumed to be untrustworthy.

References

- ↑ "MIME Type Detection in Windows Internet Explorer". Microsoft. Retrieved 2012-07-14.

- ↑ Barth, Adam. "Secure Content Sniffing for Web Browsers, or How to Stop Papers from Reviewing Themselves" (PDF).

- ↑ Henry Sudhof (11 February 2009). "Risky sniffing: MIME sniffing in Internet Explorer enables cross-site scripting attacks". The H. Retrieved 2012-07-14.

- ↑ Adam Barth, Ian Hickson. "Mime Sniffing". WHATWG. Retrieved 2012-07-14.

- ↑ "Event 1058 - Codepage Sniffing". Internet Explorer. MSDN . Retrieved 2012-07-14.