Related Research Articles

A mainframe computer, informally called a mainframe or big iron, is a computer used primarily by large organizations for critical applications like bulk data processing for tasks such as censuses, industry and consumer statistics, enterprise resource planning, and large-scale transaction processing. A mainframe computer is large but not as large as a supercomputer and has more processing power than some other classes of computers, such as minicomputers, servers, workstations, and personal computers. Most large-scale computer-system architectures were established in the 1960s, but they continue to evolve. Mainframe computers are often used as servers.

Middleware in the context of distributed applications is software that provides services beyond those provided by the operating system to enable the various components of a distributed system to communicate and manage data. Middleware supports and simplifies complex distributed applications. It includes web servers, application servers, messaging and similar tools that support application development and delivery. Middleware is especially integral to modern information technology based on XML, SOAP, Web services, and service-oriented architecture.

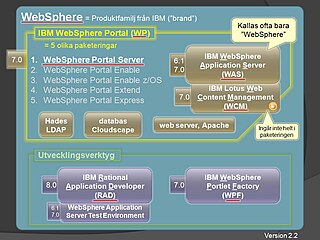

IBM WebSphere refers to a brand of proprietary computer software products in the genre of enterprise software known as "application and integration middleware". These software products are used by end-users to create and integrate applications with other applications. IBM WebSphere has been available to the general market since 1998.

Total cost of ownership (TCO) is a financial estimate intended to help buyers and owners determine the direct and indirect costs of a product or service. It is a management accounting concept that can be used in full cost accounting or even ecological economics where it includes social costs.

Failover is switching to a redundant or standby computer server, system, hardware component or network upon the failure or abnormal termination of the previously active application, server, system, hardware component, or network in a computer network. Failover and switchover are essentially the same operation, except that failover is automatic and usually operates without warning, while switchover requires human intervention.

The Service Availability Forum is a consortium that develops, publishes, educates on and promotes open specifications for carrier-grade and mission-critical systems. Formed in 2001, it promotes development and deployment of commercial off-the-shelf (COTS) technology.

A LAMP is one of the most common software stacks for the web's most popular applications. Its generic software stack model has largely interchangeable components.

Reliability, availability and serviceability (RAS), also known as reliability, availability, and maintainability (RAM), is a computer hardware engineering term involving reliability engineering, high availability, and serviceability design. The phrase was originally used by IBM as a term to describe the robustness of their mainframe computers.

High availability (HA) is a characteristic of a system that aims to ensure an agreed level of operational performance, usually uptime, for a higher than normal period.

The term downtime is used to refer to periods when a system is unavailable. The unavailability is the proportion of a time-span that a system is unavailable or offline. This is usually a result of the system failing to function because of an unplanned event, or because of routine maintenance.

Database administration is the function of managing and maintaining database management systems (DBMS) software. Mainstream DBMS software such as Oracle, IBM Db2 and Microsoft SQL Server need ongoing management. As such, corporations that use DBMS software often hire specialized information technology personnel called database administrators or DBAs.

Hardware virtualization is the virtualization of computers as complete hardware platforms, certain logical abstractions of their componentry, or only the functionality required to run various operating systems. Virtualization emulates the hardware environment of its host architecture, allowing multiple OSes to run unmodified and in isolation. At its origins, the software that controlled virtualization was called a "control program", but the terms "hypervisor" or "virtual machine monitor" became preferred over time.

In software engineering and hardware engineering, serviceability is one of the -ilities or aspects. It refers to the ability of technical support personnel to install, configure, and monitor computer products, identify exceptions or faults, debug or isolate faults to root cause analysis, and provide hardware or software maintenance in pursuit of solving a problem and restoring the product into service. Incorporating serviceability facilitating features typically results in more efficient product maintenance and reduces operational costs and maintains business continuity.

4690 Operating System is a specially designed point of sale (POS) operating system, originally sold by IBM. In 2012, IBM sold its retail business, including this product, to Toshiba, which assumed support. 4690 is widely used by IBM and Toshiba retail customers to run retail systems which run their own applications and others.

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software. The newest manifestation of cluster computing is cloud computing.

Dynamic Infrastructure is an information technology concept related to the design of data centers, whereby the underlying hardware and software can respond dynamically and more efficiently to changing levels of demand. In other words, data center assets such as storage and processing power can be provisioned to meet surges in user's needs. The concept has also been referred to as Infrastructure 2.0 and Next Generation Data Center.

Middleware analysts are computer software engineers with a specialization in products that connect two different computer systems together. These products can be open-source or proprietary. As the term implies, the software, tools, and technologies used by Middleware analysts sit "in-the-middle", between two or more systems; the purpose being to enable two systems to communicate and share information.

Software-defined storage (SDS) is a marketing term for computer data storage software for policy-based provisioning and management of data storage independent of the underlying hardware. Software-defined storage typically includes a form of storage virtualization to separate the storage hardware from the software that manages it. The software enabling a software-defined storage environment may also provide policy management for features such as data deduplication, replication, thin provisioning, snapshots and backup.

High availability software is software used to ensure that systems are running and available most of the time. High availability is a high percentage of time that the system is functioning. It can be formally defined as *100%. Although the minimum required availability varies by task, systems typically attempt to achieve 99.999% (5-nines) availability. This characteristic is weaker than fault tolerance, which typically seeks to provide 100% availability, albeit with significant price and performance penalties.

Data center management is the collection of tasks performed by those responsible for managing ongoing operation of a data center. This includes Business service management and planning for the future.

References

- ↑ Business Continuity: Delivering Data and Applications Through Continuous Availability, A META Group White Paper, June 2003 Archived 2012-04-25 at the Wayback Machine

- ↑ Gartner Survey Shows IT Availability Remain Top Priorities for U.S. IT Services Buyers, September 2010

- ↑ High availability (again) versus continuous availability, IBM WebSphere Developer Technical Journal, April 14, 2010

- ↑ Bob Dickerson: Service Recovery & Availability, IEEE Computer Society, 2010 Meeting

- ↑ itSM Solutions Newsletter December 2006: The Paradox of the 9s