In mathematics, and more specifically in linear algebra, a linear map is a mapping between two vector spaces that preserves the operations of vector addition and scalar multiplication. The same names and the same definition are also used for the more general case of modules over a ring; see Module homomorphism.
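The two preservation properties can be checked numerically for a map given by a matrix. The following is a minimal sketch, not a proof: the matrix `A`, the vectors `u` and `v`, and the scalar `c` are arbitrary values chosen for illustration, and exact rational arithmetic is used so that the equality tests are not affected by rounding.

```python
from fractions import Fraction

def apply(matrix, vector):
    """Return the matrix-vector product as a list."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

def add(u, v):
    """Componentwise vector addition."""
    return [a + b for a, b in zip(u, v)]

def scale(c, v):
    """Scalar multiplication of a vector."""
    return [c * a for a in v]

# Hypothetical example: a map from Q^3 to Q^2 given by a 2 x 3 matrix.
A = [[1, 2, 0],
     [0, 1, -1]]
u = [Fraction(1), Fraction(2), Fraction(3)]
v = [Fraction(-1), Fraction(0), Fraction(4)]
c = Fraction(5, 2)

# Additivity: f(u + v) == f(u) + f(v)
additive = apply(A, add(u, v)) == add(apply(A, u), apply(A, v))
# Homogeneity: f(c * u) == c * f(u)
homogeneous = apply(A, scale(c, u)) == scale(c, apply(A, u))
```

Both flags evaluate to `True` here, as they would for any matrix, since matrix-vector multiplication is linear.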

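Linear algebra is the branch of mathematics concerning linear equations such as a₁x₁ + ⋯ + aₙxₙ = b, linear maps such as (x₁, …, xₙ) ↦ a₁x₁ + ⋯ + aₙxₙ, and their representations in vector spaces and through matrices.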

In linear algebra, the rank of a matrix A is the dimension of the vector space generated by its columns. This corresponds to the maximal number of linearly independent columns of A. This, in turn, is identical to the dimension of the vector space spanned by its rows. Rank is thus a measure of the "nondegenerateness" of the system of linear equations and linear transformation encoded by A. There are multiple equivalent definitions of rank. A matrix's rank is one of its most fundamental characteristics.
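The equality of column rank and row rank can be illustrated with a small computation. The sketch below assumes Gaussian elimination over the rationals as the method (one of several possible ways to compute rank); the function names `rank` and `transpose` and the example matrix `A` are illustrative choices.

```python
from fractions import Fraction

def rank(matrix):
    """Rank via Gaussian elimination with exact rational arithmetic."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    n_cols = len(rows[0]) if rows else 0
    r = 0  # number of pivots found so far
    for col in range(n_cols):
        # Look for a nonzero entry in this column at or below row r.
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Eliminate the entries below the pivot.
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def transpose(matrix):
    """Swap rows and columns."""
    return [list(col) for col in zip(*matrix)]

# Hypothetical example: the second row is twice the first.
A = [[1, 2, 3],
     [2, 4, 6],
     [1, 0, 1]]

row_rank = rank(A)                 # dimension of the row space
column_rank = rank(transpose(A))   # dimension of the column space
```

For this matrix both values are 2, reflecting that only two of the three rows (and two of the three columns) are linearly independent.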

In mathematics, a tensor is an algebraic object that describes a multilinear relationship between sets of algebraic objects related to a vector space. Tensors may map between different objects such as vectors, scalars, and even other tensors. There are many types of tensors, including scalars and vectors, dual vectors, multilinear maps between vector spaces, and even some operations such as the dot product. Tensors are defined independent of any basis, although they are often referred to by their components in a basis related to a particular coordinate system; those components form an array, which can be thought of as a high-dimensional matrix.

In mathematics, the dimension of a vector space V is the cardinality of a basis of V over its base field. It is sometimes called Hamel dimension or algebraic dimension to distinguish it from other types of dimension.

In algebra, the kernel of a homomorphism is generally the inverse image of 0. An important special case is the kernel of a linear map. The kernel of a matrix, also called the null space, is the kernel of the linear map defined by the matrix.

In linear algebra, the column space of a matrix A is the span of its column vectors. The column space of a matrix is the image or range of the corresponding matrix transformation.

In linear algebra, the singular value decomposition (SVD) is a factorization of a real or complex matrix into a rotation, followed by a rescaling, followed by another rotation. It generalizes the eigendecomposition of a square normal matrix with an orthonormal eigenbasis to any matrix. It is related to the polar decomposition.
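In symbols, the factorization is usually written as follows. The names M, U, Σ and V are the conventional ones and are an assumption here, since the text above does not fix a notation; M is taken to be an m × n matrix.

```latex
M = U \Sigma V^{*},
\qquad U^{*}U = I_m,
\qquad V^{*}V = I_n,
\qquad \Sigma = \operatorname{diag}(\sigma_1, \sigma_2, \dots),
\quad \sigma_1 \ge \sigma_2 \ge \dots \ge 0 .
```

Here U and V are unitary (orthogonal in the real case) and play the role of the two rotations, while the rectangular diagonal matrix Σ of singular values performs the rescaling.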

In combinatorics, a branch of mathematics, a matroid is a structure that abstracts and generalizes the notion of linear independence in vector spaces. There are many equivalent ways to define a matroid axiomatically, the most significant being in terms of: independent sets; bases or circuits; rank functions; closure operators; and closed sets or flats. In the language of partially ordered sets, a finite simple matroid is equivalent to a geometric lattice.

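The rank–nullity theorem is a theorem in linear algebra, which asserts that the number of columns of a matrix M is the sum of the rank of M and the nullity of M, and that the dimension of the domain of a linear transformation f is the sum of the rank of f (the dimension of its image) and the nullity of f (the dimension of its kernel).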

In mathematics, the dimension theorem for vector spaces states that all bases of a vector space have equally many elements. This number of elements may be finite or infinite, and defines the dimension of the vector space.

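In mathematics, a bilinear form is a bilinear map V × V → K on a vector space V over a field K. In other words, a bilinear form is a function B : V × V → K that is linear in each argument separately: B(u + v, w) = B(u, w) + B(v, w) and B(λu, v) = λB(u, v) in the first argument, and B(u, v + w) = B(u, v) + B(u, w) and B(u, λv) = λB(u, v) in the second.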
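As a concrete instance, any square matrix M over K defines a bilinear form by B(u, v) = uᵀMv. The sketch below checks linearity in each argument for one such form; the matrix `M`, the test vectors, and the scalar `c` are arbitrary illustrative values, and a passing check on sample inputs is of course not a proof.

```python
from fractions import Fraction

# Hypothetical 2 x 2 matrix defining the form B(u, v) = u^T M v.
M = [[1, 2],
     [0, 3]]

def B(u, v):
    """Evaluate the bilinear form defined by M."""
    return sum(u[i] * M[i][j] * v[j] for i in range(2) for j in range(2))

def add(u, v):
    """Componentwise vector addition."""
    return [a + b for a, b in zip(u, v)]

def scale(c, u):
    """Scalar multiplication of a vector."""
    return [c * a for a in u]

u, v, w = [1, 2], [3, -1], [0, 5]
c = Fraction(3, 4)

# Linearity in the first argument.
linear_in_first = (B(add(u, v), w) == B(u, w) + B(v, w)
                   and B(scale(c, u), w) == c * B(u, w))
# Linearity in the second argument.
linear_in_second = (B(u, add(v, w)) == B(u, v) + B(u, w)
                    and B(u, scale(c, v)) == c * B(u, v))
```

Because this `M` is not symmetric, B(u, v) and B(v, u) generally differ, which shows that bilinearity does not require symmetry.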

In linear algebra, the quotient of a vector space V by a subspace N is a vector space obtained by "collapsing" N to zero. The space obtained is called a quotient space and is denoted V/N.

In mathematics, the kernel of a linear map, also known as the null space or nullspace, is the part of the domain which is mapped to the zero vector of the co-domain; the kernel is always a linear subspace of the domain. That is, given a linear map L : V → W between two vector spaces V and W, the kernel of L is the vector space of all elements v of V such that L(v) = 0, where 0 denotes the zero vector in W, or more symbolically: ker(L) = {v ∈ V : L(v) = 0}.
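For a map given by a matrix, a basis of the kernel can be read off from the reduced row echelon form. The following is a minimal sketch under that assumption; the helper names `rref` and `kernel_basis` and the example matrix `A` are illustrative, and exact rational arithmetic is used to avoid rounding issues.

```python
from fractions import Fraction

def rref(matrix):
    """Return the reduced row echelon form and the list of pivot columns."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    n_cols = len(rows[0]) if rows else 0
    pivots = []
    r = 0
    for col in range(n_cols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        lead = rows[r][col]
        rows[r] = [a / lead for a in rows[r]]  # make the pivot equal to 1
        for i in range(len(rows)):
            if i != r and rows[i][col] != 0:
                factor = rows[i][col]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        pivots.append(col)
        r += 1
    return rows, pivots

def kernel_basis(matrix):
    """One basis vector of the kernel per free (non-pivot) column."""
    n_cols = len(matrix[0])
    rows, pivots = rref(matrix)
    basis = []
    for free in range(n_cols):
        if free in pivots:
            continue
        vec = [Fraction(0)] * n_cols
        vec[free] = Fraction(1)
        # Each pivot variable is determined by the chosen free variable.
        for r, p in enumerate(pivots):
            vec[p] = -rows[r][free]
        basis.append(vec)
    return basis

# Hypothetical example: a 3 x 3 matrix of rank 2.
A = [[1, 2, 3],
     [2, 4, 6],
     [1, 0, 1]]
basis = kernel_basis(A)
# Apply A to every basis vector; each image should be the zero vector.
images = [[sum(a * x for a, x in zip(row, v)) for row in A] for v in basis]
```

Here the kernel is one-dimensional, spanned by (−1, −1, 1), and every entry of `images` is zero. The matrix has two pivot columns and one free column, so its rank and the dimension of its kernel add up to its three columns.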

In linear algebra, an eigenvector or characteristic vector is a nonzero vector that has its direction unchanged (or reversed) by a given linear transformation. More precisely, an eigenvector, v, of a linear transformation, T, is scaled by a constant factor, λ, when the linear transformation is applied to it: Tv = λv. The corresponding eigenvalue, characteristic value, or characteristic root is the multiplying factor λ.
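The defining relation can be tested directly for a candidate vector and scalar. The sketch below does this for a small symmetric matrix; the matrix `A` and the candidate pairs are illustrative values, and `is_eigenpair` is a hypothetical helper name.

```python
def apply(matrix, vector):
    """Return the matrix-vector product as a list."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

def is_eigenpair(matrix, vector, value):
    """True if vector is nonzero and matrix * vector == value * vector."""
    if all(x == 0 for x in vector):
        return False  # the zero vector is excluded by definition
    return apply(matrix, vector) == [value * x for x in vector]

# Hypothetical example matrix with integer entries.
A = [[2, 1],
     [1, 2]]

first = is_eigenpair(A, [1, 1], 3)    # A maps (1, 1) to (3, 3)
second = is_eigenpair(A, [1, -1], 1)  # A maps (1, -1) to (1, -1)
third = is_eigenpair(A, [1, 0], 2)    # A maps (1, 0) to (2, 1), not a multiple
```

The first two checks succeed and the third fails: (1, 0) is sent to (2, 1), which points in a different direction.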

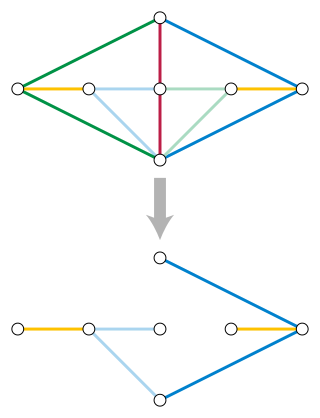

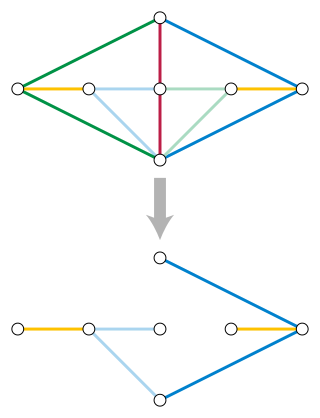

In the mathematics of structural rigidity, a rigidity matroid is a matroid that describes the number of degrees of freedom of an undirected graph with rigid edges of fixed lengths, embedded into Euclidean space. In a rigidity matroid for a graph with n vertices in d-dimensional space, a set of edges that defines a subgraph with k degrees of freedom has matroid rank dn − k. A set of edges is independent if and only if, for every edge in the set, removing the edge would increase the number of degrees of freedom of the remaining subgraph.

In the mathematical theory of matroids, the rank of a matroid is the maximum size of an independent set in the matroid. The rank of a subset S of elements of the matroid is, similarly, the maximum size of an independent subset of S, and the rank function of the matroid maps sets of elements to their ranks.
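A rank function can be sketched from an independence test alone. The code below relies on the matroid property that all maximal independent subsets of a set have the same size, so a greedy pass suffices. As an assumed example it uses the graphic matroid, in which a set of edges is independent when it contains no cycle; `matroid_rank` and `is_forest` are hypothetical helper names.

```python
def matroid_rank(subset, is_independent):
    """Size of a maximal independent subset, built greedily."""
    chosen = []
    for element in subset:
        if is_independent(chosen + [element]):
            chosen.append(element)
    return len(chosen)

def is_forest(edges):
    """Independence test for a graphic matroid: no cycle among the edges."""
    parent = {}

    def find(x):
        # Union-find lookup with path halving.
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for u, v in edges:
        root_u, root_v = find(u), find(v)
        if root_u == root_v:
            return False  # this edge would close a cycle
        parent[root_u] = root_v
    return True

# Hypothetical graph: a triangle on vertices 1, 2, 3 plus a pendant edge to 4.
triangle = [(1, 2), (2, 3), (1, 3)]
all_edges = triangle + [(3, 4)]

rank_triangle = matroid_rank(triangle, is_forest)
rank_all = matroid_rank(all_edges, is_forest)
```

The triangle has rank 2 because any two of its edges form a forest but all three form a cycle; the full edge set has rank 3, the size of a spanning tree on four vertices.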

In the mathematical theory of matroids, a matroid representation is a family of vectors whose linear independence relation is the same as that of a given matroid. Matroid representations are analogous to group representations; both types of representation provide abstract algebraic structures with concrete descriptions in terms of linear algebra.

In linear algebra and matroid theory, Rota's basis conjecture is an unproven conjecture concerning rearrangements of bases, named after Gian-Carlo Rota. It states that, if X is either a vector space of dimension n or more generally a matroid of rank n, with n disjoint bases Bi, then it is possible to arrange the elements of these bases into an n × n matrix in such a way that the rows of the matrix are exactly the given bases and the columns of the matrix are also bases. That is, it should be possible to find a second set of n disjoint bases Ci, each of which consists of one element from each of the bases Bi.

In combinatorial optimization, the matroid parity problem is a problem of finding the largest independent set of paired elements in a matroid. The problem was formulated by Lawler (1976) as a common generalization of graph matching and matroid intersection. It is also known as polymatroid matching, or the matchoid problem.