UNICOS is a range of Unix, and later Linux, operating system (OS) variants developed by Cray for its supercomputers. UNICOS is the successor of the Cray Operating System (COS). It provides network clustering and source code compatibility layers for some other Unixes. UNICOS was originally introduced in 1985 with the Cray-2 system and later ported to other Cray models. The original UNICOS was based on UNIX System V Release 2, and had many Berkeley Software Distribution (BSD) features added to it.

In computing, floating-point operations per second (FLOPS) is a measure of computer performance, useful in fields of scientific computation that require floating-point calculations. For such cases, it is a more accurate measure than instructions per second.
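As a rough illustration of how a FLOPS figure is composed, the sketch below estimates a theoretical peak rate as cores × clock rate × floating-point operations per cycle. The function name and the sample values are assumptions chosen for illustration and do not describe any specific machine mentioned here; sustained performance on real workloads is typically lower than this upper bound.

```python
def peak_flops(cores: int, clock_hz: float, flops_per_cycle: int) -> float:
    """Theoretical peak floating-point rate in operations per second.

    Assumes every core retires `flops_per_cycle` floating-point
    operations on every clock cycle, which is an upper bound only.
    """
    return cores * clock_hz * flops_per_cycle


def to_teraflops(flops: float) -> float:
    """Convert operations per second to teraFLOPS (10**12 FLOPS)."""
    return flops / 1e12


# Hypothetical machine: 1,024 cores at 2.0 GHz, 4 operations per cycle.
example_peak = peak_flops(cores=1024, clock_hz=2.0e9, flops_per_cycle=4)
example_peak_tflops = to_teraflops(example_peak)
```

For the hypothetical values above, the estimate comes to about 8.2 teraFLOPS.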

Cray Inc., a subsidiary of Hewlett Packard Enterprise, is an American supercomputer manufacturer headquartered in Seattle, Washington. It also manufactures systems for data storage and analytics. Several Cray supercomputer systems are listed in the TOP500, which ranks the most powerful supercomputers in the world.

The Cray T3E was Cray Research's second-generation massively parallel supercomputer architecture, launched in late November 1995. The first T3E was installed at the Pittsburgh Supercomputing Center in 1996. Like the previous Cray T3D, it was a fully distributed memory machine using a 3D torus topology interconnection network. The T3E initially used the DEC Alpha 21164 (EV5) microprocessor and was designed to scale from 8 to 2,176 Processing Elements (PEs). Each PE had between 64 MB and 2 GB of DRAM and a 6-way interconnect router with a payload bandwidth of 480 MB/s in each direction. Unlike many other MPP systems, including the T3D, the T3E was fully self-hosted and ran the UNICOS/mk distributed operating system with a GigaRing I/O subsystem integrated into the torus for network, disk and tape I/O.
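The 3D torus and the 6-way router described above can be sketched as follows: each node has one neighbour in the positive and negative direction of each of the three dimensions, with coordinates wrapping around at the edges. This is a minimal model of the topology only, assuming integer node coordinates; the function names are hypothetical and the code says nothing about how the T3E actually routed traffic.

```python
def torus_neighbors(coord, dims):
    """Return the six neighbours of `coord` in a 3D torus of size `dims`.

    Coordinates wrap around modulo the size of each dimension. The six
    results are distinct only when every dimension has at least 3 nodes.
    """
    x, y, z = coord
    nx, ny, nz = dims
    return [
        ((x + 1) % nx, y, z), ((x - 1) % nx, y, z),  # +x, -x links
        (x, (y + 1) % ny, z), (x, (y - 1) % ny, z),  # +y, -y links
        (x, y, (z + 1) % nz), (x, y, (z - 1) % nz),  # +z, -z links
    ]


def torus_hops(a, b, dims):
    """Minimum number of link hops between nodes `a` and `b`."""
    hops = 0
    for ai, bi, n in zip(a, b, dims):
        d = abs(ai - bi)
        hops += min(d, n - d)  # go whichever way round the ring is shorter
    return hops


corner_neighbors = torus_neighbors((0, 0, 0), (4, 4, 4))
```

Because of the wrap-around links, node (0, 0, 0) in a 4 × 4 × 4 torus is a single hop from node (3, 0, 0), whereas the same pair would be three hops apart in a plain mesh.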

EPCC, formerly the Edinburgh Parallel Computing Centre, is a supercomputing centre based at the University of Edinburgh. Since its foundation in 1990, its stated mission has been to accelerate the effective exploitation of novel computing throughout industry, academia and commerce.

Red Storm is a supercomputer architecture designed for the US Department of Energy’s National Nuclear Security Administration Advanced Simulation and Computing Program. Cray Inc. developed it based on the contracted architectural specifications provided by Sandia National Laboratories. The architecture was later commercially produced as the Cray XT3.

The Cray XT3 is a distributed memory massively parallel MIMD supercomputer designed by Cray Inc. with Sandia National Laboratories under the codename Red Storm. Cray turned the design into a commercial product in 2004. The XT3 derives much of its architecture from the previous Cray T3E system, and also from the Intel ASCI Red supercomputer.

Cray XMT is a scalable multithreaded shared memory supercomputer architecture by Cray, based on the third generation of the Tera MTA architecture and targeted at large graph problems. Presented in 2005, it supersedes the earlier unsuccessful Cray MTA-2. It uses Threadstorm3 CPUs inside Cray XT3 blades. Designed to use commodity parts and subsystems already built for other commercial systems, it avoided the Cray MTA-2's high cost of fully custom manufacture and support. It brought substantial improvements over the Cray MTA-2, most notably nearly tripling the peak performance and vastly increasing the maximum CPU count to 8,192 and the maximum memory to 128 TB, with a data TLB addressing up to 512 TB.

HECToR was a British academic national supercomputer service funded by EPSRC, Natural Environment Research Council (NERC) and BBSRC for the UK academic community. The HECToR service was run by partners including EPCC, Science and Technology Facilities Council (STFC) and Numerical Algorithms Group (NAG).

The Cray X2 is a vector processing node for the Cray XT5h supercomputer, developed and sold by Cray Inc. and launched in 2007.

The Bigben supercomputer was a Cray XT3 MPP system with 2068 nodes located at Pittsburgh Supercomputing Center. It was decommissioned on March 31, 2010. Bigben was a part of the TeraGrid.

The Cray XT5 is an updated version of the Cray XT4 supercomputer, launched on November 6, 2007. It includes a faster version of the XT4's SeaStar2 interconnect router called SeaStar2+, and can be configured either with XT4 compute blades, which have four dual-core AMD Opteron processor sockets, or XT5 blades, with eight sockets supporting dual or quad-core Opterons. The XT5 uses a 3-dimensional torus network topology.

The Cray CX1 is a deskside high-performance workstation designed by Cray Inc., based on the x86-64 processor architecture. It was launched on September 16, 2008, and was discontinued in early 2012. It comprises a single chassis blade server design that supports a maximum of eight modular single-width blades, giving up to 96 processor cores. Computational load can be run independently on each blade and/or combined using clustering techniques.

Compute Node Linux (CNL) is a runtime environment based on the Linux kernel for the Cray XT3, Cray XT4, Cray XT5, Cray XT6, Cray XE6 and Cray XK6 supercomputer systems. It is based on SUSE Linux Enterprise Server and forms part of the Cray Linux Environment. As of November 2011, systems running CNL were ranked 3rd, 6th and 8th among the fastest supercomputers in the world.

Jaguar or OLCF-2 was a petascale supercomputer built by Cray at Oak Ridge National Laboratory (ORNL) in Oak Ridge, Tennessee. The massively parallel Jaguar had a peak performance of just over 1,750 teraFLOPS. It had 224,256 x86-based AMD Opteron processor cores, and operated with a version of Linux called the Cray Linux Environment. Jaguar was a Cray XT5 system, a development from the Cray XT4 supercomputer.

The Cray XT6 is an updated version of the Cray XT5 supercomputer, launched on 16 November 2009. The dual- or quad-core AMD Opteron 2000-series processors of the XT5 are replaced in the XT6 with eight- or 12-core Opteron 6100 processors, giving up to 2,304 cores per cabinet. The XT6 includes the same SeaStar2+ interconnect router as the XT5, which is used to provide a 3-dimensional torus network topology between nodes. Each XT6 node has two processor sockets, one SeaStar2+ router and either 32 or 64 GB of DDR3 SDRAM memory. Four nodes form one X6 compute blade.
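The per-cabinet core count quoted above can be checked against the node and blade figures in the same paragraph. The short calculation below uses only numbers stated there (12 cores per socket, two sockets per node, four nodes per blade, 2,304 cores per cabinet); the blade count and memory total it derives are inferences from those numbers, not figures given in the text.

```python
# Figures stated in the XT6 description.
CORES_PER_SOCKET = 12      # largest Opteron 6100 option
SOCKETS_PER_NODE = 2
NODES_PER_BLADE = 4
CORES_PER_CABINET = 2304
MAX_MEMORY_PER_NODE_GB = 64

cores_per_node = CORES_PER_SOCKET * SOCKETS_PER_NODE
cores_per_blade = cores_per_node * NODES_PER_BLADE

# Derived (not stated in the text): blades implied by the cabinet total.
blades_per_cabinet, leftover_cores = divmod(CORES_PER_CABINET, cores_per_blade)

# Derived: nodes and maximum memory per fully populated cabinet.
nodes_per_cabinet = blades_per_cabinet * NODES_PER_BLADE
max_memory_per_cabinet_gb = nodes_per_cabinet * MAX_MEMORY_PER_NODE_GB
```

The figures are mutually consistent: 96 cores per blade divides 2,304 exactly, implying 24 blades and 96 nodes in a full cabinet.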

The Cray XE6, made by Cray, is an enhanced version of the Cray XT6 supercomputer, officially announced on 25 May 2010. The XE6 uses the same compute blade found in the XT6, with eight- or 12-core Opteron 6100 processors giving up to 3,072 cores per cabinet, but replaces the SeaStar2+ interconnect router used in the Cray XT5 and XT6 with the faster and more scalable Gemini router ASIC. This is used to provide a 3-dimensional torus network topology between nodes. Each XE6 node has two processor sockets and either 32 or 64 GB of DDR3 SDRAM memory. Two nodes share one Gemini router ASIC.

The Cray CX1000 is a family of high-performance computers which is manufactured by Cray Inc., and consists of two individual groups of computer systems. The first group is intended for scale-up symmetric multiprocessing (SMP), and consists of the CX1000-SM and CX1000-SC nodes. The second group is meant for scale-out cluster computing, and consists of the CX1000 Blade Enclosure, and the CX1000-HN, CX1000-C and CX1000-G nodes.

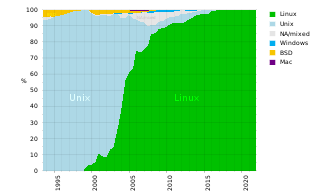

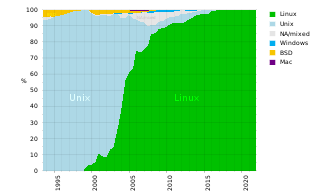

A supercomputer operating system is an operating system intended for supercomputers. Since the end of the 20th century, supercomputer operating systems have undergone major transformations, as fundamental changes have occurred in supercomputer architecture. While early operating systems were custom tailored to each supercomputer to gain speed, the trend has been away from in-house operating systems and toward some form of Linux, which ran on every supercomputer on the TOP500 list in November 2017. In 2021, the top 10 computers ran, for instance, Red Hat Enterprise Linux (RHEL) or a variant of it, or another Linux distribution such as Ubuntu.

SHMEM is a family of parallel programming libraries, providing one-sided, RDMA, parallel-processing interfaces for low-latency distributed-memory supercomputers. The SHMEM acronym was subsequently reverse engineered to mean “Symmetric Hierarchical MEMory”. Later it was extended to distributed memory parallel computer clusters, and it is used as a parallel programming interface or as a low-level interface to build partitioned global address space (PGAS) systems and languages. “Libsma”, the first SHMEM library, was created by Richard Smith at Cray Research in 1993 as a set of thin interfaces to access the CRAY T3D’s inter-processor-communication hardware. SHMEM has been implemented by Cray Research, SGI, Cray Inc., Quadrics, HP, GSHMEM, IBM, QLogic, Mellanox, and the Universities of Houston and Florida; there is also the open-source OpenSHMEM.