In computer networking, a thin client, sometimes called a slim client or lean client, is a simple (low-performance) computer that has been optimized for establishing a remote connection with a server-based computing environment. Thin clients are sometimes known as network computers, or in their simplest form as zero clients. The server does most of the work, which can include launching software programs, performing calculations, and storing data. This contrasts with a rich client or a conventional personal computer; the former is also intended for working in a client–server model but has significant local processing power, while the latter aims to perform its function mostly locally.

A server farm or server cluster is a collection of computer servers, usually maintained by an organization to supply server functionality far beyond the capability of a single machine. Server farms often consist of thousands of computers, which require a large amount of power to run and to keep cool. Running a server farm at its optimum performance level carries enormous financial and environmental costs. Server farms often include backup servers that can take over the functions of primary servers that fail. They are typically collocated with the network switches and/or routers that enable communication between different parts of the cluster and the cluster's users. Server "farmers" typically mount computers, routers, power supplies and related electronics on 19-inch racks in a server room or data center.

A data center is a building, a dedicated space within a building, or a group of buildings used to house computer systems and associated components, such as telecommunications and storage systems.

A central heating system provides warmth to a number of spaces within a building from one main source of heat. It is a component of heating, ventilation, and air conditioning systems, which can both cool and warm interior spaces.

Green computing, green IT, or ICT sustainability, is the study and practice of environmentally sustainable computing or IT.

Utility computing, or computer utility, is a service provisioning model in which a service provider makes computing resources and infrastructure management available to the customer as needed, and charges them for specific usage rather than a flat rate. Like other types of on-demand computing, the utility model seeks to maximize the efficient use of resources and/or minimize associated costs. Utility is the packaging of system resources, such as computation, storage and services, as a metered service. This model has the advantage of a low or no initial cost to acquire computer resources; instead, resources are essentially rented.
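The difference between metered and flat-rate charging can be illustrated with a minimal sketch. The resource names, per-unit rates, and flat fee below are hypothetical values chosen for illustration, not any provider's actual prices; amounts are kept in integer cents to avoid rounding issues.

```python
# Hypothetical per-unit rates in cents; not taken from any real price list.
RATES_CENTS = {"cpu_hours": 5, "gb_stored": 2}
FLAT_FEE_CENTS = 5000  # hypothetical flat monthly rate for comparison


def metered_charge(usage, rates=RATES_CENTS):
    """Return the charge in cents for the resources actually consumed."""
    return sum(rates[resource] * amount for resource, amount in usage.items())


# Two illustrative months of usage for the same customer.
light_month = {"cpu_hours": 100, "gb_stored": 50}
heavy_month = {"cpu_hours": 2000, "gb_stored": 500}

for label, usage in (("light", light_month), ("heavy", heavy_month)):
    charge = metered_charge(usage)
    print(f"{label}: metered {charge} cents vs flat {FLAT_FEE_CENTS} cents")
```

Under these assumed numbers, the light month costs well below the flat fee and the heavy month costs more, which is the trade-off a metered model makes.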

Software multitenancy is a software architecture in which a single instance of software runs on a server and serves multiple tenants. Systems designed in such a manner are "shared". A tenant is a group of users who share common access, with specific privileges, to the software instance. With a multitenant architecture, a software application is designed to provide every tenant a dedicated share of the instance, including its data, configuration, user management, tenant-specific functionality, and non-functional properties. Multitenancy contrasts with multi-instance architectures, where separate software instances operate on behalf of different tenants.
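A minimal sketch of the idea follows: one application object serves two tenants while keeping each tenant's data and configuration separate. The class name `MultitenantApp`, its methods, and the tenant names are hypothetical and chosen only for illustration; a real system would also need authentication and persistent storage.

```python
class MultitenantApp:
    """A single application instance that serves several tenants."""

    def __init__(self):
        self._config = {}   # tenant id -> that tenant's settings
        self._records = {}  # tenant id -> that tenant's data

    def register(self, tenant_id, **settings):
        """Give a new tenant its own configuration and empty data store."""
        self._config[tenant_id] = dict(settings)
        self._records[tenant_id] = []

    def add_record(self, tenant_id, record):
        """Store a record in the given tenant's share of the instance."""
        self._records[tenant_id].append(record)

    def records(self, tenant_id):
        """Return a copy of only the given tenant's data."""
        return list(self._records[tenant_id])

    def setting(self, tenant_id, key):
        """Look up a configuration value for the given tenant."""
        return self._config[tenant_id][key]


app = MultitenantApp()  # one instance, shared by both tenants
app.register("acme", theme="dark")
app.register("globex", theme="light")
app.add_record("acme", "invoice-1")
app.add_record("globex", "order-7")

print(app.records("acme"))
print(app.setting("globex", "theme"))
```

In a multi-instance design, by contrast, each tenant would get its own `MultitenantApp()` object (or its own process or server) rather than a keyed share of a single one.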

Renewable heat is an application of renewable energy referring to the generation of heat from renewable sources; for example, feeding radiators with water warmed by focused solar radiation rather than by a fossil fuel boiler. Renewable heat technologies include renewable biofuels, solar heating, geothermal heating, heat pumps and heat exchangers. Insulation is almost always an important factor in how renewable heating is implemented.

Cloud storage is a model of computer data storage in which data, said to be on "the cloud", is stored remotely in logical pools and is accessible to users over a network, typically the Internet. The physical storage spans multiple servers, and the physical environment is typically owned and managed by a cloud computing provider. These cloud storage providers are responsible for keeping the data available and accessible, and the physical environment secured, protected, and running. People and organizations buy or lease storage capacity from the providers to store user, organization, or application data.

Infrastructure as a service (IaaS) is a cloud computing service model where a cloud services vendor provides computing resources such as storage, network, servers, and virtualization. This service frees users from maintaining their own data center, but they must install and maintain the operating system and application software. IaaS provides users with high-level APIs to control details of the underlying network infrastructure such as backup, data partitioning, scaling, security and physical computing resources. Services can be scaled on demand by the user. According to the Internet Engineering Task Force (IETF), such infrastructure is the most basic cloud-service model. IaaS can be hosted in a public cloud, a private cloud, or a hybrid cloud.

A server room is a room, usually air-conditioned, devoted to the continuous operation of computer servers. An entire building or station devoted to this purpose is a data center.

In computing, virtualization (v12n) is a series of technologies that allows dividing of physical computing resources into a series of virtual machines, operating systems, processes or containers.

The Rackspace Cloud is a set of cloud computing products and services billed on a utility computing basis from the US-based company Rackspace. Offerings include Cloud Storage, virtual private server, load balancers, databases, backup, and monitoring.

According to ISO, "Cloud computing is a paradigm for enabling network access to a scalable and elastic pool of shareable physical or virtual resources with self-service provisioning and administration on-demand."

Dynamic Infrastructure is an information technology concept related to the design of data centers, whereby the underlying hardware and software can respond dynamically and more efficiently to changing levels of demand. In other words, data center assets such as storage and processing power can be provisioned to meet surges in users' needs. The concept has also been referred to as Infrastructure 2.0 and Next Generation Data Center.
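Provisioning capacity to follow demand can be sketched as a simple sizing rule. The function name `servers_needed`, the demand samples, and the per-server capacity are hypothetical; real systems typically add safety margins and delays before scaling down.

```python
def servers_needed(demand, capacity_per_server, minimum=1):
    """Smallest server count that covers the demand (integer ceiling division)."""
    return max(minimum, -(-demand // capacity_per_server))


# Hypothetical demand samples (requests per second) and per-server capacity.
demand_samples = [80, 450, 1200, 300]
CAPACITY = 100

# Number of servers to provision for each sample, following demand up and down.
plan = [servers_needed(d, CAPACITY) for d in demand_samples]
print(plan)
```

With these assumed numbers, the surge to 1200 requests per second is met by provisioning 12 servers, and the count drops again once demand falls.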

Wyse Technology, Inc., or simply Wyse, was an independent American manufacturer of cloud computing systems. Wyse is best remembered for its video terminal line introduced in the 1980s, which competed with the market-leading Digital. It also had a successful line of IBM PC compatible workstations in the mid-to-late 1980s. Starting late in the decade, however, Wyse was outcompeted by companies such as eventual parent Dell. Current products include thin client hardware and software as well as desktop virtualization solutions. Other products include cloud software that supports desktop computers, laptops, and mobile devices. Dell Cloud Client Computing is partnered with IT vendors such as Citrix, IBM, Microsoft, and VMware.

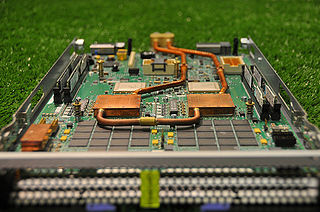

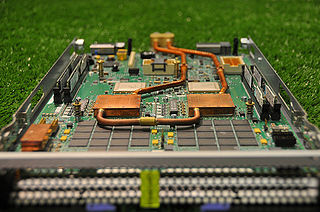

Aquasar is a supercomputer prototype created by IBM Labs in collaboration with ETH Zurich in Zürich, Switzerland and ETH Lausanne in Lausanne, Switzerland. While most supercomputers use air as their coolant, Aquasar uses hot water to achieve its high computing efficiency. Alongside the hot-water cooling, an air-cooled section is included so that the cooling efficiency of the two coolants can be compared. The comparison could later be used to help improve the hot water coolant's performance. The research program was first titled "Direct use of waste heat from liquid-cooled supercomputers: the path to energy saving, emission-high performance computers and data centers." The waste heat produced by the cooling system can be fed back into the building's heating system, potentially saving money. The three-year collaborative project began in 2009 and was developed with the aim of saving energy and being environmentally safe while delivering top-tier performance.

A data processing unit (DPU) is a programmable computer processor that tightly integrates a general-purpose CPU with network interface hardware. DPUs are sometimes called "IPUs" or "SmartNICs". They can be used in place of traditional NICs to relieve the main CPU of complex networking responsibilities and other "infrastructural" duties; although their features vary, they may be used to perform encryption/decryption, serve as a firewall, handle TCP/IP, process HTTP requests, or even function as a hypervisor or storage controller. These devices can be attractive to cloud computing providers whose servers might otherwise spend a significant amount of CPU time on these tasks, cutting into the cycles they can provide to guests.

Immersion cooling is an IT cooling practice by which servers are completely or partially immersed in a dielectric fluid that has significantly higher thermal conductivity than air. Heat is removed from the system by putting the coolant in direct contact with hot components and circulating the heated liquid through heat exchangers. This practice is highly effective because liquid coolants can absorb more heat from the system than air. Its benefits include improved sustainability, performance, reliability, and cost.

Ampere Computing LLC is an American fabless semiconductor company based in Santa Clara, California that develops processors for servers operating in large scale environments. It was founded in 2017 by Renée James.