In the context of an operating system, a device driver is a computer program that operates or controls a particular type of device that is attached to a computer or automaton. A driver provides a software interface to hardware devices, enabling operating systems and other computer programs to access hardware functions without needing to know precise details about the hardware being used.

An operating system (OS) is system software that manages computer hardware and software resources, and provides common services for computer programs.

In computing, a segmentation fault or access violation is a fault, or failure condition, raised by hardware with memory protection, notifying an operating system (OS) the software has attempted to access a restricted area of memory. On standard x86 computers, this is a form of general protection fault. The operating system kernel will, in response, usually perform some corrective action, generally passing the fault on to the offending process by sending the process a signal. Processes can in some cases install a custom signal handler, allowing them to recover on their own, but otherwise the OS default signal handler is used, generally causing abnormal termination of the process, and sometimes a core dump.

In computer science, static program analysis is the analysis of computer programs performed without executing them, in contrast with dynamic program analysis, which is performed on programs during their execution in the integrated environment.

An embedded system is a specialized computer system—a combination of a computer processor, computer memory, and input/output peripheral devices—that has a dedicated function within a larger mechanical or electronic system. It is embedded as part of a complete device often including electrical or electronic hardware and mechanical parts. Because an embedded system typically controls physical operations of the machine that it is embedded within, it often has real-time computing constraints. Embedded systems control many devices in common use. In 2009, it was estimated that ninety-eight percent of all microprocessors manufactured were used in embedded systems.

In computer engineering, a hardware description language (HDL) is a specialized computer language used to describe the structure and behavior of electronic circuits, usually to design application-specific integrated circuits (ASICs) and to program field-programmable gate arrays (FPGAs).

In computer science, formal methods are mathematically rigorous techniques for the specification, development, analysis, and verification of software and hardware systems. The use of formal methods for software and hardware design is motivated by the expectation that, as in other engineering disciplines, performing appropriate mathematical analysis can contribute to the reliability and robustness of a design.

System software is software designed to provide a platform for other software. Examples of system software include operating systems (OS).

In the context of hardware and software systems, formal verification is the act of proving or disproving the correctness of a system with respect to a certain formal specification or property, using formal methods of mathematics. Formal verification is a key incentive for formal specification of systems, and is at the core of formal methods. It represents an important dimension of analysis and verification in electronic design automation and is one approach to software verification. The use of formal verification enables the highest Evaluation Assurance Level (EAL7) in the framework of common criteria for computer security certification.

Hardware abstractions are sets of routines in software that provide programs with access to hardware resources through programming interfaces. The programming interface allows all devices in a particular class C of hardware devices to be accessed through identical interfaces even though C may contain different subclasses of devices that each provide a different hardware interface.

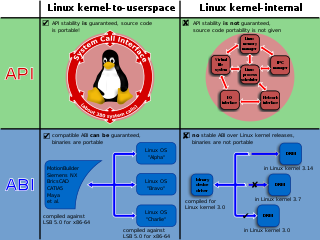

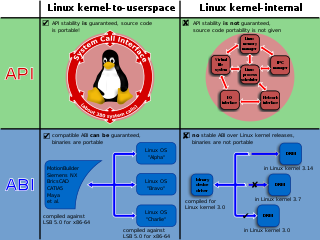

The Linux kernel provides multiple interfaces to user-space and kernel-mode code that are used for varying purposes and that have varying properties by design. There are two types of application programming interface (API) in the Linux kernel:

- the "kernel–user space" API; and

- the "kernel internal" API.

In software engineering, profiling is a form of dynamic program analysis that measures, for example, the space (memory) or time complexity of a program, the usage of particular instructions, or the frequency and duration of function calls. Most commonly, profiling information serves to aid program optimization, and more specifically, performance engineering.

Advanced Configuration and Power Interface (ACPI) is an open standard that operating systems can use to discover and configure computer hardware components, to perform power management, auto configuration, and status monitoring. It was first released in December 1996. ACPI aims to replace Advanced Power Management (APM), the MultiProcessor Specification, and the Plug and Play BIOS (PnP) Specification. ACPI brings power management under the control of the operating system, as opposed to the previous BIOS-centric system that relied on platform-specific firmware to determine power management and configuration policies. The specification is central to the Operating System-directed configuration and Power Management (OSPM) system. ACPI defines hardware abstraction interfaces between the device's firmware, the computer hardware components, and the operating systems.

This is an alphabetical list of articles pertaining specifically to software engineering.

Runtime verification is a computing system analysis and execution approach based on extracting information from a running system and using it to detect and possibly react to observed behaviors satisfying or violating certain properties. Some very particular properties, such as datarace and deadlock freedom, are typically desired to be satisfied by all systems and may be best implemented algorithmically. Other properties can be more conveniently captured as formal specifications. Runtime verification specifications are typically expressed in trace predicate formalisms, such as finite state machines, regular expressions, context-free patterns, linear temporal logics, etc., or extensions of these. This allows for a less ad-hoc approach than normal testing. However, any mechanism for monitoring an executing system is considered runtime verification, including verifying against test oracles and reference implementations. When formal requirements specifications are provided, monitors are synthesized from them and infused within the system by means of instrumentation. Runtime verification can be used for many purposes, such as security or safety policy monitoring, debugging, testing, verification, validation, profiling, fault protection, behavior modification, etc. Runtime verification avoids the complexity of traditional formal verification techniques, such as model checking and theorem proving, by analyzing only one or a few execution traces and by working directly with the actual system, thus scaling up relatively well and giving more confidence in the results of the analysis, at the expense of less coverage. Moreover, through its reflective capabilities runtime verification can be made an integral part of the target system, monitoring and guiding its execution during deployment.

In Linux systems, initrd is a scheme for loading a temporary root file system into memory, to be used as part of the Linux startup process. initrd and initramfs refer to two different methods of achieving this. Both are commonly used to make preparations before the real root file system can be mounted.

In the context of free and open-source software, proprietary software only available as a binary executable is referred to as a blob or binary blob. The term usually refers to a device driver module loaded into the kernel of an open-source operating system, and is sometimes also applied to code running outside the kernel, such as system firmware images, microcode updates, or userland programs. The term blob was first used in database management systems to describe a collection of binary data stored as a single entity.

In computer science, fault injection is a testing technique for understanding how computing systems behave when stressed in unusual ways. This can be achieved using physical- or software-based means, or using a hybrid approach. Widely studied physical fault injections include the application of high voltages, extreme temperatures and electromagnetic pulses on electronic components, such as computer memory and central processing units. By exposing components to conditions beyond their intended operating limits, computing systems can be coerced into mis-executing instructions and corrupting critical data.

The kernel is a computer program at the core of a computer's operating system and generally has complete control over everything in the system. The kernel is also responsible for preventing and mitigating conflicts between different processes. It is the portion of the operating system code that is always resident in memory and facilitates interactions between hardware and software components. A full kernel controls all hardware resources via device drivers, arbitrates conflicts between processes concerning such resources, and optimizes the utilization of common resources e.g. CPU & cache usage, file systems, and network sockets. On most systems, the kernel is one of the first programs loaded on startup. It handles the rest of the startup as well as memory, peripherals, and input/output (I/O) requests from software, translating them into data-processing instructions for the central processing unit.

In computer science, dynamic software updating (DSU) is a field of research pertaining to upgrading programs while they are running. DSU is not currently widely used in industry. However, researchers have developed a wide variety of systems and techniques for implementing DSU. These systems are commonly tested on real-world programs.