In probability theory, the expected value of a random variable is, intuitively, the long-run average of its values over many independent repetitions of the experiment it represents. For example, the expected value of a roll of a fair six-sided die is 3.5, because the average of the numbers rolled approaches 3.5 as the number of rolls approaches infinity. More precisely, the law of large numbers states that the arithmetic mean of the values almost surely converges to the expected value as the number of repetitions approaches infinity. The expected value is also known as the expectation, mathematical expectation, EV, average, mean value, mean, or first moment.
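
The die example can be checked numerically. The following is a minimal sketch, assuming a fair die and Python's standard pseudo-random generator; the function names `expected_value` and `sample_mean` are illustrative and do not come from any particular library.

```python
import random
from fractions import Fraction

def expected_value(distribution):
    """Exact expectation of a finite distribution given as {value: probability}."""
    return sum(value * prob for value, prob in distribution.items())

def sample_mean(n_rolls, seed=0):
    """Arithmetic mean of n_rolls simulated rolls of a fair six-sided die."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    return sum(rng.randint(1, 6) for _ in range(n_rolls)) / n_rolls

# Assumption: every face has probability 1/6.
fair_die = {face: Fraction(1, 6) for face in range(1, 7)}

print(expected_value(fair_die))  # 7/2, i.e. 3.5
for n in (10, 1_000, 100_000):
    # The sample mean should drift toward 3.5 as n grows.
    print(n, sample_mean(n))
```

For small `n` the sample mean can be far from 3.5; the law of large numbers only describes the limit as the number of rolls grows.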

The Fock space is an algebraic construction used in quantum mechanics to construct the space of quantum states of a variable or unknown number of identical particles from a single-particle Hilbert space H. It is named after V. A. Fock, who first introduced it in his 1932 paper "Konfigurationsraum und zweite Quantelung".

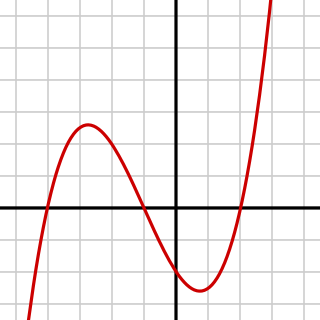

In mathematical analysis, a function of bounded variation, also known as a BV function, is a real-valued function whose total variation is bounded (finite): the graph of a function having this property is well behaved in a precise sense. For a continuous function of a single variable, being of bounded variation means that the distance along the direction of the y-axis, neglecting the contribution of motion along the x-axis, traveled by a point moving along the graph has a finite value. For a continuous function of several variables, the meaning of the definition is the same, except for the fact that the continuous path to be considered cannot be the whole graph of the given function, but can be every intersection of the graph itself with a hyperplane parallel to a fixed x-axis and to the y-axis.
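
For a function of a single variable, the "distance traveled along the y-axis" can be approximated by summing the absolute increments of the function over a fine partition. The sketch below assumes a uniform partition, which only gives a lower bound on the total variation in general; the name `total_variation` and the parameter `n` are illustrative.

```python
import math

def total_variation(f, a, b, n=10_000):
    """Approximate the total variation of f on [a, b].

    Sums |f(x_{i+1}) - f(x_i)| over a uniform partition with n subintervals.
    Any such partition sum is a lower bound for the true total variation.
    """
    xs = [a + (b - a) * i / n for i in range(n + 1)]
    ys = [f(x) for x in xs]
    return sum(abs(y1 - y0) for y0, y1 in zip(ys, ys[1:]))

# sin rises by 1, falls by 2, then rises by 1 on [0, 2*pi],
# so its total variation there is 4.
print(total_variation(math.sin, 0.0, 2 * math.pi))
```

The partition sum matches the true value here because the default grid contains the turning points of the sine function; for a general function, refining the partition can only increase the sum.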

In mathematics, a Sobolev space is a vector space of functions equipped with a norm that is a combination of $L^p$-norms of the function itself and its derivatives up to a given order. The derivatives are understood in a suitable weak sense to make the space complete, and hence a Banach space. Intuitively, a Sobolev space is a space of functions with sufficiently many derivatives for some application domain, such as partial differential equations, and equipped with a norm that measures both the size and regularity of a function.
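
One common way to write such a norm is shown below. The notation is an assumption not fixed by the text above: $\Omega$ is the domain, $k$ the order, $1 \le p < \infty$, $\alpha$ a multi-index, and $D^\alpha u$ the weak derivative of $u$. Other equivalent norms are also in use.

```latex
\|u\|_{W^{k,p}(\Omega)}
  = \Bigl( \sum_{|\alpha| \le k} \|D^{\alpha} u\|_{L^p(\Omega)}^{p} \Bigr)^{1/p},
\qquad
W^{k,p}(\Omega)
  = \bigl\{ u \in L^p(\Omega) : D^{\alpha} u \in L^p(\Omega) \text{ for all } |\alpha| \le k \bigr\}.
```

The term with $\alpha = 0$ measures the size of $u$, and the remaining terms measure its regularity.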

In mathematics and computer algebra, automatic differentiation (AD), also called algorithmic differentiation or computational differentiation, is a set of techniques to numerically evaluate the derivative of a function specified by a computer program. AD exploits the fact that every computer program, no matter how complicated, executes a sequence of elementary arithmetic operations and elementary functions. By applying the chain rule repeatedly to these operations, derivatives of arbitrary order can be computed automatically, accurately to working precision, and using at most a small constant factor more arithmetic operations than the original program.
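
The chain-rule idea can be illustrated with dual numbers, which carry a value together with its derivative through each elementary operation. This is a minimal sketch of first-order forward-mode AD only, supporting just addition, multiplication and sine; the names `Dual`, `sin` and `derivative` are illustrative and not taken from any specific AD library.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Dual:
    """A value paired with the derivative of that value w.r.t. the input."""
    value: float
    deriv: float = 0.0

    def __add__(self, other):
        other = _lift(other)
        # Sum rule: (u + v)' = u' + v'
        return Dual(self.value + other.value, self.deriv + other.deriv)
    __radd__ = __add__

    def __mul__(self, other):
        other = _lift(other)
        # Product rule: (u * v)' = u' * v + u * v'
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)
    __rmul__ = __mul__

def _lift(x):
    """Treat plain numbers as constants (derivative zero)."""
    return x if isinstance(x, Dual) else Dual(float(x))

def sin(x):
    x = _lift(x)
    # Chain rule: sin(u)' = cos(u) * u'
    return Dual(math.sin(x.value), math.cos(x.value) * x.deriv)

def derivative(f, x):
    """Evaluate f'(x) by seeding the input with derivative 1."""
    return f(Dual(x, 1.0)).deriv

def f(x):
    return x * x + 3 * sin(x)

print(derivative(f, 2.0))  # equals 2*2 + 3*cos(2) up to rounding
```

No symbolic expression for the derivative is built and no finite differences are taken; the derivative is accumulated alongside the ordinary evaluation, which is why the result is accurate to working precision.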

In linear algebra, the Gram matrix of a set of vectors $v_1, \dots, v_n$ in an inner product space is the Hermitian matrix of inner products, whose entries are given by $G_{ij} = \langle v_i, v_j \rangle$.
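
As a small illustration, the Gram matrix of a few vectors in $\mathbb{C}^2$ can be computed directly. The sketch assumes the standard inner product, taken conjugate-linear in the first argument (a convention choice); the function names `inner` and `gram_matrix` are illustrative.

```python
def inner(u, v):
    """Standard inner product on C^n, conjugate-linear in the first argument."""
    return sum(a.conjugate() * b for a, b in zip(u, v))

def gram_matrix(vectors):
    """Matrix of all pairwise inner products of the given vectors."""
    return [[inner(vi, vj) for vj in vectors] for vi in vectors]

vectors = [(1, 1j), (1, 0), (0, 2)]
G = gram_matrix(vectors)

for row in G:
    print(row)

# Hermitian check: each entry equals the complex conjugate of its transpose entry.
is_hermitian = all(
    G[i][j] == G[j][i].conjugate()
    for i in range(len(G))
    for j in range(len(G))
)
print(is_hermitian)  # True
```

The diagonal entries are the squared norms of the vectors, and the matrix is Hermitian whichever argument the inner product is conjugate-linear in.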

A locally compact quantum group is a relatively new C*-algebraic approach toward quantum groups that generalizes the Kac algebra, compact-quantum-group and Hopf-algebra approaches. Earlier attempts at a unifying definition of quantum groups using, for example, multiplicative unitaries have enjoyed some success but have also encountered several technical problems.

In mathematics, in particular in algebraic geometry and differential geometry, Dolbeault cohomology is an analog of de Rham cohomology for complex manifolds. Let M be a complex manifold. Then the Dolbeault cohomology groups depend on a pair of integers p and q and are realized as a subquotient of the space of complex differential forms of degree (p,q).

In complex analysis, functional analysis and operator theory, a Bergman space is a function space of holomorphic functions in a domain D of the complex plane that are sufficiently well-behaved at the boundary that they are absolutely integrable. Specifically, for 0 < p < ∞, the Bergman space $A^p(D)$ is the space of all holomorphic functions $f$ in D for which the p-norm is finite:

$$\|f\|_{A^p(D)} := \left( \int_D |f(x+iy)|^p \, dx \, dy \right)^{1/p} < \infty.$$

Weak formulations are important tools for the analysis of mathematical equations that permit the transfer of concepts of linear algebra to solve problems in other fields such as partial differential equations. In a weak formulation, an equation is no longer required to hold absolutely and has instead weak solutions only with respect to certain "test vectors" or "test functions". This is equivalent to formulating the problem to require a solution in the sense of a distribution.
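
A standard illustration, chosen here as an assumption rather than taken from the text above, is the Poisson problem with homogeneous Dirichlet boundary conditions on a bounded domain $\Omega$. Multiplying the equation by a test function $v$ and integrating by parts moves one derivative from $u$ onto $v$:

```latex
% Strong form: find u such that
-\Delta u = f \quad \text{in } \Omega, \qquad u = 0 \quad \text{on } \partial\Omega.

% Weak form: find u \in H^1_0(\Omega) such that
\int_\Omega \nabla u \cdot \nabla v \, dx = \int_\Omega f \, v \, dx
\qquad \text{for all test functions } v \in H^1_0(\Omega).
```

The boundary term from the integration by parts vanishes because $v = 0$ on $\partial\Omega$. The weak form requires only first derivatives of $u$, and only in an integrated sense, so it can admit solutions that are not twice differentiable.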

In mathematics, the Petersson inner product is an inner product defined on the space of entire modular forms. It was introduced by the German mathematician Hans Petersson.

In mathematics, the Milliken–Taylor theorem in combinatorics is a generalization of both Ramsey's theorem and Hindman's theorem. It is named after Keith Milliken and Alan D. Taylor.

In mathematics, a holomorphic vector bundle is a complex vector bundle over a complex manifold X such that the total space E is a complex manifold and the projection map π : E → X is holomorphic. Fundamental examples are the holomorphic tangent bundle of a complex manifold, and its dual, the holomorphic cotangent bundle. A holomorphic line bundle is a rank one holomorphic vector bundle.

In many-body theory, the term Green's function is sometimes used interchangeably with correlation function, but refers specifically to correlators of field operators or creation and annihilation operators.

In statistical mechanics, thermal fluctuations are random deviations of a system at equilibrium from its average state. All thermal fluctuations become larger and more frequent as the temperature increases, and likewise they decrease as the temperature approaches absolute zero.

Modulation spaces are a family of Banach spaces defined by the behavior of the short-time Fourier transform with respect to a test function from the Schwartz space. They were originally proposed by Hans Georg Feichtinger and are recognized to be the right kind of function spaces for time-frequency analysis. Feichtinger's algebra, while originally introduced as a new Segal algebra, is identical to a certain modulation space and has become a widely used space of test functions for time-frequency analysis.

Coherent states were introduced in a physical context, first as quasi-classical states in quantum mechanics and then as the backbone of quantum optics; they are described in that spirit in the article Coherent states. However, they have generated a huge variety of generalizations, which have led to a tremendous literature in mathematical physics. In this article, we sketch the main directions of research along this line. For further details, we refer to several existing surveys.

In mathematics, the Grunsky matrices, or Grunsky operators, are matrices introduced by Grunsky (1939) in complex analysis and geometric function theory. They correspond to either a single holomorphic function on the unit disk or a pair of holomorphic functions on the unit disk and its complement. The Grunsky inequalities express boundedness properties of these matrices, which in general are contraction operators or in important special cases unitary operators. As Grunsky showed, these inequalities hold if and only if the holomorphic function is univalent. The inequalities are equivalent to the inequalities of Goluzin, discovered in 1947. Roughly speaking, the Grunsky inequalities give information on the coefficients of the logarithm of a univalent function; later generalizations by Milin, starting from the Lebedev–Milin inequality, succeeded in exponentiating the inequalities to obtain inequalities for the coefficients of the univalent function itself. Historically the inequalities were used in proving special cases of the Bieberbach conjecture up to the sixth coefficient; the exponentiated inequalities of Milin were used by de Branges in the final solution. The Grunsky operators and their Fredholm determinants are related to spectral properties of bounded domains in the complex plane. The operators have further applications in conformal mapping, Teichmüller theory and conformal field theory.

In mathematics, the Neumann–Poincaré operator or Poincaré–Neumann operator, named after Carl Neumann and Henri Poincaré, is a non-self-adjoint compact operator introduced by Poincaré to solve boundary value problems for the Laplacian on bounded domains in Euclidean space. Within the language of potential theory it reduces the partial differential equation to an integral equation on the boundary to which the theory of Fredholm operators can be applied. The theory is particularly simple in two dimensions—the case treated in detail in this article—where it is related to complex function theory, the conjugate Beurling transform or complex Hilbert transform and the Fredholm eigenvalues of bounded planar domains.