Related Research Articles

Digital image processing is the use of a digital computer to process digital images through an algorithm. As a subcategory or field of digital signal processing, digital image processing has many advantages over analog image processing. It allows a much wider range of algorithms to be applied to the input data and can avoid problems such as the build-up of noise and distortion during processing. Since images are defined over two dimensions digital image processing may be modeled in the form of multidimensional systems. The generation and development of digital image processing are mainly affected by three factors: first, the development of computers; second, the development of mathematics ; third, the demand for a wide range of applications in environment, agriculture, military, industry and medical science has increased.

The Canny edge detector is an edge detection operator that uses a multi-stage algorithm to detect a wide range of edges in images. It was developed by John F. Canny in 1986. Canny also produced a computational theory of edge detection explaining why the technique works.

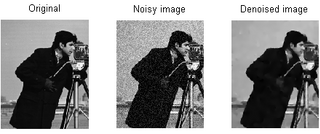

Noise reduction is the process of removing noise from a signal. Noise reduction techniques exist for audio and images. Noise reduction algorithms may distort the signal to some degree. Noise rejection is the ability of a circuit to isolate an undesired signal component from the desired signal component, as with common-mode rejection ratio.

In digital image processing and computer vision, image segmentation is the process of partitioning a digital image into multiple image segments, also known as image regions or image objects. The goal of segmentation is to simplify and/or change the representation of an image into something that is more meaningful and easier to analyze. Image segmentation is typically used to locate objects and boundaries in images. More precisely, image segmentation is the process of assigning a label to every pixel in an image such that pixels with the same label share certain characteristics.

The diffusion equation is a parabolic partial differential equation. In physics, it describes the macroscopic behavior of many micro-particles in Brownian motion, resulting from the random movements and collisions of the particles. In mathematics, it is related to Markov processes, such as random walks, and applied in many other fields, such as materials science, information theory, and biophysics. The diffusion equation is a special case of the convection–diffusion equation when bulk velocity is zero. It is equivalent to the heat equation under some circumstances.

In mathematics, the discrete Laplace operator is an analog of the continuous Laplace operator, defined so that it has meaning on a graph or a discrete grid. For the case of a finite-dimensional graph, the discrete Laplace operator is more commonly called the Laplacian matrix.

Scale-space theory is a framework for multi-scale signal representation developed by the computer vision, image processing and signal processing communities with complementary motivations from physics and biological vision. It is a formal theory for handling image structures at different scales, by representing an image as a one-parameter family of smoothed images, the scale-space representation, parametrized by the size of the smoothing kernel used for suppressing fine-scale structures. The parameter in this family is referred to as the scale parameter, with the interpretation that image structures of spatial size smaller than about have largely been smoothed away in the scale-space level at scale .

The topological derivative is, conceptually, a derivative of a shape functional with respect to infinitesimal changes in its topology, such as adding an infinitesimal hole or crack. When used in higher dimensions than one, the term topological gradient is also used to name the first-order term of the topological asymptotic expansion, dealing only with infinitesimal singular domain perturbations. It has applications in shape optimization, topology optimization, image processing and mechanical modeling.

Compressed sensing is a signal processing technique for efficiently acquiring and reconstructing a signal, by finding solutions to underdetermined linear systems. This is based on the principle that, through optimization, the sparsity of a signal can be exploited to recover it from far fewer samples than required by the Nyquist–Shannon sampling theorem. There are two conditions under which recovery is possible. The first one is sparsity, which requires the signal to be sparse in some domain. The second one is incoherence, which is applied through the isometric property, which is sufficient for sparse signals. Compressed sensing has applications in, for example, MRI where the incoherence condition is typically satisfied.

In mathematics, a graph partition is the reduction of a graph to a smaller graph by partitioning its set of nodes into mutually exclusive groups. Edges of the original graph that cross between the groups will produce edges in the partitioned graph. If the number of resulting edges is small compared to the original graph, then the partitioned graph may be better suited for analysis and problem-solving than the original. Finding a partition that simplifies graph analysis is a hard problem, but one that has applications to scientific computing, VLSI circuit design, and task scheduling in multiprocessor computers, among others. Recently, the graph partition problem has gained importance due to its application for clustering and detection of cliques in social, pathological and biological networks. For a survey on recent trends in computational methods and applications see Buluc et al. (2013). Two common examples of graph partitioning are minimum cut and maximum cut problems.

A bilateral filter is a non-linear, edge-preserving, and noise-reducing smoothing filter for images. It replaces the intensity of each pixel with a weighted average of intensity values from nearby pixels. This weight can be based on a Gaussian distribution. Crucially, the weights depend not only on Euclidean distance of pixels, but also on the radiometric differences. This preserves sharp edges.

In image processing, contourlets form a multiresolution directional tight frame designed to efficiently approximate images made of smooth regions separated by smooth boundaries. The contourlet transform has a fast implementation based on a Laplacian pyramid decomposition followed by directional filterbanks applied on each bandpass subband.

In image processing and computer vision, anisotropic diffusion, also called Perona–Malik diffusion, is a technique aiming at reducing image noise without removing significant parts of the image content, typically edges, lines or other details that are important for the interpretation of the image. Anisotropic diffusion resembles the process that creates a scale space, where an image generates a parameterized family of successively more and more blurred images based on a diffusion process. Each of the resulting images in this family are given as a convolution between the image and a 2D isotropic Gaussian filter, where the width of the filter increases with the parameter. This diffusion process is a linear and space-invariant transformation of the original image. Anisotropic diffusion is a generalization of this diffusion process: it produces a family of parameterized images, but each resulting image is a combination between the original image and a filter that depends on the local content of the original image. As a consequence, anisotropic diffusion is a non-linear and space-variant transformation of the original image.

In signal processing, particularly image processing, total variation denoising, also known as total variation regularization or total variation filtering, is a noise removal process (filter). It is based on the principle that signals with excessive and possibly spurious detail have high total variation, that is, the integral of the image gradient magnitude is high. According to this principle, reducing the total variation of the signal—subject to it being a close match to the original signal—removes unwanted detail whilst preserving important details such as edges. The concept was pioneered by L. I. Rudin, S. Osher, and E. Fatemi in 1992 and so is today known as the ROF model.

Locally Optimal Block Preconditioned Conjugate Gradient (LOBPCG) is a matrix-free method for finding the largest eigenvalues and the corresponding eigenvectors of a symmetric generalized eigenvalue problem

Non-local means is an algorithm in image processing for image denoising. Unlike "local mean" filters, which take the mean value of a group of pixels surrounding a target pixel to smooth the image, non-local means filtering takes a mean of all pixels in the image, weighted by how similar these pixels are to the target pixel. This results in much greater post-filtering clarity, and less loss of detail in the image compared with local mean algorithms.

Diffusion maps is a dimensionality reduction or feature extraction algorithm introduced by Coifman and Lafon which computes a family of embeddings of a data set into Euclidean space whose coordinates can be computed from the eigenvectors and eigenvalues of a diffusion operator on the data. The Euclidean distance between points in the embedded space is equal to the "diffusion distance" between probability distributions centered at those points. Different from linear dimensionality reduction methods such as principal component analysis (PCA), diffusion maps are part of the family of nonlinear dimensionality reduction methods which focus on discovering the underlying manifold that the data has been sampled from. By integrating local similarities at different scales, diffusion maps give a global description of the data-set. Compared with other methods, the diffusion map algorithm is robust to noise perturbation and computationally inexpensive.

Block-matching and 3D filtering (BM3D) is a 3-D block-matching algorithm used primarily for noise reduction in images. It is one of the expansions of the non-local means methodology. There are two cascades in BM3D: a hard-thresholding and a Wiener filter stage, both involving the following parts: grouping, collaborative filtering, and aggregation. This algorithm depends on an augmented representation in the transformation site.

U-Net is a convolutional neural network that was developed for biomedical image segmentation at the Computer Science Department of the University of Freiburg. The network is based on a fully convolutional neural network whose architecture was modified and extended to work with fewer training images and to yield more precise segmentation. Segmentation of a 512 × 512 image takes less than a second on a modern (2015) GPU using the U-Net architecture.

In mathematics, the graph Fourier transform is a mathematical transform which eigendecomposes the Laplacian matrix of a graph into eigenvalues and eigenvectors. Analogously to the classical Fourier transform, the eigenvalues represent frequencies and eigenvectors form what is known as a graph Fourier basis.

References

- ↑ Tian, D.; Mansour, H.; Knyazev, A.; Vetro, A. (2014). Chebyshev and Conjugate Gradient Filters for Graph Image Denoising. IEEE International Conference on Multimedia and Expo Workshops (ICMEW). pp. 1–6. arXiv: 1509.01624 . doi:10.1109/ICMEW.2014.6890711.

- ↑ Knyazev, A.; Malyshev, A. (2015). Accelerated graph-based spectral polynomial filters. 2015 IEEE 25th International Workshop on Machine Learning for Signal Processing (MLSP), Boston, MA. pp. 1–6. arXiv: 1509.02468 . doi:10.1109/MLSP.2015.7324315.

- ↑ Knyazev, A. (2015). Edge-enhancing Filters with Negative Weights. IEEE Global Conference on Signal and Information Processing (GlobalSIP), Orlando, FL, 14-16 Dec.2015. pp. 260–264. arXiv: 1509.02491 . doi:10.1109/GlobalSIP.2015.7418197.

- ↑ Knyazev, A.; Malyshev, A. (2017). Signal reconstruction via operator guiding. SampTA 2017: Sampling Theory and Applications, 12th International Conference, July 3–7, 2017, Tallinn, Estonia. pp. 630–634. arXiv: 1705.03493 . doi:10.1109/SAMPTA.2017.8024424.