Definition

For a simple graph with vertex set $U = \{u_1, \ldots, u_n\}$, the adjacency matrix is a square n × n matrix A such that its element $A_{ij}$ is 1 when there is an edge from vertex $u_i$ to vertex $u_j$, and 0 when there is no edge. [1] The diagonal elements of the matrix are all 0, since edges from a vertex to itself (loops) are not allowed in simple graphs. It is also sometimes useful in algebraic graph theory to replace the nonzero elements with algebraic variables. [2] The same concept can be extended to multigraphs and graphs with loops by storing the number of edges between each pair of vertices in the corresponding matrix element, and by allowing nonzero diagonal elements. Loops may be counted either once (as a single edge) or twice (as two vertex-edge incidences), as long as a consistent convention is followed. Undirected graphs often use the latter convention of counting loops twice, whereas directed graphs typically use the former convention.
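As an illustration of the definition, the following minimal sketch builds the matrix from an edge list. The function name `adjacency_matrix`, the 0-based vertex numbering, and the list-of-lists representation are assumptions made for this example only.

```python
def adjacency_matrix(n, edges, directed=False):
    """Build the n x n adjacency matrix of a simple graph.

    Assumes vertices are numbered 0..n-1 and `edges` is an iterable
    of (i, j) pairs (both are illustrative conventions).
    """
    A = [[0] * n for _ in range(n)]
    for i, j in edges:
        if i == j:
            raise ValueError("simple graphs have no loops")
        A[i][j] = 1
        if not directed:
            A[j][i] = 1  # an undirected edge is stored symmetrically
    return A


# A cycle on four vertices: 0 - 1 - 2 - 3 - 0
A = adjacency_matrix(4, [(0, 1), (1, 2), (2, 3), (3, 0)])
for row in A:
    print(row)
```

For an undirected graph the result is symmetric, and the diagonal stays zero because loops are rejected.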

Of a bipartite graph

The adjacency matrix A of a bipartite graph whose two parts have r and s vertices can be written in the form

$$A = \begin{pmatrix} 0_{r,r} & B \\ B^{\mathsf{T}} & 0_{s,s} \end{pmatrix},$$

where B is an r × s matrix, and $0_{r,r}$ and $0_{s,s}$ represent the r × r and s × s zero matrices. In this case, the smaller matrix B uniquely represents the graph, and the remaining parts of A can be discarded as redundant. B is sometimes called the biadjacency matrix.

Formally, let G = (U, V, E) be a bipartite graph with parts $U = \{u_1, \ldots, u_r\}$, $V = \{v_1, \ldots, v_s\}$ and edges E. The biadjacency matrix is the r × s 0–1 matrix B in which $b_{i,j} = 1$ if and only if $(u_i, v_j) \in E$.

If G is a bipartite multigraph or weighted graph, then the elements $b_{i,j}$ are taken to be the number of edges between the vertices or the weight of the edge $(u_i, v_j)$, respectively.
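The relationship between B and the full matrix can be sketched in code: B fills the upper-right block of A, its transpose fills the lower-left block, and the two diagonal blocks stay zero. The helper name `from_biadjacency` and the vertex ordering (the r vertices of U first, then the s vertices of V) are assumptions for this example.

```python
def from_biadjacency(B):
    """Expand an r x s biadjacency matrix into the (r+s) x (r+s) adjacency matrix.

    Assumes vertices of U take indices 0..r-1 and vertices of V take
    indices r..r+s-1 (an illustrative ordering).
    """
    r, s = len(B), len(B[0])
    n = r + s
    A = [[0] * n for _ in range(n)]
    for i in range(r):
        for j in range(s):
            A[i][r + j] = B[i][j]  # upper-right block: B
            A[r + j][i] = B[i][j]  # lower-left block: transpose of B
    return A


B = [[1, 1, 0],
     [0, 1, 1]]
A = from_biadjacency(B)
for row in A:
    print(row)
```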

Variations

An (a, b, c)-adjacency matrix A of a simple graph has $A_{i,j} = a$ if (i, j) is an edge, b if it is not, and c on the diagonal. The Seidel adjacency matrix is a (−1, 1, 0)-adjacency matrix. This matrix is used in studying strongly regular graphs and two-graphs. [3]
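A small sketch of this variation, assuming the ordinary 0–1 adjacency matrix is given as a list of lists; the function name `abc_adjacency` is made up for the example.

```python
def abc_adjacency(A, a, b, c):
    """Turn a 0-1 adjacency matrix into an (a, b, c)-adjacency matrix."""
    n = len(A)
    return [
        [c if i == j else (a if A[i][j] else b) for j in range(n)]
        for i in range(n)
    ]


# Path on three vertices: 0 - 1 - 2
path = [[0, 1, 0],
        [1, 0, 1],
        [0, 1, 0]]
seidel = abc_adjacency(path, -1, 1, 0)  # Seidel matrix: (-1, 1, 0)
for row in seidel:
    print(row)
```

Calling `abc_adjacency(path, 1, 0, 0)` returns the ordinary adjacency matrix unchanged.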

The distance matrix has in position (i, j) the distance between vertices $v_i$ and $v_j$. The distance is the length of a shortest path connecting the vertices. Unless lengths of edges are explicitly provided, the length of a path is the number of edges in it. The distance matrix resembles a high power of the adjacency matrix, but instead of telling only whether or not two vertices are connected (i.e., the connection matrix, which contains Boolean values), it gives the exact distance between them.
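For unweighted graphs, one way to obtain the distance matrix from the adjacency matrix is a breadth-first search from every vertex. The sketch below is one possible approach, not the only one; the name `distance_matrix` and the use of `None` for unreachable pairs are choices made for this example.

```python
from collections import deque


def distance_matrix(A):
    """Unweighted shortest-path distances computed by BFS from each vertex.

    D[s][t] is the number of edges on a shortest path from s to t,
    or None if t cannot be reached from s.
    """
    n = len(A)
    D = [[None] * n for _ in range(n)]
    for s in range(n):
        D[s][s] = 0
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for v in range(n):
                if A[u][v] and D[s][v] is None:
                    D[s][v] = D[s][u] + 1
                    queue.append(v)
    return D


# Path 0 - 1 - 2 plus an isolated vertex 3
A = [[0, 1, 0, 0],
     [1, 0, 1, 0],
     [0, 1, 0, 0],
     [0, 0, 0, 0]]
D = distance_matrix(A)
for row in D:
    print(row)
```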

Examples

Undirected graphs

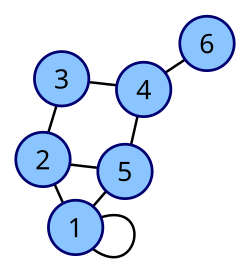

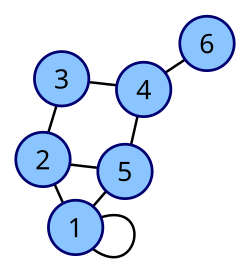

The convention followed here (for undirected graphs) is that each edge adds 1 to the appropriate cell in the matrix, and each loop (an edge from a vertex to itself) adds 2 to the appropriate cell on the diagonal in the matrix. [4] This allows the degree of a vertex to be easily found by taking the sum of the values in either its respective row or column in the adjacency matrix.
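Under this convention the degree computation reduces to a row sum, as the following sketch shows. The three-vertex example graph is invented for illustration.

```python
def degrees(A):
    """Degree of each vertex as the sum of its row (loops stored as 2)."""
    return [sum(row) for row in A]


# Vertex 0 has a loop (stored as 2) and an edge to vertex 1;
# vertex 1 is also joined to vertex 2.
A = [[2, 1, 0],
     [1, 0, 1],
     [0, 1, 0]]
deg = degrees(A)
print(deg)
```

Because the matrix is symmetric, summing columns instead of rows gives the same values.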

| Labeled graph | Adjacency matrix |

|---|

|

Coordinates are 1–6.

|

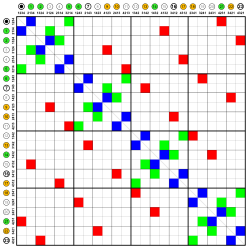

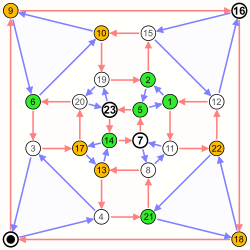

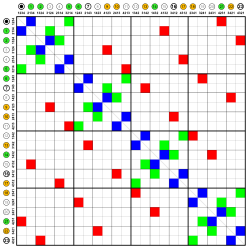

Nauru graph

|

Coordinates are 0–23.

White fields are zeros, colored fields are ones.

|

Directed graphs

The adjacency matrix of a directed graph can be asymmetric. One can define the adjacency matrix of a directed graph either such that

- a non-zero element Aij indicates an edge from i to j or

- it indicates an edge from j to i.

The former definition is commonly used in graph theory and social network analysis (e.g., sociology, political science, economics, psychology). [5] The latter is more common in other applied sciences (e.g., dynamical systems, physics, network science) where A is sometimes used to describe linear dynamics on graphs. [6]

Using the first definition, the in-degree of a vertex can be computed by summing the entries of the corresponding column, and the out-degree by summing the entries of the corresponding row. When using the second definition, the in-degree of a vertex is given by the corresponding row sum and the out-degree is given by the corresponding column sum.
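A minimal sketch of these sums under the first definition (a nonzero entry in row i, column j means an edge from i to j). The function names and the example graph are illustrative only.

```python
def out_degrees(A):
    """Row sums: out-degrees when A[i][j] != 0 means an edge i -> j."""
    return [sum(row) for row in A]


def in_degrees(A):
    """Column sums: in-degrees under the same convention."""
    return [sum(col) for col in zip(*A)]


# Edges: 0 -> 1, 0 -> 2, 1 -> 2
A = [[0, 1, 1],
     [0, 0, 1],
     [0, 0, 0]]
print(out_degrees(A), in_degrees(A))
```

Under the second definition the roles of the two functions are simply swapped.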

Trivial graphs

The adjacency matrix of a complete graph contains all ones except along the diagonal where there are only zeros. The adjacency matrix of an empty graph is a zero matrix.

Properties

Spectrum

The adjacency matrix of an undirected simple graph is symmetric, and therefore has a complete set of real eigenvalues and an orthogonal eigenvector basis. The set of eigenvalues of a graph is the spectrum of the graph. [7] It is common to denote the eigenvalues by $\lambda_1 \geq \lambda_2 \geq \cdots \geq \lambda_n$.

The greatest eigenvalue $\lambda_1$ is bounded above by the maximum degree. This can be seen as a result of the Perron–Frobenius theorem, but it can also be proved directly. Let v be an eigenvector associated with $\lambda_1$ and x the entry in which v has maximum absolute value. Without loss of generality assume $v_x$ is positive, since otherwise one can take the eigenvector −v, also associated with $\lambda_1$. Then

$$\lambda_1 v_x = (Av)_x = \sum_{y=1}^{n} A_{x,y} v_y \leq \sum_{y=1}^{n} A_{x,y} v_x = v_x \deg(x),$$

so $\lambda_1 \leq \deg(x)$, which is at most the maximum degree.

For d-regular graphs, d is the first eigenvalue of A for the vector v = (1, ..., 1) (it is easy to check that it is an eigenvalue and it is the maximum because of the above bound). The multiplicity of this eigenvalue is the number of connected components of G, in particular $\lambda_1 > \lambda_2$ for connected graphs. It can be shown that for each eigenvalue $\lambda_i$, its opposite $-\lambda_i = \lambda_{n+1-i}$ is also an eigenvalue of A if G is a bipartite graph. [8] In particular −d is an eigenvalue of any d-regular bipartite graph.

The difference $\lambda_1 - \lambda_2$ is called the spectral gap and it is related to the expansion of G. It is also useful to introduce the spectral radius of A denoted by $\lambda(G) = \max_{|\lambda_i| < d} |\lambda_i|$. This number is bounded by $\lambda(G) \geq 2\sqrt{d-1} - o(1)$. This bound is tight in the Ramanujan graphs.

Matrix powers

If A is the adjacency matrix of the directed or undirected graph G, then the matrix $A^n$ (i.e., the matrix product of n copies of A) has an interesting interpretation: the element (i, j) gives the number of (directed or undirected) walks of length n from vertex i to vertex j. [10] If n is the smallest nonnegative integer such that the element (i, j) of $A^n$ is positive, then n is the distance between vertex i and vertex j. One useful application is counting the triangles in a graph G: the number of triangles is exactly the trace of $A^3$ divided by 6 if the graph is undirected, or by 3 if it is directed. The division compensates for overcounting. In an undirected graph, each triangle is counted twice at each of its three vertices, because the closed walk can be followed clockwise or counterclockwise (ijk or ikj); in a directed graph, each directed triangle is counted once at each of its three vertices. The adjacency matrix can also be used to determine whether or not the graph is connected.
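The walk-counting interpretation and the triangle formula can be checked on a small example. The sketch below uses plain nested lists and naive matrix multiplication; the helper names `mat_mul`, `mat_pow`, and `count_triangles` are assumptions for this example, and the triangle count assumes an undirected simple graph.

```python
def mat_mul(X, Y):
    """Naive product of two square matrices given as lists of lists."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def mat_pow(A, n):
    """A raised to the n-th power by repeated multiplication (n >= 0)."""
    size = len(A)
    R = [[int(i == j) for j in range(size)] for i in range(size)]  # identity
    for _ in range(n):
        R = mat_mul(R, A)
    return R


def count_triangles(A):
    """Triangles in an undirected simple graph: trace(A^3) / 6."""
    A3 = mat_pow(A, 3)
    return sum(A3[i][i] for i in range(len(A))) // 6


# Complete graph on four vertices
K4 = [[int(i != j) for j in range(4)] for i in range(4)]
print(mat_pow(K4, 2)[0][1])   # walks of length 2 from vertex 0 to vertex 1
print(count_triangles(K4))
```

In the complete graph on four vertices there are two walks of length 2 between any two distinct vertices (one through each of the other two vertices), and every choice of three vertices forms a triangle, giving four triangles.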

If a directed graph has a nilpotent adjacency matrix (i.e., if there exists n such that $A^n$ is the zero matrix), then it is a directed acyclic graph. [11]
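Nilpotency can be tested directly, since a nilpotent k × k matrix always satisfies $A^k = 0$. The following is a naive sketch of that test (the name `is_nilpotent` is made up here); it is meant to illustrate the statement, not to be an efficient cycle-detection method.

```python
def mat_mul(X, Y):
    """Naive product of two square matrices given as lists of lists."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def is_nilpotent(A):
    """Return True if A^k is the zero matrix, where k is the size of A."""
    k = len(A)
    P = [row[:] for row in A]
    for _ in range(k - 1):  # after the loop, P equals A^k
        P = mat_mul(P, A)
    return all(x == 0 for row in P for x in row)


dag = [[0, 1, 0],    # 0 -> 1 -> 2, no cycle
       [0, 0, 1],
       [0, 0, 0]]
cycle = [[0, 1, 0],  # 0 -> 1 -> 2 -> 0
         [0, 0, 1],
         [1, 0, 0]]
print(is_nilpotent(dag), is_nilpotent(cycle))
```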

Data structures

The adjacency matrix may be used as a data structure for the representation of graphs in computer programs for manipulating graphs. For unweighted simple graphs the entries can be stored as Boolean values, such as True and False in Python. The main alternative data structure, also in use for this application, is the adjacency list. [12] [13]

The space needed to represent an adjacency matrix and the time needed to perform operations on it depend on the matrix representation chosen for the underlying matrix. Sparse matrix representations only store non-zero matrix entries and implicitly represent the zero entries. They can, for example, be used to represent sparse graphs without incurring the space overhead from storing the many zero entries in the adjacency matrix of the sparse graph. In the following section the adjacency matrix is assumed to be represented by an array data structure so that zero and non-zero entries are all directly represented in storage.

Because each entry in the adjacency matrix requires only one bit, it can be represented in a very compact way, occupying only $|V|^2/8$ bytes to represent a directed graph, or (by using a packed triangular format and only storing the lower triangular part of the matrix) approximately $|V|^2/16$ bytes to represent an undirected graph. Although slightly more succinct representations are possible, this method gets close to the information-theoretic lower bound for the minimum number of bits needed to represent all n-vertex graphs. [14] For storing graphs in text files, fewer bits per byte can be used to ensure that all bytes are text characters, for instance by using a Base64 representation. [15] Besides avoiding wasted space, this compactness encourages locality of reference. However, for a large sparse graph, adjacency lists require less storage space, because they do not waste any space representing edges that are not present. [13] [16]
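The one-bit-per-entry layout can be sketched with a `bytearray`. The class name `BitMatrix`, its method names, and the row-major bit order are assumptions made for this example; the sketch stores the full square matrix (the directed case) rather than the packed triangular format.

```python
class BitMatrix:
    """Adjacency matrix of a directed graph stored at one bit per entry."""

    def __init__(self, n):
        self.n = n
        self.bits = bytearray((n * n + 7) // 8)  # about n^2 / 8 bytes

    def _locate(self, i, j):
        k = i * self.n + j          # row-major position of entry (i, j)
        return k >> 3, 1 << (k & 7)  # byte index, bit mask within the byte

    def add_edge(self, i, j):
        byte, mask = self._locate(i, j)
        self.bits[byte] |= mask

    def has_edge(self, i, j):
        byte, mask = self._locate(i, j)
        return bool(self.bits[byte] & mask)


m = BitMatrix(16)
m.add_edge(3, 7)
print(len(m.bits), m.has_edge(3, 7), m.has_edge(7, 3))
```

For 16 vertices the storage is 16 × 16 / 8 = 32 bytes.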

An alternative form of adjacency matrix (which, however, requires a larger amount of space) replaces the numbers in each element of the matrix with pointers to edge objects (when edges are present) or null pointers (when there is no edge). [16] It is also possible to store edge weights directly in the elements of an adjacency matrix. [13]

Besides the space tradeoff, the different data structures also facilitate different operations. Finding all vertices adjacent to a given vertex in an adjacency list is as simple as reading the list, and takes time proportional to the number of neighbors. With an adjacency matrix, an entire row must instead be scanned, which takes a larger amount of time, proportional to the number of vertices in the whole graph. On the other hand, testing whether there is an edge between two given vertices takes constant time with an adjacency matrix, while it requires time proportional to the minimum degree of the two vertices with an adjacency list. [13] [16]
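The two operations compared above might look as follows for an undirected graph. The function names are invented for this sketch, and the adjacency list is assumed to be a list of neighbor lists indexed by vertex.

```python
def neighbors_matrix(A, u):
    """Scan the whole row of u: time proportional to the number of vertices."""
    return [v for v, entry in enumerate(A[u]) if entry]


def neighbors_list(adj, u):
    """Read the stored list: time proportional to the number of neighbors."""
    return adj[u]


def has_edge_matrix(A, u, v):
    """Single array lookup."""
    return bool(A[u][v])


def has_edge_list(adj, u, v):
    """Search the shorter of the two neighbor lists (undirected graph)."""
    a, b = (u, v) if len(adj[u]) <= len(adj[v]) else (v, u)
    return b in adj[a]


# Star graph: center 0 joined to leaves 1, 2, 3
A = [[0, 1, 1, 1],
     [1, 0, 0, 0],
     [1, 0, 0, 0],
     [1, 0, 0, 0]]
adj = [[1, 2, 3], [0], [0], [0]]
print(neighbors_matrix(A, 0), neighbors_list(adj, 0))
print(has_edge_matrix(A, 1, 2), has_edge_list(adj, 0, 3))
```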
