In mathematical physics and mathematics, the Pauli matrices are a set of three 2 × 2 complex matrices which are Hermitian, involutory and unitary. Usually indicated by the Greek letter sigma, they are occasionally denoted by tau when used in connection with isospin symmetries.
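The three properties named above can be checked numerically. The following is a minimal sketch in plain Python, assuming the standard representation of the Pauli matrices; the helper names (`matmul`, `dagger`, `is_hermitian`, `is_involutory`, `is_unitary`) are illustrative and not taken from any library.

```python
# The three Pauli matrices in their standard representation (assumed here).
SIGMA_X = [[0, 1], [1, 0]]
SIGMA_Y = [[0, -1j], [1j, 0]]
SIGMA_Z = [[1, 0], [0, -1]]
IDENTITY = [[1, 0], [0, 1]]


def matmul(a, b):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def dagger(a):
    """Conjugate transpose of a 2x2 matrix."""
    return [[complex(a[j][i]).conjugate() for j in range(2)] for i in range(2)]


def is_hermitian(a):
    # Hermitian: equal to its own conjugate transpose.
    return dagger(a) == a


def is_involutory(a):
    # Involutory: the matrix is its own inverse, so its square is the identity.
    return matmul(a, a) == IDENTITY


def is_unitary(a):
    # Unitary: the conjugate transpose is the inverse.
    return matmul(dagger(a), a) == IDENTITY
```

Exact equality is safe here only because every entry is 0, ±1 or ±i; matrices with general floating-point entries would need a tolerance.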

In linear algebra, the trace of a square matrix A, denoted tr(A), is defined to be the sum of elements on the main diagonal of A. The trace is only defined for a square matrix.
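As a small illustration of this definition, the sketch below sums the main diagonal and rejects non-square input. The function name `trace` and the nested-list representation are choices made for this example.

```python
def trace(matrix):
    """Sum of the main-diagonal entries of a square matrix (nested lists)."""
    n = len(matrix)
    if any(len(row) != n for row in matrix):
        raise ValueError("the trace is only defined for square matrices")
    return sum(matrix[i][i] for i in range(n))
```

For example, `trace([[1, 2], [3, 4]])` adds the diagonal entries 1 and 4.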

In linear algebra, the identity matrix of size $n$ is the $n \times n$ square matrix with ones on the main diagonal and zeros elsewhere.

In mathematics, particularly in linear algebra, matrix multiplication is a binary operation that produces a matrix from two matrices. For matrix multiplication, the number of columns in the first matrix must be equal to the number of rows in the second matrix. The resulting matrix, known as the matrix product, has the number of rows of the first and the number of columns of the second matrix. The product of matrices A and B is denoted as AB.
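The shape rule described above can be made concrete with a short sketch. It assumes matrices are stored as non-empty nested lists; `matmul` is an illustrative name, not a library function.

```python
def matmul(a, b):
    """Matrix product of a (m x n) and b (n x p), both nested lists."""
    rows_a, cols_a = len(a), len(a[0])
    rows_b, cols_b = len(b), len(b[0])
    if cols_a != rows_b:
        raise ValueError("columns of the first matrix must equal rows of the second")
    # Entry (i, j) is the sum over k of a[i][k] * b[k][j].
    return [[sum(a[i][k] * b[k][j] for k in range(cols_a))
             for j in range(cols_b)]
            for i in range(rows_a)]


A = [[1, 2, 3],
     [4, 5, 6]]          # 2 x 3
B = [[7, 8],
     [9, 10],
     [11, 12]]           # 3 x 2
AB = matmul(A, B)        # 2 x 2: rows of A, columns of B
```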

In linear algebra, an invertible complex square matrix U is unitary if its conjugate transpose U* is also its inverse, that is, if $U^*U = UU^* = I$, where $I$ is the identity matrix.
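A numerical check of unitarity might look like the following sketch, which tests whether the conjugate transpose times the matrix gives the identity, up to a tolerance. The helper names, the tolerance value and the example matrix `H` (a normalized 2 × 2 matrix with entries ±1/√2) are assumptions of this example.

```python
import math


def conjugate_transpose(m):
    """Conjugate transpose of a square matrix given as nested lists."""
    n = len(m)
    return [[complex(m[j][i]).conjugate() for j in range(n)] for i in range(n)]


def is_unitary(m, tol=1e-12):
    """True if (conjugate transpose of m) * m equals the identity within tol.

    For a square matrix this one-sided check is enough: a left inverse
    of a square matrix is also a right inverse.
    """
    n = len(m)
    m_star = conjugate_transpose(m)
    for i in range(n):
        for j in range(n):
            entry = sum(m_star[i][k] * m[k][j] for k in range(n))
            expected = 1 if i == j else 0
            if abs(entry - expected) > tol:
                return False
    return True


s = 1 / math.sqrt(2)
H = [[s, s],
     [s, -s]]
```

A tolerance is used because entries such as 1/√2 are not exactly representable in floating point.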

In mathematics, the dot product or scalar product is an algebraic operation that takes two equal-length sequences of numbers, and returns a single number. In Euclidean geometry, the dot product of the Cartesian coordinates of two vectors is widely used. It is often called the inner product of Euclidean space, even though it is not the only inner product that can be defined on Euclidean space.
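In Cartesian coordinates the operation reduces to multiplying matching components and adding the results. A minimal sketch, with `dot` as an illustrative name:

```python
def dot(u, v):
    """Dot product of two equal-length sequences of numbers."""
    if len(u) != len(v):
        raise ValueError("sequences must have the same length")
    return sum(x * y for x, y in zip(u, v))
```

For instance, `dot([1, 2, 3], [4, 5, 6])` computes 1·4 + 2·5 + 3·6.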

In mathematics, a square matrix is a matrix with the same number of rows and columns. An n-by-n matrix is known as a square matrix of order n. Any two square matrices of the same order can be added and multiplied.

In linear algebra, a diagonal matrix is a matrix in which the entries outside the main diagonal are all zero; the term usually refers to square matrices. Elements of the main diagonal can either be zero or nonzero. An example of a 2×2 diagonal matrix is $\begin{bmatrix} 3 & 0 \\ 0 & 2 \end{bmatrix}$, while an example of a 3×3 diagonal matrix is $\begin{bmatrix} 6 & 0 & 0 \\ 0 & 5 & 0 \\ 0 & 0 & 4 \end{bmatrix}$. An identity matrix of any size, or any multiple of it, is a diagonal matrix.

In mathematics, a Hermitian matrix is a complex square matrix that is equal to its own conjugate transpose, that is, the element in the i-th row and j-th column is equal to the complex conjugate of the element in the j-th row and i-th column, for all indices i and j. Writing $a_{ij}$ for the element in the i-th row and j-th column, the condition reads $a_{ij} = \overline{a_{ji}}$.

In mathematics, especially in applications of linear algebra to mathematical physics, Einstein notation is a notational convention that implies summation over a set of indexed terms in a formula, thus achieving brevity. As part of mathematics it is a notational subset of Ricci calculus; however, it is often used in physics applications that do not distinguish between tangent and cotangent spaces. It was introduced to physics by Albert Einstein in 1916.
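To make the implied summation concrete, the sketch below spells out what an expression such as y_i = a_ij v_j means when written as explicit loops: the repeated index j is summed over, while the free index i labels the result. The function name and the example expression are illustrative choices, not part of the convention itself.

```python
def contract_matrix_vector(a, v):
    """Evaluate y_i = a_ij v_j with the summation over j written out."""
    return [sum(a[i][j] * v[j] for j in range(len(v)))  # sum over repeated index j
            for i in range(len(a))]                      # one result per free index i


def contract_trace(a):
    """Evaluate a_ii: a repeated index on a single term is also summed."""
    return sum(a[i][i] for i in range(len(a)))
```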

In linear algebra, the adjugate or classical adjoint of a square matrix A is the transpose of its cofactor matrix and is denoted by adj(A). It is also occasionally known as the adjunct matrix, or "adjoint", though the latter term today normally refers to a different concept, the adjoint operator, which for a matrix is the conjugate transpose.
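The definition as a transposed cofactor matrix translates directly into code. The following sketch uses cofactor expansion for the determinant, which is simple but slow for large matrices; the helper names `minor`, `det` and `adjugate` are choices made for this example.

```python
def minor(m, i, j):
    """Matrix m with row i and column j removed."""
    return [row[:j] + row[j + 1:] for k, row in enumerate(m) if k != i]


def det(m):
    """Determinant by cofactor expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j] * det(minor(m, 0, j)) for j in range(len(m)))


def adjugate(m):
    """Transpose of the cofactor matrix of a square matrix m."""
    n = len(m)
    if n == 1:
        return [[1]]
    # Entry (i, j) of the adjugate is the (j, i) cofactor of m.
    return [[(-1) ** (i + j) * det(minor(m, j, i)) for j in range(n)]
            for i in range(n)]
```

A useful sanity check is the identity A · adj(A) = det(A) · I, which holds for every square matrix A.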

In linear algebra, an n-by-n square matrix A is called invertible if there exists an n-by-n square matrix B such that $AB = BA = I_n$, where $I_n$ denotes the n-by-n identity matrix.
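For the 2 × 2 case, the matrix B can be written down explicitly whenever the determinant is nonzero. The sketch below is restricted to 2 × 2 input and uses exact rational arithmetic via `fractions.Fraction`; the function name `inverse_2x2` is hypothetical.

```python
from fractions import Fraction


def inverse_2x2(a):
    """Inverse of a 2x2 matrix, or None if the matrix is not invertible."""
    (p, q), (r, s) = a
    determinant = p * s - q * r
    if determinant == 0:
        return None  # singular: no matrix B with AB = BA = I exists
    d = Fraction(determinant)
    # Swap the diagonal entries, negate the off-diagonal ones, divide by det.
    return [[s / d, -q / d],
            [-r / d, p / d]]
```

Multiplying the result by the original matrix on either side gives the 2 × 2 identity, which is exactly the defining condition for invertibility.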

In abstract algebra, a matrix ring is a set of matrices with entries in a ring R that form a ring under matrix addition and matrix multiplication. The set of all n × n matrices with entries in R is a matrix ring denoted $M_n(R)$. Some sets of infinite matrices form infinite matrix rings. Any subring of a matrix ring is a matrix ring. Over a rng, one can form matrix rngs.

In mathematics, the Smith normal form is a normal form that can be defined for any matrix with entries in a principal ideal domain (PID). The Smith normal form of a matrix is diagonal, and can be obtained from the original matrix by multiplying on the left and right by invertible square matrices. In particular, the integers are a PID, so one can always calculate the Smith normal form of an integer matrix. The Smith normal form is very useful for working with finitely generated modules over a PID, and in particular for deducing the structure of a quotient of a free module. It is named after the Irish mathematician Henry John Stephen Smith.

In linear algebra, an eigenvector or characteristic vector of a linear transformation is a nonzero vector that changes at most by a scalar factor when that linear transformation is applied to it. The corresponding eigenvalue, often denoted by $\lambda$, is the factor by which the eigenvector is scaled.
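The defining relation, that applying the transformation to the vector only rescales it, can be verified directly for a candidate pair. The sketch below assumes the transformation is given as a square matrix with integer or rational entries, so that exact comparison is meaningful; `is_eigenpair` is an illustrative name.

```python
def is_eigenpair(a, v, lam):
    """True if v is nonzero and a applied to v equals lam times v."""
    if all(x == 0 for x in v):
        return False  # the zero vector is excluded by definition
    av = [sum(a[i][j] * v[j] for j in range(len(v))) for i in range(len(a))]
    return av == [lam * x for x in v]


A = [[2, 1],
     [1, 2]]
```

With floating-point entries, the final comparison would need a tolerance rather than `==`.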

In mathematics, the split-octonions are an 8-dimensional nonassociative algebra over the real numbers. Unlike the standard octonions, they contain non-zero elements which are non-invertible. Also the signatures of their quadratic forms differ: the split-octonions have a split signature (4,4) whereas the octonions have a positive-definite signature (8,0).

In mathematics, a matrix is a rectangular array or table of numbers, symbols, or expressions, arranged in rows and columns, which is used to represent a mathematical object or a property of such an object.

A quaternionic matrix is a matrix whose elements are quaternions.

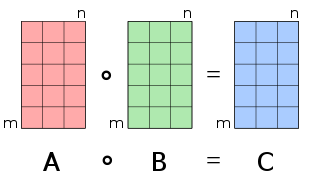

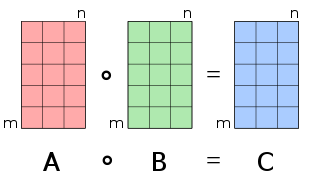

In mathematics, the Hadamard product is a binary operation that takes two matrices of the same dimensions and produces another matrix of the same dimensions as the operands, where each element i, j is the product of elements i, j of the original two matrices. It is to be distinguished from the more common matrix product. It is attributed to, and named after, either French mathematician Jacques Hadamard or German-Russian mathematician Issai Schur.
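Because the operation acts entry by entry, a sketch of it is short. The function name `hadamard` and the nested-list representation are assumptions of this example.

```python
def hadamard(a, b):
    """Element-wise product of two matrices with identical dimensions."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("matrices must have the same dimensions")
    return [[x * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]
```

For `[[1, 2], [3, 4]]` and `[[5, 6], [7, 8]]` this multiplies 1·5, 2·6, 3·7 and 4·8 in place, whereas the ordinary matrix product of the same pair combines whole rows with whole columns.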

Matrix completion is the task of filling in the missing entries of a partially observed matrix, which is equivalent to performing data imputation in statistics. A wide range of datasets are naturally organized in matrix form. One example is the movie-ratings matrix, as appears in the Netflix problem: given a ratings matrix in which each entry $(i, j)$ represents the rating of movie $j$ by customer $i$ if customer $i$ has watched movie $j$, and is otherwise missing, we would like to predict the remaining entries in order to make good recommendations to customers on what to watch next. Another example is the document-term matrix: the frequencies of words used in a collection of documents can be represented as a matrix, where each entry corresponds to the number of times the associated term appears in the indicated document.
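The document-term matrix mentioned above is straightforward to build. The sketch below assumes a deliberately naive tokenization (lowercasing and splitting on whitespace) and alphabetical column order; both are choices made for this example, as is the function name `document_term_matrix`. It illustrates the data layout only and does not perform matrix completion.

```python
from collections import Counter


def document_term_matrix(documents):
    """Return (vocabulary, matrix); rows are documents, columns are terms."""
    # Naive tokenization: lowercase, split on whitespace (an assumption).
    counts = [Counter(doc.lower().split()) for doc in documents]
    vocabulary = sorted(set().union(*counts))
    # Entry (d, t) is how often term t occurs in document d (0 if absent).
    matrix = [[count[term] for term in vocabulary] for count in counts]
    return vocabulary, matrix


docs = ["the cat sat", "the cat saw the dog"]
vocabulary, matrix = document_term_matrix(docs)
```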