In linear algebra, a symmetric matrix is a square matrix that is equal to its transpose. Formally, a matrix $A$ is symmetric if $A = A^{\mathsf{T}}$.

In mathematics, a square matrix is a matrix with the same number of rows and columns. An n-by-n matrix is known as a square matrix of order n. Any two square matrices of the same order can be added and multiplied.

In mathematics, a complex square matrix A is normal if it commutes with its conjugate transpose A*: $A^*A = AA^*$.

In linear algebra, the transpose of a matrix is an operator which flips a matrix over its diagonal; that is, it switches the row and column indices of the matrix A by producing another matrix, often denoted by $A^{\mathsf{T}}$.
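
The index swap described above can be illustrated with a minimal sketch. The helper name `transpose` and the list-of-rows representation are assumptions made for this example, not part of any particular library.

```python
def transpose(matrix):
    """Return the transpose of a matrix stored as a list of rows."""
    # zip(*matrix) groups the j-th entries of every row, i.e. the columns.
    return [list(column) for column in zip(*matrix)]


A = [[1, 2, 3],
     [4, 5, 6]]      # 2 rows, 3 columns

A_T = transpose(A)   # 3 rows, 2 columns: entry (i, j) of A_T is entry (j, i) of A
```

Transposing twice returns the original matrix, so `transpose(A_T)` equals `A`.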

In mathematics, the orthogonal group in dimension n, denoted O(n), is the group of distance-preserving transformations of a Euclidean space of dimension n that preserve a fixed point, where the group operation is given by composing transformations. The orthogonal group is sometimes called the general orthogonal group, by analogy with the general linear group. Equivalently, it is the group of n × n orthogonal matrices, where the group operation is given by matrix multiplication (an orthogonal matrix is a real matrix whose inverse equals its transpose). The orthogonal group is an algebraic group and a Lie group. It is compact.
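
As a rough illustration of the matrix description, the sketch below checks whether a real matrix satisfies the defining condition of an orthogonal matrix (its transpose is its inverse) and confirms that a rotation preserves lengths. The helper names, the tolerance, and the sample matrices are assumptions chosen for this example.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*m)]


def is_orthogonal(q, tol=1e-12):
    """Check Q^T Q = I entrywise, up to a floating-point tolerance."""
    n = len(q)
    product = matmul(transpose(q), q)
    return all(
        abs(product[i][j] - (1.0 if i == j else 0.0)) <= tol
        for i in range(n)
        for j in range(n)
    )


def apply(q, v):
    """Apply the matrix q to the vector v."""
    return [sum(x * y for x, y in zip(row, v)) for row in q]


theta = 0.3  # arbitrary angle in radians
rotation = [[math.cos(theta), -math.sin(theta)],
            [math.sin(theta), math.cos(theta)]]
reflection = [[1.0, 0.0],
              [0.0, -1.0]]
shear = [[1.0, 1.0],
         [0.0, 1.0]]  # invertible, but not distance-preserving

rotated = apply(rotation, [3.0, 4.0])  # the length stays 5 up to rounding
```

Both the rotation and the reflection pass the check, which reflects the fact that O(2) contains matrices of determinant 1 and of determinant -1; the shear fails it.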

In mathematics, the name symplectic group can refer to two different, but closely related, collections of mathematical groups, denoted Sp(2n, F) and Sp(n) for positive integer n and field F (usually C or R). The latter is called the compact symplectic group and is also denoted by USp(n). Many authors prefer slightly different notations, usually differing by factors of 2. The notation used here is consistent with the size of the most common matrices which represent the groups. In Cartan's classification of the simple Lie algebras, the Lie algebra of the complex group Sp(2n, C) is denoted $C_n$, and Sp(n) is the compact real form of Sp(2n, C). Note that when we refer to the (compact) symplectic group it is implied that we are talking about the collection of (compact) symplectic groups, indexed by their dimension n.

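In mathematics, a symplectic matrix is a 2n × 2n matrix M with real entries that satisfies the condition $M^{\mathsf{T}} \Omega M = \Omega$, where Ω is a fixed 2n × 2n nonsingular, skew-symmetric matrix.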

In mathematics, the unitary group of degree n, denoted U(n), is the group of n × n unitary matrices, with the group operation of matrix multiplication. The unitary group is a subgroup of the general linear group GL(n, C), and it has as a subgroup the special unitary group, consisting of those unitary matrices with determinant 1.
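
A small sketch can make the distinction between U(n) and its special unitary subgroup concrete. It checks the unitary condition (the conjugate transpose is the inverse) for a sample 2 × 2 matrix and computes its determinant. The helper names and the sample matrix are assumptions chosen for this example.

```python
def conjugate_transpose(m):
    """Return the conjugate transpose of a matrix stored as a list of rows."""
    return [[entry.conjugate() for entry in col] for col in zip(*m)]


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def is_unitary(u, tol=1e-12):
    """Check U* U = I entrywise, up to a floating-point tolerance."""
    n = len(u)
    product = matmul(conjugate_transpose(u), u)
    return all(
        abs(product[i][j] - (1 if i == j else 0)) <= tol
        for i in range(n)
        for j in range(n)
    )


def det2(m):
    """Determinant of a 2 x 2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


s = 2 ** -0.5
U = [[s, s],
     [s * 1j, -s * 1j]]

determinant = det2(U)  # has modulus 1, but is not equal to 1
```

The sample matrix is unitary, yet its determinant is approximately -i, so it belongs to U(2) but not to the special unitary group.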

In mathematics, particularly in linear algebra, a skew-symmetric matrix is a square matrix whose transpose equals its negative. That is, it satisfies the condition $A^{\mathsf{T}} = -A$.

In mathematics, a Hermitian matrix is a complex square matrix that is equal to its own conjugate transpose; that is, the element in the i-th row and j-th column is equal to the complex conjugate of the element in the j-th row and i-th column, for all indices i and j: $a_{ij} = \overline{a_{ji}}$.

In mathematics, a symplectic vector space is a vector space over a field equipped with a symplectic bilinear form.
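
To make the definition more concrete, here is a minimal sketch of one common choice of symplectic form on R^(2n). The coordinate ordering (q_1, ..., q_n, p_1, ..., p_n), the function name `omega`, and the sample vectors are assumptions made for this example; other conventions differ by sign or ordering.

```python
def omega(u, v):
    """Standard symplectic form on R^(2n): sum over i of q_i p'_i - p_i q'_i."""
    n = len(u) // 2
    return sum(u[i] * v[n + i] - u[n + i] * v[i] for i in range(n))


def apply(m, v):
    """Apply the matrix m to the vector v."""
    return [sum(x * y for x, y in zip(row, v)) for row in m]


u = [1, 2]
v = [3, 4]

# A shear with determinant 1; in dimension 2 such a matrix preserves omega.
shear = [[1, 1],
         [0, 1]]

before = omega(u, v)
after = omega(apply(shear, u), apply(shear, v))
```

The form is alternating (`omega(u, u)` is 0 and swapping the arguments flips the sign), and the shear leaves it unchanged, which is the property that defines a symplectic matrix for this form.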

In linear algebra, a square matrix with complex entries is said to be skew-Hermitian or anti-Hermitian if its conjugate transpose is the negative of the original matrix. That is, the matrix A is skew-Hermitian if it satisfies the relation $A^* = -A$.

In mathematics, the determinant of an m-by-m skew-symmetric matrix can always be written as the square of a polynomial in the matrix entries, a polynomial with integer coefficients that only depends on m. When m is odd, the polynomial is zero, and when m is even, it is a nonzero polynomial of degree m/2, and is unique up to multiplication by ±1. The convention on skew-symmetric tridiagonal matrices, given below in the examples, then determines one specific polynomial, called the Pfaffian polynomial. The value of this polynomial, when applied to the entries of a skew-symmetric matrix, is called the Pfaffian of that matrix. The term Pfaffian was introduced by Cayley, who indirectly named them after Johann Friedrich Pfaff.
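
The relationship between the determinant and the Pfaffian can be checked numerically for small cases. The sketch below uses the usual closed form for the 4 × 4 case, pf(A) = a12·a34 − a13·a24 + a14·a23, together with a naive cofactor-expansion determinant. The function names and sample matrices are assumptions chosen for this example, and the sign convention is assumed to be the common one.

```python
def det(m):
    """Determinant by cofactor expansion along the first row (small matrices only)."""
    if len(m) == 1:
        return m[0][0]
    total = 0
    for j, entry in enumerate(m[0]):
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * entry * det(minor)
    return total


def pfaffian4(a):
    """Pfaffian of a 4 x 4 skew-symmetric matrix (closed form, 0-based indices)."""
    return a[0][1] * a[2][3] - a[0][2] * a[1][3] + a[0][3] * a[1][2]


even_case = [[0, 1, 2, 3],
             [-1, 0, 4, 5],
             [-2, -4, 0, 6],
             [-3, -5, -6, 0]]

odd_case = [[0, 1, 2],
            [-1, 0, 3],
            [-2, -3, 0]]

pf = pfaffian4(even_case)  # 1*6 - 2*5 + 3*4 = 8
```

Here `det(even_case)` equals `pf ** 2`, and `det(odd_case)` is 0, matching the statement that the polynomial vanishes when m is odd.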

In mathematics, a complex structure on a real vector space $V$ is an automorphism $J$ of $V$ that squares to the minus identity, $J^2 = -I$. Such a structure on $V$ allows one to define multiplication by complex scalars in a canonical fashion so as to regard $V$ as a complex vector space.
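
A minimal sketch for the plane R^2 shows how such a structure yields complex scalar multiplication. The quarter-turn map is taken as the complex structure, and multiplication by a + bi is defined as a·v + b·J(v). The function names `J` and `scalar_mul` are assumptions made for this example.

```python
def J(v):
    """Rotate (x, y) by a quarter turn: the standard complex structure on R^2."""
    x, y = v
    return (-y, x)


def scalar_mul(z, v):
    """Multiply v by the complex scalar z = a + bi, defined as a*v + b*J(v)."""
    a, b = z.real, z.imag
    jx, jy = J(v)
    return (a * v[0] + b * jx, a * v[1] + b * jy)


v = (3.0, 4.0)
twice = J(J(v))                    # applying J twice negates the vector
scaled = scalar_mul(1 + 2j, v)     # agrees with (1 + 2i)(3 + 4i) = -5 + 10i
```

Identifying (x, y) with x + iy, the result of `scalar_mul` matches ordinary complex multiplication, which is the sense in which R^2 with J can be regarded as a one-dimensional complex vector space.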

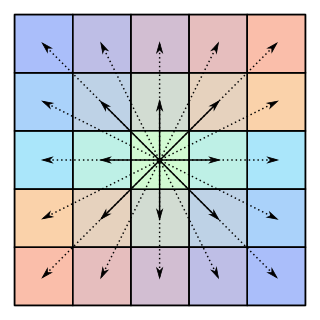

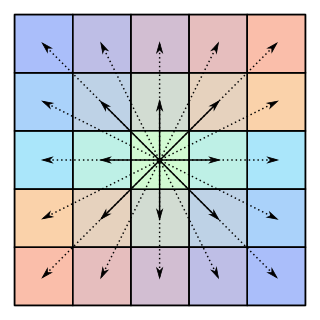

In mathematics and physics, a Hamiltonian vector field on a symplectic manifold is a vector field defined for any energy function or Hamiltonian. Named after the physicist and mathematician Sir William Rowan Hamilton, a Hamiltonian vector field is a geometric manifestation of Hamilton's equations in classical mechanics. The integral curves of a Hamiltonian vector field represent solutions to the equations of motion in the Hamiltonian form. The diffeomorphisms of a symplectic manifold arising from the flow of a Hamiltonian vector field are known as canonical transformations in physics and (Hamiltonian) symplectomorphisms in mathematics.
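
For the simplest phase space, the plane with coordinates (q, p), the idea can be sketched numerically. The example below assumes the common sign convention dq/dt = ∂H/∂p, dp/dt = −∂H/∂q, uses a harmonic-oscillator energy as an illustrative Hamiltonian, and estimates partial derivatives by central differences. All function names are hypothetical.

```python
def hamiltonian(q, p):
    """Energy of a unit-mass, unit-frequency harmonic oscillator (illustrative choice)."""
    return 0.5 * (p * p + q * q)


def partials(h, q, p, eps=1e-6):
    """Central-difference estimates of (dH/dq, dH/dp)."""
    dh_dq = (h(q + eps, p) - h(q - eps, p)) / (2 * eps)
    dh_dp = (h(q, p + eps) - h(q, p - eps)) / (2 * eps)
    return dh_dq, dh_dp


def hamiltonian_vector_field(h, q, p):
    """Return (dq/dt, dp/dt) = (dH/dp, -dH/dq), i.e. Hamilton's equations."""
    dh_dq, dh_dp = partials(h, q, p)
    return dh_dp, -dh_dq


q_dot, p_dot = hamiltonian_vector_field(hamiltonian, 1.0, 2.0)

# Rate of change of H along the field: dH/dq * dq/dt + dH/dp * dp/dt.
dh_dq, dh_dp = partials(hamiltonian, 1.0, 2.0)
energy_rate = dh_dq * q_dot + dh_dp * p_dot
```

At the point (q, p) = (1, 2) the field is approximately (2, -1), and the energy rate vanishes, reflecting the fact that the Hamiltonian is constant along the integral curves of its own vector field.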

The Rayleigh–Ritz method is a direct numerical method for approximating eigenvalues. It originated in the context of solving physical boundary value problems and is named after Lord Rayleigh and Walther Ritz.

In linear algebra, an eigenvector or characteristic vector is a vector that has its direction unchanged by a given linear transformation. More precisely, an eigenvector, $\mathbf{v}$, of a linear transformation, $T$, is scaled by a constant factor, $\lambda$, when the linear transformation is applied to it: $T\mathbf{v} = \lambda\mathbf{v}$. The corresponding eigenvalue, characteristic value, or characteristic root is the multiplying factor $\lambda$.
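
The defining relation can be verified directly for a small matrix. The sketch below uses a hypothetical helper `is_eigenpair` and a sample 2 × 2 matrix chosen for illustration; it tests whether applying the matrix to a vector gives the same result as scaling that vector.

```python
def apply(m, v):
    """Apply the matrix m to the vector v."""
    return [sum(x * y for x, y in zip(row, v)) for row in m]


def is_eigenpair(m, v, lam):
    """Check that v is nonzero and that m applied to v equals lam times v."""
    if not any(v):
        return False  # the zero vector is excluded by convention
    return apply(m, v) == [lam * x for x in v]


A = [[2, 1],
     [1, 2]]

image = apply(A, [1, 1])  # [3, 3], which is 3 times [1, 1]
```

For this matrix, `[1, 1]` is an eigenvector with eigenvalue 3 and `[1, -1]` is an eigenvector with eigenvalue 1, while `[1, 0]` is mapped to `[2, 1]` and is therefore not an eigenvector.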

In mathematics, especially in linear algebra and matrix theory, a centrosymmetric matrix is a matrix which is symmetric about its center.
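
"Symmetric about its center" is usually made precise as the entry condition a(i, j) = a(n−1−i, n−1−j) with 0-based indices. Assuming that reading, a minimal check might look like the following; the function name is hypothetical.

```python
def is_centrosymmetric(m):
    """Check that each entry equals the entry opposite to it through the center."""
    n = len(m)
    return all(
        m[i][j] == m[n - 1 - i][n - 1 - j]
        for i in range(n)
        for j in range(n)
    )


centro = [[1, 2, 3],
          [4, 5, 4],
          [3, 2, 1]]

not_centro = [[1, 2],
              [3, 4]]
```

Reversing the order of both the rows and the columns of `centro` gives the same matrix back, which is an equivalent way to state the condition.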

In linear algebra, eigendecomposition is the factorization of a matrix into a canonical form, whereby the matrix is represented in terms of its eigenvalues and eigenvectors. Only diagonalizable matrices can be factorized in this way. When the matrix being factorized is a normal or real symmetric matrix, the decomposition is called "spectral decomposition", derived from the spectral theorem.
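
For a real symmetric matrix the eigenvectors can be chosen orthonormal, so the factorization takes the form A = Q Λ Qᵀ. The sketch below rebuilds a sample 2 × 2 matrix from eigenvalues and eigenvectors worked out by hand; the matrix and all helper names are assumptions chosen for this example.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*m)]


A = [[2.0, 1.0],
     [1.0, 2.0]]

# Columns of Q are the unit eigenvectors (1, 1)/sqrt(2) and (1, -1)/sqrt(2).
s = 1 / math.sqrt(2)
Q = [[s, s],
     [s, -s]]

# Diagonal matrix of the matching eigenvalues, in the same order as Q's columns.
Lambda = [[3.0, 0.0],
          [0.0, 1.0]]

reconstructed = matmul(matmul(Q, Lambda), transpose(Q))
```

Up to floating-point rounding, `reconstructed` agrees with `A` entry by entry.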