The parallelization of graph problems faces significant challenges: data-driven computations, unstructured problems, poor locality, and a high data-access-to-computation ratio. [8] [9] The graph representation used for parallel architectures plays a significant role in facing those challenges. Poorly chosen representations may unnecessarily drive up the communication cost of the algorithm, which decreases its scalability. In the following, shared and distributed memory architectures are considered.

Distributed memory

In the distributed memory model, the usual approach is to partition the vertex set $V$ of the graph into $p$ sets $V_0, \dots, V_{p-1}$. Here, $p$ is the number of available processing elements (PEs). The vertex set partitions are then distributed to the PEs with matching index, together with the corresponding edges. Every PE has its own subgraph representation, where edges with an endpoint in another partition require special attention. For standard communication interfaces like MPI, the ID of the PE owning the other endpoint has to be identifiable. During computation in a distributed graph algorithm, passing information along these edges implies communication. [10]
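The owner lookup and the special handling of edges that cross partitions can be illustrated with a minimal sketch. It assumes a contiguous block partition of `n` vertices numbered `0..n-1` over `p` PEs; the function names `block_owner` and `local_subgraph` are hypothetical, and no actual message passing is performed.

```python
def block_owner(v, n, p):
    """Return the index of the PE owning vertex v (assumed contiguous blocks)."""
    block = -(-n // p)  # ceil(n / p): vertices per PE; trailing PEs may hold fewer
    return v // block


def local_subgraph(edges, n, p, rank):
    """Split the edges whose source vertex is owned by PE `rank`.

    Returns (local, cut): `local` holds edges with both endpoints on this PE,
    `cut` holds (u, v, owner_of_v) for edges that leave the partition.
    """
    local, cut = [], []
    for u, v in edges:
        if block_owner(u, n, p) != rank:
            continue  # this edge is stored on another PE
        dest = block_owner(v, n, p)
        if dest == rank:
            local.append((u, v))
        else:
            cut.append((u, v, dest))  # keep the owner ID for later communication
    return local, cut


# Illustrative directed graph on 4 vertices, split over 2 PEs.
edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
local, cut = local_subgraph(edges, n=4, p=2, rank=0)
```

Storing the owner ID next to each cut edge is one possible design; computing it on demand from the vertex ID, as `block_owner` does, works equally well when the partition is a simple block layout.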

Partitioning the graph needs to be done carefully: there is a trade-off between low communication and evenly sized partitions. [11] However, graph partitioning is an NP-hard problem, so computing an optimal partition is generally not feasible. Instead, the following heuristics are used.

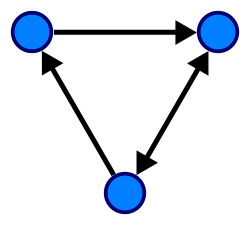

1D partitioning: Every processor gets $n/p$ vertices and the corresponding outgoing edges, where $n$ is the number of vertices. This can be understood as a row-wise or column-wise decomposition of the adjacency matrix. For algorithms operating on this representation, this requires an All-to-All communication step as well as $\mathcal{O}(m)$ message buffer sizes, where $m$ is the number of edges, as each PE potentially has outgoing edges to every other PE. [12]
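To see why 1D partitioning leads to an All-to-All exchange, the following sketch groups the outgoing edges of one PE by destination PE. It assumes a row-wise block decomposition of a dense 0/1 adjacency matrix given as a list of lists; `outgoing_buffers` is a hypothetical helper, and the buffers are plain Python lists rather than real MPI send buffers.

```python
def outgoing_buffers(adj, p, rank):
    """Group the outgoing edges of PE `rank` by the PE owning the target vertex.

    adj is an n x n adjacency matrix; PE `rank` owns a contiguous block of rows.
    Returns a list of p buffers, one per potential destination PE.
    """
    n = len(adj)
    block = -(-n // p)  # ceil(n / p) rows per PE
    buffers = [[] for _ in range(p)]
    first, last = rank * block, min((rank + 1) * block, n)
    for u in range(first, last):
        for v, has_edge in enumerate(adj[u]):
            if has_edge:
                buffers[v // block].append((u, v))
    return buffers


# Illustrative 4-vertex directed graph, split row-wise over 2 PEs.
adj = [
    [0, 1, 1, 0],
    [0, 0, 1, 1],
    [1, 0, 0, 1],
    [0, 1, 0, 0],
]
buffers_pe0 = outgoing_buffers(adj, p=2, rank=0)
```

Because any row may contain an entry in any column block, every one of the `p` buffers can be non-empty, which is what forces each PE to be prepared to talk to all others.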

2D partitioning: Every processor gets a submatrix of the adjacency matrix. Assume the processors are arranged in a rectangle $p = p_r \times p_c$, where $p_r$ and $p_c$ are the number of processing elements in each row and column, respectively. Then each processor gets a submatrix of the adjacency matrix of dimension $(n/p_r) \times (n/p_c)$. This can be visualized as a checkerboard pattern in a matrix. [12] Therefore, each processing unit can only have outgoing edges to PEs in the same row and column. This bounds the number of communication partners for each PE to $p_r + p_c - 1$ out of $p = p_r \times p_c$ possible ones.
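The checkerboard layout and the resulting bound on communication partners can be checked with a small sketch. It assumes the adjacency matrix of an `n`-vertex graph is cut into `p_r` block rows and `p_c` block columns, with PEs addressed by (row, column) grid coordinates; this indexing convention and the names `owner_2d` and `partners_2d` are assumptions made for illustration.

```python
def owner_2d(i, j, n, p_r, p_c):
    """Return the grid coordinates of the PE holding matrix entry (i, j)."""
    row_block = -(-n // p_r)  # ceil(n / p_r) matrix rows per block
    col_block = -(-n // p_c)  # ceil(n / p_c) matrix columns per block
    return (i // row_block, j // col_block)


def partners_2d(pe, p_r, p_c):
    """Return all PEs sharing a grid row or grid column with `pe`, itself included."""
    r, c = pe
    same_row = {(r, k) for k in range(p_c)}
    same_col = {(k, c) for k in range(p_r)}
    return same_row | same_col


# Illustrative setup: 8 vertices on a 2 x 4 grid of PEs.
pe = owner_2d(5, 3, n=8, p_r=2, p_c=4)
partners = partners_2d(pe, p_r=2, p_c=4)
```

The union counts the PE itself once, so its size is the sum of the two grid dimensions minus one, compared with the product of the two dimensions for the whole grid.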