In mathematics, specifically in differential equations, an equilibrium point is a constant solution to a differential equation.

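The point $\tilde{\mathbf{x}} \in \mathbb{R}^n$ is an equilibrium point for the differential equation

$$\frac{d\mathbf{x}}{dt} = \mathbf{f}(t, \mathbf{x})$$

if $\mathbf{f}(t, \tilde{\mathbf{x}}) = \mathbf{0}$ for all $t$.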
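As a minimal sketch of this definition, the check below tests whether a candidate point makes the right-hand side of a differential equation vanish at a set of sample times. The logistic equation, its parameters `r` and `K`, and the helper name `is_equilibrium` are illustrative assumptions. Sampling finitely many times can only suggest, not prove, that the condition holds for all times.

```python
def f(t, x, r=1.5, K=10.0):
    """Right-hand side of the logistic equation dx/dt = r*x*(1 - x/K).

    The equation and its parameters are illustrative assumptions.
    """
    return r * x * (1.0 - x / K)


def is_equilibrium(rhs, x_tilde, times, tol=1e-12):
    """True if rhs(t, x_tilde) is zero (up to tol) at every sampled time t."""
    return all(abs(rhs(t, x_tilde)) <= tol for t in times)


times = [0.1 * k for k in range(100)]
print(is_equilibrium(f, 0.0, times))   # True: x = 0 is a constant solution
print(is_equilibrium(f, 10.0, times))  # True: x = K is a constant solution
print(is_equilibrium(f, 5.0, times))   # False: the right-hand side is 3.75 here
```

A trajectory started exactly at `0.0` or `10.0` stays there, which is what "constant solution" means in practice.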

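Similarly, the point $\tilde{\mathbf{x}} \in \mathbb{R}^n$ is an equilibrium point (or fixed point) for the difference equation

$$\mathbf{x}_{k+1} = \mathbf{f}(k, \mathbf{x}_k)$$

if $\mathbf{f}(k, \tilde{\mathbf{x}}) = \tilde{\mathbf{x}}$ for $k = 0, 1, 2, \ldots$.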
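The fixed-point condition for a difference equation can be checked the same way. The sketch below assumes the logistic map with `r = 2.0` as an example; the map, the value of `r`, and the helper name `is_fixed_point` are illustrative choices.

```python
def logistic_map(k, x, r=2.0):
    """One step of x_{k+1} = r * x_k * (1 - x_k).

    The step index k is unused because this example map is autonomous.
    """
    return r * x * (1.0 - x)


def is_fixed_point(step, x_tilde, indices, tol=1e-12):
    """True if step(k, x_tilde) equals x_tilde (up to tol) for each sampled k."""
    return all(abs(step(k, x_tilde) - x_tilde) <= tol for k in indices)


indices = range(50)
print(is_fixed_point(logistic_map, 0.0, indices))   # True
print(is_fixed_point(logistic_map, 0.5, indices))   # True: 1 - 1/r for r = 2
print(is_fixed_point(logistic_map, 0.25, indices))  # False: maps to 0.375
```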

Equilibria can be classified by looking at the signs of the real parts of the eigenvalues of the linearization of the equations about the equilibria. That is, evaluating the Jacobian matrix at each equilibrium point of the system and finding its eigenvalues allows the equilibria to be categorized. The behavior of the system in the neighborhood of each equilibrium point can then be determined qualitatively (or, in some instances, even quantitatively) by finding the eigenvector(s) associated with each eigenvalue.

An equilibrium point is hyperbolic if none of the eigenvalues has zero real part. If all eigenvalues have negative real parts, the point is stable. If at least one eigenvalue has a positive real part, the point is unstable. If at least one eigenvalue has a negative real part and at least one has a positive real part, the equilibrium is a saddle point and it is unstable. If all the eigenvalues are real and have the same sign, the point is called a node.
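The classification rules above can be sketched in code for the planar case. This is a minimal illustration that assumes a 2×2 Jacobian already evaluated at the equilibrium. The function names `eigenvalues_2x2` and `classify` and the tolerance `tol` used to decide when a real part counts as zero are assumptions of this sketch.

```python
import cmath


def eigenvalues_2x2(a, b, c, d):
    """Eigenvalues of [[a, b], [c, d]] from the characteristic polynomial."""
    trace = a + d
    det = a * d - b * c
    disc = cmath.sqrt(trace * trace - 4.0 * det)
    return (trace + disc) / 2.0, (trace - disc) / 2.0


def classify(eigs, tol=1e-12):
    """Label an equilibrium from the eigenvalues of its linearization."""
    reals = [ev.real for ev in eigs]
    if any(abs(re) <= tol for re in reals):
        return "non-hyperbolic"          # some eigenvalue has zero real part
    if all(re < 0 for re in reals):
        kind = "stable"
    elif all(re > 0 for re in reals):
        kind = "unstable"
    else:
        return "saddle (unstable)"       # real parts of both signs
    # All eigenvalues real with the same sign: a node.
    if all(abs(ev.imag) <= tol for ev in eigs):
        return kind + " node"
    return kind


print(classify(eigenvalues_2x2(-1.0, 0.0, 0.0, -2.0)))  # stable node
print(classify(eigenvalues_2x2(0.0, 1.0, 1.0, 0.0)))    # saddle (unstable)
print(classify(eigenvalues_2x2(0.0, 1.0, -1.0, -0.5)))  # stable
print(classify(eigenvalues_2x2(0.0, 1.0, -1.0, 0.0)))   # non-hyperbolic
```

For a non-hyperbolic point the linearization alone does not settle stability, so the sketch reports that case rather than guessing.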

In vector calculus, the Jacobian matrix of a vector-valued function of several variables is the matrix of all its first-order partial derivatives. When this matrix is square, that is, when the function takes the same number of variables as input as the number of vector components of its output, its determinant is referred to as the Jacobian determinant. Both the matrix and the determinant are often referred to simply as the Jacobian in the literature.
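When the partial derivatives are not available in closed form, the Jacobian matrix can be approximated numerically. The sketch below uses central differences. The helper name `jacobian`, the step size `h`, and the example function `g` are assumptions chosen for illustration, and the approximation is only as accurate as the step size allows.

```python
import math


def jacobian(func, x, h=1e-6):
    """Approximate the Jacobian of func at x by central differences.

    func maps a list of n floats to a list of m floats. The result is an
    m-by-n nested list whose entry [i][j] approximates d func_i / d x_j.
    """
    n = len(x)
    m = len(func(x))
    J = [[0.0] * n for _ in range(m)]
    for j in range(n):
        x_plus = list(x)
        x_minus = list(x)
        x_plus[j] += h
        x_minus[j] -= h
        f_plus, f_minus = func(x_plus), func(x_minus)
        for i in range(m):
            J[i][j] = (f_plus[i] - f_minus[i]) / (2.0 * h)
    return J


def g(v):
    """Example map (x, y) -> (x^2 * y, 5x + sin y)."""
    x, y = v
    return [x * x * y, 5.0 * x + math.sin(y)]


J = jacobian(g, [1.0, 2.0])
# Analytic Jacobian at (1, 2): [[2xy, x^2], [5, cos y]] = [[4, 1], [5, cos 2]]
jacobian_determinant = J[0][0] * J[1][1] - J[0][1] * J[1][0]
print(J)
print(jacobian_determinant)  # close to 4*cos(2) - 5
```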

In mathematics, an eigenfunction of a linear operator $D$ defined on some function space is any non-zero function $f$ in that space that, when acted upon by $D$, is only multiplied by some scaling factor called an eigenvalue. As an equation, this condition can be written as $Df = \lambda f$ for some scalar eigenvalue $\lambda$.

In control engineering, model-based fault detection, and system identification, a state-space representation is a mathematical model of a physical system specified as a set of inputs, outputs, and variables related by first-order differential equations or difference equations. Such variables, called state variables, evolve over time in a way that depends on the values they have at any given instant and on the externally imposed values of input variables. Output variables' values depend on the values of the state variables.

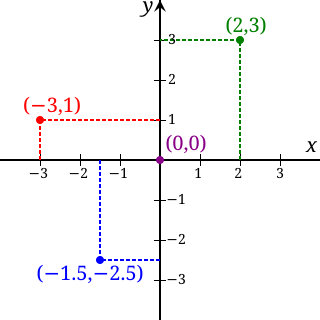

In mathematics, the real coordinate space of dimension $n$, denoted $\mathbf{R}^n$ or $\mathbb{R}^n$, is the set of the $n$-tuples of real numbers, that is, the set of all sequences of $n$ real numbers. Special cases are called the real line $\mathbb{R}^1$ and the real coordinate plane $\mathbb{R}^2$. With component-wise addition and scalar multiplication, it is a real vector space, and its elements are called coordinate vectors.

In mathematics, a stiff equation is a differential equation for which certain numerical methods for solving the equation are numerically unstable, unless the step size is taken to be extremely small. It has proven difficult to formulate a precise definition of stiffness, but the main idea is that the equation includes some terms that can lead to rapid variation in the solution.
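The step-size restriction can be seen on the scalar test equation $y' = \lambda y$ with a large negative $\lambda$. The sketch below compares the explicit (forward) Euler method with the implicit (backward) Euler method. The choice $\lambda = -50$, the step sizes, and the function names are assumptions for illustration; a single scalar equation only stands in for the systems with widely separated time scales where stiffness matters in practice.

```python
def forward_euler(lam, y0, h, n):
    """Explicit Euler for y' = lam * y: y_{k+1} = (1 + h*lam) * y_k."""
    y = y0
    for _ in range(n):
        y = y + h * lam * y
    return y


def backward_euler(lam, y0, h, n):
    """Implicit Euler for y' = lam * y: y_{k+1} = y_k / (1 - h*lam)."""
    y = y0
    for _ in range(n):
        y = y / (1.0 - h * lam)
    return y


lam = -50.0  # the exact solution exp(lam * t) decays rapidly to zero

# Explicit Euler is stable here only if |1 + h*lam| < 1, i.e. h < 0.04.
print(forward_euler(lam, 1.0, 0.05, 40))   # factor -1.5 per step: blows up
print(forward_euler(lam, 1.0, 0.01, 40))   # factor 0.5 per step: decays
print(backward_euler(lam, 1.0, 0.05, 40))  # decays even with the larger step
```

The implicit method tolerates the larger step because its amplification factor $1/(1 - h\lambda)$ has magnitude below one for every $h > 0$ when $\lambda < 0$.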

In applied mathematics, in particular the context of nonlinear system analysis, a phase plane is a visual display of certain characteristics of certain kinds of differential equations; a coordinate plane with axes being the values of the two state variables, say (x, y), or (q, p) etc. (any pair of variables). It is a two-dimensional case of the general n-dimensional phase space.

In linear algebra, an eigenvector or characteristic vector of a linear transformation is a nonzero vector that changes at most by a scalar factor when that linear transformation is applied to it. The corresponding eigenvalue, often denoted by $\lambda$, is the factor by which the eigenvector is scaled.

Bifurcation theory is the mathematical study of changes in the qualitative or topological structure of a given family of curves, such as the integral curves of a family of vector fields, and the solutions of a family of differential equations. Most commonly applied to the mathematical study of dynamical systems, a bifurcation occurs when a small smooth change made to the parameter values of a system causes a sudden 'qualitative' or topological change in its behavior. Bifurcations occur in both continuous systems and discrete systems.

Floquet theory is a branch of the theory of ordinary differential equations relating to the class of solutions to periodic linear differential equations of the form $\dot{x} = A(t)\,x$, where $A(t)$ is periodic in $t$.

In mathematics, in the theory of differential equations and dynamical systems, a particular stationary or quasistationary solution to a nonlinear system is called linearly unstable if the linearization of the equation at this solution has the form $\frac{dr}{dt} = Ar$, where $r$ is the perturbation to the steady state and $A$ is a linear operator whose spectrum contains eigenvalues with positive real part. If all the eigenvalues have negative real part, then the solution is called linearly stable. Other names for linear stability include exponential stability or stability in terms of first approximation. If there exists an eigenvalue with zero real part, then the question of stability cannot be settled on the basis of the first approximation, and we approach the so-called "centre and focus problem".

In the mathematics of evolving systems, the concept of a center manifold was originally developed to determine stability of degenerate equilibria. Subsequently, the concept of center manifolds was realised to be fundamental to mathematical modelling.

Linear dynamical systems are dynamical systems whose evolution functions are linear. While dynamical systems, in general, do not have closed-form solutions, linear dynamical systems can be solved exactly, and they have a rich set of mathematical properties. Linear systems can also be used to understand the qualitative behavior of general dynamical systems, by calculating the equilibrium points of the system and approximating it as a linear system around each such point.

In mathematics, preconditioning is the application of a transformation, called the preconditioner, that conditions a given problem into a form that is more suitable for numerical solving methods. Preconditioning is typically related to reducing a condition number of the problem. The preconditioned problem is then usually solved by an iterative method.

In mathematics, stability theory addresses the stability of solutions of differential equations and of trajectories of dynamical systems under small perturbations of initial conditions. The heat equation, for example, is a stable partial differential equation because small perturbations of initial data lead to small variations in temperature at a later time as a result of the maximum principle. In partial differential equations one may measure the distances between functions using Lp norms or the sup norm, while in differential geometry one may measure the distance between spaces using the Gromov–Hausdorff distance.

Numerical continuation is a method of computing approximate solutions of a system of parameterized nonlinear equations, $F(\mathbf{u}, \lambda) = 0$.

In mathematics, in the study of dynamical systems, the Hartman–Grobman theorem or linearisation theorem is a theorem about the local behaviour of dynamical systems in the neighbourhood of a hyperbolic equilibrium point. It asserts that linearisation—a natural simplification of the system—is effective in predicting qualitative patterns of behaviour. The theorem owes its name to Philip Hartman and David M. Grobman.

In mathematical biology, the community matrix is the linearization of a generalized Lotka–Volterra equation at an equilibrium point. The eigenvalues of the community matrix determine the stability of the equilibrium point.
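As a hedged illustration, consider the generalized Lotka–Volterra form $\dot{x}_i = x_i \left(r_i + \sum_j A_{ij} x_j\right)$. At an interior equilibrium $x^*$ (all $x_i^* > 0$) the bracket vanishes, so the Jacobian reduces to $J_{ij} = x_i^* A_{ij}$. The sketch below works through a two-species competition example; the growth rates `r`, interaction matrix `A`, and all function names are assumptions chosen for illustration.

```python
import cmath


def interior_equilibrium_2(r, A):
    """Solve r + A x = 0 for two species by Cramer's rule."""
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    x1 = (-r[0] * A[1][1] + r[1] * A[0][1]) / det
    x2 = (-A[0][0] * r[1] + A[1][0] * r[0]) / det
    return [x1, x2]


def community_matrix(x_star, A):
    """Jacobian at an interior equilibrium: J[i][j] = x_star[i] * A[i][j]."""
    n = len(x_star)
    return [[x_star[i] * A[i][j] for j in range(n)] for i in range(n)]


def eigenvalues_2x2(M):
    """Eigenvalues of a 2x2 matrix from its trace and determinant."""
    trace = M[0][0] + M[1][1]
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    disc = cmath.sqrt(trace * trace - 4.0 * det)
    return (trace + disc) / 2.0, (trace - disc) / 2.0


r = [1.0, 1.0]                    # intrinsic growth rates (assumed values)
A = [[-1.0, -0.5], [-0.5, -1.0]]  # competition coefficients (assumed values)

x_star = interior_equilibrium_2(r, A)   # both species at 2/3
J = community_matrix(x_star, A)
eigs = eigenvalues_2x2(J)
stable = all(ev.real < 0 for ev in eigs)
print(x_star, eigs, stable)
```

Both eigenvalues have negative real part in this example, so the coexistence equilibrium is stable by the criterion stated above.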

In mathematics, the slow manifold of an equilibrium point of a dynamical system occurs as the most common example of a center manifold. One of the main methods of simplifying dynamical systems is to reduce the dimension of the system to that of the slow manifold; center manifold theory rigorously justifies the modelling. For example, some global and regional models of the atmosphere or oceans resolve the so-called quasi-geostrophic flow dynamics on the slow manifold of the atmosphere/oceanic dynamics, which is thus crucial to forecasting with a climate model.

In mathematics, an ordinary differential equation (ODE) is a differential equation (DE) dependent on only a single independent variable. As with other DEs, its unknowns consist of one or more functions, and the equation involves the derivatives of those functions. The term "ordinary" is used in contrast with partial differential equations, which may be with respect to more than one independent variable.

In dynamical systems theory, the Olech theorem establishes sufficient conditions for global asymptotic stability of a two-equation system of non-linear differential equations. The result was established by Czesław Olech in 1963, based on joint work with Philip Hartman.