Related Research Articles

Computational linguistics is an interdisciplinary field concerned with the computational modelling of natural language, as well as the study of appropriate computational approaches to linguistic questions. In general, computational linguistics draws upon linguistics, computer science, artificial intelligence, mathematics, logic, philosophy, cognitive science, cognitive psychology, psycholinguistics, anthropology and neuroscience, among others.

Machine translation, sometimes referred to by the abbreviation MT, is a sub-field of computational linguistics that investigates the use of software to translate text or speech from one language to another.

Corpus linguistics is the study of a language as that language is expressed in its text corpus, its body of "real world" text. Corpus linguistics proposes that a reliable analysis of a language is more feasible with corpora collected in the field—the natural context ("realia") of that language—with minimal experimental interference.

Word-sense disambiguation (WSD) is the process of identifying which sense of a word is meant in a sentence or other segment of context. In human language processing and cognition, it is usually subconscious/automatic but can often come to conscious attention when ambiguity impairs clarity of communication, given the pervasive polysemy in natural language. In computational linguistics, it is an open problem that affects other computer-related writing, such as discourse, improving relevance of search engines, anaphora resolution, coherence, and inference.

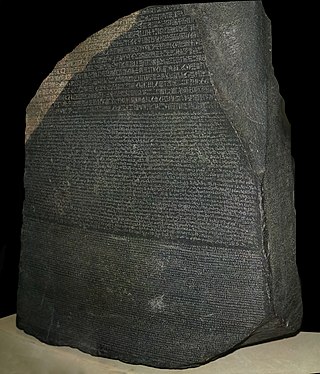

A parallel text is a text placed alongside its translation or translations. Parallel text alignment is the identification of the corresponding sentences in both halves of the parallel text. The Loeb Classical Library and the Clay Sanskrit Library are two examples of dual-language series of texts. Reference Bibles may contain the original languages and a translation, or several translations by themselves, for ease of comparison and study; Origen's Hexapla placed six versions of the Old Testament side by side. A famous example is the Rosetta Stone, whose discovery allowed the Ancient Egyptian language to begin being deciphered.

Statistical machine translation (SMT) is a machine translation paradigm where translations are generated on the basis of statistical models whose parameters are derived from the analysis of bilingual text corpora. The statistical approach contrasts with the rule-based approaches to machine translation as well as with example-based machine translation, and has more recently been superseded by neural machine translation in many applications.

Linguistic categories include

Various methods for the evaluation for machine translation have been employed. This article focuses on the evaluation of the output of machine translation, rather than on performance or usability evaluation.

Moses is a free software, statistical machine translation engine that can be used to train statistical models of text translation from a source language to a target language, developed by the University of Edinburgh. Moses then allows new source-language text to be decoded using these models to produce automatic translations in the target language. Training requires a parallel corpus of passages in the two languages, typically manually translated sentence pairs. Moses is released under the LGPL licence and available both as source code and binaries for Windows and Linux. Its development is primarily supported by the EuroMatrix project, with funding by the European Commission.

Interactive machine translation (IMT), is a specific sub-field of computer-aided translation. Under this translation paradigm, the computer software that assists the human translator attempts to predict the text the user is going to input by taking into account all the information it has available. Whenever such prediction is wrong and the user provides feedback to the system, a new prediction is performed considering the new information available. Such process is repeated until the translation provided matches the user's expectations.

Philipp Koehn is a computer scientist and researcher in the field of machine translation. His primary research interest is statistical machine translation and he is one of the inventors of a method called phrase based machine translation. This is a sub-field of statistical translation methods that employs sequences of words as the basis of translation, expanding the previous word based approaches. A 2003 paper which he authored with Franz Josef Och and Daniel Marcu called Statistical phrase-based translation has attracted wide attention in Machine translation community and has been cited over a thousand times. Phrase based methods are widely used in machine translation applications in industry.

The LRE Map is a freely accessible large database on resources dedicated to Natural language processing. The original feature of LRE Map is that the records are collected during the submission of different major Natural language processing conferences. The records are then cleaned and gathered into a global database called "LRE Map".

Deep Linguistic Processing with HPSG - INitiative (DELPH-IN) is a collaboration where computational linguists worldwide develop natural language processing tools for deep linguistic processing of human language. The goal of DELPH-IN is to combine linguistic and statistical processing methods in order to computationally understand the meaning of texts and utterances.

The Europarl Corpus is a corpus that consists of the proceedings of the European Parliament from 1996 to 2012. In its first release in 2001, it covered eleven official languages of the European Union. With the political expansion of the EU the official languages of the ten new member states have been added to the corpus data. The latest release (2012) comprised up to 60 million words per language with the newly added languages being slightly underrepresented as data for them is only available from 2007 onwards. This latest version includes 21 European languages: Romanic, Germanic, Slavic, Finno-Ugric, Baltic, and Greek.

The following outline is provided as an overview of and topical guide to natural-language processing:

LEPOR is an automatic language independent machine translation evaluation metric with tunable parameters and reinforced factors.

MateCat is a web-based computer-assisted translation (CAT) tool. MateCat is released as open source software under the Lesser General Public License (LGPL) from the Free Software Foundation.

The EuroMatrixPlus is a project that ran from March 2009 to February 2012. EuroMatrixPlus succeeded a project called EuroMatrix and continued in further development and improvement of machine translation (MT) systems for languages of the European Union (EU).

Walther von Hahn is a German linguist and computer scientist. From 1977 to 2007, von Hahn taught Computer Science and Linguistics at Universität Hamburg.