Computational linguistics is an interdisciplinary field concerned with the computational modelling of natural language, as well as the study of appropriate computational approaches to linguistic questions. In general, computational linguistics draws upon linguistics, computer science, artificial intelligence, mathematics, logic, philosophy, cognitive science, cognitive psychology, psycholinguistics, anthropology and neuroscience, among others.

Machine translation, sometimes referred to by the abbreviation MT, is a sub-field of computational linguistics that investigates the use of software to translate text or speech from one language to another.

Corpus linguistics is the study of a language as that language is expressed in its text corpus, its body of "real world" text. Corpus linguistics proposes that a reliable analysis of a language is more feasible with corpora collected in the field—the natural context ("realia") of that language—with minimal experimental interference.

In linguistics, a corpus or text corpus is a language resource consisting of a large and structured set of texts. In corpus linguistics, corpora are used for statistical analysis and hypothesis testing, such as checking occurrences or validating linguistic rules within a specific language territory.
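
As a rough illustration of the kind of occurrence checking described above, the following Python sketch counts word frequencies in a tiny corpus. The three sentences, the regular-expression tokenizer and the function names are all assumptions made for this example; real corpora are far larger and are usually tokenized with dedicated tools.

```python
import re
from collections import Counter

# Hypothetical toy corpus; a real corpus would hold millions of words.
TOY_CORPUS = [
    "The cat sat on the mat.",
    "The dog chased the cat.",
    "A bird sang on the fence.",
]


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def word_frequencies(texts):
    """Count how often each token occurs across all texts."""
    counts = Counter()
    for text in texts:
        counts.update(tokenize(text))
    return counts


def relative_frequency(counts, word):
    """Return the share of all tokens accounted for by `word`."""
    total = sum(counts.values())
    return counts[word] / total if total else 0.0


frequencies = word_frequencies(TOY_CORPUS)
```

Calling `frequencies.most_common(3)` lists the most frequent tokens, and `relative_frequency(frequencies, "the")` gives the proportion of the corpus made up of a single word, which is the sort of figure a simple hypothesis test would compare across corpora.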

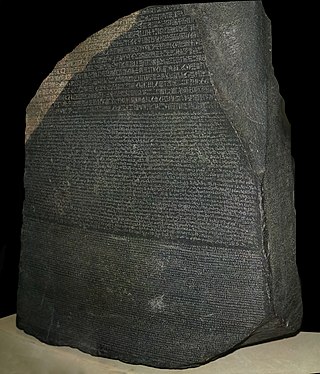

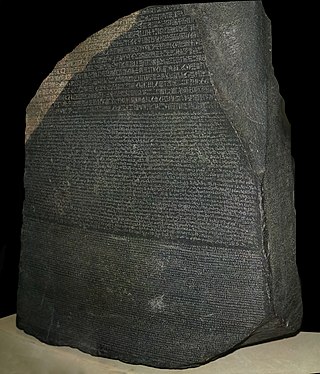

A parallel text is a text placed alongside its translation or translations. Parallel text alignment is the identification of the corresponding sentences in both halves of the parallel text. The Loeb Classical Library and the Clay Sanskrit Library are two examples of dual-language series of texts. Reference Bibles may contain the original languages and a translation, or several translations by themselves, for ease of comparison and study; Origen's Hexapla placed six versions of the Old Testament side by side. A famous example is the Rosetta Stone, whose discovery allowed the Ancient Egyptian language to begin being deciphered.

Machine translation can use a method based on dictionary entries, in which words are translated as a dictionary does: word by word, usually with little regard for how their meanings relate to one another. Dictionary lookups may be done with or without morphological analysis or lemmatisation. While this approach to machine translation is probably the least sophisticated, dictionary-based machine translation is ideally suited to the translation of long lists of phrases at the subsentential level, e.g. inventories or simple catalogs of products and services.
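
A minimal sketch of word-by-word dictionary lookup, with and without a lemmatisation step, might look like the following. The English-to-Spanish glossary, the deliberately naive plural-stripping lemmatiser and the function names are hypothetical and chosen only for illustration.

```python
# Hypothetical English-to-Spanish glossary, keyed by lemma.
GLOSSARY = {
    "red": "rojo",
    "chair": "silla",
    "table": "mesa",
    "wooden": "de madera",
}


def lemmatise(word):
    """Very naive lemmatiser: lowercase and strip a plural "s" if the stem is known."""
    word = word.lower()
    if word.endswith("s") and word[:-1] in GLOSSARY:
        return word[:-1]
    return word


def translate_word_by_word(phrase, use_lemmas=True):
    """Translate each word independently; unknown words are passed through unchanged."""
    translated = []
    for word in phrase.split():
        key = lemmatise(word) if use_lemmas else word.lower()
        translated.append(GLOSSARY.get(key, word))
    return " ".join(translated)
```

With lemmatisation, `translate_word_by_word("red chairs")` yields `"rojo silla"`: every word is found, but number, agreement and word order are lost, which illustrates why the approach suits list-like material better than running text. Without lemmatisation the plural form is not found at all and the result is `"rojo chairs"`.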

The American National Corpus (ANC) is a text corpus of American English containing 22 million words of written and spoken data produced since 1990. The ANC currently covers a range of genres, including emerging genres such as email, tweets, and web data that are not included in earlier corpora such as the British National Corpus. It is annotated for part of speech and lemma, shallow parse, and named entities.

In linguistics, a treebank is a parsed text corpus that annotates syntactic or semantic sentence structure. The construction of parsed corpora in the early 1990s revolutionized computational linguistics, which benefitted from large-scale empirical data.

Statistical machine translation (SMT) is a machine translation paradigm where translations are generated on the basis of statistical models whose parameters are derived from the analysis of bilingual text corpora. The statistical approach contrasts with the rule-based approaches to machine translation as well as with example-based machine translation, and has more recently been superseded by neural machine translation in many applications.
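
To make the idea of parameters "derived from the analysis of bilingual text corpora" concrete, the sketch below estimates word translation probabilities with expectation-maximisation in the style of IBM Model 1. This particular model is not named in the text above and is used here only as one well-known example; the three-sentence English-German corpus and all function names are assumptions, and the NULL source word of the full model is omitted for brevity.

```python
from collections import defaultdict

# Hypothetical sentence-aligned toy corpus: (English words, German words).
PARALLEL = [
    ("the house".split(), "das Haus".split()),
    ("the book".split(), "das Buch".split()),
    ("a book".split(), "ein Buch".split()),
]


def train_word_translation(pairs, iterations=10):
    """Estimate t[(f, e)] = P(target word f | source word e) by EM."""
    tgt_vocab = {f for _, tgt in pairs for f in tgt}
    # Start from a uniform value for every co-occurring word pair.
    t = {(f, e): 1.0 / len(tgt_vocab)
         for src, tgt in pairs for f in tgt for e in src}
    for _ in range(iterations):
        count = defaultdict(float)  # expected count of f aligned to e
        total = defaultdict(float)  # expected count of e being aligned
        # E-step: distribute each target word over the source words.
        for src, tgt in pairs:
            for f in tgt:
                norm = sum(t[(f, e)] for e in src)
                for e in src:
                    delta = t[(f, e)] / norm
                    count[(f, e)] += delta
                    total[e] += delta
        # M-step: re-normalise expected counts into probabilities.
        for (f, e), c in count.items():
            t[(f, e)] = c / total[e]
    return t


def best_translation(t, source_word):
    """Return the target word with the highest probability for source_word."""
    candidates = {f: p for (f, e), p in t.items() if e == source_word}
    return max(candidates, key=candidates.get)


table = train_word_translation(PARALLEL)
```

After training, `best_translation(table, "house")` selects `"Haus"`, even though the corpus never states which word corresponds to which: the estimate emerges from co-occurrence statistics alone.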

Linguistic categories include

The Quranic Arabic Corpus is an annotated linguistic resource consisting of 77,430 words of Quranic Arabic. The project aims to provide morphological and syntactic annotations for researchers wanting to study the language of the Quran.

Tatoeba is a free collection of example sentences with translations geared towards foreign language learners. It is available in more than 400 languages. Its name comes from the Japanese phrase "tatoeba" (例えば), meaning "for example". It is written and maintained by a community of volunteers through a model of open collaboration. Individual contributors are known as Tatoebans. It is run by Association Tatoeba, a French non-profit organization funded through donations.

Philipp Koehn is a computer scientist and researcher in the field of machine translation. His primary research interest is statistical machine translation, and he is one of the inventors of phrase-based machine translation, a family of statistical translation methods that uses sequences of words as the basic unit of translation, extending the earlier word-based approaches. His 2003 paper with Franz Josef Och and Daniel Marcu, "Statistical Phrase-Based Translation", has attracted wide attention in the machine translation community and has been cited over a thousand times. Phrase-based methods are widely used in machine translation applications in industry.

Deep Linguistic Processing with HPSG - INitiative (DELPH-IN) is a collaboration where computational linguists worldwide develop natural language processing tools for deep linguistic processing of human language. The goal of DELPH-IN is to combine linguistic and statistical processing methods in order to computationally understand the meaning of texts and utterances.

The Europarl Corpus consists of the proceedings of the European Parliament from 1996 to 2012. Its first release, in 2001, covered eleven official languages of the European Union. Following the political expansion of the EU, the official languages of the ten new member states were added to the corpus data. The latest release (2012) comprises up to 60 million words per language; the newly added languages are slightly underrepresented, because data for them is available only from 2007 onwards. This latest version includes 21 European languages from the Romance, Germanic, Slavic, Finno-Ugric and Baltic groups, plus Greek.

The following outline is provided as an overview of and topical guide to natural-language processing:

A corpus manager is a tool for multilingual corpus analysis, which allows effective searching in corpora.

The EuroMatrix is a project that ran from September 2006 to February 2009. The project aimed to develop and improve machine translation (MT) systems between all official languages of the European Union (EU).

The TenTen Corpus Family (also called TenTen corpora) is a set of comparable web text corpora, i.e. collections of texts that have been crawled from the World Wide Web and processed to match the same standards. These corpora are made available through the Sketch Engine corpus manager. There are TenTen corpora for more than 35 languages. Their target size is 10 billion (10^10) words per language, which gave rise to the corpus family's name.