Related Research Articles

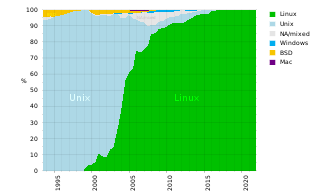

A supercomputer is a type of computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, supercomputers have existed which can perform over 10¹⁷ FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS). For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (10¹¹) to tens of teraFLOPS (10¹³). Since November 2017, all of the world's fastest 500 supercomputers run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers.
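The orders of magnitude quoted above can be checked with a little arithmetic. The following is a minimal sketch that only restates the figures from the paragraph; the variable and function names are illustrative and not taken from any source.

```python
# Unit prefixes expressed in FLOPS.
GIGA = 10**9
TERA = 10**12
PETA = 10**15

# Figures quoted in the paragraph above.
supercomputer_flops = 100 * PETA   # 10^17 FLOPS (100 petaFLOPS)
desktop_low_flops = 100 * GIGA     # 10^11 FLOPS (hundreds of gigaFLOPS)
desktop_high_flops = 10 * TERA     # 10^13 FLOPS (tens of teraFLOPS)


def speedup(fast: int, slow: int) -> int:
    """Return how many times faster `fast` is than `slow` (integer ratio)."""
    return fast // slow


ratio_vs_low = speedup(supercomputer_flops, desktop_low_flops)
ratio_vs_high = speedup(supercomputer_flops, desktop_high_flops)

print(f"vs. low-end desktop:  {ratio_vs_low:,}x")
print(f"vs. high-end desktop: {ratio_vs_high:,}x")
```

On these figures, a 100 PFLOPS machine is between ten thousand and one million times faster than a desktop computer in raw floating-point throughput.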

A Beowulf cluster is a computer cluster of what are normally identical, commodity-grade computers networked into a small local area network with libraries and programs installed which allow processing to be shared among them. The result is a high-performance parallel computing cluster from inexpensive personal computer hardware.

Blue Gene was an IBM project aimed at designing supercomputers that can reach operating speeds in the petaFLOPS (PFLOPS) range, with low power consumption.

PARAM is a series of Indian supercomputers designed and assembled by the Centre for Development of Advanced Computing (C-DAC) in Pune. PARAM means "supreme" in the Sanskrit language, whilst also creating an acronym for "PARAllel Machine". As of November 2022, the fastest machine in the series is the PARAM Siddhi AI, which ranks 63rd in the world, with an Rpeak of 5.267 petaflops.

Cell is a 64-bit multi-core microprocessor microarchitecture that combines a general-purpose PowerPC core of modest performance with streamlined coprocessing elements which greatly accelerate multimedia and vector processing applications, as well as many other forms of dedicated computation.

ASCI Red was the first computer built under the Accelerated Strategic Computing Initiative (ASCI), the supercomputing initiative of the United States government created to help maintain the United States nuclear arsenal after the 1992 moratorium on nuclear testing.

The Advanced Simulation and Computing Program (ASC) is a super-computing program run by the National Nuclear Security Administration, in order to simulate, test, and maintain the United States nuclear stockpile. The program was created in 1995 in order to support the Stockpile Stewardship Program. The goal of the initiative is to extend the lifetime of the current aging stockpile.

GPFS is high-performance clustered file system software developed by IBM. It can be deployed in shared-disk or shared-nothing distributed parallel modes, or a combination of these. It is used by many of the world's largest commercial companies, as well as some of the supercomputers on the Top 500 List. For example, it is the filesystem of Summit at Oak Ridge National Laboratory, which was the fastest supercomputer in the world in the November 2019 Top 500 List. Summit is a 200 petaflops system composed of more than 9,000 POWER9 processors and 27,000 NVIDIA Volta GPUs. The storage filesystem is called Alpine.

Patrick J. Miller is a computer scientist and high performance parallel applications developer with a Ph.D. in Computer Science from University of California, Davis, in run-time error detection and correction. Until recently he was with Lawrence Livermore National Laboratory.

The QCDOC is a supercomputer technology focusing on using relatively cheap low power processing elements to produce a massively parallel machine. The machine is custom-made to solve small but extremely demanding problems in the fields of quantum physics.

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software. The newest manifestation of cluster computing is cloud computing.

SiCortex was a supercomputer manufacturer founded in 2003 and headquartered in Clock Tower Place, Maynard, Massachusetts. On 27 May 2009, HPCwire reported that the company had shut down its operations, laid off most of its staff, and was seeking a buyer for its assets. The Register reported that Gerbsman Partners was hired to sell SiCortex's intellectual property. While SiCortex had some sales, selling at least 75 prototype supercomputers to several large customers, the company never produced an operating profit and ran out of venture capital. New funding could not be found.

Coates is a supercomputer installed at Purdue University on July 21, 2009. The high-performance computing cluster is operated by Information Technology at Purdue (ITaP), the university's central information technology organization. ITaP also operates clusters named Steele built in 2008, Rossmann built in 2010, and Hansen and Carter built in 2011. Coates was the largest campus supercomputer in the Big Ten outside a national center when built. It was the first native 10 Gigabit Ethernet (10GigE) cluster to be ranked in the TOP500 and placed 102nd on the June 2010 list.

Approaches to supercomputer architecture have taken dramatic turns since the earliest systems were introduced in the 1960s. Early supercomputer architectures pioneered by Seymour Cray relied on compact innovative designs and local parallelism to achieve superior computational peak performance. However, in time the demand for increased computational power ushered in the age of massively parallel systems.

A supercomputer operating system is an operating system intended for supercomputers. Since the end of the 20th century, supercomputer operating systems have undergone major transformations, as fundamental changes have occurred in supercomputer architecture. While early operating systems were custom tailored to each supercomputer to gain speed, the trend has been moving away from in-house operating systems and toward some form of Linux, which ran on all the supercomputers on the TOP500 list in November 2017. In 2021, the top 10 computers ran, for instance, Red Hat Enterprise Linux (RHEL) or some variant of it, or another Linux distribution such as Ubuntu.

Message passing is an inherent element of all computer clusters. All computer clusters, ranging from homemade Beowulfs to some of the fastest supercomputers in the world, rely on message passing to coordinate the activities of the many nodes they encompass. Message passing in computer clusters built with commodity servers and switches is used by virtually every internet service.
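The coordination pattern described above can be illustrated on a single machine. The following is a minimal sketch, assuming threads stand in for cluster nodes and in-memory queues stand in for the network; real clusters typically use a message-passing library such as MPI over an interconnect. The names `worker` and `distributed_sum` are hypothetical and chosen only for this illustration.

```python
import queue
import threading


def worker(rank, inbox, outbox):
    """Hypothetical node: receive one chunk of work, send back a partial sum."""
    chunk = inbox.get()                # blocking receive
    outbox.put((rank, sum(chunk)))     # send result, tagged with the sender's rank


def distributed_sum(values, num_workers=4):
    """Coordinator: scatter chunks to workers, then gather partial results."""
    inboxes = [queue.Queue() for _ in range(num_workers)]
    results = queue.Queue()

    threads = [
        threading.Thread(target=worker, args=(rank, inboxes[rank], results))
        for rank in range(num_workers)
    ]
    for t in threads:
        t.start()

    # Scatter: worker `rank` receives every num_workers-th element.
    for rank in range(num_workers):
        inboxes[rank].put(values[rank::num_workers])

    # Gather: collect exactly one message from each worker.
    partials = [results.get() for _ in range(num_workers)]

    for t in threads:
        t.join()
    return sum(partial for _, partial in partials)


total = distributed_sum(list(range(1, 101)))
print(total)  # 5050
```

The workers share no state and communicate only through explicit send and receive operations, which is the property that lets the same pattern scale from one machine to many nodes.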

SUPRENUM was a German research project to develop a parallel computer from 1985 through 1990. It was a major effort which was aimed at developing a national expertise in massively parallel processing both at hardware and at software level.

Isra Vision Parsytec AG is a company of Isra Vision, founded in 1985 as Parsytec in Aachen, Germany.

The high-performance supercomputing program in Pakistan started in the mid-to-late 1980s. Supercomputing is a recent area of computer science in which Pakistan has made progress, driven in part by the growth of the information technology age in the country. Development of the country's own supercomputer program started in the 1980s, when the deployment of Cray supercomputers was initially denied.

The Holland Computing Center, often abbreviated HCC, is the high-performance computing core for the University of Nebraska System. HCC has locations in both the June and Paul Schorr III Center for Computer Science & Engineering at the University of Nebraska-Lincoln and the Peter Kiewit Institute at the University of Nebraska Omaha. The center was named after Omaha businessman Richard Holland, who donated considerably to the university for the project.
